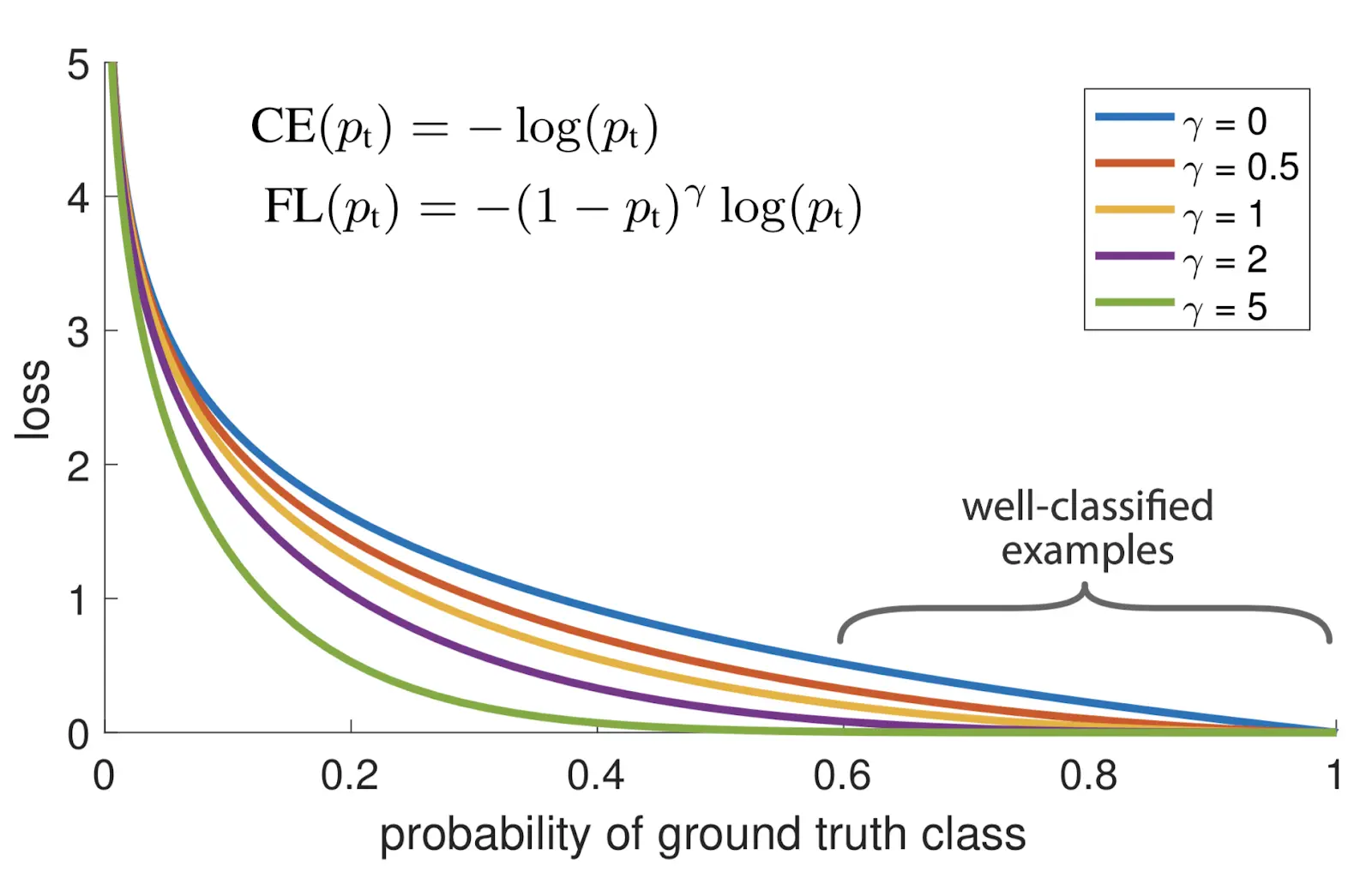

Fig: The focal loss down-weights easy examples by a factor of $(1 - p_t)^\gamma$ [1].

Range:

Use case: Modulates the cross-entropy loss by down-weighting well-classified (easy) examples, which puts more emphasis on hard examples such as hard negatives. A larger γ gives a stronger effect. Useful for imbalanced datasets; in the paper's dense object detection experiments it outperforms α-balanced CE loss.
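For reference, the paper defines the loss for binary classification as follows. Here $y \in \{\pm 1\}$ is the label and $p$ is the model's estimated probability for the class $y = 1$. The term $(1 - p_t)^\gamma$ is the modulating factor, and $\gamma \ge 0$ is the focusing parameter.

$$
p_t =
\begin{cases}
p & \text{if } y = 1 \\
1 - p & \text{otherwise}
\end{cases}
\qquad
\mathrm{CE}(p_t) = -\log(p_t)
\qquad
\mathrm{FL}(p_t) = -(1 - p_t)^{\gamma} \log(p_t)
$$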

(1) When an example is misclassified and $p_t$ is small, the modulating factor is near 1 and the loss is unaffected. As $p_t \to 1$, the factor goes to 0 and the loss for well-classified examples is down-weighted.
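This is a minimal pure-Python sketch of property (1). It assumes a scalar $p_t \in (0, 1]$ and uses the hypothetical helper names `modulating_factor` and `focal_loss`. It prints the factor $(1 - p_t)^\gamma$ alongside CE and FL for a hard example and for increasingly easy ones.

```python
import math

def modulating_factor(p_t: float, gamma: float) -> float:
    """(1 - p_t)^gamma, the weight focal loss applies to the CE term."""
    return (1.0 - p_t) ** gamma

def focal_loss(p_t: float, gamma: float) -> float:
    """FL(p_t) = -(1 - p_t)^gamma * log(p_t), for p_t in (0, 1]."""
    return -modulating_factor(p_t, gamma) * math.log(p_t)

gamma = 2.0
# Small p_t = misclassified (hard); p_t near 1 = well classified (easy).
for p_t in (0.1, 0.5, 0.9, 0.99):
    ce = -math.log(p_t)
    print(f"p_t={p_t:.2f}  factor={modulating_factor(p_t, gamma):.4f}  "
          f"CE={ce:.4f}  FL={focal_loss(p_t, gamma):.4f}")
```

With γ = 2, the factor is about 0.81 at $p_t = 0.1$ but 0.01 at $p_t = 0.9$. The easy example's loss is therefore 100× lower than its CE, which is the same illustration the paper gives.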

(2) The focusing parameter γ smoothly adjusts the rate at which easy examples are down-weighted. When γ = 0, focal loss is equivalent to cross-entropy; as γ increases, so does the effect of the modulating factor.
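This is a small sketch of property (2), again on scalar $p_t$ with hypothetical helper names. At γ = 0 the factor $(1 - p_t)^0$ is exactly 1, so FL equals CE. For a fixed easy example ($p_t = 0.8$, chosen arbitrarily), the ratio FL/CE shrinks as γ grows.

```python
import math

def cross_entropy(p_t: float) -> float:
    """CE(p_t) = -log(p_t)."""
    return -math.log(p_t)

def focal_loss(p_t: float, gamma: float) -> float:
    """FL(p_t) = -(1 - p_t)^gamma * log(p_t)."""
    return -((1.0 - p_t) ** gamma) * math.log(p_t)

# gamma = 0: (1 - p_t)^0 == 1, so focal loss reduces exactly to cross-entropy.
for p_t in (0.05, 0.3, 0.7, 0.95):
    assert focal_loss(p_t, 0.0) == cross_entropy(p_t)

# Increasing gamma shrinks the FL/CE ratio of an easy example (p_t = 0.8).
gammas = [0.0, 0.5, 1.0, 2.0, 5.0]
ratios = [focal_loss(0.8, g) / cross_entropy(0.8) for g in gammas]
for g, r in zip(gammas, ratios):
    print(f"gamma={g}: FL/CE = {r:.5f}")
```

The ratio is simply $(1 - p_t)^\gamma$, so for $p_t = 0.8$ it falls from 1 at γ = 0 toward 0 as γ grows.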

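The loss can also be (and in practice is) α-balanced, with $\alpha_t = \alpha$ for the positive class and $\alpha_t = 1 - \alpha$ otherwise:

$$
\mathrm{FL}(p_t) = -\alpha_t (1 - p_t)^{\gamma} \log(p_t)
$$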
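The following is a minimal pure-Python sketch of the α-balanced binary focal loss $-\alpha_t (1 - p_t)^\gamma \log(p_t)$, averaged over a batch. It makes several assumptions. Inputs are already probabilities (not logits), and labels are in {0, 1}. Probabilities are clipped by a small `eps` to avoid `log(0)`. The function name and the mean reduction are illustrative choices, not taken from any library.

```python
import math
from typing import Sequence

def alpha_balanced_focal_loss(
    probs: Sequence[float],
    labels: Sequence[int],
    alpha: float = 0.25,
    gamma: float = 2.0,
    eps: float = 1e-7,
) -> float:
    """Mean alpha-balanced binary focal loss (hypothetical helper).

    probs:  predicted probability of the positive class per example.
    labels: ground-truth labels in {0, 1}.
    """
    if not probs or len(probs) != len(labels):
        raise ValueError("probs and labels must be non-empty and equal length")
    total = 0.0
    for p, y in zip(probs, labels):
        p = min(max(p, eps), 1.0 - eps)             # clip to avoid log(0)
        p_t = p if y == 1 else 1.0 - p              # probability of the true class
        alpha_t = alpha if y == 1 else 1.0 - alpha  # class-balancing weight
        total += -alpha_t * (1.0 - p_t) ** gamma * math.log(p_t)
    return total / len(probs)

loss = alpha_balanced_focal_loss([0.9, 0.2, 0.6], [1, 0, 1])
print(f"mean focal loss: {loss:.6f}")
```

The defaults α = 0.25 and γ = 2 are the values the paper reports working best for its detector. Treat them as a starting point rather than universal settings. Setting `alpha=1.0, gamma=0.0` and using only positive labels recovers plain cross-entropy.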

References

Paper: Focal Loss for Dense Object Detection

With label smoothing:

GPT

Keras implementation: is it wrong?