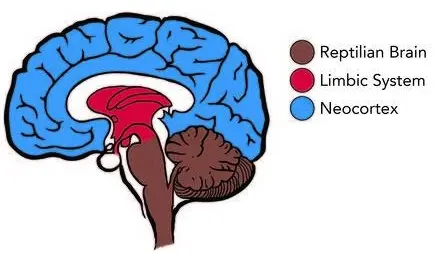

The brain has two quite different parts:

- The “old” part, the mammalian brain, which is concerned with low-level functions like motor control, breathing, emotions, etc.

- The “new” part, the neocortex, which is responsible for high-level functions like language, abstract thought, planning, and so on.

The mammalian brain has many different parts, each of which looks different and performs different tasks.

The neocortex, on the other hand, looks the same everywhere, even across species. The same building blocks are simply repeated / scaled up, and this seems to work well for every domain: language, hearing, touch, vision, etc. are all built on the same substrate. This similarity holds not only at a low level but at a high level as well.

If you were to wire a visual region to auditory input, it would simply become an auditory region.

Among these building blocks are grid cells: they evolved to make maps for navigation, but with the formation of the neocortex they were repurposed to make maps of all kinds of things, including concepts.
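One common way to think about grid cells as a location code: they come in modules, and each module only reports position as a phase that repeats with that module's spatial period. The sketch below is a toy 1-D illustration under assumed, idealised integer periods (3, 4 and 5 are arbitrary choices, not measured values; `grid_code` and `decode` are hypothetical names). A single module is ambiguous, but the combination of phases across modules identifies a unique location over a much larger range.

```python
from __future__ import annotations


def grid_code(position: int, periods: tuple[int, ...]) -> tuple[int, ...]:
    """Toy encoding: each module reports only the phase (position mod its period)."""
    return tuple(position % p for p in periods)


def decode(code: tuple[int, ...], periods: tuple[int, ...], max_range: int) -> list[int]:
    """Brute-force decoding: all positions in [0, max_range) that produce this code."""
    return [x for x in range(max_range) if grid_code(x, periods) == code]


PERIODS = (3, 4, 5)  # hypothetical module periods, chosen pairwise coprime
RANGE = 3 * 4 * 5    # for coprime periods, codes are unique over this many positions

if __name__ == "__main__":
    # A single module cannot tell positions 7 and 10 apart (both have phase 1).
    print(grid_code(7, (3,)), grid_code(10, (3,)))
    # Combining modules: every position in 0..59 gets a distinct code.
    print(len({grid_code(x, PERIODS) for x in range(RANGE)}))
    print(decode(grid_code(42, PERIODS), PERIODS, RANGE))
```

The design choice here (coprime periods) is only for illustration: it makes the combined code unique over the product of the periods, so a few small modules cover a large range.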

Over time (e.g. as a sensor moves across an object), even a single cortical column can learn a complete model of an object. Every column builds models of something. Upon receiving sensory information, e.g. from looking at a cup, many thousands of models are invoked, and they all vote / compete with each other, trying to figure out what is going on. It is a distributed modelling system.

This is what the “thousand brains theory” describes.

The sensory-fusion model of the brain, i.e. the idea that there is one central model for each object where all the different sensory inputs somehow come together, is wrong.

High-level “voting process” among cortical columns

When presented with a stimulus, e.g. touching something with our eyes closed, the corresponding cortical columns each propose a set of objects that they think the body might be dealing with. With more time, e.g. when you touch the object from different directions, their guesses can be narrowed down.

NOTE: The brain is not dealing with probabilities, but with sets of possibilities and “unions”.
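A minimal sketch of this set-based narrowing within a single column, assuming a toy world where each object is just a mapping from locations on the object to the feature sensed there (the objects, locations and features are all made up). Each touch yields the set of objects that have that feature at that location, and the column keeps the intersection with what it already believed. No probabilities are involved; the candidate set plays the role of the “union” of possibilities.

```python
from __future__ import annotations

# Hypothetical toy objects: location on the object -> feature sensed there.
OBJECTS = {
    "cup": {"top": "rim", "side": "handle", "bottom": "flat"},
    "bowl": {"top": "rim", "side": "smooth", "bottom": "flat"},
    "ball": {"top": "curved", "side": "smooth", "bottom": "curved"},
}


def consistent_with(location: str, feature: str) -> set[str]:
    """All objects that have `feature` at `location`."""
    return {name for name, obj in OBJECTS.items() if obj.get(location) == feature}


def infer(touches: list[tuple[str, str]]) -> list[set[str]]:
    """Start with every known object as possible; narrow the set with each touch."""
    candidates = set(OBJECTS)
    history = []
    for location, feature in touches:
        candidates &= consistent_with(location, feature)
        history.append(set(candidates))  # snapshot after this touch
    return history


if __name__ == "__main__":
    touches = [("top", "rim"), ("bottom", "flat"), ("side", "handle")]
    for step, cands in enumerate(infer(touches), start=1):
        print(step, sorted(cands))
```

Here the first two touches leave both “cup” and “bowl” possible; only the third touch (feeling the handle) settles it.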

Certain layers of these columns have long-range connections, which send projections across the brain. That’s where the voting occurs among all the different columns, which deal with different sensory inputs, at different layers, etc. They can all quickly agree if there is a single best answer → crystallization of the vote.
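A toy sketch of the vote, assuming each column has already reduced its input to a set of candidate objects (the column names and sets are hypothetical). Every column “broadcasts” its set, each object is scored by how many columns include it, and the vote is treated as crystallized only when exactly one object is supported by every column. This is a simplification for illustration, not a model of the actual neural mechanism.

```python
from __future__ import annotations

from collections import Counter


def vote(column_candidates: dict[str, set[str]]) -> tuple[Counter, str | None]:
    """Count support per object; return a winner only if exactly one object is unanimous."""
    support: Counter = Counter()
    for candidates in column_candidates.values():
        support.update(candidates)  # each column adds one vote per candidate
    n_columns = len(column_candidates)
    unanimous = [obj for obj, n in support.items() if n == n_columns]
    winner = unanimous[0] if len(unanimous) == 1 else None
    return support, winner


if __name__ == "__main__":
    columns = {
        "index_finger": {"cup", "bowl"},
        "thumb": {"cup", "can"},
        "vision": {"cup", "bowl", "can"},
    }
    support, winner = vote(columns)
    print(dict(support), winner)
```

No single column is certain here, but only “cup” appears in every column's set, so the vote settles on it. If two objects were still supported by all columns, `winner` would stay `None` until more input arrives.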

A similar process happens at a lower level, in the firing of individual neurons.

Memory in the brain is associative. It is stored in reference frames based on grid cells.

This also explains why it is easier to remember things if you assign them to spatial locations, e.g. in a “memory palace” / “method of loci”.

The brain prefers to store things in reference frames.

Recalling things (or “thinking”, if you will) means mentally moving through those reference frames. (I am not so sure about the “thinking” part yet: I think this only covers the interpolative memory part. Where is the “program synthesis” part?)

One can move through reference frames physically, e.g. by walking through a room, touching an object, etc., or mentally, by thinking about them.
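A toy sketch of a memory palace as a reference frame, assuming a 2-D grid of locations and named movements (the class, the moves and the stored items are all hypothetical, and a Python dict stands in for whatever the cortex actually does). Storing binds an item to a location; recall is a sequence of movements, and each visited location brings back whatever is bound there.

```python
from __future__ import annotations

Location = tuple[int, int]

# Hypothetical movement vocabulary on a 2-D grid.
MOVES = {"north": (0, 1), "south": (0, -1), "east": (1, 0), "west": (-1, 0)}


class ReferenceFrame:
    """Toy reference frame: items are bound to 2-D locations."""

    def __init__(self) -> None:
        self.items: dict[Location, str] = {}

    def store(self, location: Location, item: str) -> None:
        self.items[location] = item

    def walk(self, start: Location, moves: list[str]) -> list[str]:
        """Move step by step from `start`, recalling the item at each visited location."""
        x, y = start
        recalled = [self.items[(x, y)]] if (x, y) in self.items else []
        for move in moves:
            dx, dy = MOVES[move]
            x, y = x + dx, y + dy
            if (x, y) in self.items:
                recalled.append(self.items[(x, y)])
        return recalled


if __name__ == "__main__":
    palace = ReferenceFrame()
    palace.store((0, 0), "milk")   # front door
    palace.store((0, 1), "eggs")   # hallway
    palace.store((1, 1), "bread")  # kitchen
    print(palace.walk((0, 0), ["north", "east"]))
    print(palace.walk((1, 1), ["west", "south"]))
```

The same stored items come back in a different order depending on the path taken, which mirrors the idea that recall is driven by movement through the frame rather than by a flat list lookup.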

The cortex is the executive center of the brain, where thoughts, memories, and language reside.


The hippocampus is a loopy structure around the cortex with many interconnections to it. It sort of manages the information in the cortex, a bit like a librarian.