year: 2014/07

paper: https://www.researchgate.net/publication/262013945_Evolving_Neural_Networks_That_Are_Both_Modular_and_Regular_HyperNeat_Plus_the_Connection_Cost_Technique

website: Presentation | short 5min high level vid

code:

connections: HyperNEAT, modular, regularity, connection cost, Jeff Clune

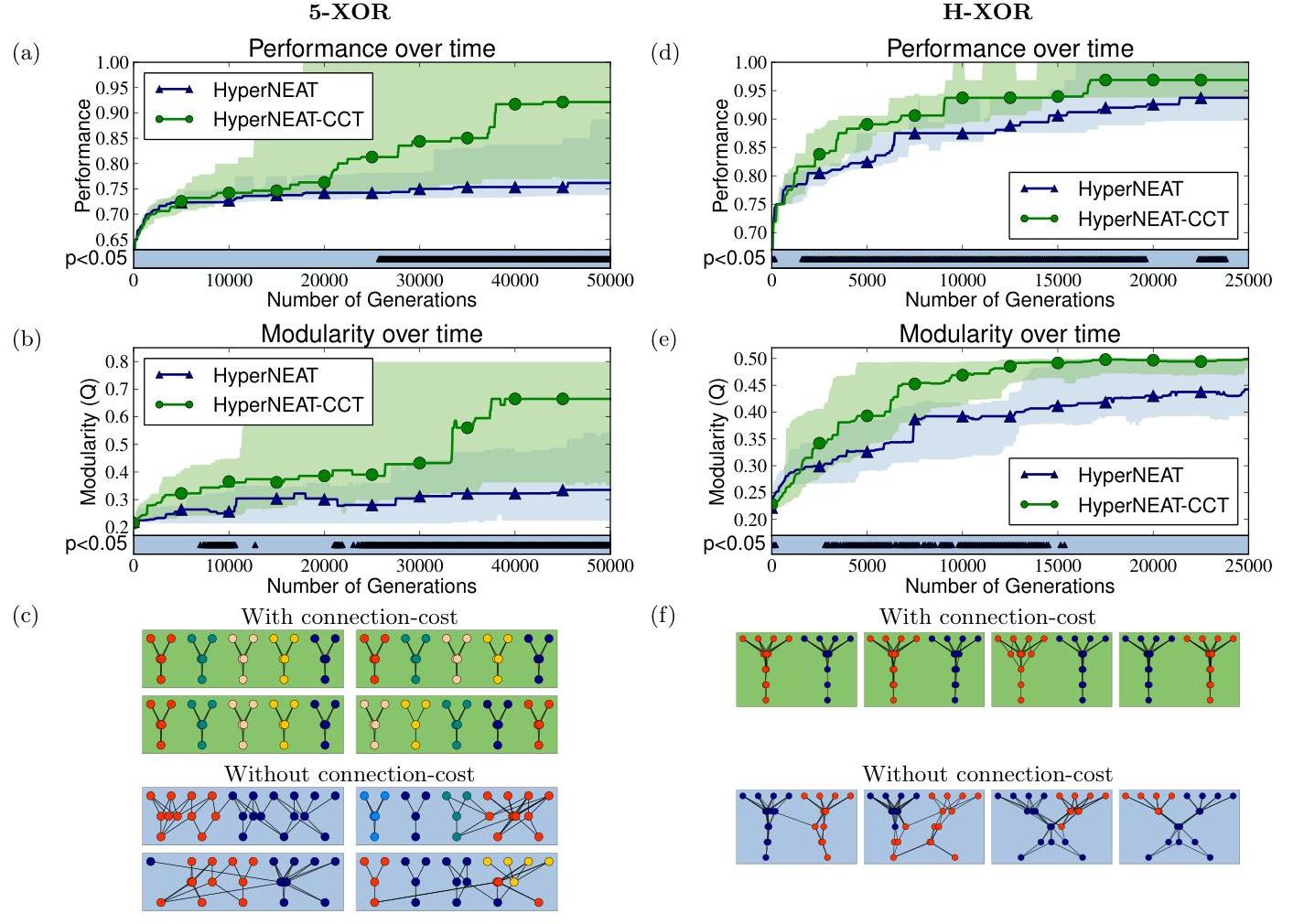

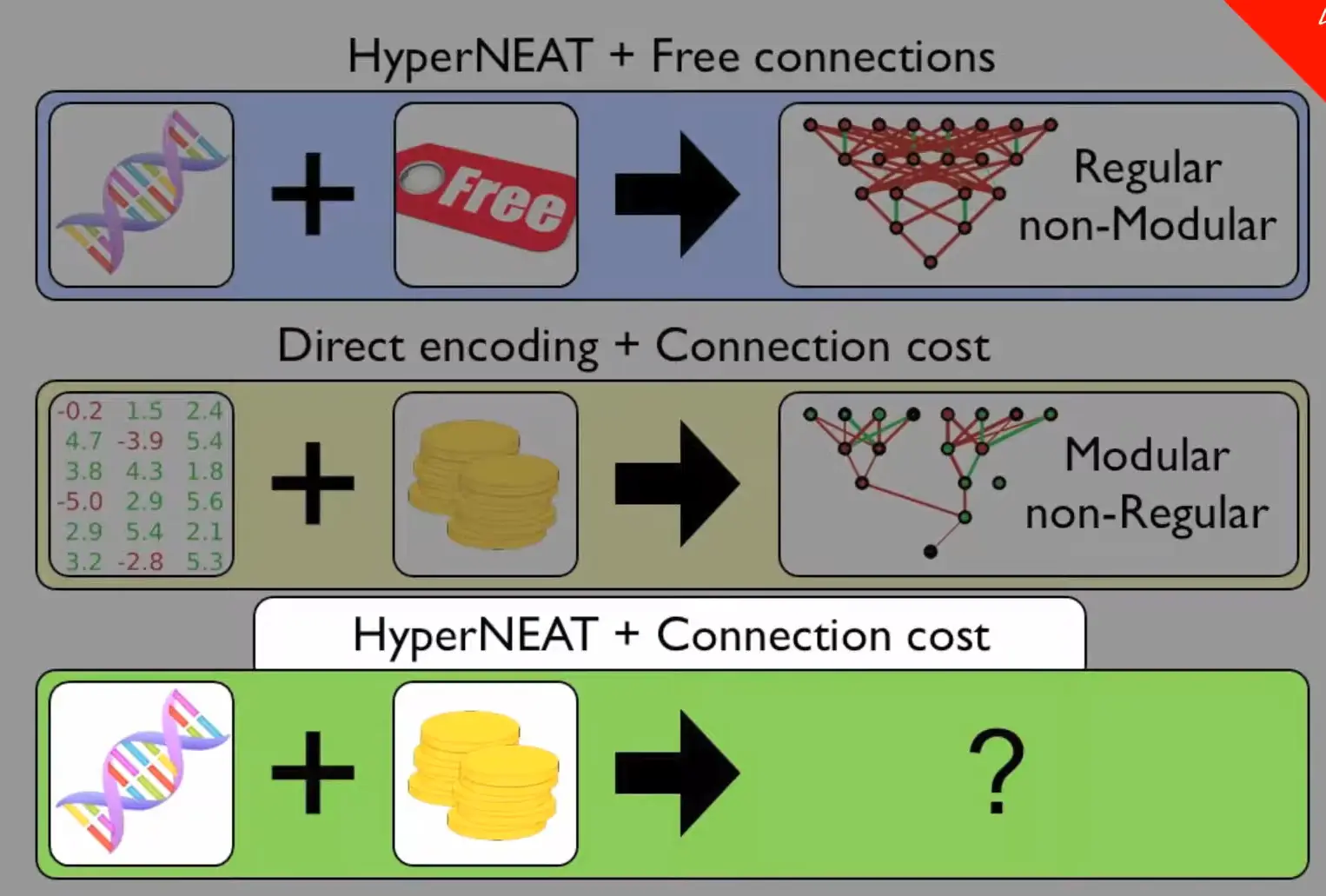

Connection cost is added as a third optimization objective (alongside performance and behavioral diversity).
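A minimal sketch of how three objectives can be compared at once, assuming plain Pareto dominance. The notes do not say which multi-objective scheme the paper uses, so the `Scores` type and `dominates` function are hypothetical names for illustration only. Performance and diversity are maximized, connection cost is minimized.

```python
from typing import NamedTuple


class Scores(NamedTuple):
    """Hypothetical container for the three objectives of one individual."""
    performance: float      # higher is better
    diversity: float        # behavioral diversity, higher is better
    connection_cost: float  # lower is better


def dominates(a: Scores, b: Scores) -> bool:
    """Return True if `a` Pareto-dominates `b`.

    Assumption: plain Pareto dominance over all three objectives.
    Connection cost is negated so that every component is maximized.
    """
    a_vec = (a.performance, a.diversity, -a.connection_cost)
    b_vec = (b.performance, b.diversity, -b.connection_cost)
    no_worse = all(x >= y for x, y in zip(a_vec, b_vec))
    strictly_better = any(x > y for x, y in zip(a_vec, b_vec))
    return no_worse and strictly_better


# Same performance and diversity, but cheaper wiring wins.
cheap = Scores(performance=0.9, diversity=0.5, connection_cost=2.0)
costly = Scores(performance=0.9, diversity=0.5, connection_cost=8.0)
print(dominates(cheap, costly))  # True
print(dominates(costly, cheap))  # False
```

Under this assumption, two networks that perform equally well are separated by their wiring cost, which is what pushes the search toward sparser, shorter connections.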

The connection cost they use is the sum of squared lengths of all connections in the phenotype network.
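The cost described above can be sketched as follows. This assumes each neuron has a fixed geometric position and that connection length is the Euclidean distance between endpoints; the `positions` and `connections` data structures are hypothetical, not taken from the paper's code.

```python
from typing import Dict, Iterable, Tuple

Point = Tuple[float, ...]


def connection_cost(
    positions: Dict[str, Point],
    connections: Iterable[Tuple[str, str]],
) -> float:
    """Sum of squared Euclidean lengths over all expressed connections.

    positions:   neuron id -> coordinates in the phenotype layout (assumed)
    connections: (source id, target id) pairs present in the phenotype
    """
    total = 0.0
    for src, dst in connections:
        # Squared length, so no square root is needed.
        total += sum((a - b) ** 2 for a, b in zip(positions[src], positions[dst]))
    return total


# Two inputs feeding one output; each link has squared length 1 + 1 = 2.
positions = {"in0": (-1.0, 0.0), "in1": (1.0, 0.0), "out": (0.0, 1.0)}
connections = [("in0", "out"), ("in1", "out")]
print(connection_cost(positions, connections))  # 4.0
```

Because the lengths are squared, one long connection is penalized more than several short ones of the same total length, which favors local wiring.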

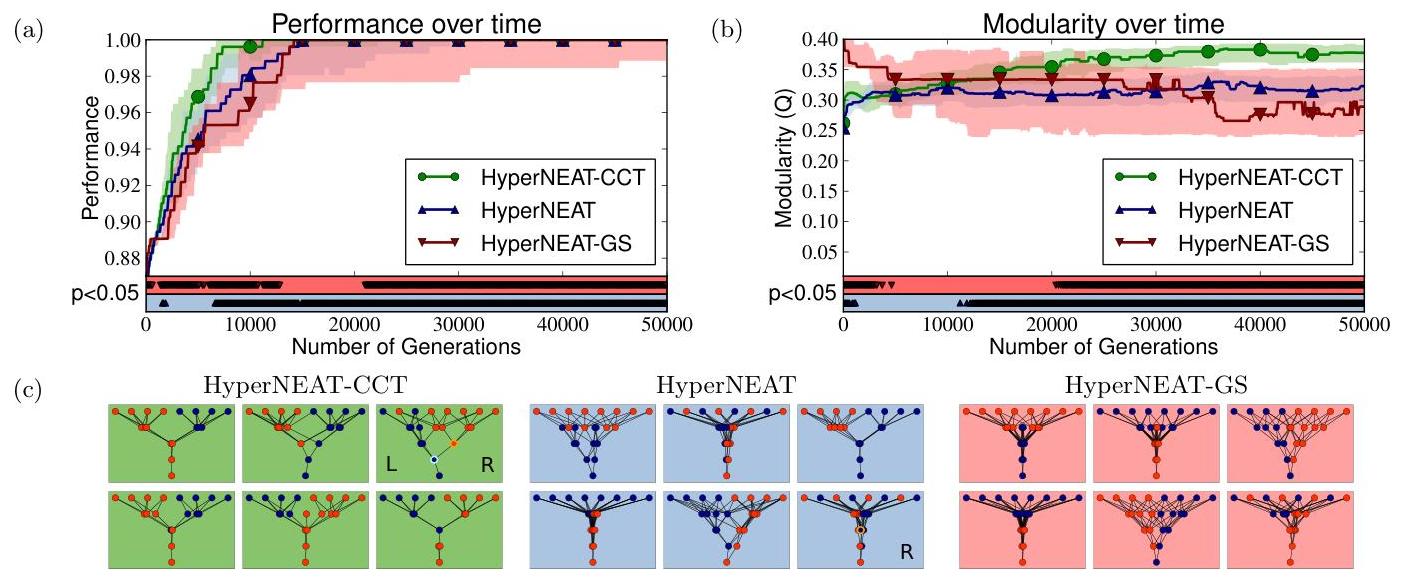

The paper compares four main algorithms:

- HyperNEAT - Standard HyperNEAT with Link-Expression Output (LEO)

- HyperNEAT-GS - HyperNEAT with Gaussian Seed (includes LEO + a Gaussian activation node to encourage local connections)

- HyperNEAT-CCT - HyperNEAT with Connection Cost Technique (includes LEO + connection cost as optimization objective)

- DirectEncoding-CCT - Direct encoding with Connection Cost Technique (comparison baseline from previous work)