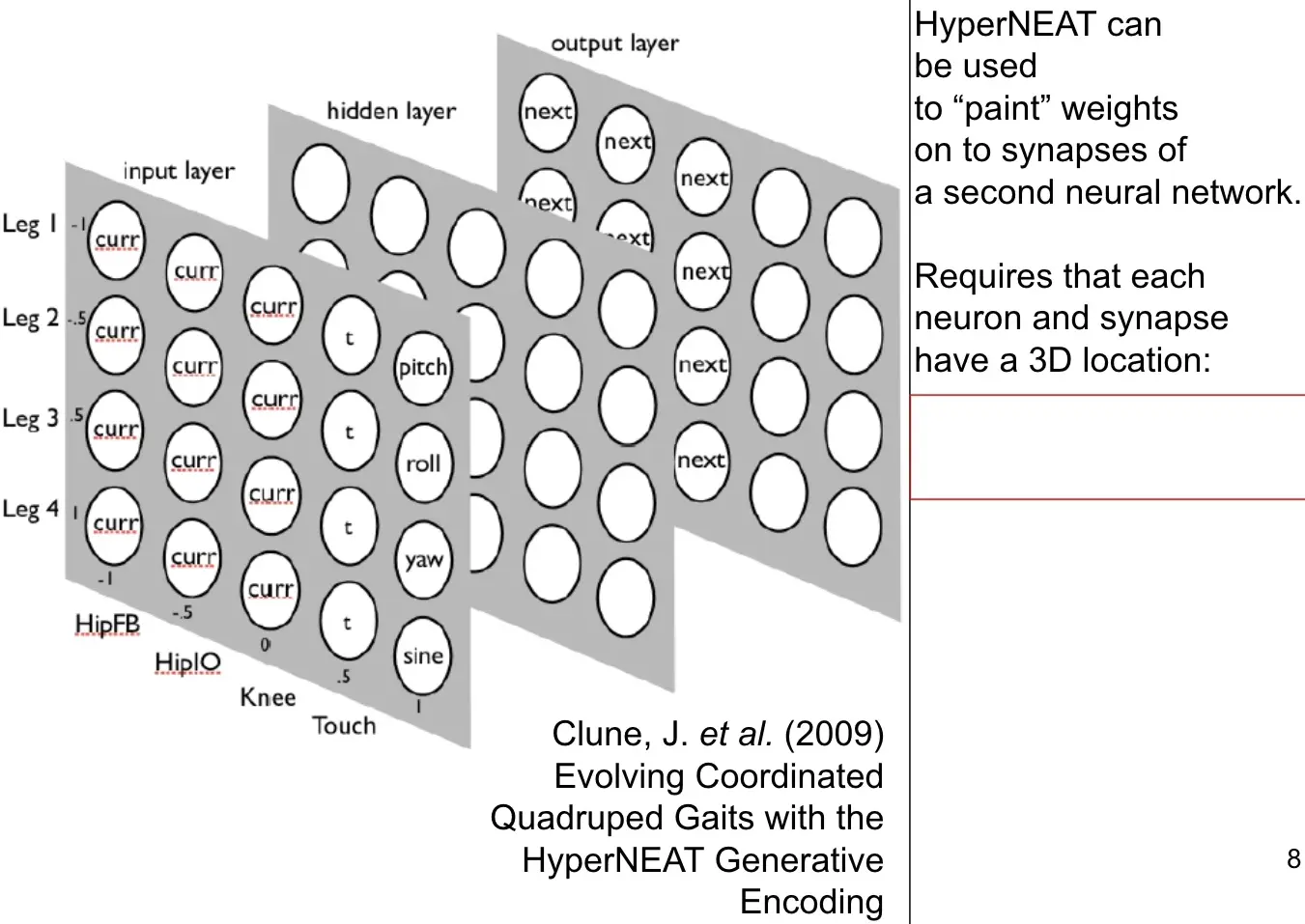

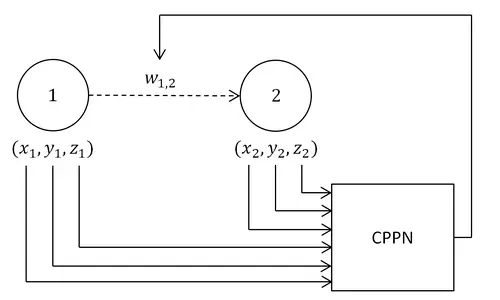

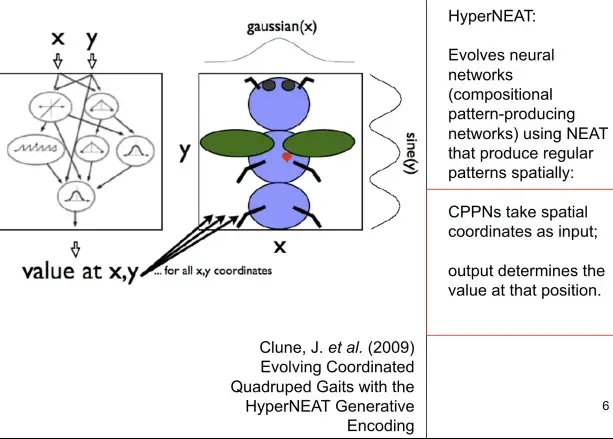

HyperNEAT is an indirect encoding method: it uses NEAT to evolve a CPPN (compositional pattern-producing network), and that CPPN in turn generates the weights of a neural network.

Biological inspiration: Compositional patterns

HyperNEAT is inspired not by biological evolution, but by biological development, where you see patterns within patterns within patterns, a composition all the way down.

You cannot micro-manage each individual cell and tell each one exactly what to do, but you can influence large groups of cells so that they organize across space and time.

HyperNEAT fixes something NEAT struggles with: Re-using useful discovered patterns.

HyperNEAT “paints” regular patterns onto a hypercube (of synaptic weights) using CPPNs. The dimensionality of the hypercube is determined by the number of coordinate inputs to the CPPN.

Turns out: Regular patterns in synaptic connections translate to regular patterns in e.g. gaits of robotic locomotion.

In the example in the image on the right, “curr” are proprioceptive (body-sense) sensors that tell you the angles of the legs of a quadruped robot, and “t” is whether the leg is touching the ground. Pitch, roll and yaw are not associated with individual legs, but tell you the inclination of the body, etc. And “sine” mimics a central pattern generator.

Here, the CPPN paints in 6 dimensions: XYZ-in and XYZ-out, the coordinates of the neuron pairs between which synapses are placed. The neurons inside the CPPN are the genotype; the neurons inside the robot controller are the phenotype (to which we assign fitness). Evolution acts on the CPPN, as opposed to directly evolving the set of controller weights.
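A minimal sketch of the "painting" step, assuming a hand-written function `toy_cppn` in place of an evolved CPPN. The names `toy_cppn` and `build_weights`, the two-layer substrate layout, and the expression threshold of 0.2 are illustrative assumptions, not taken from the lecture or any specific library:

```python
import math
from itertools import product


def gaussian(x):
    return math.exp(-x * x)


def toy_cppn(x1, y1, z1, x2, y2, z2):
    """Hypothetical stand-in for an evolved CPPN: six coordinates in, one weight out."""
    dx, dy, dz = x2 - x1, y2 - y1, z2 - z1
    # A composition of simple functions of the coordinate offsets.
    return math.sin(2.0 * dx) * gaussian(dy) + 0.5 * math.tanh(dz)


def build_weights(cppn, sources, targets, threshold=0.2):
    """Query the CPPN once per (source, target) neuron pair on the substrate."""
    weights = {}
    for src, dst in product(sources, targets):
        w = cppn(*src, *dst)
        # Assumption: only express a synapse if the CPPN output is large enough.
        if abs(w) > threshold:
            weights[(src, dst)] = w
    return weights


# Neurons are placed at fixed 3D coordinates; inputs at z = -1, outputs at z = +1.
input_layer = [(x, y, -1.0) for x in (-1.0, 0.0, 1.0) for y in (-1.0, 1.0)]
output_layer = [(x, y, 1.0) for x in (-1.0, 0.0, 1.0) for y in (-1.0, 1.0)]
weights = build_weights(toy_cppn, input_layer, output_layer)
```

Because this toy CPPN only looks at coordinate offsets, every pair of neurons with the same relative position gets the same weight, which is one simple kind of regularity.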

- The indirect HyperNEAT encoding allows larger networks to be evolved with complex connectivity patterns.

- Additionally, because HyperNEAT can learn from the geometry of the task, it is possible to increase the number of ANN inputs and outputs without further training. [by finer sampling of the CPPN]

- If the evolved CPPN creates a useful network connectivity pattern at a small scale, it often also produces a useful output at a larger scale. – src
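The "finer sampling" idea in the bullets above can be sketched as follows, assuming a 1D substrate for brevity and a hand-written `toy_cppn` in place of an evolved one (`grid` and `weight_matrix` are hypothetical helper names):

```python
import math


def toy_cppn(x_in, x_out):
    """Hypothetical stand-in for an evolved CPPN over a 1D-in, 1D-out substrate."""
    d = x_out - x_in
    return math.exp(-4.0 * d * d) * math.cos(3.0 * x_in)


def grid(n):
    """n evenly spaced neuron coordinates in [-1, 1]."""
    return [-1.0 + 2.0 * i / (n - 1) for i in range(n)]


def weight_matrix(cppn, n_in, n_out):
    """Sample the same CPPN at whatever substrate resolution is requested."""
    return [[cppn(xi, xo) for xo in grid(n_out)] for xi in grid(n_in)]


coarse = weight_matrix(toy_cppn, 3, 3)  # 3 inputs, 3 outputs
fine = weight_matrix(toy_cppn, 5, 5)    # same CPPN, denser substrate
```

The CPPN itself is unchanged between the two calls; only the set of coordinates it is queried at grows. Neurons that sit at the same coordinates in both substrates receive the same weights, and the new neurons fill in the pattern between them.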

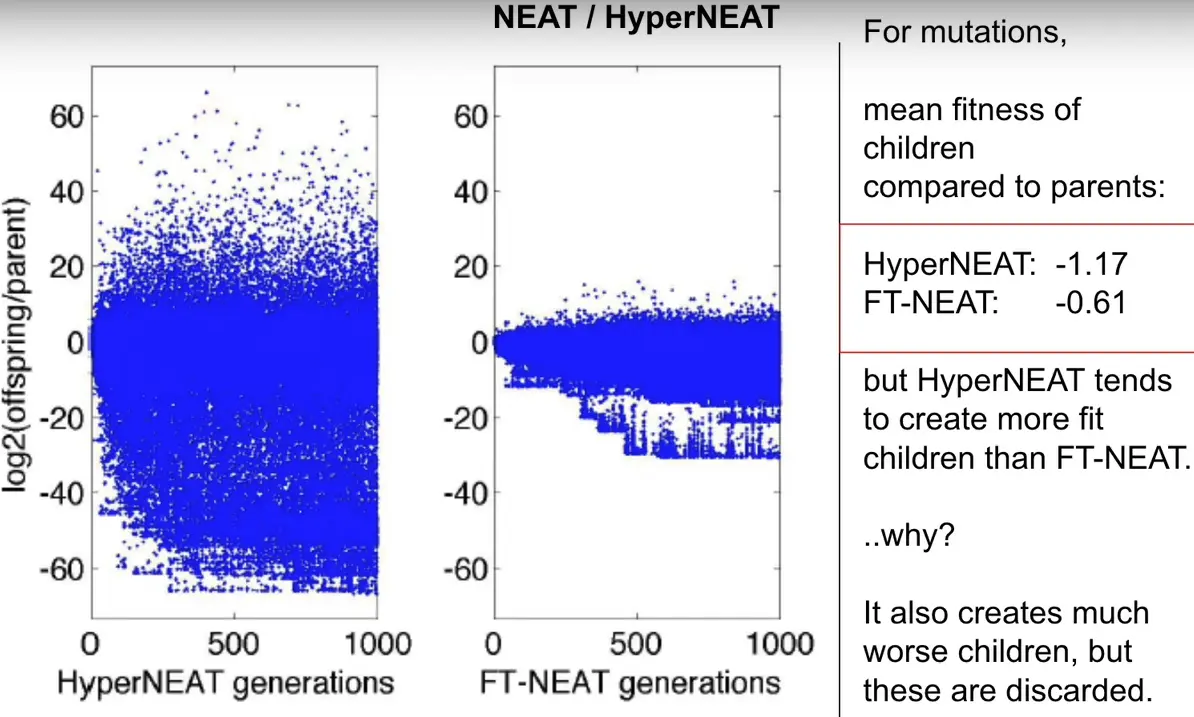

HyperNEAT = Sledgehammer; NEAT = Scalpel

With NEAT, you can get stuck in local optima easily, as it takes small steps.

A mutation in the CPPN network changes many neurons in the controller network.

Usually, making global changes with a sledgehammer sounds like a bad idea, but in many cases, it makes good global changes.
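To illustrate the sledgehammer vs. scalpel contrast, here is a toy comparison that assumes a two-parameter function standing in for a CPPN (real CPPNs are evolved networks; `cppn`, `decode`, and `count_changed` are hypothetical names). It mutates one CPPN parameter in the indirect encoding and one weight in a direct encoding, then counts how many controller weights differ from the parent:

```python
import math


def cppn(params, x_in, x_out):
    """Toy two-parameter pattern generator (assumption, not an evolved CPPN)."""
    freq, width = params
    d = x_out - x_in
    return math.sin(freq * x_in) * math.exp(-width * d * d)


def decode(params, coords):
    """Indirect encoding: genotype (params) -> phenotype (weight matrix)."""
    return [[cppn(params, xi, xo) for xo in coords] for xi in coords]


def count_changed(a, b, eps=1e-9):
    """Number of matrix entries that differ between a and b."""
    return sum(abs(u - v) > eps for ra, rb in zip(a, b) for u, v in zip(ra, rb))


coords = [-1.0, -0.5, 0.0, 0.5, 1.0]
parent = decode((2.0, 1.0), coords)

# Indirect encoding: mutate a single CPPN parameter, then re-decode.
indirect_child = decode((2.3, 1.0), coords)

# Direct encoding: mutate a single controller weight.
direct_child = [row[:] for row in parent]
direct_child[0][0] += 0.3

indirect_changed = count_changed(parent, indirect_child)
direct_changed = count_changed(parent, direct_child)
```

In this toy setup the single CPPN mutation shifts most of the 25 controller weights at once, while the direct mutation touches exactly one.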

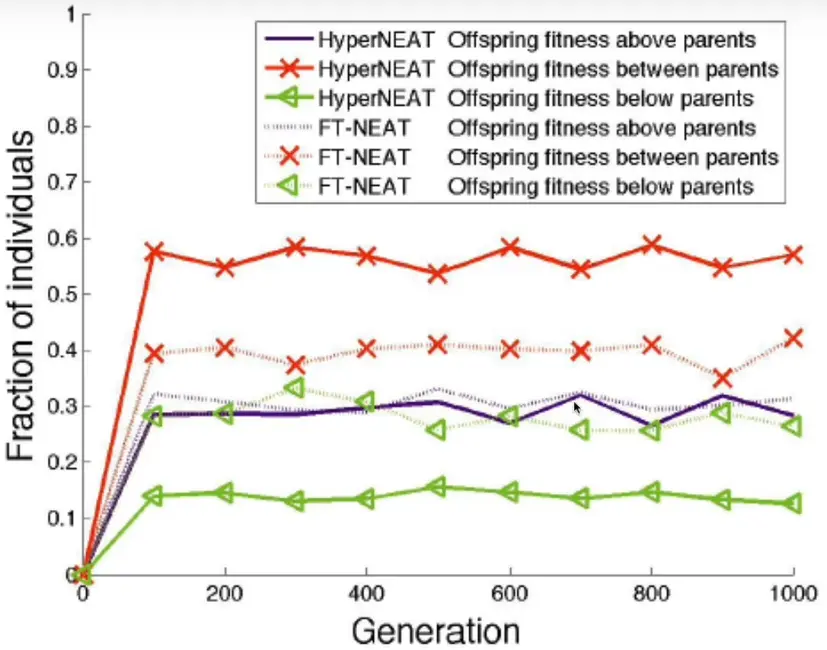

For mutation:

HyperNEAT offspring (from crossover) tend to be more like their parents than in FT-NEAT.

HyperNEAT can cut two networks in half and glue them together without breaking things.

Crossover

Crossover works similarly to NEAT, except that we are gluing together parts of the CPPN, not the controller.
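A simplified sketch of NEAT-style crossover applied to CPPN genomes, assuming each genome is a dict from innovation number to a connection gene `(source, target, weight)`. The genome layout and the node names are illustrative assumptions, and details such as disabled genes and equal-fitness handling are left out:

```python
import random


def crossover(fitter, other, rng):
    """Align genes by innovation number; the child keeps the fitter parent's structure."""
    child = {}
    for innovation, gene in fitter.items():
        if innovation in other:
            # Matching gene: inherit from either parent at random.
            child[innovation] = rng.choice([gene, other[innovation]])
        else:
            # Disjoint or excess gene: inherit from the fitter parent.
            child[innovation] = gene
    return child


rng = random.Random(0)
# Hypothetical CPPN genomes: the connections live in the CPPN, not in the controller.
fitter = {1: ("x1", "out", 0.5), 2: ("y1", "out", -1.2), 4: ("x1", "h1", 0.9)}
other = {1: ("x1", "out", -0.3), 2: ("y1", "out", 0.7), 3: ("x2", "out", 0.1)}
child = crossover(fitter, other, rng)
```

The child CPPN is then decoded into a controller the same way as any other CPPN, so the recombination never operates on controller weights directly.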

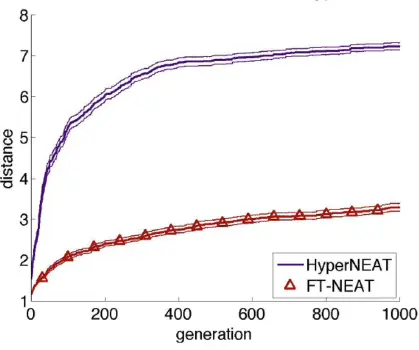

HyperNEAT makes it much easier for evolution to find regular patterns of motion → Much higher fitness than regular NEAT.

References

(ES-(Hyper-))NEAT resources

PyTorch (Hyper)NEAT code

HyperNEAT paper (high quality)

Good BP series: Could not find ES-HyperNEAT paper… There is “Enhancing ES-HyperNEAT” (same authors).

→ nvm, found it. See ES-HyperNEAT.

Bad ES-HyperNEAT code

Evolutionary Robotics, Lecture 16: NEAT & HyperNEAT (Josh Bongard)

hypernetwork

evolutionary optimization

Evolutionary Robotics, Lecture 17: Crossing the sim2real gap.