year: 2021/12

paper: https://arxiv.org/pdf/2201.10346

website:

code:

connections: michael levin, collective intelligence, cognitive light cone, self, cognition, basal cognition, GRN, emergence

TAME: Experimentally-driven way to recognize, compare, and control very different kinds of intelligences.

What is a true Agent?

For example, one view is that only biological, evolved forms have intrinsic motivation, while software AI agents are only faking it via functional performance (but don’t actually care). But which biological systems really care – fish? Single cells? Do mitochondria (which used to be independent organisms) have true preferences about physiological states? The lack of consensus on this question in purely biological systems highlights the futility of binary categories.

→

There is no magical jump to "true cognition"

For any putative difference between a creature that is proposed to have true preferences, memories, and plans and one that supposedly has none, we can now construct in-between, hybrid forms which then make it impossible to say whether the resulting being is an Agent or not.

→

Given the gradualist nature of the framework, the key question for any agent is “how well”, “how much”, and “what kind” of capacity it has for each of those key aspects, which in turn allows agents to be directly compared in an option space.

Whatever true goals and preferences are, there must exist primitive, minimal versions from which they evolved.

No privileged substrate is required for a self.

Basic hallmarks / fundamental invariances of being a Self

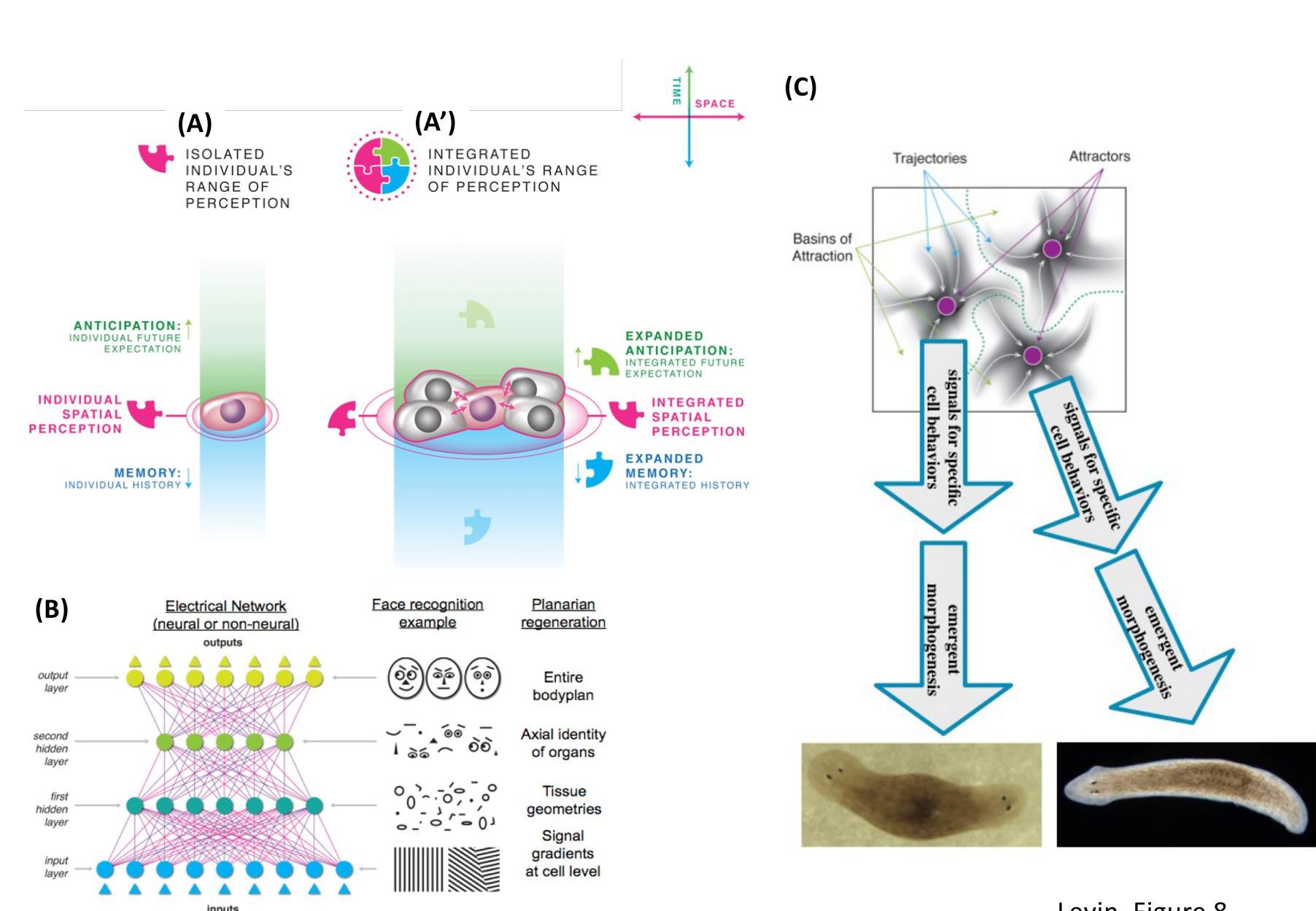

a) The ability to pursue goals, b) to own compound memories, and c) to serve as the center of gravity for credit assignment, where all of these are at a scale larger than possible for any of its components alone.

The expenditure of energy in ways that effectively reach specific states despite uncertainty, limitations of capability, and meddling from outside forces is proposed as a central unifying invariant for all Selves – a basis for the space of possible agents.

Scaling agents

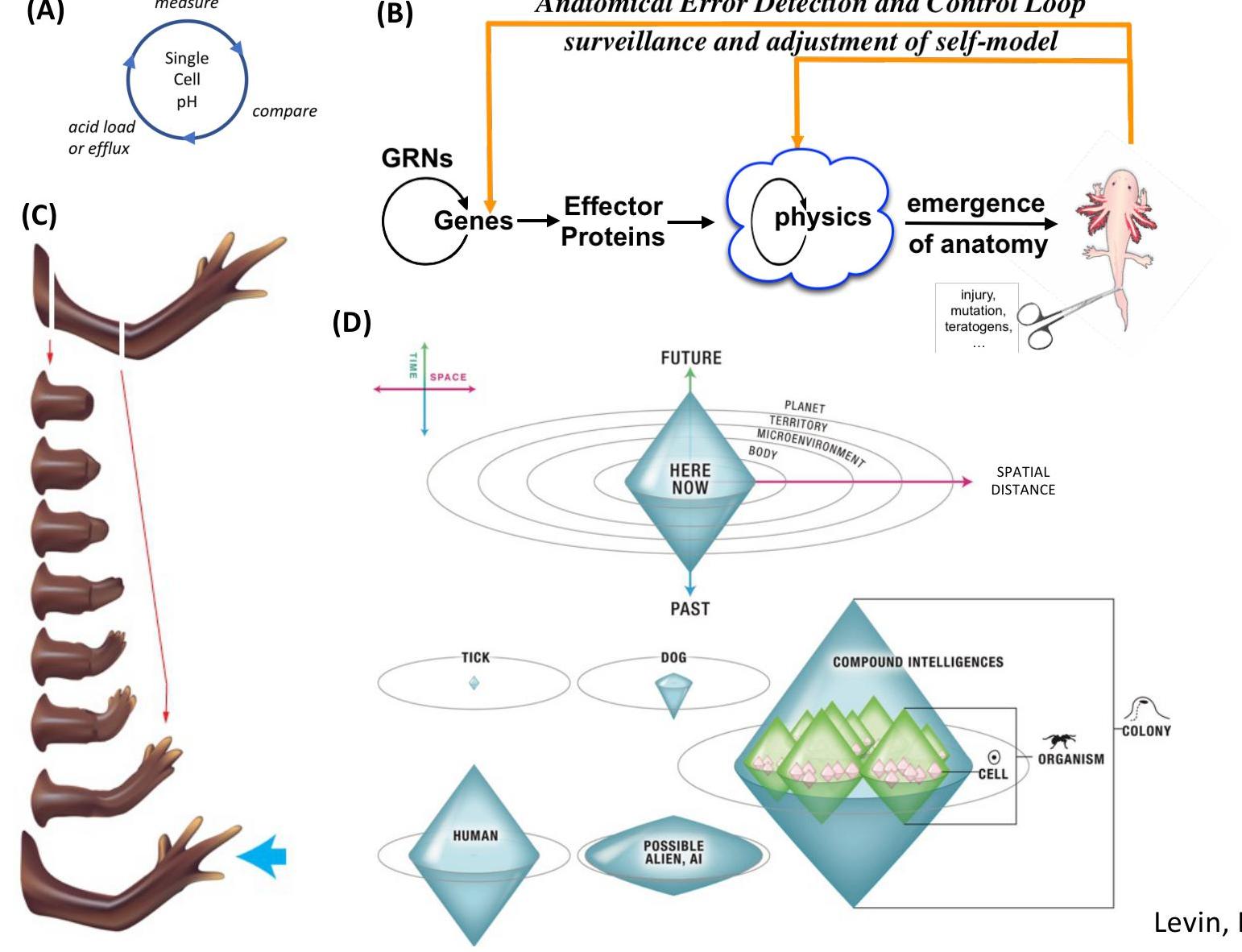

In general, larger selves 1) are capable of working toward states of affairs that occur farther into the future (perhaps outlasting the lifetime of the agent itself – an important great transition along the cognitive continuum); 2) deploy memories further back in time (their actions become less “mechanism” and more decision-making, because they are linked to a network of functional causes and information with larger diameter); and 3) expend effort to manage sensing/effector activity in larger spaces (from subcellular networks to the extended mind).

Overall, increases in agency are driven by mechanisms that scale up stress – the scope of states that an agent can possibly be stressed about (in the sense of pressure to take corrective action). In this framework, stress (as a system-level response to distance from setpoint states), preferences, motivation, and the ability to functionally care about what happens are tightly linked. Homeostasis, necessary for life, evolves into allostasis as new architectures allow tight, local homeostatic loops to be scaled up to measure, cause, and remember larger and more complex states of affairs.
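To make "stress as distance from setpoint" concrete for myself, here is a minimal sketch of a single homeostatic loop. It is my own toy illustration, not something from the paper: the scalar state, the proportional `gain`, and the function names are all assumptions.

```python
def stress(state, setpoint):
    """Toy stress measure (assumption): absolute distance from the setpoint."""
    return abs(setpoint - state)


def homeostat_step(state, setpoint, gain=0.5):
    """One corrective action: move a fraction `gain` of the way to the setpoint."""
    return state + gain * (setpoint - state)


def run_loop(state, setpoint, steps=20, gain=0.5):
    """Run the loop and record stress before each step and after the last one."""
    history = [stress(state, setpoint)]
    for _ in range(steps):
        state = homeostat_step(state, setpoint, gain)
        history.append(stress(state, setpoint))
    return state, history


final_state, history = run_loop(state=10.0, setpoint=37.0)
```

In this picture, scaling up agency would mean widening what counts as `state` and `setpoint` (larger, more complex states of affairs), not changing the loop itself.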

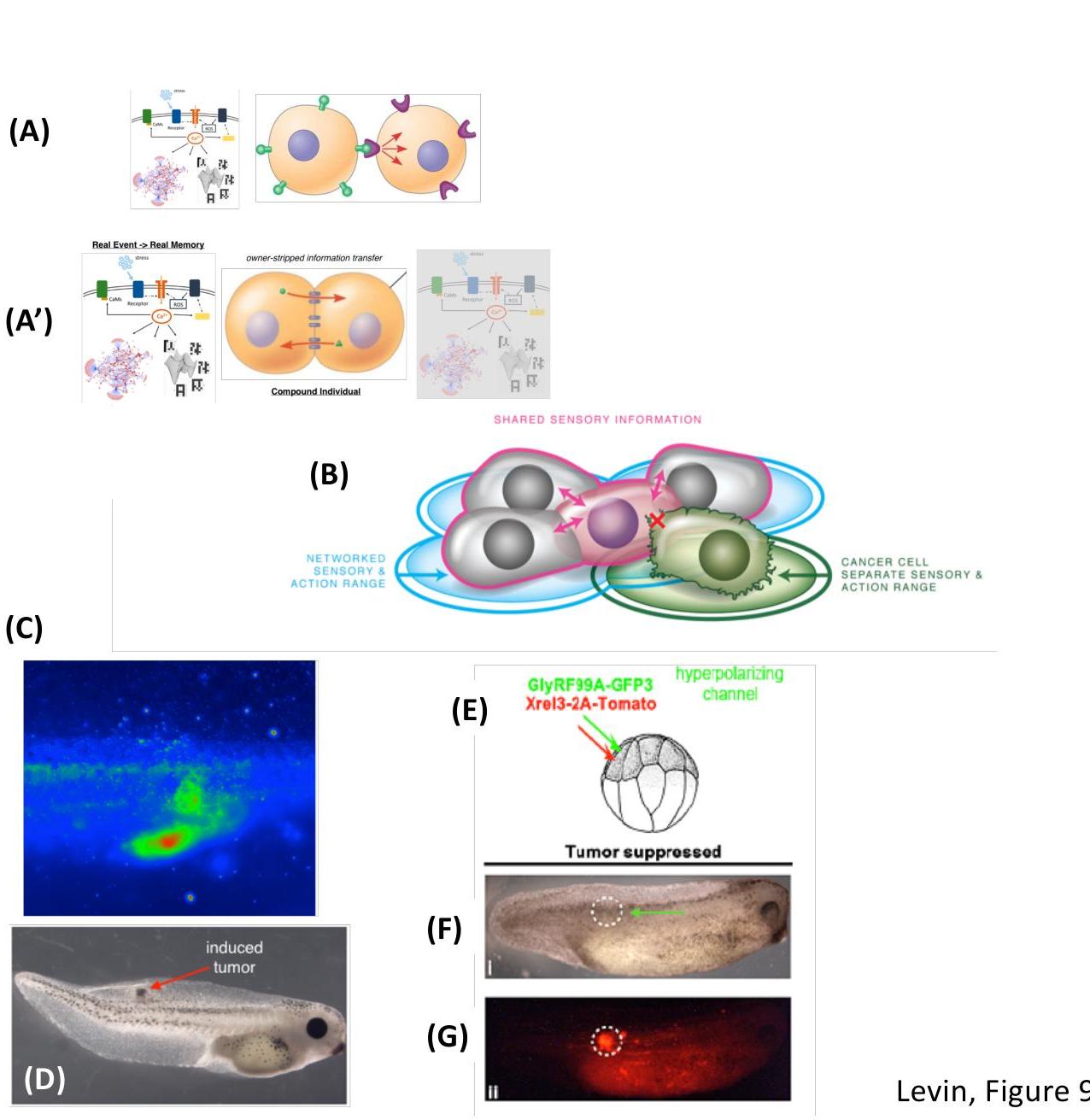

Additional implications of this view are that Selves: are malleable (the borders and scale of any Self can change over time), can be created by design or by evolution, and are multi-scale entities that consist of other, smaller Selves.

Hierarchical modularity

The lower-level subsystems simplify the search space for the higher-level agent because their modular competency means that the higher-level system doesn’t need to manage all the microstates.

In turn, the higher-level system deforms the option space for the lower-level systems so that they do not need to be as clever, and can simply follow local energy gradients.
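A rough way to picture the two directions of this relationship, as a sketch under my own assumptions (a one-dimensional state, a quadratic energy landscape, and hypothetical function names; none of this is from the paper): the higher level only chooses where the energy minimum sits, and the lower level blindly follows the local gradient.

```python
def make_gradient(target):
    """Higher level 'deforms' the landscape: energy is (x - target)**2."""
    def gradient(x):
        return 2.0 * (x - target)
    return gradient


def follow_gradient(x, gradient, rate=0.1, steps=100):
    """Lower level: no planning, just repeated steps down the local gradient."""
    for _ in range(steps):
        x -= rate * gradient(x)
    return x


def higher_level_plan(x, subgoals):
    """Higher level manages only a short list of targets, not the microstates."""
    reached = []
    for target in subgoals:
        x = follow_gradient(x, make_gradient(target))
        reached.append(x)
    return reached


reached = higher_level_plan(0.0, [3.0, -2.0])
```

The higher level never touches individual steps, and the lower level never knows why the minimum moved, which is the division of labor the two paragraphs above describe.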

Intelligence involves being able to temporarily move away from one’s goals

… but the agent’s internal complexity has to facilitate some degree of complexity (akin to hidden layers in an ANN) in the goal-directed activity that enables the buffering needed for patience and indirect paths to the goal. This buffering enables the flip side of homeostatic problem-driven (stress reduction) behavior by cells: the exploration of the space for novel opportunities (creativity) by the collective agent, and the ability to acquire more complex goals.
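A toy illustration of "temporarily moving away from the goal", using my own assumptions throughout (the grid, the Manhattan-distance measure, and both agents are hypothetical, not from the paper). A purely greedy agent that only accepts moves reducing its distance to the goal gets stuck at a wall, while an agent that can search ahead finds a route on which the distance first increases.

```python
from collections import deque

GRID = [
    "S.#.G",
    "..#..",
    ".....",
]
START, GOAL = (0, 0), (0, 4)


def neighbors(cell):
    """Yield the open (non-wall) cells adjacent to `cell`."""
    r, c = cell
    for dr, dc in ((0, 1), (1, 0), (0, -1), (-1, 0)):
        nr, nc = r + dr, c + dc
        if 0 <= nr < len(GRID) and 0 <= nc < len(GRID[0]) and GRID[nr][nc] != "#":
            yield (nr, nc)


def distance(cell):
    """Manhattan distance to the goal, standing in for 'stress'."""
    return abs(cell[0] - GOAL[0]) + abs(cell[1] - GOAL[1])


def greedy_path():
    """Only ever take moves that strictly reduce distance; None if stuck."""
    path = [START]
    while path[-1] != GOAL:
        better = [n for n in neighbors(path[-1]) if distance(n) < distance(path[-1])]
        if not better:
            return None
        path.append(min(better, key=distance))
    return path


def patient_path():
    """Breadth-first search: accepts detours that raise distance for a while."""
    parent = {START: None}
    queue = deque([START])
    while queue:
        cell = queue.popleft()
        if cell == GOAL:
            path = []
            while cell is not None:
                path.append(cell)
                cell = parent[cell]
            return path[::-1]
        for n in neighbors(cell):
            if n not in parent:
                parent[n] = cell
                queue.append(n)
    return None


greedy = greedy_path()
patient = patient_path()
detour_distances = [distance(cell) for cell in patient]
```

Here the search queue plays the role of the internal buffering: it lets the agent hold on to the goal while its moment-to-moment distance gets worse.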

Stopped at 43:30; p14
