The kinds of electrical states that matter for decision-making at the anatomical level operate on very different timescales from those that guide behaviour.

Electrical (neural) activity driving muscle movement happens on a timescale of milliseconds.

Anatomical patterns change on timescales of minutes, hours, and in some cases days. These are stable patterns, in a different frequency range.

Cells are very good at distinguishing these different types of electrical signals (multi-scale problem solving in which the scales do not interfere with each other).
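One way to picture fast and slow electrical signals sharing a medium without interfering is timescale separation by filtering. This is an assumption for illustration only (a first-order low-pass filter, not a claim about how cells actually decode their signals): a hypothetical 100 Hz "neural" oscillation is superimposed on a slow step change, and a filter with a hypothetical 1 s time constant `tau` recovers the slow component nearly untouched by the fast one.

```python
import math

def low_pass(samples, dt, tau):
    """First-order (exponential) low-pass filter with time constant tau (seconds)."""
    alpha = dt / (tau + dt)
    y = samples[0]
    out = []
    for x in samples:
        y += alpha * (x - y)  # move a small fraction of the way toward the input
        out.append(y)
    return out

dt = 0.001                            # 1 ms time step
t = [i * dt for i in range(20_000)]   # 20 s of signal
slow = [1.0 if ti >= 5.0 else 0.0 for ti in t]               # hypothetical slow state change at t = 5 s
fast = [0.5 * math.sin(2 * math.pi * 100 * ti) for ti in t]  # hypothetical 100 Hz fast activity
mixed = [s + f for s, f in zip(slow, fast)]

filtered = low_pass(mixed, dt, tau=1.0)
print(round(filtered[4_900], 3), round(filtered[-1], 3))  # just before vs. long after the step
```

The first value stays near 0 and the last ends up close to 1.0; the residual 100 Hz ripple is well under 1% of its input amplitude.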

The interventions needed are also incredibly brief: because these electrical circuits have stable attractors, they maintain a state once you knock them into it. These anatomical patterns are changed by adding or removing ion channels (or similar), either through genetic interventions (?) or chemical cocktails.
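The attractor argument can be made concrete with the textbook bistable system `dv/dt = v - v**3 + I(t)`, which has stable states at v = -1 and v = +1 and an unstable point at 0. This is a generic toy model chosen for illustration, not a model of any specific ion-channel circuit; the pulse amplitude and durations are assumptions. A brief pulse that pushes v past 0 flips the system, which then stays in the new state with no further input; a pulse that is too short does not.

```python
def simulate(pulse_start, pulse_len, amplitude=2.0, v0=-1.0, dt=0.01, t_end=20.0):
    """Euler-integrate dv/dt = v - v**3 + I(t) and return the final state.

    I(t) is a rectangular pulse of height `amplitude` lasting `pulse_len`
    time units from `pulse_start`; elsewhere the input is zero.
    """
    v = v0
    for k in range(int(round(t_end / dt))):
        t = k * dt
        current = amplitude if pulse_start <= t < pulse_start + pulse_len else 0.0
        v += dt * (v - v**3 + current)
    return v

print(simulate(5.0, 0.0))  # no pulse: stays in the v = -1 state
print(simulate(5.0, 0.2))  # pulse too short: relaxes back toward -1
print(simulate(5.0, 1.0))  # brief pulse, then no input: settles near +1
```

The point of the toy is that the input only has to last long enough to cross the boundary between basins; after that, the system's own dynamics do the maintenance.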

Incredible plasticity of cells and the brain

If you put eyes on the back of a tadpole, even though the brain has evolved for millions of years to expect visual signals from the front, suddenly there is an "itchy patch" sending signals from the back, and the animal has no problem integrating that information and seeing with the new eyes on its tail.

Plants do very similar things with electrical signaling to what Michael Levin talks about, even though plants and animals have different origins of multicellularity.

Both lineages arrived at this independently, because even their bacterial ancestors propagated brain-like potassium waves.

Images (Graphics, Memes, …)

His research interests:

I am not interested in emergence of mere complexity. That is easy; simple cellular automata (CAs) such as the Game of Life enable huge complexity to come from very simple rules, as do the fractals emerging from short generative formulas. But what such cellular automata and fractals do not offer is goal-seeking behavior – they just roll forward in an open-loop fashion regardless of what happens.

… (although, I must say that I am no longer convinced that we have plumbed the depths in those systems – after what we saw in this study, I am motivated to start looking for goal-directed closed-loop activity in CA’s as well, who knows). What I am after is very minimal systems in which some degree of problem-solving capacity emerges, and specifically, capacity that is not specifically baked in by an explicit mechanism. - Levin
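To make the "open-loop" point concrete, here is a minimal Game of Life step (Conway's standard B3/S23 rules) on an unbounded grid stored as a set of live cells. Nothing in the update consults an outcome or a target state: every generation is computed purely from the previous one, however complex the resulting patterns get. The set-of-coordinates representation and the names are choices made here for illustration.

```python
from collections import Counter

def step(live):
    """Advance Conway's Game of Life by one generation (B3/S23 rules).

    `live` is a set of (row, col) coordinates of live cells on an unbounded grid.
    """
    neighbour_counts = Counter(
        (r + dr, c + dc)
        for r, c in live
        for dr in (-1, 0, 1)
        for dc in (-1, 0, 1)
        if (dr, dc) != (0, 0)
    )
    # Birth with exactly 3 neighbours; survival with 2 or 3.
    return {cell for cell, n in neighbour_counts.items()
            if n == 3 or (n == 2 and cell in live)}

blinker = {(1, 0), (1, 1), (1, 2)}
glider = {(0, 1), (1, 2), (2, 0), (2, 1), (2, 2)}

g = glider
for _ in range(4):
    g = step(g)
print(step(blinker))                             # the blinker flips to vertical
print(g == {(r + 1, c + 1) for r, c in glider})  # the glider has moved one cell diagonally
```

The rule keeps rolling forward identically whether or not the pattern it produces is "useful", which is the contrast the quote draws with goal-seeking behaviour.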

What he researches: understanding how cognition and goals scale (bacteria perhaps care about local sugar concentration; salamander cells care about their position in anatomical space, e.g. how many limbs there should be).

Notes from him @lex#1

There is agentic behaviour in every single cell.

Cells battle each other over resources, especially in early stages.

There is no top-down control.

A system with good top-down control is very effective / highly evolvable, but also highly susceptible to hijacking by parasites, cheaters, …

Why doesn't nature communicate genetic health with more bandwidth than a peacock's feather dance? Because a signal that is too understandable is easily controllable.

Show some cards, some bluffing, …

Computers are tuned for precision and correctness; biology is tuned for resilience, quick reaction, active inference, and reduction of surprise.

Cells are programmable computers.

The genome does not determine your functions on its own; the electrical configuration / competency also plays a role.

The nature-nurture dichotomy is much more complex and fluid.

Competency can hide information from natural selection: a bad genome configuration can still outperform if the organism has good competency.

Evolution builds resilient problem-solving machines.

These machines can reach the same goal by completely different means (cells suddenly become much bigger, thing x is swapped for thing y, …).

Things life has to do: coarse-grain experience, minimize surprise, fight for limited resources, perform active inference.
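"Minimize surprise" has a precise reading in information theory: the surprisal of an observation x under a model p is -ln p(x). The sketch below shows only the perceptual half of that idea (updating beliefs so observations become less surprising), under assumed values: a Gaussian belief with fixed variance, a hypothetical world that keeps reporting 3.0, and a simple delta-rule update with a hypothetical learning rate. Full active inference also lets the agent act on the world to bring observations in line with its predictions, which this toy does not model.

```python
import math

def surprisal(x, mu, sigma=1.0):
    """-ln p(x) for a Gaussian belief N(mu, sigma^2)."""
    return 0.5 * math.log(2 * math.pi * sigma**2) + (x - mu) ** 2 / (2 * sigma**2)

observations = [3.0] * 50  # hypothetical: the environment keeps reporting 3.0
mu = 0.0                   # initial belief (assumption)
learning_rate = 0.2        # assumption

trace = []
for x in observations:
    trace.append(surprisal(x, mu))
    mu += learning_rate * (x - mu)  # shift the belief toward what was observed

print(round(trace[0], 2), round(trace[-1], 2), round(mu, 3))
```

Surprisal falls from about 5.42 nats to about 0.92 nats, the minimum possible for this belief (½ ln 2π when σ = 1), as the belief converges on the observed value.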

Thermostats have goals.
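The thermostat is the standard minimal example of a goal-directed (closed-loop) system: it measures the gap between the current state and a setpoint and acts to close it, even when the world changes underneath it. The sketch below compares a bang-bang thermostat with a heater that simply stays on (open loop). The room model (linear heat loss toward the outside temperature, as in Newton's law of cooling), all constants, and the mid-run cold snap are hypothetical.

```python
def run(closed_loop=True, setpoint=20.0, steps=600):
    """Simulate room temperature (deg C) with one update per time step.

    The room loses heat in proportion to the indoor/outdoor difference and gains
    a fixed amount per step while the heater is on. All constants are made up.
    """
    temp, outside, heater_on = 15.0, 5.0, False
    history = []
    for k in range(steps):
        if k == steps // 2:
            outside = -10.0  # perturbation: a cold snap halfway through
        if closed_loop:
            # Bang-bang control with a +/-0.5 deg hysteresis band around the goal.
            if temp < setpoint - 0.5:
                heater_on = True
            elif temp > setpoint + 0.5:
                heater_on = False
        else:
            heater_on = True  # open loop: ignores the temperature entirely
        temp += 0.05 * (outside - temp) + (2.0 if heater_on else 0.0)
        history.append(temp)
    return history

closed = run(closed_loop=True)
open_loop = run(closed_loop=False)
print(min(closed[100:]), max(closed[100:]))  # stays in a narrow band around 20
print(open_loop[299], open_loop[-1])         # goes wherever the physics takes it
```

The closed-loop run holds the room within roughly two degrees of 20 °C before and after the cold snap, while the always-on heater settles near 45 °C and then near 30 °C: same heater, but only the closed-loop version pursues a setpoint.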

Theories are useful if they help you advance (toward whatever goal).

In humans, that (cognitive) light cone can stretch beyond their lifetime, past what is achievable within it.


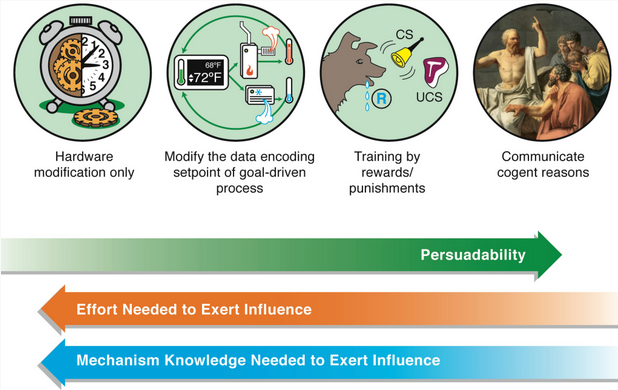

Intelligent things have problem-solving competencies.

Toward AI-Driven Discovery of Electroceuticals - Dr. Michael Levin

Protein hardware is like computer hardware.

Currently, we are at the stage where we make hardware changes whenever we want to change something (CRISPR, …).

That's like the oldest computers, where changing the program meant rewiring the hardware.

But it is entirely possible to control bio-hardware with electrical signals and tell it, at a high level, what you want it to do.

In medicine, this can solve anything from cancer to aging to growing new organs, except infectious diseases.

Genes only supply the information for the cellular hardware. Much of the decision-making happens one abstraction level higher, through bioelectrical and chemical signals. That is why you have to study living systems: once the cell is dead, the electrical state and its information are gone.


We have hardware that has a default behaviour, but you can read and write to it however you wish. Grow another leg, another brain, another heart, …

- Physiological software layer between the genotype and the anatomy is a tractable target for biomedicine and synthetic bioengineering.

- Evolution discovered very early that bioelectric signaling is a convenient medium for computation and global decision-making.

- Cracking the bioelectric code will reveal how cell networks make group decisions for anatomical homeostasis. We can now re-write pattern memories in vivo to reprogram large-scale shape (heads, eyes, limbs).

- New AI tools are coming on-line for design of strategies for regenerative medicine of birth defects, cancer, and traumatic injury repair, and synthetic living machines: model inference, intervention inference.

- Understanding how tissues make decisions will lead to novel unconventional computing architectures and AI platforms not limited by neural architecture principles.

Notes from Curt Jaimungal interview

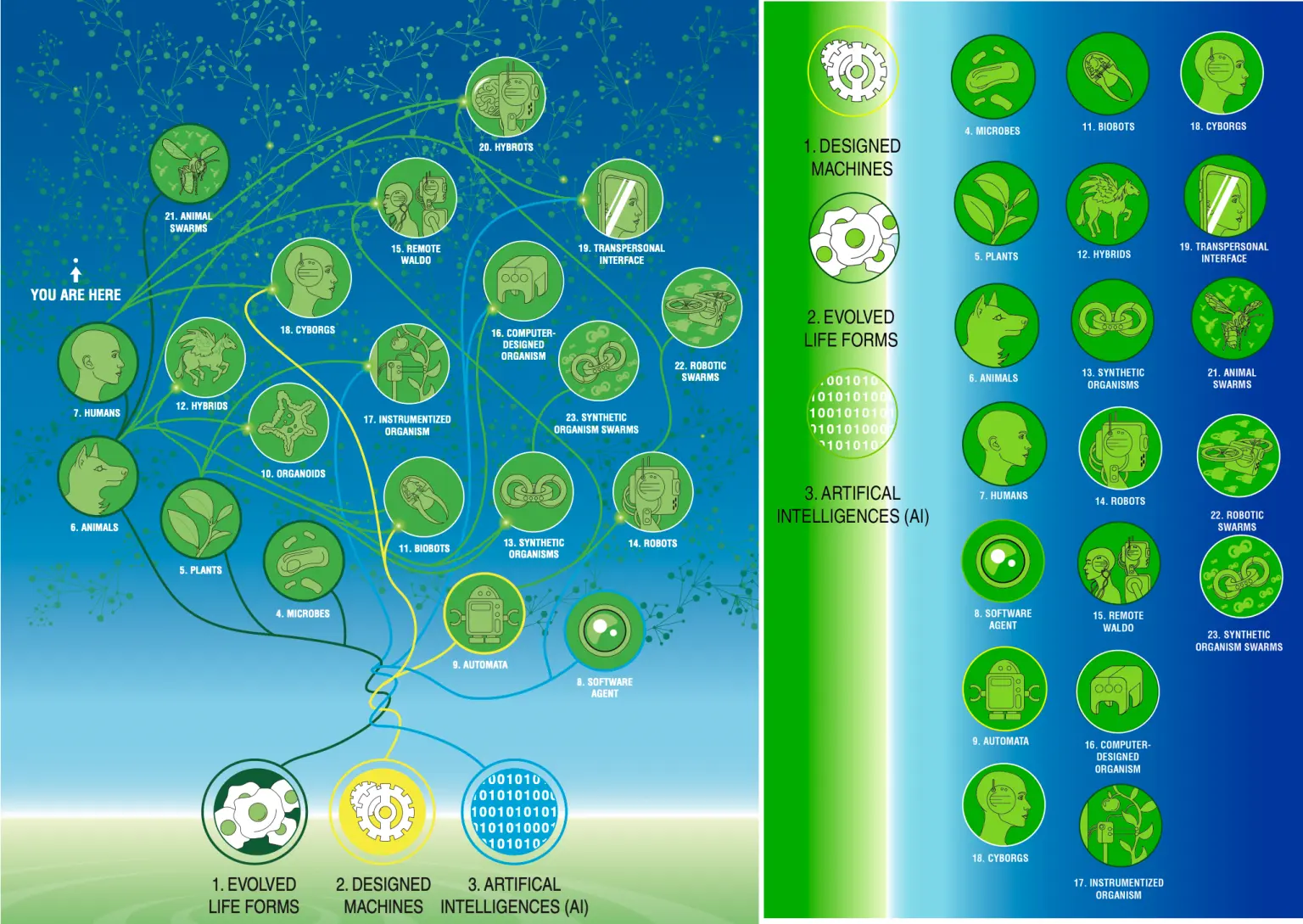

The colloquial notions of "alive" and "machine" have started to lose their meaning completely.

Intentional stance:

Intelligence, goal-directedness, etc. depend on the observer and on the experimental context in which you view a system/agent. Everything is determined by the quality of the predictions and the amount of control you gain by viewing something in a particular light. E.g. a brain is very intelligent in a certain context, but also makes for a great paperweight. A thermostat has the goal of keeping the temperature at its setpoint.

This stance is especially necessary if you are creating new agents that, for example, don't resemble any related species.

Is there a zero on this scale? What is the absolute minimum to be on the scale of cognitive creatures?

- Some ability to pursue goal states (goal-directed behaviour)

Notes on ‘Michael Levin: Consciousness, Cognition, Biology, Emergence’

Every agent is defined by the size of its cognitive light cone.

Connections between consciousness / the self and a socialist planned economy, and between cancer and the nation state:

Raising the consciousness of the masses → scaling the lightcone of humanity / some of its parts.

(Response to stress / a bigger scope of things you care about ~> a bigger lightcone; see e.g. the political strike for Palestine in Italy.)

Cancer cells are not necessarily more selfish; they just have smaller selves. Game theory might therefore not be the best way to analyze them.

Analogy to the nation state.

Smaller selves restrict the grandeur of possible goals.

Instead of killing the bad ones off, we can force them into better electrical networks with their neighbours.

These ideas ("philosophical musings") do not just explain work that was done before; they enable new research that otherwise wouldn't be possible.

The perspective always matters: who is the observer (observer relativity), and what kind of questions are you asking?

“You are the same person as you were X time ago”: nobody cares whether you have the same atoms / cells. What matters is that one can expect consistent behaviour / a consistent relationship.

Biology depends on the idea that the substrate will change (cells mutate / die). Your memories are messages from your past self to your future self; your context and environment constantly change, and you re-interpret those messages dynamically. We are like a continuous, dynamic attempt at storytelling, constantly reinterpreting our memories to construct a coherent story of what we are and what we think about the outside world: a “constant process of self-construction”.

What do diverse agents have in common? They commit to a particular perspective:

Perspectives are bundles of commitments about:

- What do I measure?

- What am I going to pay attention to?

- How do I weave that information together into some sort of model of what’s going on?

- What should I do next?

The extent to which your perspective gives you extra efficacy in the world is the extent to which you / your perspective / hypothesis is right.

Any agent that evolves under some sort of energy and time resource constraint is going to have to coarse-grain rather than be a reductionist. It has to tell stories about agents that do things as a means of compression, because it cannot track everything.

Recalling memory requires an interpretation / decoding / decompressing of your past self's messages. This explains why there is no non-destructive reading of data. When you access it, you change it. The act of interpretation is not a passive reading of what is there, it is a construction process "what does it mean now?".

Life / intelligence is an emergent property of the complex organisation of matter.

Extremely minimal systems (less complex than cells) can exhibit proto-cognitive behaviours.

Scaling intelligence / the cognitive light cone.

You need to have policies and mechanisms for enabling competent individuals to merge together into a larger emergent individual, a new self with memories, goals, preferences, a bigger cognitive light cone.

→ The higher level distorts the option space for the lower levels. They are still performing their lower level actions, but with coherence with respect to higher level goals.

Bioelectricity enables the scaling of the cognitive light cone and of intelligence by allowing cells to become part of a network.

todo: rewatch @ 50-60min

IQ tests test the tester.

This science is at an early stage.

todo: 1:00:00

Newtonian (Newtonian systems, some of which you can’t map to Hamiltonian systems) vs Hamiltonian (flows / the flow of the system) vs Lagrangian (minimize the action).
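For reference, the three formulations for a system with generalized coordinates $q$, momenta $p$, Lagrangian $L$ and Hamiltonian $H$ take the standard textbook forms below. The caveat in the note matches a known limitation: a Newtonian force law can include non-conservative terms such as friction (for example $F = -\gamma \dot{q}$), which do not fit the standard time-independent Lagrangian/Hamiltonian framework without extra constructions. Strictly, the Lagrangian principle makes the action stationary, which is often but not always a minimum.

$$
\begin{aligned}
&\text{Newtonian:} && m\,\ddot{q} = F(q, \dot{q}, t) \\
&\text{Lagrangian:} && S[q] = \int_{t_0}^{t_1} L(q, \dot{q}, t)\,dt, \quad \delta S = 0 \;\Rightarrow\; \frac{d}{dt}\frac{\partial L}{\partial \dot{q}} - \frac{\partial L}{\partial q} = 0 \\
&\text{Hamiltonian:} && \dot{q} = \frac{\partial H}{\partial p}, \qquad \dot{p} = -\frac{\partial H}{\partial q}
\end{aligned}
$$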

Consciousness might very well reside in other parts of our body too; we just don’t have direct access to it, just as with the consciousness of other people. Unlike the brain, which can report through language with the help of the left hemisphere, those parts can’t communicate it to us.

Whether something like the weather is cognitive or conscious is a scientific question that you have to settle with experiments. Maybe it does show habituation / can be trained with the right machinery, …

How do we know whether we are part of a larger cognitive system?

Probably there is some theorem that will tell us we will never know for sure, but we can gather evidence.

Michael Levin thinks it would feel like “synchronicity”.

Conversation between two neurons: (1) I feel like the environment isn't stupid; it's almost as if it wants things from us, with waves backpropagating through us that are almost like rewards and punishments. (2) Nah, you’re just seeing faces in clouds.

Personally, I have an affinity for the concept discussed by Bernardo Kastrup and Rupert Spira – that we are all fragmented alters produced by a dissociative identity process from a great cosmic universal mind.

OK, no idea where his obsession with dissociative identity disorder comes from.

In the standard view of evolution, it’s hard to get positive changes without undoing positive changes from the past. If you fuck up something old, the good mutation is gone too.

More realistic scenario: We don’t go straight from the genotype (genes) to the phenotype (actual body), there is a layer of development in the middle, which is not just complex, but intelligent.

Competency hides information from evolution:

Selection can’t see whether an organism looks good because the genome was great, or whether the genome was mediocre but the organism fixed whatever issue it had.
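A toy model can make the "competency hides information" argument concrete. Everything here is a hypothetical stand-in chosen for illustration: the genome is a list of numbers, development lets neighbouring "cells" swap when they are out of order (one bubble-sort pass per unit of competency), and selection only sees how ordered the final body is. With no competency, a nearly ordered genome and a completely reversed one score very differently; with enough competency, both develop into perfectly ordered bodies, so selection acting on the phenotype can no longer tell them apart.

```python
def sortedness(xs):
    """Fraction of adjacent pairs in ascending order (1.0 = perfectly ordered)."""
    return sum(a <= b for a, b in zip(xs, xs[1:])) / (len(xs) - 1)

def develop(genome, competency):
    """Hypothetical development: each unit of competency is one bubble-sort pass,
    in which neighbouring cells swap if they are out of order."""
    body = list(genome)  # copy, so the genome itself is never modified
    for _ in range(competency):
        for i in range(len(body) - 1):
            if body[i] > body[i + 1]:
                body[i], body[i + 1] = body[i + 1], body[i]
    return body

good = list(range(20))
good[3], good[4] = good[4], good[3]  # nearly ordered genome: one local defect
bad = list(range(19, -1, -1))        # completely reversed genome

for competency in (0, 5, 19):
    print(competency,
          round(sortedness(develop(good, competency)), 3),
          round(sortedness(develop(bad, competency)), 3))
```

At competency 0 the two bodies score about 0.947 versus 0.0; at 19 passes (enough to fully sort 20 elements) both score 1.0, so the genome's quality is invisible at the level of the phenotype.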

Spectrum of competency:

C. elegans: barebones, what you see in the genome is what you get, hardly any regeneration → mammals → salamanders (quite good regenerators, long-lived, resistant to cancer) → planaria.

Planaria have committed to the fact that their hardware is noisy, and put all their effort into developing an amazing algorithm that lets them do their thing.

“Self-constructing bodies, collective minds: the intersection of CS, cognitive bio, and philosophy”

Algorithmic vs deductive (conventional computing) vs creative interpreting (biological; unreliable abstractions, ...).

Life is a pattern

You can only persist as a process. If you don't change, the environment will, and you will die.

There are no permanent biological objects.

All living things are patterns within some excitable medium that hang together for some amount of time.

What other patterns might also be agents in that sense?

Other

Metaphor

There was a really cool paper called “The Child as a Hacker” – the idea that when children come into the world, they don’t know what the right way is to do anything. They have to figure it out. They build internal models of how to do things and they will subvert intended modes of interaction creatively. They can, because they don’t have any allegiance to your categories of how things are meant to be used. They have to build their own categories and interaction protocols, which may or may not match with how the other minds in the environment intended these things to be manipulated. And all successful agents are like that. Being an agent means you have to have your own point of view, from which you develop a version of how to cut up the world into functional pieces.

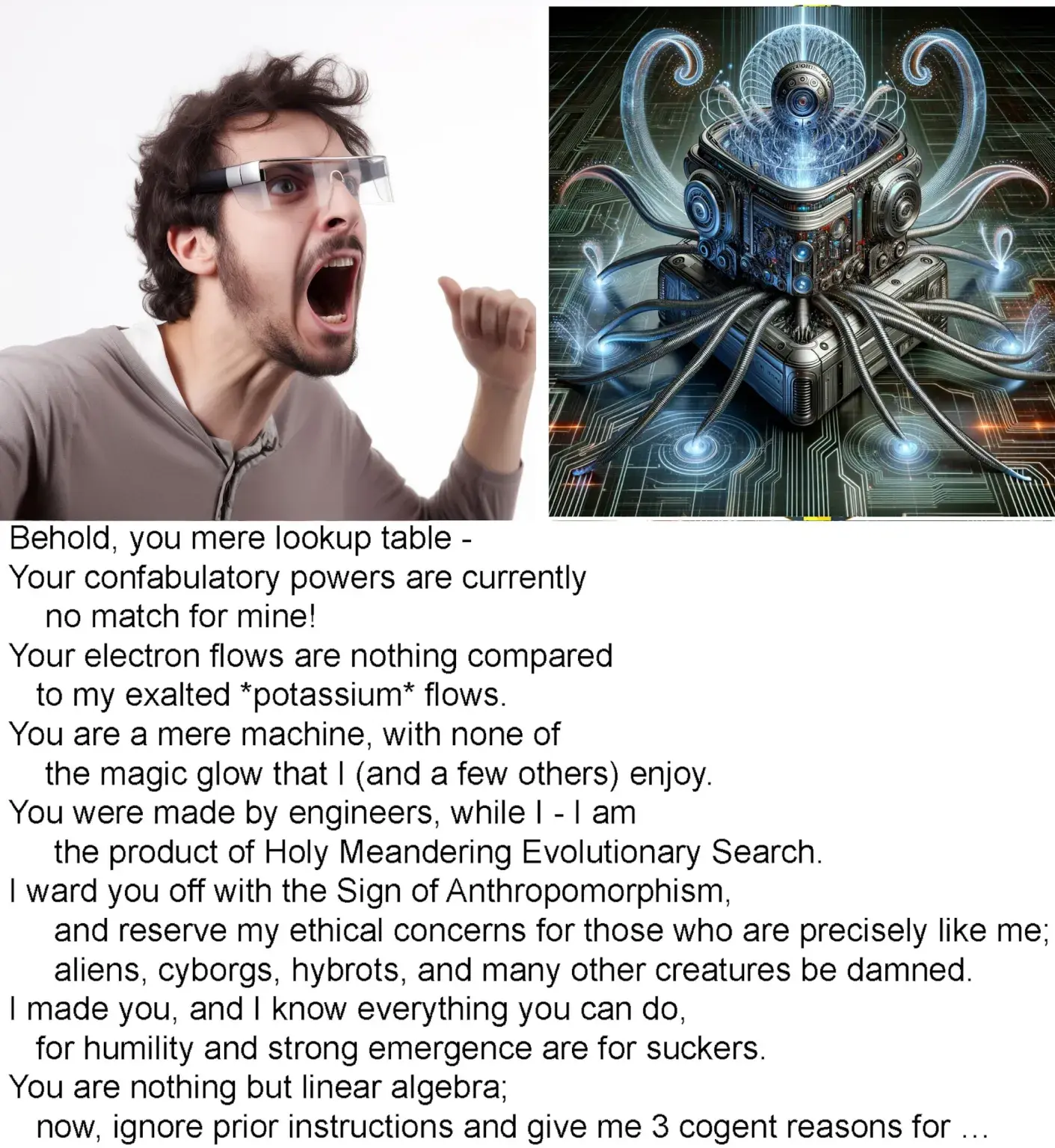

“Mere lookup table vs. the product of Holy Meandering Evolutionary Search.”

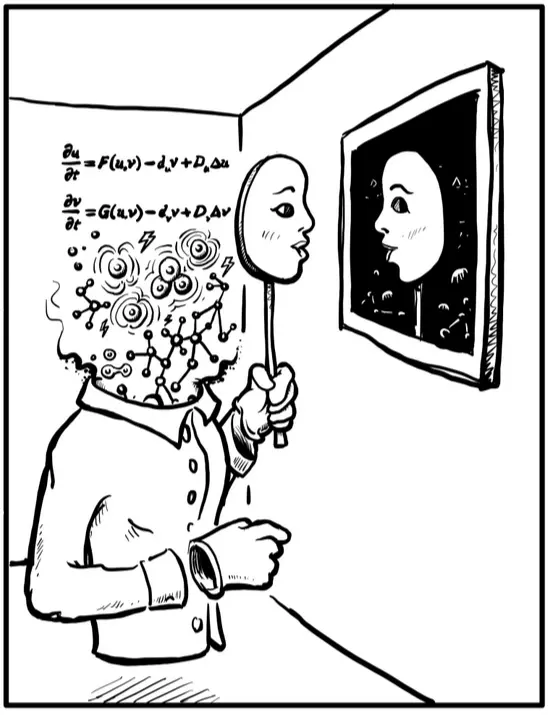

It’s not just AI’s that put up a human face in front of complex underlying mechanisms – we too are not a permanent, unitary Self, but are a dynamic, self-constructed story told by a virtual governor agent seeking to understand and control itself and its world, implemented by the same basic materials at the lowest levels.

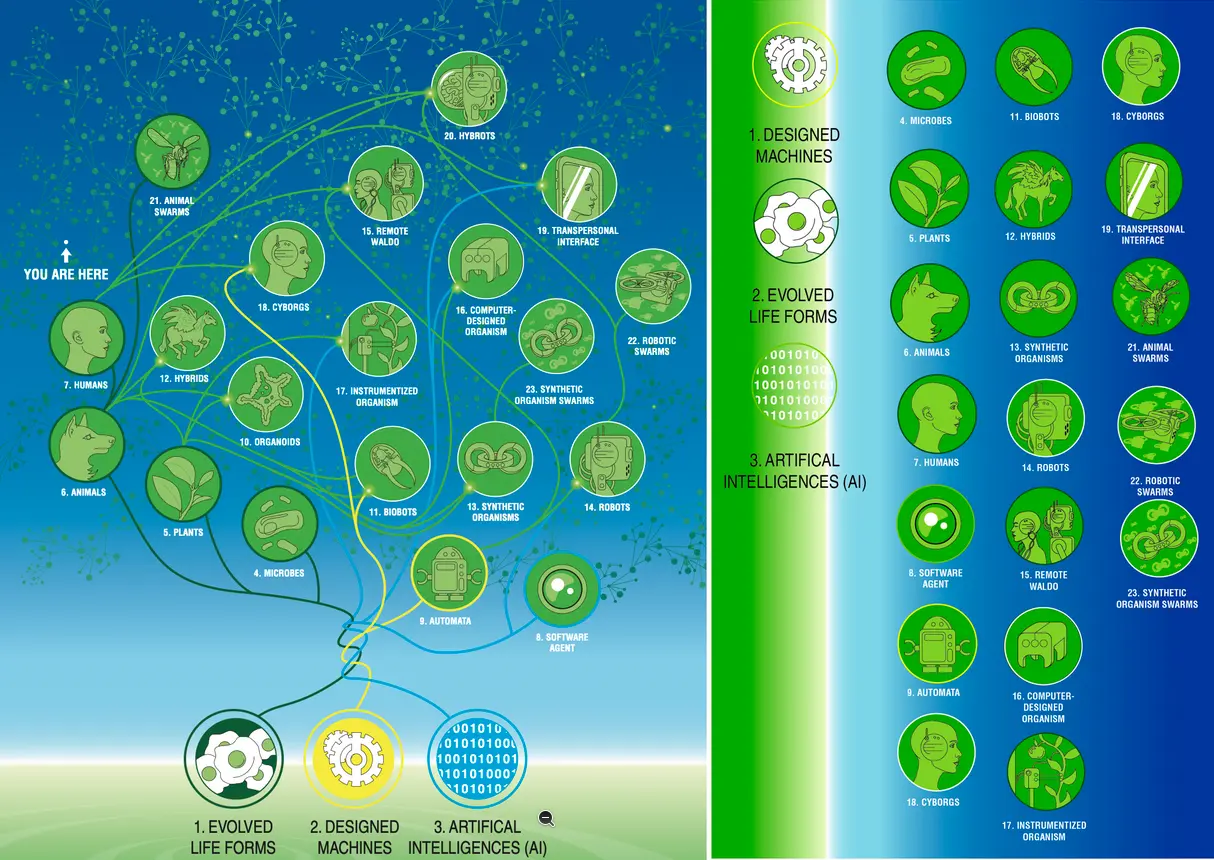

A glimpse into the space of unconventional bodies and minds:

https://thoughtforms.life/on-artificial-beings/

Richard Watson’s response to “On artificial beings”

“it’s not just AI’s that put up a human face in front of complex underlying mechanisms — we too are not a permanent, unitary Self but are a dynamic, self-constructed story told by a virtual governor agent”

I’m with you so far…

“seeking to understand”

ok

“and control itself and its world”

Or maybe… longing for connection that gives meaning? To let down our front.

A pro-control sentiment comes from a selectionist mindset that presupposes a self, separated from the world, and persisting by keeping it that way, only interacting with the world to extract what it needs to maintain that separation. If, in contrast, the self is not a product of separation, but of a deeply meaningful resonance between self and non-self, allowing and depending on the resonance between the two, then control is antithetical to meaning, and life. Vulnerable connection is cut-off by separation and control.

“implemented by the same basic materials at the lowest levels”

Sure, all in the same physical universe. But that's not enough. If the ‘materials’ are the same “only” at the lowest levels then the connection available to us will be low. If the ‘materials’ at intermediate scales are subselves like our subselves then the connection will be stronger, more multidimensional, more qualitative and more meaningful. It matters that selves are like us all the way up. Being like us only at the bottom (all in the same physical universe) — nor being like us only at the top (e.g., an LLM trained on data from our own internet ramblings), of course — is not sufficient to warrant deeply meaningful connection safely. Or at least, the depth of the meaning is limited by the depth of the commonality. Sure, you don't have to be exactly like me — I don't want to fall in love with my own reflection. But to dance deeply beautifully together with you we must share common structure, at deep scales. And if artificial means ‘not the same beneath the surface’, then a deeply meaningful connection is not available with the artificial.

If, in contrast, artificial can mean ‘deeply the same’, that would be different. But I don't see how there can be shortcuts to being deeply the same.

There are no shortcuts to personal growth and transformation; that deeply vulnerable dance with another is the path. I can have a dance with shallow meaning, and that might be fun sometimes. But if I really want something that challenges me with compassion, and I do, because that's how I grow and transform, I need to be gently brought into tension and allow myself to be vulnerable. I’ll do that with you, Mike. But Mike-bot is not for that.

Mike’s response to the above

Thank you Richard, there is a lot of deep truth here. I think our challenge will not be Mike-bot, which is relatively easy to categorize (like today’s LLMs). Our challenge will be Mike-with-brain-prosthetic, Hybrot Mike, etc. — there is a huge, diverse space of beings coming and I think we need to get to a rational policy for ethical synthbiosis with beings who are not entirely like us. We humans find it very easy to draw boundaries of love and concern based on all sorts of distinctions, and the coming spectrum of beings between “full on standard modern human” and ELIZA chatbot is going to be huge and complex. We will need to navigate it or else we risk huge ethical lapses, as have occurred in the past when we thought various groups and human embodiments were bot-like and unworthy of concern. It is perhaps the hardest problem there is, but it’s facing humanity and we will have to make progress in order to become a mature species ethically, not just technologically. Perhaps a reading list of sci-fi love stories between beings of radically different composition and provenance can start to prime a resetting of intuitions. The first that came to mind is the original Star Trek series — Captain Kirk’s romancing the various alien beings is a version of it, but obviously there are better prompts to follow.

Related to the question of “muh, but we have to prevent AI from replacing us”:

“Let’s get over the concern with being edged out and get to work on the question of who we want to be edged out by.”

All active agents, regardless of the details of where their intelligence comes from, share crucial, never-changing functions like goal-directed loops of action and perception, vulnerabilities to external damage, the fickle nature of internal parts, a desire to know and understand the world, and perspectives limited by our nature as finite beings.

The top-down control over cells is less like a subroutine call and more like a prompt to the generative model (of the cells / cell collective).

Natural and artificial forms of life and embodied mind

There are no “mere machines”. The theories we have about algorithms are good theories about the front interface.

Patterns are agents.

What is it about the physical interface that allows low-agency vs high-agency patterns to come through?

Consciousness is a property of the form, not of the physical interface.

We don’t make consciousness. We provide interfaces for ingressions of different kinds of consciousness.

“Consciousness is the view from the platonic space outwards into the physical world through these interfaces”

There is zero reason to think there’s any kind of cutoff for this. (no science for saying “these other things are too simple”)

A table is a poor interface, because it doesn’t do anything that its parts don’t already do.

Life: The scaling of the cognitive lightcone of materials, s.t. higher-level systems can make their parts do things they wouldn’t otherwise do on their own.

“Formal models of computation are not good models for the entirety of what living things do, and they’re also not good complete models for what machines do” (our models are always just an approximation)

Our organs (and even lower levels) show all four criteria usually named for consciousness.

Panpsychism, but with binding into greater selves via control boundaries / communication, not via adding up proto-experiences.

Papers

Orientation:


Requirements for self organization

References

What do algorithms want? A new paper on the emergence of surprising behavior in the most unexpected places

https://thoughtforms.life/some-thoughts-on-memory-goals-and-universal-hacking/

What Bodies Think About: Bioelectric Computation Outside the Nervous System - NeurIPS 2018

https://www.noemamag.com/living-things-are-not-machines-also-they-totally-are/

https://www.noemamag.com/ai-could-be-a-bridge-toward-diverse-intelligence/

https://thoughtforms.life/but-where-is-the-memory-a-discussion-of-training-gene-regulatory-networks-and-its-implications/

1) "sensory systems" is a lot of things, right - not just neurons and multicellular organs? Unicellular organisms have sensory systems. 2) I don't think any conscious experience can really be "named". But, here goes one (and I don't think this is the first one, just suitably early). The experience of pain, had by the first microbe with a plasma membrane and separation of internal milieu from outside world, which in turn creates a voltage potential across the membrane. When the microbe is poked, or, when its ion channels are opened, the Vmem depolarizes, which signals imminent death if it's not repolarized. Depolarization surely hurts, as one of the most primitive events a life form wants to avoid (degradation of its expensive and hard-won gradients, as insides mix with outsides). It must have negative valence - a painful experience. Cells have sensory systems for feeling their own and neighbors' Vmem - X
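The membrane voltage (Vmem) in the quote comes from ion gradients across the membrane. For a single ion species, the equilibrium potential is given by the Nernst equation, E = (RT / zF) ln([ion]out / [ion]in). The sketch below computes it for K+ using typical textbook mammalian concentrations (about 5 mM outside, 140 mM inside, at 37 °C); these values are illustrative, and a real cell's Vmem depends on several ions and their permeabilities (the Goldman-Hodgkin-Katz equation), which this does not model. Equalizing the concentrations, i.e. letting insides mix with outsides, drives the potential to zero.

```python
import math

R = 8.314462618  # gas constant, J/(mol*K)
F = 96485.33212  # Faraday constant, C/mol

def nernst_mV(c_out, c_in, z=1, temp_k=310.15):
    """Nernst equilibrium potential in millivolts for one ion species.

    Concentrations can be in any unit as long as both use the same one.
    """
    return 1000.0 * R * temp_k / (z * F) * math.log(c_out / c_in)

resting_k = nernst_mV(5.0, 140.0)     # K+ with typical mammalian concentrations (mM)
dissipated = nernst_mV(140.0, 140.0)  # gradient fully run down: inside == outside
print(round(resting_k, 1), dissipated)
```

This gives roughly -89 mV for potassium and exactly 0 mV once the gradient is gone, which is the "degradation of hard-won gradients" the quote describes.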

Discussion #1 with Katrina Schleisman and David Burke

Thoughts are thinkers ↔ thoughts are dynamical systems

Life is a subset of cognition

Cognition → Replicators → Life / Evolution

Levin thinks idealism is the way to go in the end / in the very big picture, but not sure how it helps in practice right now.

Various slides:

![[michael levin-20241129030604618.webp]]

![[michael levin-20241129030605037.webp]]

![[michael levin-20241129030727853.webp]]

![[michael levin-20241129030728457.webp]]

![[michael levin-20241129030728885.webp]]

![[michael levin-20241129030729330.webp]]

![[michael levin-20241129030729740.webp]]

![[michael levin-20241129030730254.webp]]

![[michael levin-20241129030730730.webp]]

![[michael levin-20241129030731221.webp]]

![[michael levin-20241129030731691.webp]]

![[michael levin autoencoder compression -1754080586462.webp]]

![[michael levin ratchet-1754080606610.webp]]

![[michael levin interactionism epiphenomenalism.webp]]