year: 2025/10

paper: https://arxiv.org/pdf/2510.17558

website: Yannic Kilcher explains the motivation well

code:

connections: transformer, VAE, teacher-forcing, causal attention, Meta, François Fleuret

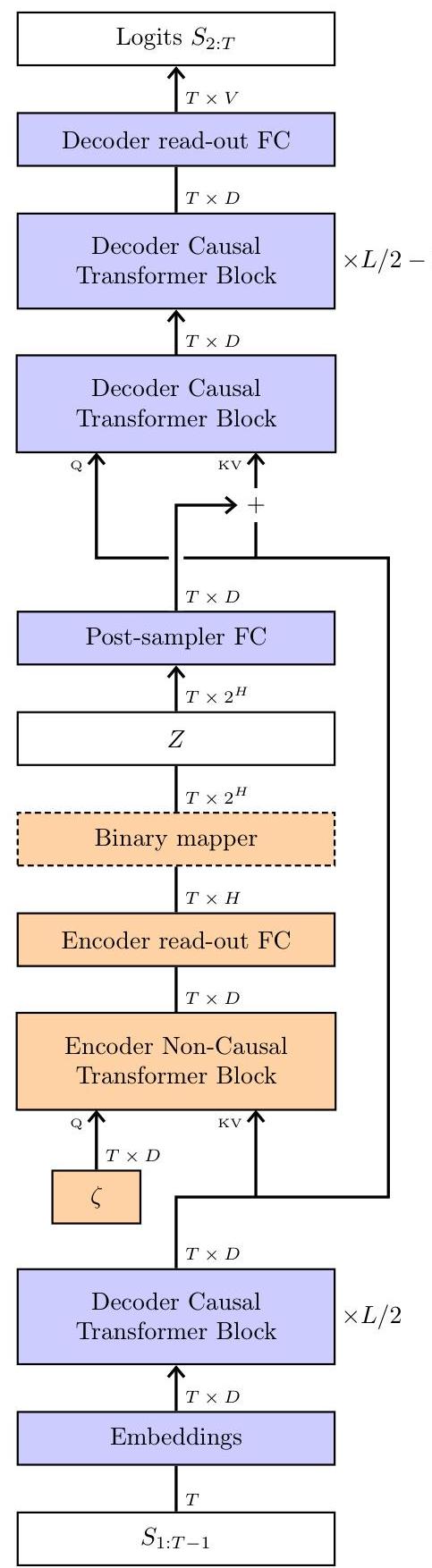

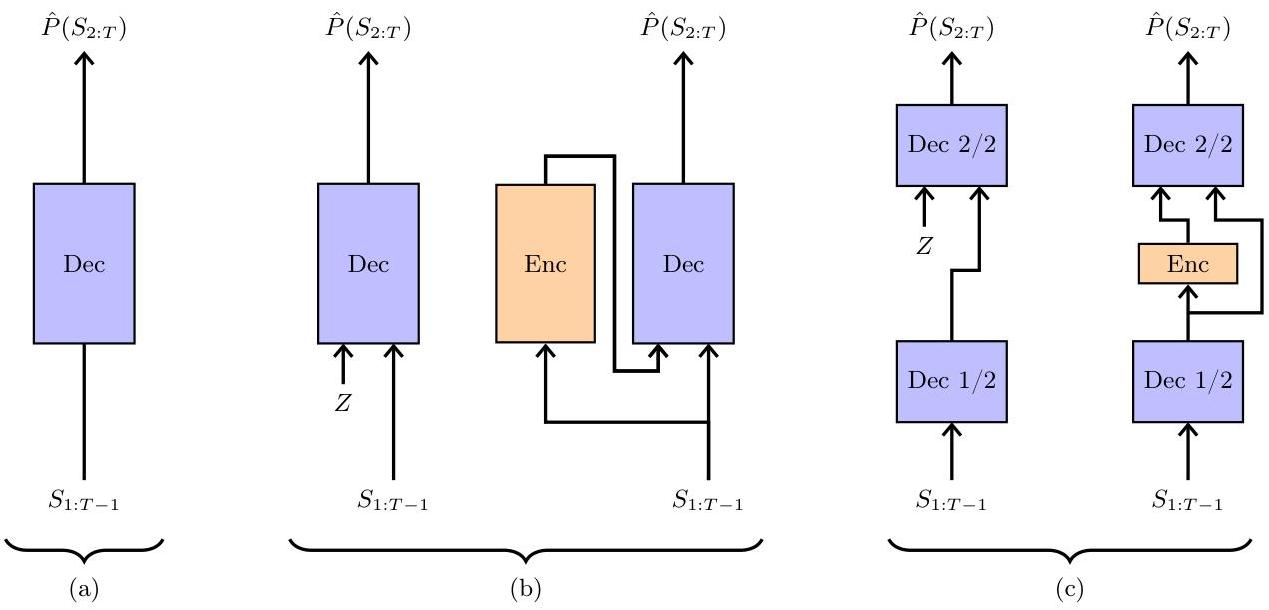

(a) Regular decoder transformer, (b) VAE, (c) this paper.

Very high-level overview:

The paper argues that a purely autoregressive model has to keep re-inferring global structure from partial tokens, which can be brittle and complex. The prediction at step $t$ can only condition on the prefix $x_{<t}$, so any global property (topic, style, strategy) has to be re-guessed from an incomplete, often ambiguous partial sequence, and re-established at every step. Without a persistent latent plan, the model keeps updating a fragile, implicit “global hypothesis” token by token, which is noisy early on and prone to drift and compounding errors.

Solution? → You (efficiently) condition on a “global idea” which helps the decoder generate fine-grained tokens.
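A simplified way to write the contrast between panels (a) and (c), treating $Z$ as a single latent for the whole sequence (a sketch for intuition, not the paper's exact per-token formulation):

$$
\text{(a) plain decoder:}\quad P(x) = \prod_{t=1}^{T} P(x_t \mid x_{<t})
$$

$$
\text{(c) with a latent:}\quad P(x) = \sum_{z} P(z) \prod_{t=1}^{T} P(x_t \mid x_{<t}, z)
$$

In (c) the global choice is made once through $z$ and every step conditions on it, instead of each step re-deriving it from $x_{<t}$.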

When you, as a human, decide to use dynamic programming or a greedy algorithm to solve a problem, you are conditioning on a global idea based on your prior knowledge of the problem domain plus the current context.

This is the role of the latent $Z$ here, generated (during training) by a non-causal encoder.
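The key difference between the decoder and the encoder that produces $Z$ is the attention mask: the decoder is causal, while the encoder can look at the whole sequence. A minimal NumPy sketch of both masks (single head, no projections; `attention_weights` and the uniform toy scores are illustrative, not from the paper):

```python
import numpy as np

def attention_weights(scores: np.ndarray, causal: bool) -> np.ndarray:
    """Row-wise softmax over keys; if causal, position t only attends to positions <= t."""
    T = scores.shape[-1]
    if causal:
        # Entries above the diagonal are future positions: mask them out.
        mask = np.triu(np.ones((T, T), dtype=bool), k=1)
        scores = np.where(mask, -np.inf, scores)
    # Numerically stable softmax; exp(-inf) = 0 for masked entries.
    scores = scores - scores.max(axis=-1, keepdims=True)
    w = np.exp(scores)
    return w / w.sum(axis=-1, keepdims=True)

T = 4
scores = np.zeros((T, T))  # equal scores, so only the mask shapes the weights
causal_w = attention_weights(scores, causal=True)   # decoder-style
full_w = attention_weights(scores, causal=False)    # encoder-style (non-causal)
# In causal_w, row t is uniform over positions 0..t; every row of full_w covers all T positions.
print(np.round(causal_w, 2))
print(np.round(full_w, 2))
```

Because the encoder sees future tokens, whatever it writes into $Z$ can summarize the whole sequence, which is exactly why its capacity has to be limited.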

$Z$ is like a prior on which questions to ask globally, rather than at the token-by-token level.

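For similar reasons, they also limit the information that can flow through the encoder to a fixed budget of bits per token (a cap on the per-token KL divergence). This further forces the model to learn coarse-grained structure and, crucially, prevents it from encoding the answer tokens themselves: under teacher forcing the encoder sees the full sequence, so if the budget is too high, $Z$ can simply leak the tokens to be predicted and the training cross-entropy collapses.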
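A minimal sketch of why a per-token bit budget caps what $Z$ can carry, assuming a categorical encoder distribution over $n$ codes, a uniform prior, and a hinge-style penalty on the KL (in the spirit of "free bits"; the exact loss form and budget values used in the paper may differ, and the function names are hypothetical). An encoder that commits fully to one of $n$ codes spends $\log_2 n$ bits per token:

```python
import math

def kl_to_uniform_bits(q):
    """KL(q || uniform) in bits, for a categorical q over len(q) codes."""
    n = len(q)
    # Terms with q_i = 0 contribute nothing (0 * log 0 := 0).
    return sum(p * math.log2(p * n) for p in q if p > 0)

def budget_penalty(kl_bits, budget_bits):
    """Hinge: only information above the per-token budget is penalized."""
    return max(0.0, kl_bits - budget_bits)

n_codes = 16                                  # 16 codes = 4 bits
one_hot = [1.0] + [0.0] * (n_codes - 1)       # encoder fully commits to one code
two_way = [0.5, 0.5] + [0.0] * (n_codes - 2)  # encoder hedges between two codes
uniform = [1.0 / n_codes] * n_codes           # encoder transmits nothing

for name, q in [("one_hot", one_hot), ("two_way", two_way), ("uniform", uniform)]:
    kl = kl_to_uniform_bits(q)
    print(name, kl, budget_penalty(kl, budget_bits=0.5))
```

With 16 codes, a one-hot encoder output costs 4 bits per token and, under a 0.5-bit budget, 3.5 of those bits are penalized, while a uniform output is free. A large budget removes this pressure and lets the encoder copy the targets into $Z$.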

$Z$ is one-hot (one discrete code per token).

It’s like a gating / selector for different sub-routines.
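To make the "selector" intuition concrete: multiplying a one-hot vector by a learned matrix is just a row lookup, so each code picks out one vector that shifts the decoder's hidden state. A hypothetical sketch, assuming $Z$ is injected through a linear map whose output is added to a hidden state (`W`, `inject`, and the sizes are illustrative, not the paper's architecture):

```python
import numpy as np

rng = np.random.default_rng(0)
n_codes, d_model = 8, 4
# Hypothetical learned table: one d_model-dim "sub-routine" vector per code.
W = rng.normal(size=(n_codes, d_model))

def inject(hidden: np.ndarray, code: int) -> np.ndarray:
    """Add the vector selected by a one-hot code to a hidden state."""
    one_hot = np.zeros(n_codes)
    one_hot[code] = 1.0
    # one_hot @ W equals W[code]: the one-hot code acts as a selector.
    return hidden + one_hot @ W

h = np.zeros(d_model)
out = inject(h, code=3)  # equals W[3], since h is zero
```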

The prior over $Z$ is uniform at inference in the fully free version.

But it can also be tailored to the prompt once, during prefill, to choose from the possible values of $Z$.
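A sketch of the two inference modes, assuming one code per generated token: in the fully free version each code is drawn from the uniform prior; in the prompt-tailored variant a distribution over codes is computed once at prefill and then reused. How that prompt-dependent distribution is obtained is left open here; `prompt_prior` and both function names are hypothetical stand-ins:

```python
import numpy as np

def sample_codes_uniform(n_tokens, n_codes, rng):
    """Fully free setting: each token's code is drawn from the uniform prior."""
    return rng.integers(0, n_codes, size=n_tokens)

def sample_codes_tailored(n_tokens, prompt_prior, rng):
    """Hypothetical prompt-tailored setting: prompt_prior (a distribution over
    codes) is computed once during prefill and reused for every new token."""
    prompt_prior = np.asarray(prompt_prior, dtype=float)
    return rng.choice(len(prompt_prior), size=n_tokens, p=prompt_prior)

rng = np.random.default_rng(0)
free_codes = sample_codes_uniform(n_tokens=10, n_codes=8, rng=rng)
# Hypothetical prefill result: all probability mass on code 2 of 4.
tailored_codes = sample_codes_tailored(10, [0.0, 0.0, 1.0, 0.0], rng)
```

The design point is that the prompt-dependent work happens once; generation afterwards costs the same as in the free version.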

Empirically, the resulting inductive bias helps on code, math, and multiple-choice (MCQ) tasks, but is worse on some question-answering tasks.