year: 2020

paper: https://arxiv.org/pdf/1703.10593.pdf

website: https://junyanz.github.io/CycleGAN/

code: https://github.com/junyanz/pytorch-CycleGAN-and-pix2pix

connections: GAN, style-transfer, cycle-consistency

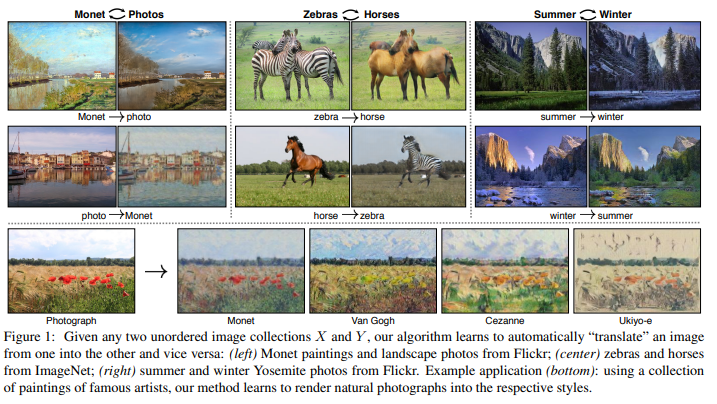

The abstract and introduction are worth reading (especially for Monet enjoyers ;)).

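The model learns to translate images between two domains (e.g. photos and Monet paintings) from unpaired training samples, i.e. without having the same scene available in both domains.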

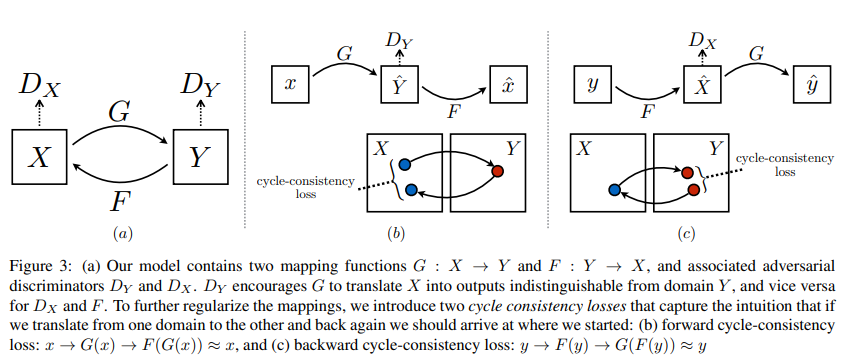

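Learning a mapping $G: X \to Y$ (here $X$: photos, $Y$: Monet paintings) such that the output $\hat{y} = G(x)$, $x \in X$, is indistinguishable from images $y \in Y$ by an adversary trained to classify $\hat{y}$ apart from $y$.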

Put differently, the adversary has to tell apart pictures that the generator has turned into “Monets” from real Monets.
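For reference, this is the standard adversarial objective as the paper writes it for a generator $G: X \to Y$ and its discriminator $D_Y$; $G$ tries to minimise it while $D_Y$ tries to maximise it:

$$
\mathcal{L}_{\text{GAN}}(G, D_Y, X, Y) = \mathbb{E}_{y \sim p_{\text{data}}(y)}\left[\log D_Y(y)\right] + \mathbb{E}_{x \sim p_{\text{data}}(x)}\left[\log\left(1 - D_Y(G(x))\right)\right]
$$

$D_Y$ pushes its output towards 1 for real Monets and towards 0 for generated ones, while $G$ tries to make $D_Y(G(x))$ large. In practice the paper replaces this log-likelihood form with a least-squares loss for more stable training.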

Takeaways

Problem with the objective stated above:

The optimal generator $G$ only has to produce outputs $\hat{y}$ that are identically distributed to $Y$. Nothing forces an individual input $x$ and its output $\hat{y} = G(x)$ to be paired up in a meaningful way: infinitely many mappings $G$ induce the same distribution over $\hat{y}$ (e.g. one that turns each photo into some unrelated, but perfectly Monet-looking, painting).
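A toy illustration of this problem, not a GAN: "images" are replaced by strings, and the two generators (`g_meaningful`, `g_shuffled`) and scene names are hypothetical. Both produce exactly the distribution of real paintings, but only one pairs each photo with the painting of the same scene.

```python
from collections import Counter

# Hypothetical toy domains: four "photos" and four real "Monet paintings".
photos = ["beach", "bridge", "garden", "harbour"]
monets = ["beach_monet", "bridge_monet", "garden_monet", "harbour_monet"]


def g_meaningful(photo: str) -> str:
    # Maps each photo to the painting of the same scene.
    return photo + "_monet"


# Maps each photo to the painting of a *different* scene (a rotation of the list).
_rotation = dict(zip(photos, monets[1:] + monets[:1]))


def g_shuffled(photo: str) -> str:
    return _rotation[photo]


def output_distribution(g) -> Counter:
    # Empirical distribution of generated images over the whole photo domain.
    return Counter(g(p) for p in photos)


# Both generators match the distribution of real paintings exactly...
same_dist = output_distribution(g_meaningful) == output_distribution(g_shuffled) == Counter(monets)
# ...but the shuffled one never pairs a photo with its own scene.
correct_pairs = sum(g_shuffled(p) == g_meaningful(p) for p in photos)
print(same_dist, correct_pairs)  # True 0
```

A discriminator that only judges whether an output looks like a real painting sees the same set of outputs from both generators, so the adversarial loss alone has no reason to prefer `g_meaningful`.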

We want to change the style while preserving the overall structure of the input.

The cycle-consistency loss comes to the rescue:
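In the paper, a second generator $F: Y \to X$ is trained alongside $G$, and the cycle-consistency loss asks both round trips to land back on the starting image, measured with the L1 norm:

$$
\mathcal{L}_{\text{cyc}}(G, F) = \mathbb{E}_{x \sim p_{\text{data}}(x)}\left[\lVert F(G(x)) - x \rVert_1\right] + \mathbb{E}_{y \sim p_{\text{data}}(y)}\left[\lVert G(F(y)) - y \rVert_1\right]
$$

The full objective adds this term, weighted by $\lambda$ ($\lambda = 10$ in the paper's experiments), to the adversarial losses of both $G$ and $F$.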

Cycle Consistency

The idea of using transitivity as a way to regularize structured data has a long history. In visual tracking, enforcing simple forward-backward consistency has been a standard trick for decades. In the language domain, verifying and improving translations via “back translation and reconciliation” is a technique used by human translators.
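Forward-backward consistency is easy to state in code. Below is a minimal pure-Python sketch of an L1 cycle-consistency loss, assuming images are flattened lists of floats and expectations are replaced by averages over a small batch. The mappings `G`, `F_inverse` and `F_collapse` are hypothetical toy functions, not the paper's neural generators.

```python
from typing import Callable, List

Vector = List[float]
Mapping = Callable[[Vector], Vector]


def l1(a: Vector, b: Vector) -> float:
    # L1 distance between two equally sized "images" (flattened pixel lists).
    return sum(abs(u - v) for u, v in zip(a, b))


def cycle_consistency_loss(G: Mapping, F: Mapping, xs: List[Vector], ys: List[Vector]) -> float:
    # Forward cycle: x -> G(x) -> F(G(x)) should return to x.
    forward = sum(l1(F(G(x)), x) for x in xs) / len(xs)
    # Backward cycle: y -> F(y) -> G(F(y)) should return to y.
    backward = sum(l1(G(F(y)), y) for y in ys) / len(ys)
    return forward + backward


# Hypothetical toy mappings standing in for the two generators.
def G(x: Vector) -> Vector:
    return [2 * v for v in x]  # doubles every "pixel"


def F_inverse(y: Vector) -> Vector:
    return [v / 2 for v in y]  # exact inverse of G


def F_collapse(y: Vector) -> Vector:
    return [0.0 for _ in y]  # ignores its input entirely


xs = [[1.0, -2.0], [0.5, 0.5]]
ys = [[2.0, 2.0]]
print(cycle_consistency_loss(G, F_inverse, xs, ys))   # 0.0
print(cycle_consistency_loss(G, F_collapse, xs, ys))  # 6.0
```

The inverse pair gets zero loss, while an `F` that throws away its input is penalised by how far each round trip lands from its start. A zero cycle loss alone does not make a mapping meaningful (any invertible pair passes), and in the paper it is always combined with the adversarial losses.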