Compositional pattern-producing network

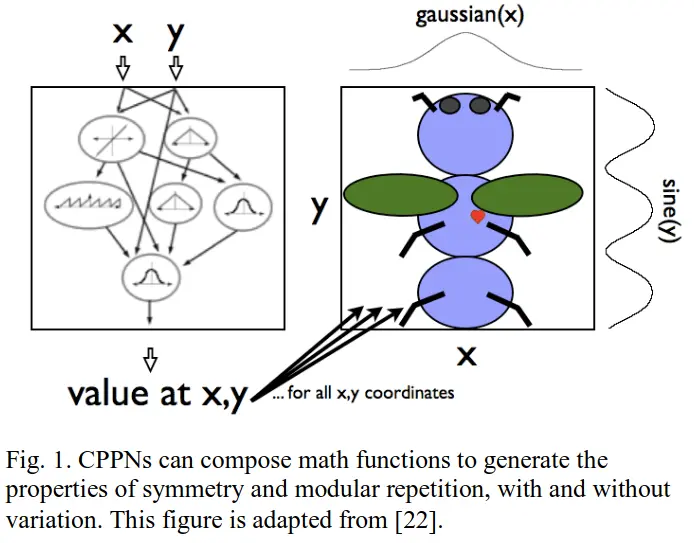

CPPNs are a type of neural network that takes coordinates (spatial, temporal, …) as input and outputs the value at that location, e.g. the intensity or color of a pixel.
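A minimal sketch of the idea, assuming a hand-picked network with two hidden nodes. The inputs are x, y and the distance d from the center (a commonly used extra input, assumed here). Each hidden node applies a different activation function, and the output is read as a grayscale intensity. The function names, topology and weights are hypothetical.

```python
import math


def gaussian(z: float) -> float:
    """Bell-shaped activation, symmetric around z = 0."""
    return math.exp(-z * z)


def cppn(x: float, y: float) -> float:
    """Map a coordinate (x, y) to an intensity in [0, 1].

    The topology and weights below are hypothetical, chosen by hand.
    """
    d = math.hypot(x, y)                  # extra input: distance from the center
    h1 = math.sin(3.0 * x + 1.5 * y)      # periodic node -> stripes
    h2 = gaussian(2.0 * d)                # radial node -> a blob at the center
    out = math.tanh(1.2 * h1 + 2.0 * h2)  # output node combines both, in (-1, 1)
    return 0.5 * (out + 1.0)              # rescale to [0, 1] for a grayscale pixel


# Querying the network at one coordinate yields one pixel value.
print(round(cppn(0.0, 0.0), 3))  # 0.982
```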

They were an early form of generative AI, able to generate patterns of symmetry and modular repetition, as often observed in nature.

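In fact, they bias evolution toward parts of the fitness landscape whose phenotypes have regular structure. The mechanism is “compositional coordinate transforms”: each node applies a function (e.g. Gaussian, sine, sigmoid) to a weighted sum of its inputs, so stacking nodes composes transformations of the coordinate frame, and a regularity such as symmetry or repetition introduced by one node carries through to every node downstream of it.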
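To illustrate how composing coordinate transforms produces regularities, here is a hypothetical hand-built pattern (not an evolved network): a Gaussian of x makes the output mirror-symmetric about x = 0, and a sine of y makes it repeat along y. The output node only sees these transformed coordinates, so it inherits both properties.

```python
import math


def gaussian(z: float) -> float:
    return math.exp(-z * z)


def pattern(x: float, y: float) -> float:
    sym = gaussian(2.0 * x)   # symmetric transform: gaussian(2x) == gaussian(-2x)
    rep = math.sin(5.0 * y)   # periodic transform: repeats every 2*pi/5 along y
    # The output depends on x and y only through sym and rep, so it is
    # mirror-symmetric in x and periodic in y.
    return math.tanh(sym * rep + sym)


period = 2.0 * math.pi / 5.0
print(pattern(0.3, 0.1) == pattern(-0.3, 0.1))  # True
print(math.isclose(pattern(0.3, 0.1), pattern(0.3, 0.1 + period), abs_tol=1e-9))  # True
```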

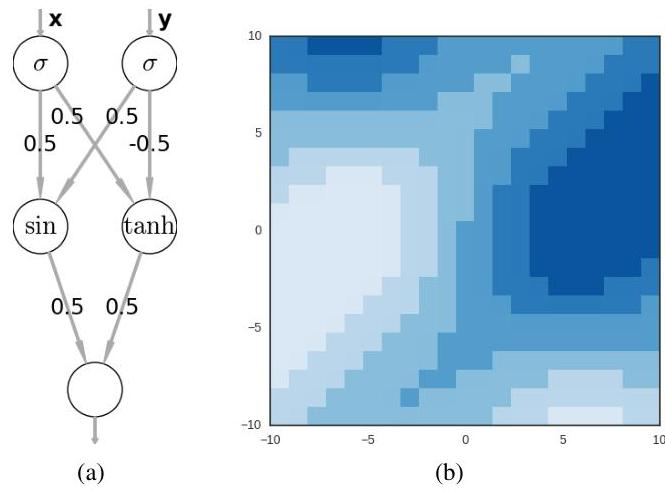

It’s usually a good idea to normalize the inputs so that x and y lie in [-1, 1] for images, i.e. the center of the image is at (0, 0).
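A minimal sketch of that normalization, assuming pixel indices run from 0 to size - 1 and y grows with the row index (flip its sign if you want y pointing up). The helper name `normalized_grid` is hypothetical.

```python
def normalized_grid(width: int, height: int) -> list[list[tuple[float, float]]]:
    """Return rows of (x, y) coordinates spanning [-1, 1] x [-1, 1].

    Pixel (0, 0) maps to (-1, -1) and pixel (width-1, height-1) maps to (1, 1),
    so the image center sits at (0, 0).
    """
    def to_unit(i: int, n: int) -> float:
        # Linear map from pixel index 0..n-1 to [-1, 1]; a 1-pixel axis maps to 0.
        return 0.0 if n == 1 else -1.0 + 2.0 * i / (n - 1)

    return [[(to_unit(col, width), to_unit(row, height)) for col in range(width)]
            for row in range(height)]


grid = normalized_grid(5, 3)
print(grid[0][0], grid[1][2], grid[2][4])  # (-1.0, -1.0) (0.0, 0.0) (1.0, 1.0)
```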

Random CPPNs do not produce random-looking patterns. Small networks of smooth functions guarantee outputs that change gradually over space; to get something that looks like noise, you would need a large CPPN.
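One way to check the "gradual change over space" claim, as a sketch under stated assumptions: build a random single-hidden-layer CPPN (a hypothetical topology) from sine, tanh and Gaussian nodes with weights drawn from [-1, 1], render it on a normalized grid, and measure the largest jump between horizontally adjacent pixels. A step of size h in x changes each hidden node's input by at most 2·h (through x and the distance d), every activation here changes by at most as much as its input, and the output sums K hidden nodes, so the jump is bounded by 2·K·h. That is far below the O(1) jumps of white noise.

```python
import math
import random


def gaussian(z: float) -> float:
    return math.exp(-z * z)


ACTIVATIONS = [math.sin, math.tanh, gaussian]  # each changes by at most |dz|


def random_cppn(n_hidden: int, rng: random.Random):
    """Hypothetical single-hidden-layer CPPN with random weights in [-1, 1]."""
    hidden = [(rng.choice(ACTIVATIONS), [rng.uniform(-1, 1) for _ in range(4)])
              for _ in range(n_hidden)]
    out_w = [rng.uniform(-1, 1) for _ in range(n_hidden)]

    def f(x: float, y: float) -> float:
        inputs = (x, y, math.hypot(x, y), 1.0)  # x, y, distance, bias
        hs = [act(sum(w * i for w, i in zip(ws, inputs))) for act, ws in hidden]
        return math.tanh(sum(v * h for v, h in zip(out_w, hs)))

    return f


def max_horizontal_jump(f, size: int) -> float:
    """Largest |f(x + h, y) - f(x, y)| over a size x size grid on [-1, 1]^2."""
    coords = [-1.0 + 2.0 * i / (size - 1) for i in range(size)]
    return max(abs(f(coords[c + 1], y) - f(coords[c], y))
               for y in coords for c in range(size - 1))


rng = random.Random(0)
K, size = 4, 64
f = random_cppn(K, rng)
h = 2.0 / (size - 1)
print(max_horizontal_jump(f, size) <= 2 * K * h)  # True: within the smoothness bound
```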

→ Mutations are random, but their effects are non-random: the arrangement of outputs stays regular.
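A sketch of why weight mutations have non-random effects, assuming a fixed hypothetical topology in which x enters only through a Gaussian without bias: whatever values the mutated weights take, the output stays mirror-symmetric about x = 0. Only weight perturbation is modeled here; structural mutations (adding nodes or links, as in NEAT) can change which regularities appear.

```python
import math
import random


def gaussian(z: float) -> float:
    return math.exp(-z * z)


def make_cppn(w: list[float]):
    """Fixed topology; x only reaches the rest of the net via gaussian(w0 * x)."""
    def f(x: float, y: float) -> float:
        h1 = gaussian(w[0] * x)              # even in x for any w0
        h2 = math.sin(w[1] * y + w[2] * h1)  # depends on x only through h1
        return math.tanh(w[3] * h1 + w[4] * h2)
    return f


def mutate(w: list[float], rng: random.Random, sigma: float = 0.5) -> list[float]:
    # Random Gaussian perturbation of every weight.
    return [wi + rng.gauss(0.0, sigma) for wi in w]


rng = random.Random(42)
weights = [rng.uniform(-2, 2) for _ in range(5)]
for generation in range(10):
    weights = mutate(weights, rng)
    f = make_cppn(weights)
    # The weights are random, but the symmetry of the pattern survives.
    assert all(f(x, y) == f(-x, y) for x in (0.1, 0.5, 0.9) for y in (-0.7, 0.0, 0.4))
print("still symmetric after 10 mutations")
```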

CPPNs can be rendered at arbitrary resolution.

You just sample the function more densely, i.e. with finer x, y, … values.
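Because a CPPN is a continuous function of the coordinates, rendering at a higher resolution only means evaluating it on a denser grid. In this sketch (the `cppn` and `render` helpers are hypothetical), a 3×3 and a 5×5 render agree exactly at the coordinates the two grids share, and the extra pixels of the larger image are new samples in between.

```python
import math


def cppn(x: float, y: float) -> float:
    # Hypothetical fixed network; any function of (x, y) works the same way.
    return math.tanh(math.sin(4.0 * x) + math.exp(-4.0 * (x * x + y * y)))


def render(f, size: int) -> list[list[float]]:
    """Sample f on a size x size grid spanning [-1, 1] x [-1, 1]."""
    coords = [-1.0 + 2.0 * i / (size - 1) for i in range(size)]
    return [[f(x, y) for x in coords] for y in coords]


low = render(cppn, 3)    # coordinates -1, 0, 1
high = render(cppn, 5)   # coordinates -1, -0.5, 0, 0.5, 1
# Every low-resolution pixel reappears in every second row and column
# of the high-resolution image.
print(all(low[r][c] == high[2 * r][2 * c] for r in range(3) for c in range(3)))  # True
```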

EXAMPLE
