Effective rank

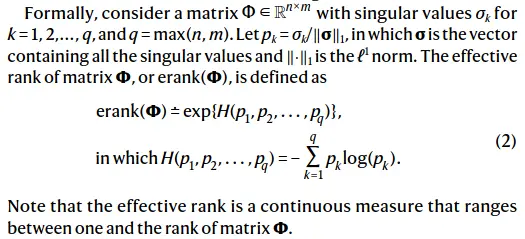

Like the rank of a matrix, which counts its linearly independent dimensions, the effective rank measures dimensionality, but it also accounts for how strongly each dimension influences the transformation induced by the matrix.

A high effective rank indicates that most of the dimensions of the matrix contribute similarly to the transformation induced by the matrix. On the other hand, a low effective rank corresponds to most dimensions having no notable effect on the transformation, implying that the information in most of the dimensions is close to being redundant.

The effective rank is a continuous measure that ranges between one and the rank of the matrix.
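As a rough illustration of that range, the sketch below assumes one common definition of effective rank: the exponential of the Shannon entropy of the singular values after they are normalized to sum to one. The notes do not say which definition they use, so treat this as an assumption. The function name `effective_rank` and the three example matrices are made up for illustration.

```python
import numpy as np


def effective_rank(matrix: np.ndarray) -> float:
    """Effective rank under the assumed entropy-based definition.

    Returns exp(H), where H is the Shannon entropy of the singular
    values normalized to sum to one.
    """
    singular_values = np.linalg.svd(matrix, compute_uv=False)
    total = singular_values.sum()
    if total == 0:
        raise ValueError("effective rank is undefined for the zero matrix")
    p = singular_values / total
    p = p[p > 0]  # drop exact zeros so that 0 * log(0) counts as 0
    entropy = -np.sum(p * np.log(p))
    return float(np.exp(entropy))


# All four dimensions contribute equally: effective rank equals the rank.
identity = np.eye(4)

# Only one dimension matters: effective rank is essentially one.
rank_one = np.outer(np.ones(4), np.ones(4))

# Full rank (4), but one dimension dominates the transformation.
skewed = np.diag([1.0, 0.01, 0.01, 0.01])

er_identity = effective_rank(identity)  # about 4.0
er_rank_one = effective_rank(rank_one)  # about 1.0
er_skewed = effective_rank(skewed)      # about 1.18

print(er_identity, er_rank_one, er_skewed)
```

The `skewed` matrix is the interesting case: its ordinary rank is 4, yet its effective rank is close to 1 because three of its four dimensions barely affect the transformation.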

In the case of neural networks, the effective rank of a hidden layer measures the number of units that can produce the output of the layer.

If a hidden layer has a low effective rank, then a small number of units can produce the output of the layer, meaning that many of the units in the hidden layer are not providing any useful information.
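To make the hidden-layer case concrete, here is a minimal sketch that compares two hypothetical layers. It assumes the effective rank is computed on the matrix of hidden activations (one row per input, one column per unit) using the entropy-based definition; the notes do not confirm either choice. The layer sizes, random weights, and variable names are all invented for the example.

```python
import numpy as np


def effective_rank(matrix: np.ndarray) -> float:
    """exp of the Shannon entropy of the normalized singular values (assumed definition)."""
    singular_values = np.linalg.svd(matrix, compute_uv=False)
    p = singular_values / singular_values.sum()
    p = p[p > 0]  # drop exact zeros so that 0 * log(0) counts as 0
    return float(np.exp(-np.sum(p * np.log(p))))


rng = np.random.default_rng(0)
inputs = rng.normal(size=(256, 16))  # 256 example inputs with 16 features each

# Layer A: 32 ReLU units, each with its own independent random weights.
weights_diverse = rng.normal(size=(16, 32))
hidden_diverse = np.maximum(inputs @ weights_diverse, 0.0)

# Layer B: 32 ReLU units, but every unit is a positively scaled copy of one
# of only two underlying units, so 30 of the units add no new information.
base_weights = rng.normal(size=(16, 2))
assignments = np.arange(32) % 2            # which base unit each unit copies
scales = rng.uniform(0.5, 1.5, size=32)    # positive scale per unit
weights_redundant = base_weights[:, assignments] * scales
hidden_redundant = np.maximum(inputs @ weights_redundant, 0.0)

er_diverse = effective_rank(hidden_diverse)
er_redundant = effective_rank(hidden_redundant)

print(f"diverse layer:   {er_diverse:.2f}")
print(f"redundant layer: {er_redundant:.2f}")
```

Both layers have 32 units, but the redundant layer's activations span only two directions, so its effective rank cannot exceed 2, while the diverse layer's is much higher.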

So it is literally like an entropy measure?
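Under one common definition (Roy and Vetterli, 2007), which may or may not be the one intended in these notes, the answer is close to yes. For a matrix $\Phi$ with singular values $\sigma_1, \dots, \sigma_q$:

$$
p_k = \frac{\sigma_k}{\sum_{i=1}^{q} \sigma_i}, \qquad
H(p_1, \dots, p_q) = -\sum_{k=1}^{q} p_k \log p_k, \qquad
\operatorname{erank}(\Phi) = \exp\bigl(H(p_1, \dots, p_q)\bigr)
$$

So it is not the entropy itself but its exponential, taken over the normalized singular values (with $0 \log 0$ read as $0$). If all $q$ singular values are equal, then $H = \log q$ and the effective rank is $q$. If only one singular value is nonzero, then $H = 0$ and the effective rank is $1$.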