Model merging

Combining multiple neural network models by averaging their parameters, either fully or partially. Unlike ensembling, which runs multiple models separately, merging produces a single unified model.

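Given models with parameters $\theta_1, \dots, \theta_N$ and weights $w_1, \dots, w_N$ (with $\sum_i w_i = 1$), the merged parameters are:

$$\theta_{\text{merged}} = \sum_{i=1}^{N} w_i \, \theta_i$$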
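A weighted parameter average can be sketched in a few lines. This is a minimal illustration, assuming each model is stored as a dict of NumPy arrays with identical keys and shapes; `merge_models` and the toy parameter names are hypothetical, not taken from any library.

```python
import numpy as np

def merge_models(state_dicts, weights=None):
    """Weighted average of parameter dicts that share keys and shapes."""
    if weights is None:
        # Default to a uniform average.
        weights = [1.0] * len(state_dicts)
    if len(weights) != len(state_dicts):
        raise ValueError("need exactly one weight per model")
    total = float(sum(weights))  # normalise so the weights sum to 1
    merged = {}
    for name in state_dicts[0]:
        merged[name] = sum(
            (w / total) * sd[name] for w, sd in zip(weights, state_dicts)
        )
    return merged

# Toy two-parameter "models" (hypothetical values).
model_a = {"layer.weight": np.array([[1.0, 2.0], [3.0, 4.0]]),
           "layer.bias": np.array([0.0, 1.0])}
model_b = {"layer.weight": np.array([[3.0, 2.0], [1.0, 0.0]]),
           "layer.bias": np.array([2.0, 1.0])}

merged = merge_models([model_a, model_b])            # uniform average
skewed = merge_models([model_a, model_b], [3.0, 1.0])  # 75% / 25%
```

Partial merging would apply the same average to only a subset of the keys and copy the rest from one model.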

Applications (loosely in order of popularity)

Post-training merging: Combining models fine-tuned on different tasks to create a multi-skilled model.

Pre-training merging: Averaging checkpoints from a single training run.

Distributed training: DiLoCo uses periodic full merges; SPARTA (Improving the Efficiency of Distributed Training using Sparse Parameter Averaging) continuously merges sparse subsets of parameters.

Crash recovery: Merge the last stable checkpoints after a training instability.

Performance preview: Merging early checkpoints predicts final annealed performance.

Why merging works

Model Merging in Pre-training of Large Language Models showed that checkpoint merging can replace annealing: averaging checkpoints from constant LR training achieves results similar to cosine decay.

Models in the same loss basin can be averaged without performance degradation. During training, especially with constant LR, models oscillate around optimal points. Averaging cancels out these oscillations, like a low-pass filter removing high-frequency noise.

Key insight: model weights change slowly compared to gradients. This enables asynchronous merging strategies like SPARTA, where even outdated parameters still provide value.

The optimal merging strategy depends on checkpoint correlation. High correlation (>90%) allows simple averaging. Lower correlation requires sophisticated methods accounting for parameter conflicts.
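The note does not say how checkpoint correlation is measured. One possible reading, sketched here purely as an assumption, is the Pearson correlation between flattened parameter vectors; `checkpoint_correlation`, `suggest_strategy`, and the 0.9 default are illustrative names and values, and other measures (for example cosine similarity of fine-tuning deltas) may be what is actually meant.

```python
import numpy as np

def flatten(params):
    """Concatenate all parameter arrays into one vector, in sorted key order."""
    return np.concatenate([params[k].ravel() for k in sorted(params)])

def checkpoint_correlation(a, b):
    """Pearson correlation between two checkpoints' flattened parameters."""
    return float(np.corrcoef(flatten(a), flatten(b))[0, 1])

def suggest_strategy(a, b, threshold=0.9):
    """Pick a merging approach from the correlation (assumed threshold)."""
    r = checkpoint_correlation(a, b)
    if r > threshold:
        return "simple averaging", r
    return "conflict-aware merging", r

# Toy checkpoints (hypothetical values).
ckpt_a = {"w": np.array([1.0, 2.0, 3.0, 4.0])}
ckpt_b = {"w": np.array([1.1, 2.0, 3.1, 3.9])}   # close to ckpt_a
ckpt_c = {"w": np.array([4.0, 3.0, 2.0, 1.0])}   # reversed, conflicts with ckpt_a

close_choice, close_r = suggest_strategy(ckpt_a, ckpt_b)
far_choice, far_r = suggest_strategy(ckpt_a, ckpt_c)
```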

Why model merging predicts annealing

During constant LR training, weights oscillate around the optimal point like a noisy signal. Annealing dampens these oscillations iteratively, acting as a low-pass filter.

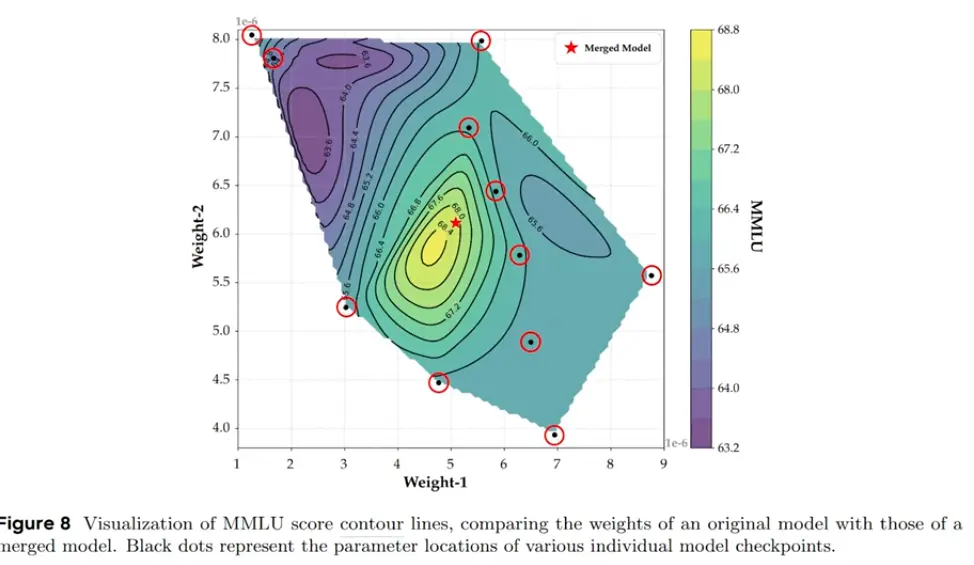

SMA (a simple moving average over checkpoints) achieves the same effect in one shot. It cancels out positive/negative deviations across the checkpoint window, extracting the smooth low-frequency component without changing the learning rate. On 2D weight contour plots, individual checkpoints scatter in a ring around the basin while the merged point sits at the peak.
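The cancellation effect can be reproduced on a toy problem. The sketch below is an illustration under strong assumptions: a 2D quadratic loss with a made-up optimum and curvature, and checkpoints modelled as the optimum plus independent zero-mean noise (real checkpoints along one trajectory are correlated). `sma_merge` is a hypothetical helper name.

```python
import numpy as np

rng = np.random.default_rng(0)

optimum = np.array([1.0, -2.0])   # hypothetical basin centre
hessian = np.diag([4.0, 1.0])     # hypothetical curvature of the basin

def loss(theta):
    """Quadratic loss around the optimum."""
    d = theta - optimum
    return 0.5 * d @ hessian @ d

# Simulated constant-LR checkpoints: optimum plus zero-mean noise.
checkpoints = optimum + 0.3 * rng.standard_normal((10, 2))

def sma_merge(ckpts, window):
    """Uniform average of the last `window` checkpoints."""
    return np.mean(ckpts[-window:], axis=0)

merged = sma_merge(checkpoints, window=10)
mean_individual_loss = float(np.mean([loss(c) for c in checkpoints]))
merged_loss = float(loss(merged))

print(f"mean checkpoint loss: {mean_individual_loss:.4f}")
print(f"merged loss:          {merged_loss:.4f}")
```

For a convex loss like this one, the merged loss can never exceed the mean checkpoint loss; how far below it lands depends on how well the deviations cancel.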

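Mathematical analysis shows merging works when deviation vectors have negative correlation in the Hessian sense: $\delta_i^\top H \delta_j < 0$ for $i \neq j$, where $\delta_i$ is checkpoint $i$'s deviation from the optimum and $H$ is the Hessian of the loss there. This “complementarity” means checkpoints explore different directions in parameter space.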
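To see why the cross-terms matter, here is a short second-order sketch for two equally weighted checkpoints. The symbols are assumptions for illustration: $\theta^*$ is a local optimum with zero gradient, $\delta_i = \theta_i - \theta^*$ are the deviation vectors, and $H$ is the (symmetric) Hessian of the loss $L$ at $\theta^*$.

$$
L(\theta^* + \delta) \approx L(\theta^*) + \tfrac{1}{2}\,\delta^\top H \delta
$$

$$
L\!\left(\theta^* + \tfrac{\delta_1 + \delta_2}{2}\right) - L(\theta^*) \approx \tfrac{1}{8}\left(\delta_1^\top H \delta_1 + \delta_2^\top H \delta_2\right) + \tfrac{1}{4}\,\delta_1^\top H \delta_2
$$

The first term is half the average excess loss of the two individual checkpoints. The second is the cross-term: when $\delta_1^\top H \delta_2 < 0$, it pulls the merged loss down further, and when the deviations point the same way it cancels part of the gain.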