A multivariate Gaussian distribution describes a vector of random variables, each of which is Gaussian, as is their joint distribution.

EXAMPLE

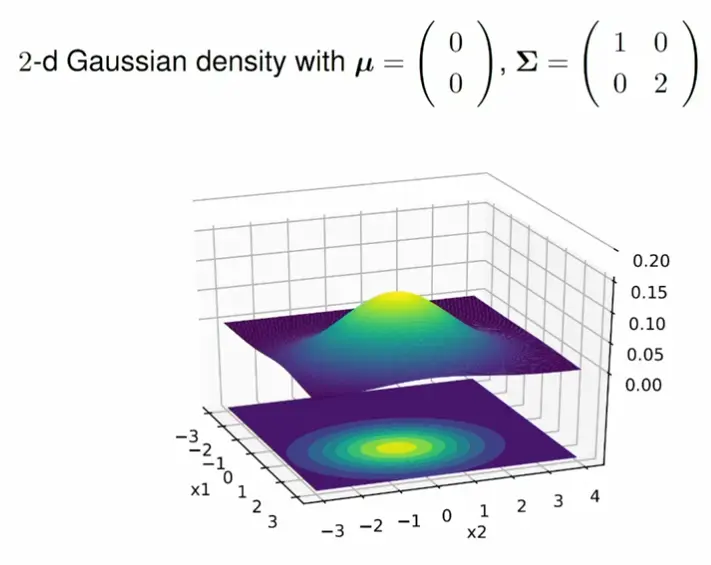

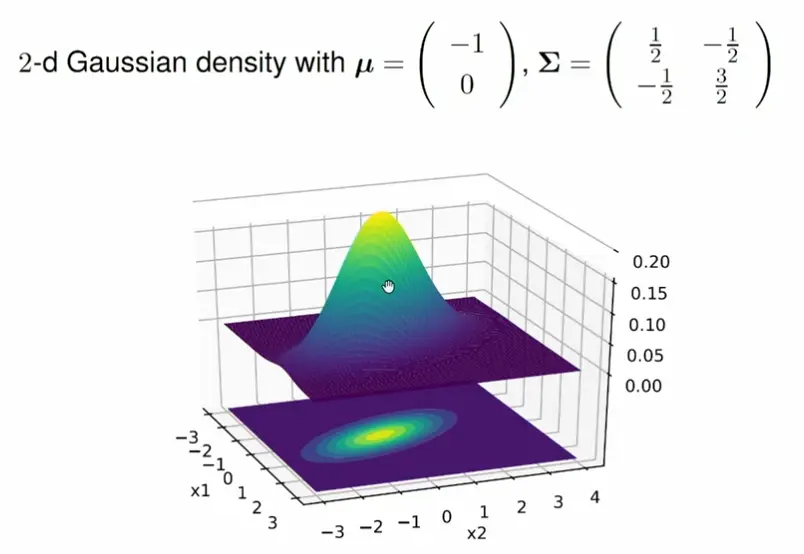

A multivariate Gaussian distribution with mean $\mu$ and covariance matrix $\Sigma$

The multivariate distribution is fully described by its mean vector $\mu$ and its covariance matrix $\Sigma$.

The mean vector describes the expected value of the distribution. Each of its components describes the mean of the corresponding dimension.

The covariance matrix models the variance along each dimension and thereby describes the shape of the distribution.

The main diagonal of the covariance matrix contains the variance $\sigma_i^2$ (the second central moment) of the $i$-th random variable, and the off-diagonal elements $\sigma_{ij}$ contain the covariances describing the correlation between the $i$-th and $j$-th variables.
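To make the roles of the mean vector and the covariance matrix concrete, here is a minimal sketch that draws samples and recovers both parameters empirically. The values of `mu` and `Sigma` are hypothetical, chosen only for illustration.

```python
import numpy as np

# Hypothetical parameters, chosen only for illustration.
mu = np.array([1.0, -2.0])        # mean vector: one entry per dimension
Sigma = np.array([[2.0, 0.8],     # diagonal entries: variance of each variable
                  [0.8, 1.0]])    # off-diagonal entries: covariance between them

rng = np.random.default_rng(seed=0)
samples = rng.multivariate_normal(mu, Sigma, size=200_000)  # shape (200000, 2)

# The sample mean and sample covariance should approximate mu and Sigma.
empirical_mean = samples.mean(axis=0)
empirical_cov = np.cov(samples, rowvar=False)

# The correlation coefficient is the covariance normalized by both std devs.
corr = Sigma[0, 1] / np.sqrt(Sigma[0, 0] * Sigma[1, 1])

print(empirical_mean)
print(empirical_cov)
print(corr)
```

With this many samples, the empirical estimates should land close to the parameters used for sampling.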

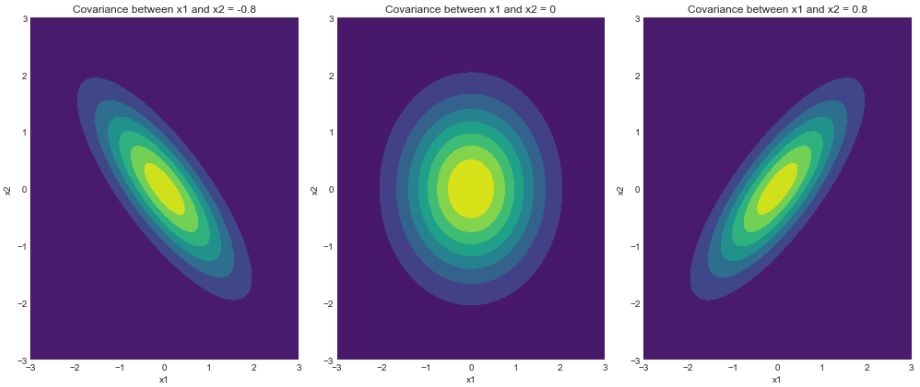

Visualization of different covariance matrices of a bivariate gaussian distribution.

Note: There is an interactive version at distill.

Depicted are three multivariate Gaussian distributions, each defined by a mean $\mu$ and a covariance matrix $\Sigma$.

The one in the middle is a diagonal Gaussian distribution: the two variables do not correlate with each other, and the covariance matrix is zero except on the main diagonal. Here you can clearly see how the distribution is elongated along the axis of the variable that has twice the variance of the other.

Here, the off-diagonal elements rotate the distribution, indicating correlation between the two variables. The mean shifts the distribution along one axis, and again it is stretched along one axis, this time with 3x the variance of the other.
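The effect of the off-diagonal elements can be checked numerically with an eigendecomposition: the eigenvectors of the covariance matrix give the principal axes of the distribution, and the eigenvalues give the variances along them. The sketch below uses two hypothetical matrices (not the ones from the figure), one diagonal with a 2:1 variance ratio and one correlated with a 3:1 ratio; `principal_axes` is an illustrative helper name.

```python
import numpy as np

def principal_axes(Sigma):
    """Return (std devs along the principal axes, angle of the longest axis in degrees)."""
    # eigh is meant for symmetric matrices and returns eigenvalues in ascending order.
    eigvals, eigvecs = np.linalg.eigh(Sigma)
    major = eigvecs[:, -1]  # direction of largest variance
    # An axis has no sign, so fold the angle into [0, 180).
    angle = np.degrees(np.arctan2(major[1], major[0])) % 180.0
    return np.sqrt(eigvals), angle

# Hypothetical covariance matrices, for illustration only.
diagonal = np.array([[2.0, 0.0],
                     [0.0, 1.0]])
correlated = np.array([[3.0, 1.0],
                       [1.0, 1.0]])

stds_diag, angle_diag = principal_axes(diagonal)
stds_corr, angle_corr = principal_axes(correlated)

print(stds_diag, angle_diag)
print(stds_corr, angle_corr)
```

For the diagonal matrix the longest axis stays aligned with the first coordinate axis, while the non-zero off-diagonal entries of the second matrix tilt it away from the coordinate axes.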

Note

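The PDF has the same form as that of a univariate Gaussian, except that the variance $\sigma^2$ is replaced by the covariance matrix $\Sigma$ and everything is in matrix form:

$$
p(x) = \frac{1}{\sqrt{(2\pi)^k \, |\Sigma|}} \exp\left(-\frac{1}{2} (x - \mu)^\top \Sigma^{-1} (x - \mu)\right)
$$

$k$ … number of dimensions

$|\Sigma|$ … determinant of the covariance matrix. It takes the place of $\sigma^2$ in the univariate normalization constant; for $k = 1$ it reduces to exactly $\sigma^2$.

$\Sigma^{-1}$ … matrix inverse of the covariance matrix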
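As a sanity check on the density, with the determinant in the normalization constant and the inverse covariance in the exponent, the following sketch implements it directly in NumPy and compares it with the univariate formula. The function names `mvn_pdf` and `univariate_pdf` and all parameter values are illustrative assumptions.

```python
import numpy as np

def mvn_pdf(x, mu, Sigma):
    """Density of a k-dimensional Gaussian evaluated at a single point x."""
    x = np.asarray(x, dtype=float)
    mu = np.asarray(mu, dtype=float)
    Sigma = np.asarray(Sigma, dtype=float)
    k = mu.shape[0]
    diff = x - mu
    # Solve Sigma z = diff rather than forming the inverse explicitly.
    mahalanobis_sq = diff @ np.linalg.solve(Sigma, diff)
    norm_const = np.sqrt((2.0 * np.pi) ** k * np.linalg.det(Sigma))
    return np.exp(-0.5 * mahalanobis_sq) / norm_const

def univariate_pdf(x, mu, sigma2):
    """Density of a one-dimensional Gaussian with variance sigma2."""
    return np.exp(-0.5 * (x - mu) ** 2 / sigma2) / np.sqrt(2.0 * np.pi * sigma2)

# For k = 1 the determinant is just the variance, so both formulas agree.
p_1d = mvn_pdf([0.3], [0.0], [[2.0]])

# With a diagonal covariance the joint density factorizes into univariate ones.
p_2d = mvn_pdf([0.3, -1.0], [0.0, 1.0], [[2.0, 0.0], [0.0, 0.5]])
p_product = univariate_pdf(0.3, 0.0, 2.0) * univariate_pdf(-1.0, 1.0, 0.5)

print(p_1d, univariate_pdf(0.3, 0.0, 2.0))
print(p_2d, p_product)
```

Using `np.linalg.solve` instead of an explicit inverse is a common numerical choice; it does not change the formula.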

Marginalization and conditioning

Gaussian distributions are closed under marginalization and conditioning, i.e. the result of either operation is again a Gaussian distribution, with modified parameters.

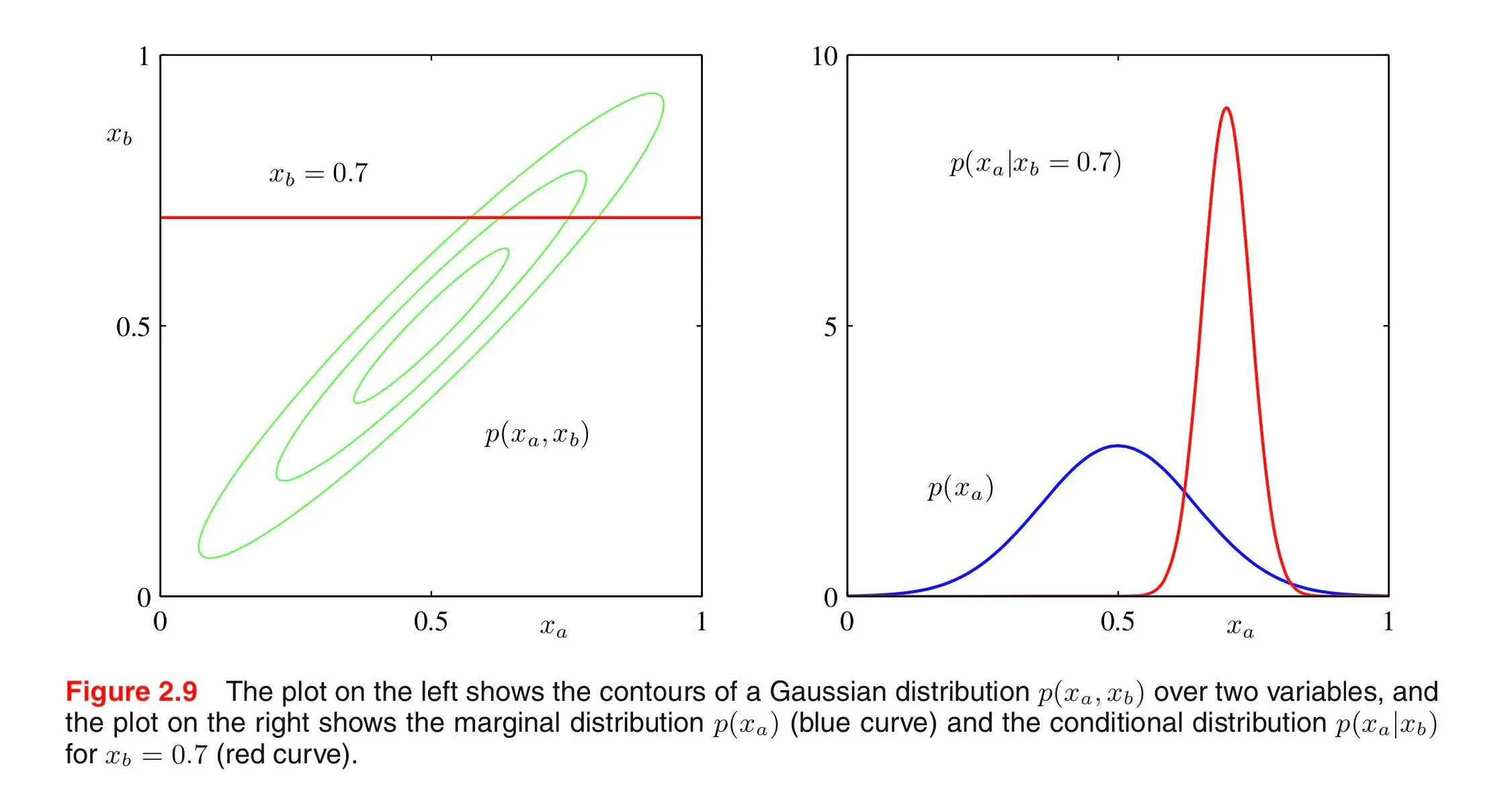

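E.g. for the following bivariate distribution, partitioned into $X$ and $Y$:

$$
\begin{bmatrix} X \\ Y \end{bmatrix} \sim \mathcal{N}\left( \begin{bmatrix} \mu_X \\ \mu_Y \end{bmatrix}, \begin{bmatrix} \Sigma_{XX} & \Sigma_{XY} \\ \Sigma_{YX} & \Sigma_{YY} \end{bmatrix} \right)
$$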

Marginalization

Marginalization lets us extract partial information from multivariate probability distributions. Given a normal probability distribution $P(X, Y)$ over vectors of random variables $X$ and $Y$, we can determine their marginalized probability distributions like this:

$$
X \sim \mathcal{N}(\mu_X, \Sigma_{XX}), \qquad Y \sim \mathcal{N}(\mu_Y, \Sigma_{YY})
$$

This means that each partition $X$ and $Y$ only depends on its corresponding entries in $\mu$ and $\Sigma$.

Another way to express this mathematically is that we view every possible value of $X$ under the consideration of all possible values of $Y$, averaging out $Y$'s contribution:

$$
p_X(x) = \int p_{X,Y}(x, y) \, dy = \int p_{X \mid Y}(x \mid y) \, p_Y(y) \, dy
$$
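Both views of marginalization can be compared numerically: picking the matching entries of the mean and covariance should give the same density as integrating the other variable out of the joint. The sketch below assumes a hypothetical bivariate distribution; `marginal` and `joint_pdf` are illustrative helper names, and the integral is approximated by a simple Riemann sum.

```python
import numpy as np

# Hypothetical joint distribution over (X, Y), for illustration only.
mu = np.array([1.0, -2.0])
Sigma = np.array([[2.0, 0.8],
                  [0.8, 1.0]])

def marginal(mu, Sigma, idx):
    """Marginal parameters: keep only the entries that belong to idx."""
    idx = np.atleast_1d(idx)
    return mu[idx], Sigma[np.ix_(idx, idx)]

def joint_pdf(points, mu, Sigma):
    """Joint Gaussian density evaluated at each row of points."""
    diff = points - mu
    quad = np.einsum("ni,ij,nj->n", diff, np.linalg.inv(Sigma), diff)
    k = mu.shape[0]
    return np.exp(-0.5 * quad) / np.sqrt((2.0 * np.pi) ** k * np.linalg.det(Sigma))

mu_x, Sigma_xx = marginal(mu, Sigma, 0)

# Average out Y: integrate the joint density over y at a fixed x0.
x0 = 0.5
ys = np.linspace(-12.0, 8.0, 4001)
dy = ys[1] - ys[0]
points = np.column_stack([np.full_like(ys, x0), ys])
integrated = np.sum(joint_pdf(points, mu, Sigma)) * dy

# Closed form: univariate Gaussian with the marginal parameters.
closed_form = np.exp(-0.5 * (x0 - mu_x[0]) ** 2 / Sigma_xx[0, 0]) / np.sqrt(
    2.0 * np.pi * Sigma_xx[0, 0]
)

print(integrated, closed_form)
```

The integration grid is chosen wide enough that the density is negligible at both ends, so the two numbers should agree to many digits.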

Conditioning

Conditioning is used to determine the probability distribution of one variable depending on another variable, i.e. how one variable behaves when another one is known:

$$
X \mid Y \sim \mathcal{N}\left( \mu_X + \Sigma_{XY} \Sigma_{YY}^{-1} (Y - \mu_Y), \; \Sigma_{XX} - \Sigma_{XY} \Sigma_{YY}^{-1} \Sigma_{YX} \right)
$$

The mean $\mu_X$ gets shifted by how much the known variable $Y$ differs from its expected value $\mu_Y$, which is normalized by $\Sigma_{YY}^{-1}$ (think $1/\sigma_Y^2$), and scaled by the covariance between the two variables $\Sigma_{XY}$. This product can be thought of as translating the normalized deviation in $Y$ to corresponding changes in $X$'s scale.

→ $\Sigma_{XY} \Sigma_{YY}^{-1} \Sigma_{YX}$ represents the amount of variance in $X$ that can be explained by $Y$.

Conditioning is like taking a slice of the distribution at the known/given value of a variable.
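The conditioning rule, where the mean is shifted by the scaled deviation of the known variable and the variance is reduced by the explained part, can be sketched as follows. The helper `condition`, its argument names, and the parameter values are assumptions made for this example.

```python
import numpy as np

def condition(mu, Sigma, idx_x, idx_y, y_obs):
    """Parameters of X given Y = y_obs for a jointly Gaussian (X, Y)."""
    idx_x = np.atleast_1d(idx_x)
    idx_y = np.atleast_1d(idx_y)
    y_obs = np.atleast_1d(np.asarray(y_obs, dtype=float))

    mu_x, mu_y = mu[idx_x], mu[idx_y]
    S_xx = Sigma[np.ix_(idx_x, idx_x)]
    S_xy = Sigma[np.ix_(idx_x, idx_y)]
    S_yy = Sigma[np.ix_(idx_y, idx_y)]

    # gain = S_xy @ inv(S_yy), computed via a linear solve (S_yy is symmetric).
    gain = np.linalg.solve(S_yy, S_xy.T).T

    mu_cond = mu_x + gain @ (y_obs - mu_y)  # mean shifted by the scaled deviation of Y
    Sigma_cond = S_xx - gain @ S_xy.T       # variance minus the part explained by Y
    return mu_cond, Sigma_cond

# Hypothetical joint distribution over (X, Y), for illustration only.
mu = np.array([1.0, -2.0])
Sigma = np.array([[2.0, 0.8],
                  [0.8, 1.0]])

mu_cond, Sigma_cond = condition(mu, Sigma, idx_x=0, idx_y=1, y_obs=-1.0)
print(mu_cond, Sigma_cond)
```

In this example the observed value lies one unit above the mean of $Y$, so the conditional mean of $X$ moves up by $0.8$, and the conditional variance drops from $2.0$ to $1.36$ regardless of which value was observed.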

Visualization of marginalization and conditioning

Note: There is an interactive version at distill.

The blue curve shows the marginal distribution of one random variable, i.e. its entire underlying distribution, and the red curve shows the conditional distribution: a slice of the joint distribution at a specific value of the other variable.


For normal distributions, uncorrelated variables are independent if and only if they are jointly normally distributed.

A pair $(X, Y)$ is jointly normal exactly when every linear combination $aX + bY$ is normally distributed, i.e. the pair follows a multivariate normal distribution.

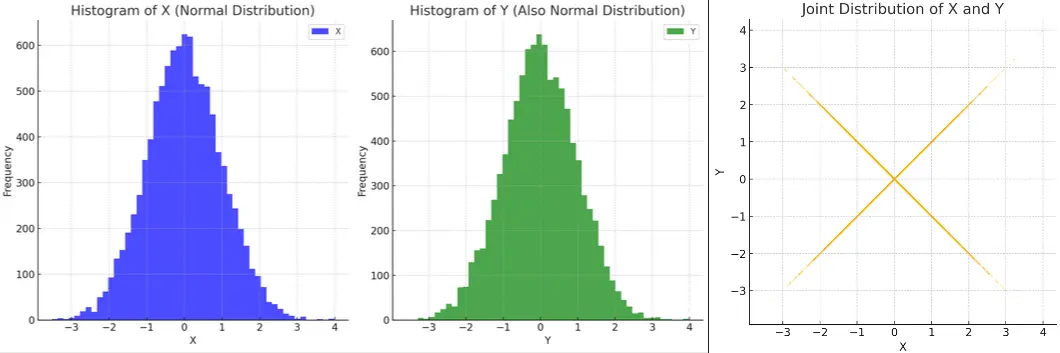

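Visual intuition: $Y = WX$, where $W$ is $+1$ or $-1$ with equal probability:

Consider

$$
X \sim \mathcal{N}(0, 1), \qquad Y = WX, \qquad P(W = 1) = P(W = -1) = \tfrac{1}{2}
$$

with $W$ independent of $X$.

Individually $X$ and $Y$ each look normal, but together their joint distribution is unusual: when $X$ is positive, $Y$ is either $X$ or $-X$ with a 50/50 chance; when $X$ is negative, same thing.

→ $X$ and $Y$ cannot possibly be independent, even though they’re uncorrelated! Knowing $X$ pins down $|Y|$ exactly.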
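A short simulation illustrates this. It assumes the classic construction for this counterexample: $X$ is standard normal and $Y = WX$, with $W$ an independent random sign. The variable names are illustrative.

```python
import numpy as np

rng = np.random.default_rng(seed=0)
n = 200_000

x = rng.standard_normal(n)            # X ~ N(0, 1)
w = rng.choice([-1.0, 1.0], size=n)   # random sign, drawn independently of X
y = w * x                             # Y is also N(0, 1) by symmetry

# Uncorrelated: the sample correlation between X and Y is close to zero.
corr_xy = np.corrcoef(x, y)[0, 1]

# Dependent: |Y| always equals |X|, so the magnitudes are perfectly correlated.
corr_abs = np.corrcoef(np.abs(x), np.abs(y))[0, 1]

# Not jointly normal: X + Y is exactly 0 whenever W = -1, i.e. about half the time,
# which no normally distributed linear combination could do.
frac_zero_sum = np.mean(x + y == 0.0)

print(corr_xy, corr_abs, frac_zero_sum)
```

The point mass of $X + Y$ at zero shows that not every linear combination is normal, so the pair is not jointly normal and zero correlation does not imply independence here.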
