year: 2024/06

paper: https://arxiv.org/pdf/2406.04268

website: https://icml.cc/virtual/2024/oral/35558

code:

connections: open-ended, icml 2024, Jürgen Schmidhuber - The Algorithmic Principle Beyond Curiosity and Creativity, Tim Rocktäschel

To advance further through the levels of AGI towards ASI, we need systems that are open-ended, i.e. able to generate novel and learnable artifacts for a human observer.

Examples of open-ended systems

Autocatalytic systems, market economies, …

From the perspective of an observer, a system is open-ended if and only if the sequence of artifacts it produces is both novel and learnable.

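I.e. a system $S$ produces a sequence of artifacts $X_t$ indexed by time $t$. An observer $O$ builds a statistical model $\hat{X}_t$ of future artifacts from the history $X_{1:t}$ up to time $t$. That model has a prediction error (loss) $\ell(t, T)$ on the artifact $X_T$ at a later time $T > t$.

A system displays novelty if artifacts become increasingly unpredictable with respect to the observer’s model at any fixed time $t$:

$$\forall t,\ \forall T > t,\ \exists T' > T:\quad \ell(t, T') > \ell(t, T)$$

… there is always something more surprising coming in the future.

A system is learnable whenever conditioning on a longer history makes artifacts more predictable:

$$\forall T,\ \forall t < t' < T:\quad \ell(t', T) < \ell(t, T)$$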
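The two definitions can be checked on a finite window, as a rough sketch. It assumes the observer's errors are already tabulated as `loss[t][T]`, meaning the error $\ell(t, T)$ on artifact $X_T$ after seeing $X_{1:t}$. The table name, the helpers `is_novel` / `is_learnable`, and the toy tables are all hypothetical. The real definitions quantify over an unbounded future, so this check can only find violations inside the window. For novelty, it skips the last time step, which has no later $T'$ to compare against.

```python
from typing import Sequence

# loss[t][T]: prediction error of the observer's model fit on X_{1:t} for artifact X_T.
# Only entries with T > t are read; the table is square with side H (the horizon).
LossTable = Sequence[Sequence[float]]


def is_novel(loss: LossTable) -> bool:
    """Novelty inside the window: for every t and every T > t that still has a later
    time step, some T' > T has a strictly larger loss, i.e. ell(t, T') > ell(t, T)."""
    H = len(loss)
    for t in range(H):
        for T in range(t + 1, H - 1):
            if max(loss[t][Tp] for Tp in range(T + 1, H)) <= loss[t][T]:
                return False
    return True


def is_learnable(loss: LossTable) -> bool:
    """Learnability inside the window: for every T and every t < t' < T, the longer
    history predicts X_T strictly better, i.e. ell(t', T) < ell(t, T)."""
    H = len(loss)
    for T in range(H):
        for t in range(T):
            for tp in range(t + 1, T):
                if not loss[tp][T] < loss[t][T]:
                    return False
    return True


H = 6
# Error grows the further ahead we predict and shrinks with more history.
open_ended = [[float(T - t) for T in range(H)] for t in range(H)]
# Noisy TV: once the noise distribution is known, the error is flat in both t and T.
noisy_tv = [[1.0] * H for _ in range(H)]
# Random movies: later artifacts are more surprising, but history never helps.
random_movies = [[float(T) for T in range(H)] for _ in range(H)]

for name, table in [("open-ended", open_ended), ("noisy TV", noisy_tv),
                    ("random movies", random_movies)]:
    print(name, is_novel(table), is_learnable(table))
```

This prints `True True` for the open-ended table, `False False` for the noisy TV and `True False` for the random movies. That matches the intuition that novelty or learnability alone is not enough.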

This can also be defined in terms of compression:

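The observer $O$ processes an artifact $X_T$ to determine its information content given a history $X_{1:t}$ of past ones. $O$ possesses a history-dependent compression map $C_{X_{1:t}}$: the map encodes $X_T$ into a binary string of length $|C_{X_{1:t}}(X_T)|$.

A system displays novelty if the information content increases, i.e. complexity increases according to the observer:

$$\forall t,\ \forall T > t,\ \exists T' > T:\quad |C_{X_{1:t}}(X_{T'})| > |C_{X_{1:t}}(X_T)|$$

A system is learnable if conditioning on a longer history increases compressibility:

$$\forall T,\ \forall t < t' < T:\quad |C_{X_{1:t'}}(X_T)| < |C_{X_{1:t}}(X_T)|$$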

In other words, with a longer history (more data), the observer must be able to keep extracting additional patterns that help it compress future artifacts.
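A rough sketch of the compression view follows. It assumes DEFLATE (Python's `zlib`) with a preset dictionary as the observer's history-dependent map $C_{X_{1:t}}$, which encodes artifact $X_T$ given history $X_{1:t}$. The paper does not prescribe a compressor. `code_length`, `structured_artifact` and the motif source are hypothetical illustrations. DEFLATE can only refer back 32 KiB, so only the tail of the history is visible, a crude stand-in for an observer with bounded memory.

```python
import random
import zlib

WINDOW = 32 * 1024  # DEFLATE back-reference window: older history is invisible


def code_length(history: list[bytes], artifact: bytes) -> int:
    """Stand-in for |C_{X_{1:t}}(X_T)| in bits: compress X_T using the history as a
    preset dictionary (zlib's zdict)."""
    zdict = b"".join(history)[-WINDOW:]
    comp = zlib.compressobj(level=9, zdict=zdict) if zdict else zlib.compressobj(level=9)
    return 8 * len(comp.compress(artifact) + comp.flush())


rng = random.Random(0)
motifs = [rng.randbytes(64) for _ in range(8)]  # hidden structure shared by all artifacts


def structured_artifact() -> bytes:
    """Toy artifact: four motifs drawn from a fixed set, so the past helps with the future."""
    return b"".join(rng.choice(motifs) for _ in range(4))


history = [structured_artifact() for _ in range(20)]
target = structured_artifact()
for t in (0, 1, 5, 20):
    print(t, code_length(history[:t], target))  # tends to shrink as t grows (learnability)

noise = rng.randbytes(256)  # white-noise artifact: no history makes it compressible
print(code_length(history, noise))  # stays at roughly 8 * 256 bits or more
```

The structured source becomes more compressible as the observer sees more of it. The noise artifact stays at least as long as its raw size no matter how much history is supplied.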

Lossy compression is also allowed

The requirement becomes loss(decompress(compress(X)), X) < epsilon. One can also get rid of the explicit epsilon and analyse properties of the “rate-distortion” curves instead.
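Here is a toy illustration of the lossy criterion and of an empirical (operational) rate-distortion curve. It assumes uniform scalar quantization of values in $[0, 1)$ as the lossy codec, a fixed number of bits per value as the rate, and mean squared error as the loss. The helpers `compress`, `decompress`, `loss`, `rate_distortion` and `smallest_rate` are hypothetical names chosen to mirror the formula, not a specific library.

```python
import random
from typing import Optional


def compress(x: list[float], bits: int) -> list[int]:
    """Toy lossy codec: uniform quantization of values in [0, 1) to `bits` bits each."""
    levels = 2 ** bits
    return [min(int(v * levels), levels - 1) for v in x]


def decompress(codes: list[int], bits: int) -> list[float]:
    """Reconstruct each value as the midpoint of its quantization cell."""
    levels = 2 ** bits
    return [(c + 0.5) / levels for c in codes]


def loss(x_hat: list[float], x: list[float]) -> float:
    """Distortion measure: mean squared error."""
    return sum((a - b) ** 2 for a, b in zip(x_hat, x)) / len(x)


def rate_distortion(x: list[float], max_bits: int = 12) -> list[tuple[int, float]]:
    """Empirical rate-distortion curve as (bits per value, distortion) pairs."""
    return [(b, loss(decompress(compress(x, b), b), x)) for b in range(1, max_bits + 1)]


def smallest_rate(x: list[float], epsilon: float) -> Optional[int]:
    """Lowest rate whose reconstruction satisfies loss(decompress(compress(x)), x) < epsilon."""
    for bits, distortion in rate_distortion(x):
        if distortion < epsilon:
            return bits
    return None


rng = random.Random(1)
x = [rng.random() for _ in range(1000)]
for bits, distortion in rate_distortion(x, max_bits=6):
    print(bits, round(distortion, 6))  # roughly 4**-bits / 12: each extra bit cuts MSE ~4x
print(smallest_rate(x, epsilon=1e-3))
```

Fixing epsilon picks one point on the curve (here 4 bits per value). Looking at the whole curve avoids choosing epsilon at all.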

Not open-ended

- TV screen showing white noise: once you figure out the noise distribution (e.g. a normal distribution), there is never novelty again, because you can already predict as well as possible (e.g. 50/50) and the prediction error stops changing. It is also not learnable, as a longer history doesn’t help at all.

- A sequence of random, unrelated movies: it might be arranged to be surprising (novel), but nothing about the current movie tells you anything about the next one, so it is not learnable.

- LLMs appear open-ended to humans in broad domains because we have limited memory. An LLM can learn the dataset increasingly well (learnability), but eventually the observer will capture the epistemic uncertainty: we see repeated patterns from the fixed distribution the model samples from. It’s like a very big noisy TV.
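A small simulation of the noisy-TV case follows. It assumes binary pixels that are i.i.d. fair coin flips and an observer that predicts with a Laplace (add-one) estimate of P(pixel = 1). Both are illustrative choices, not taken from the paper. The prediction error is the expected log loss in bits on a fresh pixel, and `expected_log_loss` is a hypothetical helper.

```python
import math
import random


def expected_log_loss(p_hat: float, p_true: float = 0.5) -> float:
    """Expected log loss (bits) of predicting P(pixel = 1) = p_hat on a fresh pixel.
    Pixels are i.i.d., so this is the same for every future time T."""
    return -(p_true * math.log2(p_hat) + (1 - p_true) * math.log2(1 - p_hat))


rng = random.Random(0)
pixels = [rng.random() < 0.5 for _ in range(10_000)]  # noisy TV: fair coin flips

curve = {}
ones = 0
for t, px in enumerate(pixels, start=1):
    ones += px
    if t in (10, 100, 1_000, 10_000):
        p_hat = (ones + 1) / (t + 2)  # Laplace estimate from the history X_{1:t}
        curve[t] = expected_log_loss(p_hat)
print(curve)  # approaches 1 bit per pixel (the 50/50 guess) and can never go below it
```

Because the error does not depend on $T$, nothing ever becomes more surprising (no novelty). Because it cannot drop below the 1-bit entropy of a fair coin, extra history stops helping once the estimate is near 50/50.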

The open-endedness of human technology, as observed by humans, relies on our ability to compress knowledge into a form that can be maintained within our collective memory.

Opposing positions

- Open-endedness simply emerges from scaling: models will eventually grok the fundamentals of reasoning.

- Open-endedness can be achieved with datasets that have these principles baked in.

- AI systems do not need to be open-ended themselves: human interaction provides enough open-endedness.

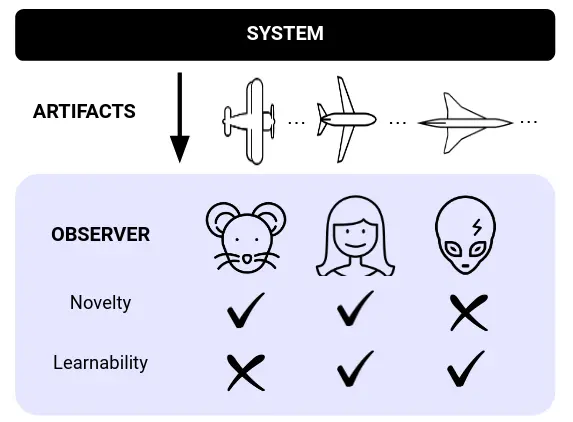

Open-endedness is observer-dependent.

The definition of open-endedness hinges on a system’s ability to continuously generate artifacts that are both novel and learnable to an observer. Consider a system that designs various aircraft: a mouse (left) might find these designs novel but lack the capacity to comprehend the principles behind them; for a human studying aerospace engineering (middle), the system offers both novelty and the potential for learning, making it open-ended. However, a superintelligent alien (right) with vast aerospace knowledge might not find the designs novel, but would still be able to analyze and understand them. This highlights that open-endedness is observer-dependent and that novelty or learnability alone is not enough.

…

From the perspective of AI research, there is a pre-eminent class of observers, namely humans. In other words, we wish to generate artifacts that are valuable to individual humans and to society. This provides a level of grounding for the open-ended system which narrows the search space considerably. … once human memory capacity is saturated, the human observer will start to forget previous things…

Open-endedness is fundamentally an experiential process

Before proceeding, we must justify our claim that a future foundation model trained passively on some large corpus of human data is unlikely to spontaneously acquire open-endedness. In principle, should we reach ASI, there will be some sum total of data which the model has consumed during its training, possibly via several intermediate stages. Therefore, our claim is not about the impossibility of assembling such a dataset. Rather, we suggest that it is unlikely that this dataset can be pre-collected offline in an efficient way. The reason is that open-endedness is fundamentally an experiential process: producing novelty and learnability in the eyes of an observer requires continual online adaptation on the basis of the artifacts already produced, in the context of that observer’s evolving prior beliefs.

What would it take to collect offline a static dataset from which such an experiential skill could be learned? Such a dataset must contain a treasure trove of artifacts which themselves crisply show novelty and learnability. Yet the process by which culture evolves, ideas develop, inventions arise and technologies proliferate is seldom recorded neatly and comprehensively. The alternative paradigm, in which experience is “built in” to the open-ended system, is well illustrated by the scientific method. Since the Enlightenment, the simple process of making hypotheses on the basis of current knowledge, falsifying them with experiments based on a source of evidence, and codifying the results into new knowledge has yielded unprecedented progress in science and technology (Deutsch, 2011). In our view, the fastest path to ASI will take inspiration from the scientific method, compiling a dataset online by the explicit combination of foundation models and open-ended algorithms.