year: 2024/10

paper: https://arxiv.org/pdf/2410.14606

website: https://x.com/RichardSSutton/status/1860818651953463542

code: https://github.com/mohmdelsayed/streaming-drl

connections: AMII, streaming RL, online learning

Extended Abstract (copypasta)

Although the prevalence of batch deep RL is often attributed to its sample efficiency, a more critical reason for the absence of streaming deep RL is its frequent instability and failure to learn, which we refer to as stream barrier. This paper introduces the stream-x algorithms, the first class of deep RL algorithms to overcome stream barrier for both prediction and control and match sample efficiency of batch RL

The agents, under the streaming reinforcement learning problem, are required to process one sample at a time without storing any samples for future reuse. Such requirements create additional hurdles compared to batch deep reinforcement learning, even though both learn from a non-stationary stream of data.

Streaming learning methods mainly require CPUs instead of GPUs since no batch updates are used, unless a very large neural network is used in which they might benefit from GPUs. In such case, the overhead of context switching between CPU and GPU might be negligible compared to the GPU computational cost required for the forward and backward passes in very large networks.Issues that hinder learning:

- learning instability due to occasional large updates,

- learning instability due to activation nonstationarity, and

- improper scaling of data

These issues are already present in batch methods causing several detrimental effects such as drop in performance, high variance, or inability to improve performance. However, they are exacerbated with streaming learning as updates can fluctuate more from one step to another due to non-i.i.d. sampling for updates. For example, streaming learning is more prone to instability as successive per-sample gradients can point in different directions, making it difficult to choose a single working step size. In contrast, batch methods mitigate this issue by averaging gradients from an i.i.d.-sampled batch drawn from a large pool.

3.1 Sample efficiency with sparse initialization and eligibility traces

Algorithm 1: SparseInit

In words: Initialize all weights and biases using LeCun Initialization, then set a random subset of those to 0.

3.2 Adjusting step sizes for maintaining update stability

In the streaming case, it is not clear if choosing a step size that reduces the error in the current sample is the best strategy. A more pertinent goal in streaming learning is to de-emphasize an update if it is too large, for example, if the update overshoots the target on a single sample.

Effective Step Size

Let be the error on a sample before making an update (pre-update error). For TD-learning, this might be . Let be the error on the same sample after making an update (post-update error).

The effective step size measures the relative amount of error correction:

This quantity indicates how much of the original error was corrected by the update:

overshooting: The update was too aggressive, possibly changing the error’s sign

full correction: The update perfectly corrected the error

partial correction: The update made progress but did not overshoot

no correction: The update had no effect on the error

negative correction: The update made the error worseE.g.:

(partial correction)

(overshoot)

Algorithm 2: Bounding Effective Step Size with Backtracking (idealized optimizer)

The algorithm prevents overshooting by adaptively reducing the step size until the effective step size is below a maximum threshold .

Small conservative updates, aggressive updates.Backtracking line search is an optimization technique that starts with an initial step size and progressively reduces it by a factor until certain conditions are met. In this case, we keep reducing until the effective step size falls below . This is similar to classical line search methods, but here we’re focused on preventing overshooting on individual samples rather than optimizing a global objective.

For supervised learning, the eligibility trace simplifies to because there’s no temporal component - we’re just trying to minimize error on individual input-output pairs. In reinforcement learning, eligibility traces serve a broader purpose by maintaining a decaying history of state visitations, helping to assign credit for rewards to earlier states.

The main drawback of this approach is that it requires multiple forward passes to compute for each new candidate step size. This can be expensive when many forward passes are required until we find a step size that satisfies the criteria.

Approximate Effective Step Size

To avoid the costly multiple forward passes required by the exact backtracking search, the authors use a first-order Taylor approximation, assuming local linearity (holds approximately when the updates are small, which is partly what we are aiming to achieve).

The post-update prediction for input can then be written as:where and are parameters after and before the update, and is the update vector.

For TD(λ), , where is the step size, the TD error, and the eligibility trace vector.

For supervised learning, .We use the already-computed gradients to compute the directional derivative, estimating changes due to a small weight update.

Local Linearity

Local linearity means that for small changes in the weights, we can approximate how the network’s output will change using just the gradient (tangent line) at the current point. Think of it like approximating a curve with its tangent - this works well when you only move a small distance along the curve:

f(x + \Delta x) \approx f(x) + f’(x)\Delta x

This leaves us with the following effective step size for TD() under nonlinear function approximation:

We compute the TD-error on a sample before and after the update, and divide the difference by the original error to get the effective step size.

This would still require an additional backward pass for the value function at the next state , so we further approximate the effective step size:[!note] Approximate Effective Step Size

To avoid multiple forward passes in the exact backtracking method, the paper uses a first-order Taylor approximation under a local linearity assumption. For small updates, we can estimate how much the network output changes using only the gradient at the current weights. This is cheaper than performing another forward or backward pass.

When we update weights from to by a vector , local linearity tells us

For TD(λ), , where is the step size, the TD error, and the eligibility trace vector.

For supervised learning, .

This approximation avoids re-running a full forward pass at the new weights, because the directional derivative in the above equation only needs the existing gradient. The local linearity assumption holds / the approximation is most accurate when the update is small, which aligns with our goal of preventing large, destabilizing steps.Local Linearity f as if it is linear in a small neighborhood around w. Formally, for small ∆w:

Local linearity treats

f(x; \boldsymbol{w} + \Delta \boldsymbol{w}) \approx f(x; \boldsymbol{w}) + \nabla_{\boldsymbol{w}} f(x; \boldsymbol{w})^\top \Delta \boldsymbol{w}

In TD-learning, the error depends on both the current state and the next state :

After the update, the new error depends on . The effective step size is defined as

Computing exactly at and would require an extra backward pass. Instead, the paper uses local linearity to approximate how changes at and , yielding

Even this approximation requires computing a new gradient at . We can avoid this by deriving a bound: The difference between gradients at nearby states can’t be too large (Lipschitz continuity), and for nearby states with discount factor close to 1, this difference is at most 1.

This lets us bound the effective step size using just the L1 norm of the eligibility trace:This bound tells us that to prevent overshooting, we should scale down our step size when the eligibility traces have large magnitude or is large.

Extra notes on bounding Note: They do this because something something lipschitz continuity of gradients tells us that nearby states will have similar gradients something something. exists so that if is very small, the bound does not become trivial. exists so … ???

Algorithm 3: Overshooting-bounded Gradient Descent (ObGD)

This algorithm avoids the need for multiple forward passes by directly scaling the step size based on the theoretical bound of the effective step size. The scaling ensures updates remain controlled without requiring backtracking.

The key idea is to scale down the step size based on:

- How large the current error is, )

- How “steep” the current update direction is ()

- A safety factor that accounts for potential nonlinearity

3.3 Stabilizing activation distribution under non-stationarity

→ They use layer normalization (applied to the pre-activation of each layer (before applying the activation ))

3.4 Proper scaling of data

They use Welfords method to scale observations and rewards.

Algorithm 4: SampleMeanVar

Algorithm 5: Scale Reward

Algorithm 6: NormalizeObservation

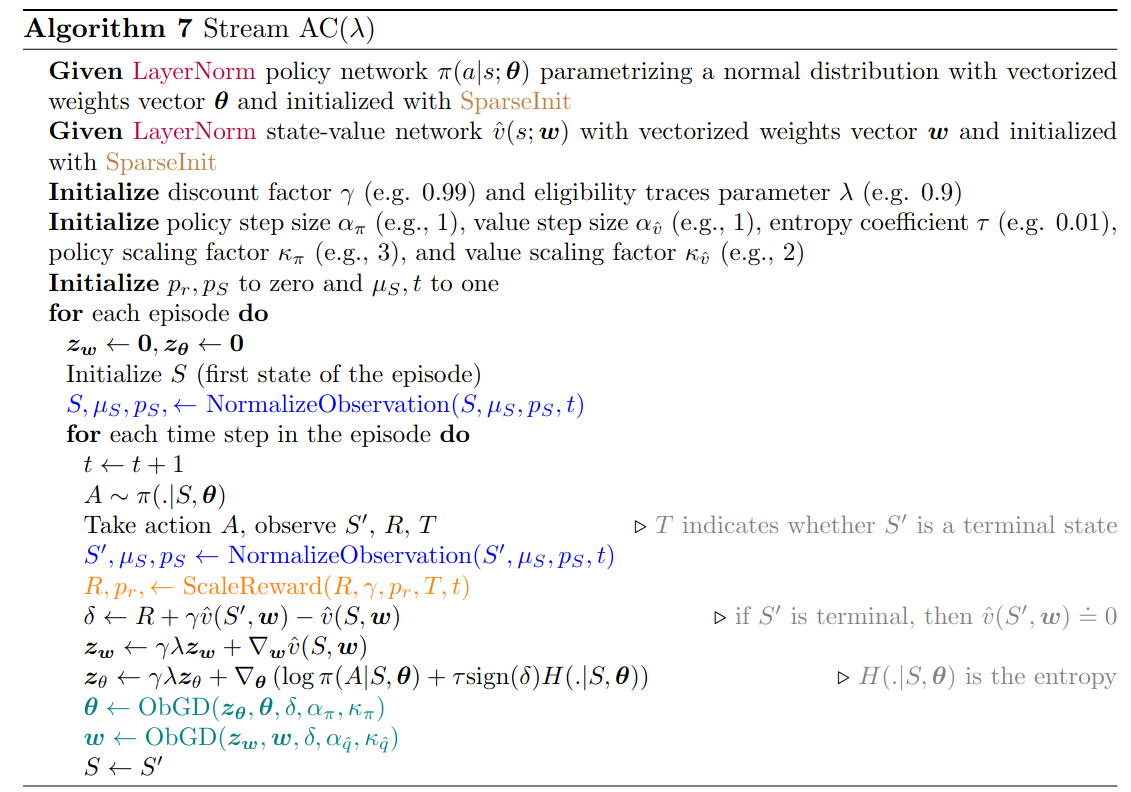

3.5 Stable streaming deep reinforcement learning methods

is the actor, is the critic