https://paperswithcode.com/paper/layer-normalization

Optimizing a Layer Normalization Kernel with CUDA: a Worklog

Types of normalization techniques in machine learning - comparison

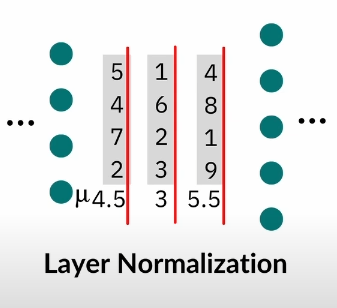

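In contrast to batch normalization, every data point is normalized individually, across all of its features (the activations of the layer). This removes the dependency on the batch, but all features of a data point share one mean and variance, which is a problem when they have very different scales.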
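To make the axis difference concrete, here is a minimal C sketch (the function names are hypothetical, chosen for this note) for a batch stored as a row-major B×C matrix, with B data points and C features. It computes only the mean, the way each method would: BatchNorm averages each feature column over the batch, LayerNorm averages each row over its features.

```c
/* x is a row-major B x C matrix: row b = one data point, column c = one feature. */

/* BatchNorm-style statistic: one mean per feature, averaged over the batch. */
void batch_means(const float *x, int B, int C, float *mean_per_feature) {
    for (int c = 0; c < C; c++) {
        float sum = 0.0f;
        for (int b = 0; b < B; b++) sum += x[b * C + c];
        mean_per_feature[c] = sum / B;
    }
}

/* LayerNorm-style statistic: one mean per data point, averaged over its features. */
void layer_means(const float *x, int B, int C, float *mean_per_row) {
    for (int b = 0; b < B; b++) {
        float sum = 0.0f;
        for (int c = 0; c < C; c++) sum += x[b * C + c];
        mean_per_row[b] = sum / C;
    }
}
```

For x = [[1, 2, 3], [4, 5, 6]] this gives three BatchNorm means (2.5, 3.5, 4.5) but two LayerNorm means (2, 5).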

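For comparison, BatchNorm in a nutshell:

Input: values of $x$ over a mini-batch $\mathcal{B} = \{x_1, \dots, x_m\}$

// mini-batch mean

$$\mu_{\mathcal{B}} = \frac{1}{m}\sum_{i=1}^{m} x_i$$

// mini-batch variance

$$\sigma_{\mathcal{B}}^2 = \frac{1}{m}\sum_{i=1}^{m} \left(x_i - \mu_{\mathcal{B}}\right)^2$$

// normalize

$$\hat{x}_i = \frac{x_i - \mu_{\mathcal{B}}}{\sqrt{\sigma_{\mathcal{B}}^2 + \epsilon}}$$

$\epsilon$ … small constant added to the variance so we never divide by 0 when the variance is 0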
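A small worked example of the three steps, using a made-up mini-batch of a single feature with $m = 3$ and treating $\epsilon$ as negligible:

$$\mathcal{B} = \{1, 2, 3\}: \quad \mu_{\mathcal{B}} = \frac{1+2+3}{3} = 2, \quad \sigma_{\mathcal{B}}^2 = \frac{(1-2)^2 + (2-2)^2 + (3-2)^2}{3} = \frac{2}{3}, \quad \hat{x} \approx \left(\frac{-1}{0.816},\ 0,\ \frac{1}{0.816}\right) \approx (-1.22,\ 0,\ 1.22)$$

The normalized values have mean 0 and variance $(1.5 + 0 + 1.5)/3 = 1$.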

Since the mean and variance depend heavily on the particular batch, we introduce learnable parameters $\gamma$ and $\beta$. At initialization ($\gamma = 1$, $\beta = 0$) the output still has zero mean and unit variance, but optimization might change that.

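// scale and shift

$$y_i = \gamma \hat{x}_i + \beta$$

$\gamma$ (scale) can learn to approximate the true standard deviation of the neuron activation and $\beta$ (offset) the true mean, so the network can undo the normalization if that turns out to be optimal.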

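LayerNorm does it better: the same normalize-then-scale-and-shift recipe, but the statistics are computed over the features of a single input $x$ instead of over the batch:

$$\mathrm{LN}(x) = \gamma \odot \frac{x - \mu}{\sqrt{\sigma^2 + \epsilon}} + \beta, \qquad \mu = \frac{1}{H}\sum_{i=1}^{H} x_i, \qquad \sigma^2 = \frac{1}{H}\sum_{i=1}^{H} \left(x_i - \mu\right)^2$$

where $H$ is the dimensionality of $x$ and $\epsilon$ is a small number used for numerical stability.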
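A worked example on a made-up input with $H = 4$, $\gamma = 1$, $\beta = 0$ and $\epsilon$ treated as negligible:

$$x = (1, 2, 3, 6): \quad \mu = \frac{1+2+3+6}{4} = 3, \quad \sigma^2 = \frac{(-2)^2 + (-1)^2 + 0^2 + 3^2}{4} = 3.5, \quad \mathrm{LN}(x) \approx \frac{(-2,\ -1,\ 0,\ 3)}{1.871} \approx (-1.069,\ -0.535,\ 0,\ 1.604)$$

No other example in the batch enters the computation.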

Why?

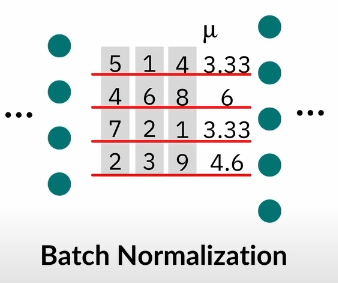

Batch Normalization vs Layer Normalization

Batch normalization depends on the batch size because it computes its statistics across all examples in the batch, whereas layer normalization normalizes each data point on its own. (BatchNorm only works well with bigger batch sizes, > 8.)

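Layer normalization also performs exactly the same computation during training and at test time. (BatchNorm doesn't: at test time it normalizes with running averages of the mean and variance collected during training instead of the current batch's statistics.)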

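LayerNorm is a good fit for:

- sequences (e.g. RNNs and Transformers, where sequence length varies)

- a variable batch size or number of workers

- parallelization (no statistics have to be shared across examples or devices)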

Not so good for:

- CNNs

- features/layers with very different scales (they all share a single mean and variance)

Example

Refer to batch normalization for more explanation.

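Layer normalization for a fully connected layer $l$, neuron $i$ and feature size $H$ (the number of neurons in the layer):

$$\mu^l = \frac{1}{H}\sum_{i=1}^{H} x_i^l, \qquad \left(\sigma^l\right)^2 = \frac{1}{H}\sum_{i=1}^{H}\left(x_i^l - \mu^l\right)^2$$

Normalize feature $i$:

$$\hat{x}_i^l = \frac{x_i^l - \mu^l}{\sqrt{\left(\sigma^l\right)^2 + \epsilon}}$$

$\epsilon$ … small constant in case the variance is 0, same as in BatchNorm

Scale and shift the normalized feature with learnable parameters $\gamma_i$ and $\beta_i$:

$$y_i^l = \gamma_i \hat{x}_i^l + \beta_i$$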

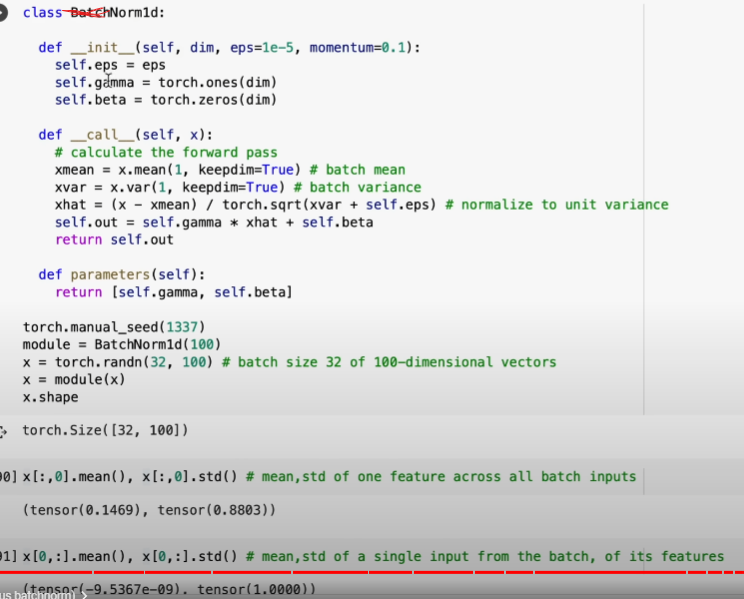

Code

(from Karpathy)