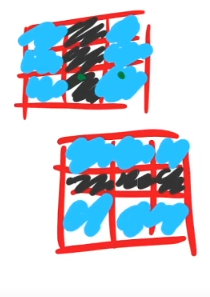

Types of normalization techniques in machine learning - comparison


- Statistics are computed within a single sample / datapoint only, so they do not depend on the batch size.

- Normalizing each channel individually, as in instance normalization, is a good idea, but a single channel gives too few values for reliable statistics.

- We can expect groups of features / filters / layers in a NN to have the same scale.[^1]

- You decide a priori which channels are grouped and normalized together (naturally, it's the ones next to one another).

- Through that you enforce that the channels within a group learn features that are similar in scale.

- This introduces a new hyperparameter (number of groups) :(

- You can fix either the number of groups or the number of channels per group; the two extremes recover other norms:

- 1 group → Layer Norm (worse performance)

- 1 channel per group → Instance Norm (worst performance)
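The grouping described above can be sketched in a few lines of NumPy. This is a minimal illustration, not a reference implementation: the function name `group_norm`, the `(N, C, H, W)` layout, and the `eps` value are assumptions made for the example, and the learnable scale and shift that real layers usually add are left out.

```python
import numpy as np

def group_norm(x, num_groups, eps=1e-5):
    """Normalize x of shape (N, C, H, W) per sample and per channel group.

    Illustrative only: no learnable scale/shift, just the statistics.
    """
    n, c, h, w = x.shape
    if c % num_groups != 0:
        raise ValueError("number of channels must be divisible by num_groups")
    # Give the channels of each group their own axis: (N, G, C // G, H, W).
    # Neighbouring channels end up in the same group.
    grouped = x.reshape(n, num_groups, c // num_groups, h, w)
    # One mean/variance per (sample, group); the batch axis is never reduced.
    mean = grouped.mean(axis=(2, 3, 4), keepdims=True)
    var = grouped.var(axis=(2, 3, 4), keepdims=True)
    return ((grouped - mean) / np.sqrt(var + eps)).reshape(n, c, h, w)

rng = np.random.default_rng(0)
x = rng.normal(size=(2, 8, 4, 4))

gn = group_norm(x, num_groups=4)   # 2 neighbouring channels per group
ln = group_norm(x, num_groups=1)   # 1 group: layer-norm statistics over (C, H, W)
inn = group_norm(x, num_groups=8)  # 1 channel per group: instance-norm statistics over (H, W)
```

The number of groups is the only knob here, which is the extra hyperparameter mentioned above; the last two calls are the two extreme settings from the list.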

Code

Group norm in pytorch for MNIST CNN (wandb blog)
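For orientation, a small MNIST-sized CNN using PyTorch's built-in `nn.GroupNorm` might look roughly like this. It is a sketch written for this note and not the code from the blog post; the class name `SmallCNN`, the layer sizes, and the default of 4 groups are all assumptions.

```python
import torch
from torch import nn

class SmallCNN(nn.Module):
    """Hypothetical MNIST classifier with GroupNorm after each conv layer."""

    def __init__(self, num_groups=4, num_classes=10):
        super().__init__()
        self.features = nn.Sequential(
            nn.Conv2d(1, 16, kernel_size=3, padding=1),
            nn.GroupNorm(num_groups, 16),   # 16 channels split into num_groups groups
            nn.ReLU(),
            nn.MaxPool2d(2),                # 28x28 -> 14x14
            nn.Conv2d(16, 32, kernel_size=3, padding=1),
            nn.GroupNorm(num_groups, 32),   # channel count must be divisible by num_groups
            nn.ReLU(),
            nn.MaxPool2d(2),                # 14x14 -> 7x7
        )
        self.classifier = nn.Linear(32 * 7 * 7, num_classes)

    def forward(self, x):
        return self.classifier(self.features(x).flatten(1))

model = SmallCNN()
# Statistics are per sample, so a batch of size 1 is fine even in training mode.
logits = model(torch.randn(1, 1, 28, 28))
```

Setting `num_groups=1` in this sketch gives the layer-norm extreme, and setting it equal to the channel count of a layer gives the instance-norm extreme for that layer.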

Footnotes

[^1]: Values of vertical and horizontal edge filters in a CNN are most likely about equal in size.