Some square matrices $A$ have an inverse $A^{-1}$, which produces the identity matrix when multiplied with $A$:

$$A^{-1}A = I$$

A matrix and its inverse commute:

$$A^{-1}A = AA^{-1} = I$$

Matrices that have an inverse are called invertible or non-singular.

The inverse is unique because matrix multiplication is associative.
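Spelled out as a short derivation: suppose $B$ and $C$ are both inverses of $A$, i.e. $BA = I$ and $AC = I$. Associativity lets us regroup the middle product, which forces the two to be equal:

$$B = BI = B(AC) = (BA)C = IC = C$$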

Some equivalent definitions of (non-)invertibility

A matrix is non-invertible / singular if:

- It is not a square matrix

- The determinant is 0

- There is a non-zero vector $x$ with $Ax = 0$

- Its rows are not linearly independent

A matrix is invertible if:

- Gaussian elimination produces a full set of $n$ pivots (the matrix has full rank)

- Its rows (and columns) are linearly independent

- The determinant is non-zero

- The linear system $Ax = b$ has a unique solution for every $b$.

- The linear transformation represented by the matrix is bijective
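A quick way to experiment with these criteria is a small NumPy sketch (NumPy and the helper name `is_invertible` are my own choices, not from the lecture). It checks squareness, full rank and a non-zero determinant. The determinant is compared against an assumed tolerance `tol`, because floating-point rounding rarely produces an exact 0 and a sensible threshold depends on the scale of the entries.

```python
import numpy as np

def is_invertible(A, tol: float = 1e-12) -> bool:
    """Check a few of the equivalent invertibility criteria listed above."""
    A = np.asarray(A, dtype=float)
    # Non-square matrices have no (two-sided) inverse.
    if A.ndim != 2 or A.shape[0] != A.shape[1]:
        return False
    n = A.shape[0]
    # Full rank <=> n pivots <=> linearly independent rows/columns.
    full_rank = np.linalg.matrix_rank(A) == n
    # Non-zero determinant, up to an assumed rounding tolerance.
    nonzero_det = abs(np.linalg.det(A)) > tol
    return bool(full_rank and nonzero_det)

print(is_invertible([[1, 3], [2, 7]]))        # True
print(is_invertible([[1, 2], [2, 4]]))        # False: row 2 = 2 * row 1
print(is_invertible([[1, 2, 3], [4, 5, 6]]))  # False: not square
```

Numerically the rank test (computed via the SVD) is the more robust of the two; the determinant check is there only to mirror the list.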

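The inverse of a diagonal matrix is easy to find, since you just invert every diagonal entry:

$$\begin{bmatrix} d_1 & 0 \\ 0 & d_2 \end{bmatrix}^{-1} = \begin{bmatrix} 1/d_1 & 0 \\ 0 & 1/d_2 \end{bmatrix}$$

Or generally:

$$\operatorname{diag}(d_1, \dots, d_n)^{-1} = \operatorname{diag}\left(\frac{1}{d_1}, \dots, \frac{1}{d_n}\right)$$
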
Note: A diagonal matrix is invertible if and only if all its diagonal elements are non-zero.
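A minimal NumPy sketch of this rule, assuming the diagonal is given as a plain list of entries (`diag_inverse` is a hypothetical helper name):

```python
import numpy as np

def diag_inverse(d):
    """Inverse of diag(d): invert each diagonal entry, which must all be non-zero."""
    d = np.asarray(d, dtype=float)
    if np.any(d == 0):
        raise ValueError("a zero on the diagonal makes the matrix singular")
    return np.diag(1.0 / d)

D = np.diag([2.0, 4.0, 0.5])
D_inv = diag_inverse([2.0, 4.0, 0.5])
print(np.diag(D_inv))                      # diagonal entries 0.5, 0.25, 2.0
print(np.allclose(D @ D_inv, np.eye(3)))   # True
```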

Inverse of a product

$$(AB)^{-1} = B^{-1}A^{-1}$$

The order reverses like socks and shoes: put socks on first ($B$), then shoes ($A$). To undo, remove shoes first ($A^{-1}$), then socks ($B^{-1}$).

Proof:

$$(AB)(B^{-1}A^{-1}) = A(BB^{-1})A^{-1} = AIA^{-1} = AA^{-1} = I$$

So $B^{-1}A^{-1}$ is an inverse of $AB$; by uniqueness, it’s the inverse.
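A numerical sanity check of the reversed order, using two small example matrices I picked (both have determinant 1, so all inverses stay integer-valued):

```python
import numpy as np

A = np.array([[2.0, 1.0],
              [1.0, 1.0]])   # assumed example, det = 1
B = np.array([[1.0, 2.0],
              [0.0, 1.0]])   # assumed example, det = 1

lhs = np.linalg.inv(A @ B)
rhs = np.linalg.inv(B) @ np.linalg.inv(A)    # reversed order: shoes off, then socks
wrong = np.linalg.inv(A) @ np.linalg.inv(B)  # same order: not the inverse here

print(np.allclose(lhs, rhs))    # True
print(np.allclose(lhs, wrong))  # False
```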

Intuition + Examples

Negative case

Let’s have a look at a singular matrix:

Why is it not invertible?

a) Linear dependence

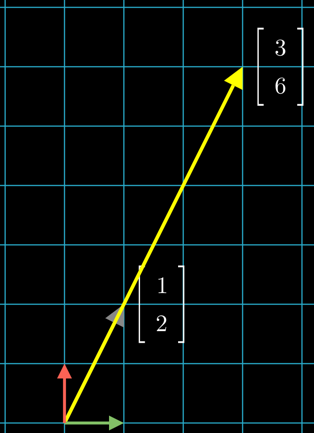

Its rows are a linear combination of each other: they both lie on the same line going through the vector $[1\ 2]$.

No linear combination of these vectors can produce the columns of the identity matrix (the red and green basis vectors), because every combination stays on that one line.

The columns carry redundant information, so a system $Ax = b$ that uses this matrix has either no solution or infinitely many, never a unique one. Consequently this matrix has no inverse at all.
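The same failure shows up numerically. This sketch assumes the concrete matrix $\begin{bmatrix}1 & 2\\ 2 & 4\end{bmatrix}$, whose rows both lie on the line through $[1\ 2]$; it is a stand-in and not necessarily the exact matrix from the lecture:

```python
import numpy as np

# Assumed stand-in: both rows lie on the line through [1 2].
A = np.array([[1.0, 2.0],
              [2.0, 4.0]])

rank = np.linalg.matrix_rank(A)   # 1 instead of 2: the rows are dependent
det_A = np.linalg.det(A)          # 0 (NumPy may print it as -0.0)

try:
    np.linalg.inv(A)
    inverse_exists = True
except np.linalg.LinAlgError:     # NumPy refuses to invert a singular matrix
    inverse_exists = False

print(rank, det_A, inverse_exists)
```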

b) $Ax = 0$ for some $x \neq 0$

We can find a non-zero $x$ that satisfies the above equation:

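Now suppose $A^{-1}$ exists. Multiplying both sides of $Ax = 0$ by it gives $A^{-1}Ax = A^{-1}0$, i.e. $x = 0$. So $Ax = 0$ only works if $x = 0$.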

→ If there is some $x \neq 0$ for which $Ax = 0$, there is no inverse matrix (such an $x$ exists due to the linear dependence of $A$’s vectors).
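To make the argument concrete, here is a sketch that finds such an $x$ for the same assumed stand-in matrix $\begin{bmatrix}1 & 2\\ 2 & 4\end{bmatrix}$, once by hand and once via NumPy's SVD (the SVD route is my own addition, not part of the lecture):

```python
import numpy as np

A = np.array([[1.0, 2.0],
              [2.0, 4.0]])   # assumed stand-in singular matrix

# A hand-picked non-zero solution: 1*2 + 2*(-1) = 0 and 2*2 + 4*(-1) = 0.
x_hand = np.array([2.0, -1.0])

# A general way to find one: the right singular vector belonging to the
# smallest singular value lies in the null space when that value is 0.
_, s, Vt = np.linalg.svd(A)
x_svd = Vt[-1]

print(A @ x_hand)                 # [0. 0.]
print(np.allclose(A @ x_svd, 0))  # True, even though x_svd is a unit vector
```

If $A^{-1}$ existed, applying it to `A @ x_hand` would have to give back `x_hand`, yet it can only map the zero vector to zero.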

Positive case (finding the inverse)

Gauss-Jordan

$A$ is invertible, since $\det A$ is non-zero (here positive) / its column vectors point in different directions.

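Now finding the inverse is just like solving two systems of linear equations, one for each column of $A^{-1}$: $Ax_1 = e_1$ and $Ax_2 = e_2$, where $e_1, e_2$ are the columns of $I$.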

With the Gauss-Jordan method we can solve both systems at once by augmenting the matrix, i.e. carrying the right-hand sides along and applying the same elimination steps to them. Our goal is to transform $[A \mid I]$ into $[I \mid A^{-1}]$. By the end of the elimination, the augmented part will have turned into the inverse matrix:

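So we applied an elimination $E$ to $[A \mid I]$ such that $EA = I$, which turns the augmented part $EI = E$ into the inverse. By definition, $E$ is the inverse:

$$E\,[A \mid I] = [EA \mid E] = [I \mid E] \quad\Longrightarrow\quad E = A^{-1}$$
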
We discovered it step by step, as we multiplied the individual elimination matrices ($E$’s) with the identity.
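The whole procedure fits in a short function. This is a sketch, not Strang's code: it works on the augmented matrix $[A \mid I]$ in NumPy, and it adds partial pivoting (row exchanges that pick the largest available pivot), which the hand computation doesn't need but which keeps floating-point round-off under control. The example matrix $\begin{bmatrix}1 & 3\\ 2 & 7\end{bmatrix}$ and the tolerance `tol` are assumed inputs.

```python
import numpy as np

def gauss_jordan_inverse(A, tol=1e-12):
    """Invert A by row-reducing the augmented matrix [A | I] to [I | A^-1]."""
    A = np.asarray(A, dtype=float)
    n = A.shape[0]
    M = np.hstack([A, np.eye(n)])          # augmented matrix [A | I]
    for col in range(n):
        # Partial pivoting: choose the row with the largest entry in this column.
        pivot = col + np.argmax(np.abs(M[col:, col]))
        if abs(M[pivot, col]) < tol:
            raise ValueError("matrix is singular: missing pivot")
        M[[col, pivot]] = M[[pivot, col]]  # row exchange
        M[col] /= M[col, col]              # scale the pivot row so the pivot is 1
        for row in range(n):
            if row != col:
                # Eliminate above AND below the pivot (the "Jordan" part).
                M[row] -= M[row, col] * M[col]
    return M[:, n:]                        # right half now holds A^-1

A = np.array([[1.0, 3.0],
              [2.0, 7.0]])                 # assumed example, det = 1
A_inv = gauss_jordan_inverse(A)
print(A_inv)                               # approximately [[7, -3], [-2, 1]]
```

Without the row exchanges the loop would still handle this $A$, but it would break on an invertible matrix such as $\begin{bmatrix}0 & 1\\ 1 & 0\end{bmatrix}$, whose first pivot is 0.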

My first intuition was to view it like this:

It’s just like the usual transformations when solving scalar equations (just imagine matrices being divisible).

Solving the systems of equations traditionally / explicitly

Another (crude) way of solving it.

This is how we did it in class (first):

The matrix multiplication $AA^{-1} = I$ consists of 3 separate systems of equations, one per column of $A^{-1}$:
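The column-by-column view translates directly into code: column $i$ of $A^{-1}$ is the solution of $Ax_i = e_i$, where $e_i$ is column $i$ of $I$. A minimal sketch, assuming NumPy's `np.linalg.solve` as the solver for each system and an arbitrary invertible 3×3 example matrix of my own choosing:

```python
import numpy as np

def inverse_by_columns(A):
    """Solve A x_i = e_i separately for each column e_i of I; x_i is column i of A^-1."""
    A = np.asarray(A, dtype=float)
    n = A.shape[0]
    I = np.eye(n)
    columns = [np.linalg.solve(A, I[:, i]) for i in range(n)]  # one system per column
    return np.column_stack(columns)

A = np.array([[2.0, 0.0, 1.0],
              [1.0, 1.0, 0.0],
              [0.0, 1.0, 1.0]])   # assumed example, det = 3
A_inv = inverse_by_columns(A)
print(np.allclose(A @ A_inv, np.eye(3)))  # True
```

Each `solve` call here factorizes $A$ again from scratch, which is the redundancy Gauss-Jordan avoids by carrying all right-hand sides along at once.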

Adjugate determinant method

Another “interesting” method we learned in school (with zero motivation or intuition, naturally):

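One can write down the inverse of an invertible matrix by computing its cofactors using Cramer’s rule, as follows. The matrix formed by all of the cofactors of a square matrix $A$ is called the cofactor matrix (also called the matrix of cofactors or, sometimes, comatrix):

$$C = \begin{bmatrix} C_{11} & \cdots & C_{1n} \\ \vdots & \ddots & \vdots \\ C_{n1} & \cdots & C_{nn} \end{bmatrix}, \qquad C_{ij} = (-1)^{i+j} M_{ij}$$

where the minor $M_{ij}$ is the determinant of $A$ with row $i$ and column $j$ removed.
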
Then the inverse of $A$ is the transpose of the cofactor matrix times the reciprocal of the determinant of $A$:

$$A^{-1} = \frac{1}{\det A}\, C^{\mathsf T}$$

The transpose of the cofactor matrix, $\operatorname{adj}(A) = C^{\mathsf T}$, is called the adjugate.
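For completeness, a direct (and deliberately naive) NumPy sketch of the adjugate formula; `adjugate_inverse` is a hypothetical helper name. It computes every cofactor from its minor, so it needs $n^2$ determinants of size $n-1$ and is far more expensive than elimination for anything but tiny matrices. The singularity check uses `np.isclose` with its default tolerance, an assumption that only suits moderately scaled entries.

```python
import numpy as np

def adjugate_inverse(A):
    """A^-1 = adj(A) / det(A), where adj(A) is the transpose of the cofactor matrix."""
    A = np.asarray(A, dtype=float)
    n = A.shape[0]
    C = np.empty((n, n))
    for i in range(n):
        for j in range(n):
            # Minor M_ij: determinant of A with row i and column j removed.
            minor = np.delete(np.delete(A, i, axis=0), j, axis=1)
            C[i, j] = (-1) ** (i + j) * np.linalg.det(minor)
    det = np.linalg.det(A)
    if np.isclose(det, 0.0):
        raise ValueError("matrix is singular (det = 0)")
    return C.T / det                       # adjugate divided by the determinant

A = np.array([[1.0, 3.0],
              [2.0, 7.0]])                 # assumed example, det = 1
print(adjugate_inverse(A))                 # approximately [[7, -3], [-2, 1]]
```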

References

Gilbert Strang, MIT 18.06 Linear Algebra, Lecture 3: Multiplication and inverse matrices

Quote of the lecture (that some math teachers should really take to heart): “It’s good to keep the computations manageable and let the ideas come out”