year: 2017/08

paper: https://arxiv.org/pdf/1707.06347

website:

code:

connections: RL, TRPO, optimization

TLDR

PPO is a policy gradient method that optimizes the policy in a way that is less sensitive to the choice of hyperparameters. It does this by using a clipped objective function that prevents the policy from changing too much at each iteration. This makes PPO more stable and easier to tune than other policy gradient methods.

Motivation

Q-learning (with function approximation) fails on many simple problems and is poorly understood, vanilla policy gradient methods have poor data effiency and robustness; and trust region policy optimization (TRPO) is relatively complicated, and is not compatible with architectures that include noise (such as dropout) or parameter sharing (between the policy and value function, or with auxiliary tasks).

The vanilla policy gradient objective / loss:

… stochastic policy

… estimator of the advantage at time

… empirical average over a finite batch of samples (in an algorithm that alternates between sampling and optimization)

By differentiating it we get the policy gradient estimator:

But using this gradient directly is not a good idea (for reasons explained in TRPO).

The raw objective from TRPO (or originally conservative policy iteration) attempts to fix the issue of destructively large policy updates of the policy:

But it still needs some constraint to prevent excessively large policy updates (large advantages; risk of overfitting to current batch; learning instability):

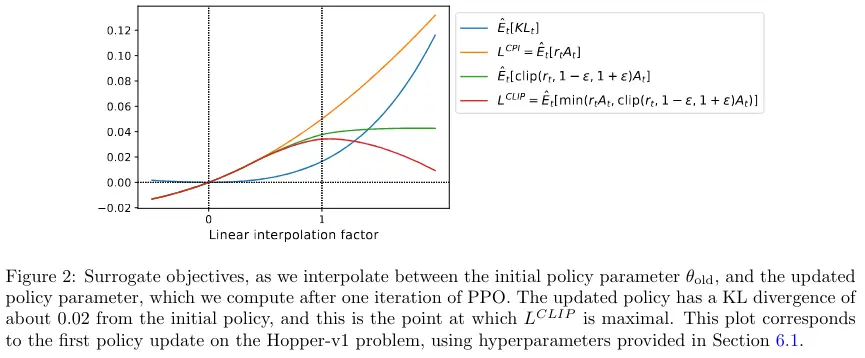

PPO penalizes changes that move away from :

When would make a large positive improvement (beyond ), we ignore it and use the clipped value instead

When would make things worse, we keep that negative signal.

→ We take a lower bound on the original objective with a penalty for having too large updates.

The final objective for PPO is usually something like:

is a value function loss, e.g. mean squared error:

is an entropy bonus to encourage exploration:

Note: We maximize the above objective. So with standard optimizers, we would minimize the negative of this.

Implementation Details

https://iclr-blog-track.github.io/2022/03/25/ppo-implementation-details/

https://github.com/vwxyzjn/ppo-implementation-details

Terminology / Flow:

- Collect trajectories using policy (in episodic or open-ended environments in parallel).

- Trajectory:

[(s, a, r, s+1, ...), ...]of length . - PPO epoch: Policy optimization epoch over minibatches (slices) of a trajectory.

- Iteration: One or more PPO epochs + optimizing policy using PPO on averaged values.

- Repeat.

Don’t reset environment every time you collect experiences; only when it’s actually done. Use a cyclic buffer where the last obs and dones will become the first obs of the next iteration.

→ We collect fixed-length trajectory segments, which allows us to optimize long or even infinite horizon problems.

A shorter trajectory length means more emphasis on bootstrapping.

Shuffle trajectory to prevent bias from ordering of experiences / highly correlated consecutive timesteps.

Not sure about the blog’s claim on mini-batch level normalization of advantages. For what I can tell everyone normalizes on batch level.

Some evidence that clipping value loss hurts perf.

gradient norm (l2) is clipped to 0.5; some evidence that it boosts perf

References

Very clean jax impl: https://github.com/keraJLi/rejax/blob/main/src/rejax/algos/ppo.py

DPPO (parkour mujoco): https://arxiv.org/pdf/1707.02286

https://pettingzoo.farama.org/tutorials/cleanrl/advanced_PPO/