Motivation

Cursor uses pure online VPG on acception + heuristics rewad to train their completion model and roll out a new one every few hours https://cursor.com/blog/tab-rl

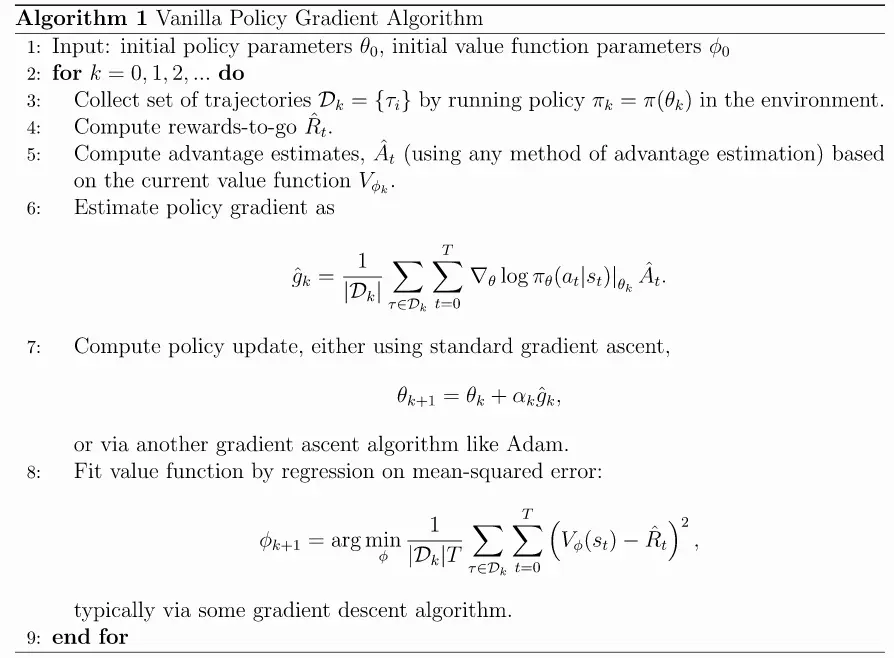

Spinnig Up: Intro to Policy Optimization | code

The goal of reinforcement learning is to find an optimal behavior strategy for the agent to obtain optimal rewards. The policy gradient methods target at modeling and optimizing the policy directly. The policy is usually modeled with a parameterized function respect to . The value of the reward (objective) function depends on this policy and then various algorithms can be applied to optimize for the best reward.

- it is on-policy

- can be used for both discrete and continuous action spaces

We have a stochastic, parameterized policy, and want to maximize the expected return , so we are going to optimize the policy parameters via gradient ascent:

The is just a very explicit notation, that the gradient is calculated with a specific set of parameters in time - I think.

, the gradient of the policy performance is called policy gradient.

Policy gradient algorithms are e.g. VPG, TRPO, PPO.

derivation for basic policy gradient

The Probability of a Trajectory given that actions ome from is

( is the start state distribution, see Introduction to RL)

The Log-Derivative Trick:

Transclude of derivative#log-derivative-trick

So we can write

For the Log-Probability of the Trajectory, we can then turn the products into sums:

The environment has no dependence on , so gradients of are zero.

Why? The actions directly influence the environment, but the network internal parameters do not directly influence the parameters. and can lead to the same action in some states, or even to the same policies. The environment only depends on its current state and the actions it receives to generate the next state:

The gradient of the log-probability of a trajectory is thus

Putting it together, we derive the following:

Derivation for Basic Policy Gradient

means that wer are integrating over all possible trajectories.

We estimate the expectation of the policy gradient with a set of trajectories, where each trajectory is obtained by letting the agent act in the environment using the policy :

where number of trajectories.

The reward function is defined as:

where is the stationary distribution of Markov chain for (on-policy sate d)

The expected finite-horizon undiscounted return is

trajectory

advantage function of current policy

(the log simplifies the gradient calculation, as it turns the product of probabilities into a sum)

The weights are updated via stochastic gradient ascent:

Policy gradient implementations typically compute advantage function estimates based on the infinite-horizon discounted return, despite otherwise using the finite-horizon undiscounted policy gradient formula.