year: 2025/04

paper: https://arxiv.org/pdf/2504.02639

website:

code:

connections: reservoir computing

Reservoir computing

In 2001 a fundamentally new approach to RNN design and training was proposed independently by Wolfgang Maass under the name of liquid state machines and by Herbert Jaeger under the name of echo state networks. This approach is now often referred to as the Reservoir Computing Paradigm.

Reservoir computing also has predecessors in computational neuroscience (see Peter Dominey’s work in section 3.1) and in machine learning, in the form of the Backpropagation-Decorrelation learning rule proposed by Schiller and Steil in 2005. As Schiller and Steil noticed, when RNNs are trained with BPTT (backpropagation through time), the dominant changes appear in the weights of the output layer, while the weights of the deeper layers converge slowly.

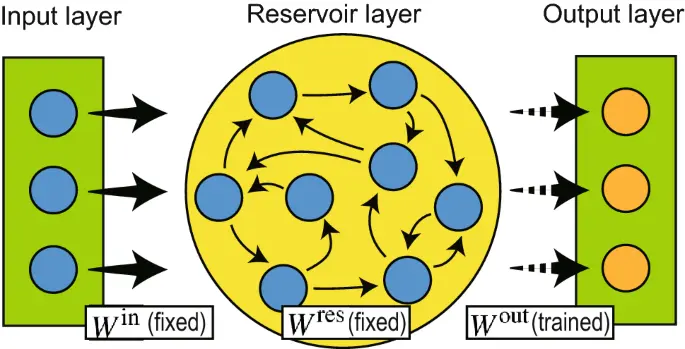

It is this observation that motivates the fundamental idea of Reservoir Computing: if only the changes in the output layer weights are significant, then the treatment of the weights of the inner network can be completely separated from the treatment of the output layer weights.
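
To make the separation concrete, here is a minimal sketch of an echo state network in which the input and recurrent weights are drawn once at random and then left fixed, and only the output weights are fitted. It assumes NumPy, tanh units, and a ridge-regression readout (a common choice for ESNs, not necessarily the one used in the paper); the helper names, the toy task (one-step-ahead prediction of a sine wave), and all sizes and scaling constants are hypothetical and purely illustrative.

```python
import numpy as np

def make_reservoir(n_res, n_in, spectral_radius=0.9, seed=0):
    """Random input and recurrent weights; these are never trained."""
    rng = np.random.default_rng(seed)
    w_in = rng.uniform(-0.5, 0.5, size=(n_res, n_in))
    w = rng.uniform(-0.5, 0.5, size=(n_res, n_res))
    # Common heuristic: rescale so the largest |eigenvalue| is below 1.
    w *= spectral_radius / np.max(np.abs(np.linalg.eigvals(w)))
    return w_in, w

def run_reservoir(w_in, w, inputs):
    """Drive the fixed reservoir with an input sequence; collect its states."""
    x = np.zeros(w.shape[0])
    states = []
    for u in inputs:
        x = np.tanh(w_in @ np.atleast_1d(u) + w @ x)
        states.append(x.copy())
    return np.array(states)

def train_readout(states, targets, ridge=1e-6):
    """The only learning step: ridge regression for the output weights."""
    n = states.shape[1]
    return np.linalg.solve(states.T @ states + ridge * np.eye(n),
                           states.T @ targets)

# Hypothetical toy task: predict the next sample of a sine wave.
t = np.arange(0, 60, 0.1)
signal = np.sin(t)
w_in, w = make_reservoir(n_res=100, n_in=1)
states = run_reservoir(w_in, w, signal[:-1])
washout = 100  # discard the initial transient before fitting
targets = signal[1:][washout:]
w_out = train_readout(states[washout:], targets)
pred = states[washout:] @ w_out
mse = np.mean((pred - targets) ** 2)
```

Because the reservoir is never updated, training reduces to a single linear solve. Fitting a logistic regression on the same collected states instead would give a linear classifier readout.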

The Echo State Network (ESN) method, created by Herbert Jaeger and his team, is one of the two pioneering reservoir computing methods.

Jaeger and his team observed that if an RNN possesses certain behavioral properties (separability and the echo state property), it is possible to achieve high classification performance on practical applications simply by learning a linear classifier on the readout nodes, for example using logistic regression. The untrained nodes of the RNN form what is called the dynamical reservoir, which is where the name Reservoir Computing comes from. The name "echo state" comes from the input values echoing throughout the states of the reservoir due to its recurrent nature. Because ESNs are motivated by machine learning theory, they usually use sigmoid neurons rather than more complicated, biologically inspired models.
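
The echo state property means that, after enough input, the reservoir state is determined by the input history rather than by the initial state. A simple numerical check is to drive two copies of the same reservoir, started from different states, with the same input and watch their distance shrink. This sketch assumes NumPy and tanh units, and scales the recurrent matrix so its largest singular value is 0.9; since tanh is 1-Lipschitz, this makes the state update a contraction, which is a known sufficient condition for the echo state property. In practice, scaling the spectral radius below 1 is the more common (heuristic) choice. The reservoir size and input distribution are illustrative.

```python
import numpy as np

def step(w_in, w, x, u):
    """One reservoir update with a scalar input u and tanh units."""
    return np.tanh(w_in * u + w @ x)

rng = np.random.default_rng(1)
n = 50
w_in = rng.uniform(-1, 1, size=n)
w = rng.normal(size=(n, n))
# Largest singular value 0.9 => ||x_a' - x_b'|| <= 0.9 * ||x_a - x_b||.
w *= 0.9 / np.linalg.norm(w, 2)

inputs = rng.uniform(-1, 1, size=300)
x_a = rng.uniform(-1, 1, size=n)  # two different initial states
x_b = rng.uniform(-1, 1, size=n)
gaps = []
for u in inputs:
    # Both copies see exactly the same input sequence.
    x_a = step(w_in, w, x_a, u)
    x_b = step(w_in, w, x_b, u)
    gaps.append(np.linalg.norm(x_a - x_b))
```

The gap shrinks by at least a factor of 0.9 per step, so the initial condition is "washed out" and only the echo of the input remains.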

Liquid State Machines (LSMs) are the other pioneering reservoir computing method, developed by Wolfgang Maass simultaneously with, and independently of, echo state networks.

Because they originate in computational neuroscience, LSMs use more biologically realistic models of spiking integrate-and-fire neurons and dynamic synaptic connections, with the aim of understanding the computing power of real neural circuits. Accordingly, LSMs also use biologically inspired topologies for the neuron connections in the reservoir, in contrast to the random connections of ESNs. However, creating a network that mimics the architecture of the cerebral cortex requires some engineering of the weight matrix, and simulating spiking neurons is slow and computationally intensive: each neuron is itself a dynamical system in which electric potential accumulates until the neuron fires, leaving a trail of activity bursts.
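
The spiking dynamics described here (potential accumulating until the neuron fires, then resetting) can be illustrated with a single neuron. This is a minimal sketch assuming the leaky integrate-and-fire variant, integrated with forward Euler in dimensionless units; the function name and all parameter values are hypothetical. It models one neuron with no synapses or network, so it only shows why spiking simulation proceeds in many small time steps per neuron, compared with a single tanh evaluation per step in an ESN.

```python
def simulate_lif(current, dt=0.1, tau=10.0, v_rest=0.0,
                 v_thresh=1.0, v_reset=0.0, r=1.0):
    """Leaky integrate-and-fire neuron (forward Euler).

    Returns (voltage trace, spike times)."""
    v = v_rest
    trace, spikes = [], []
    for i, i_ext in enumerate(current):
        # The potential integrates the input and leaks back toward v_rest.
        v += dt * (-(v - v_rest) + r * i_ext) / tau
        if v >= v_thresh:
            # Threshold crossed: record a spike and reset the potential.
            spikes.append(i * dt)
            v = v_reset
        trace.append(v)
    return trace, spikes

steps = 1000
# Input strong enough that the resting level (r * 1.5) exceeds threshold:
# the neuron fires repeatedly.
trace_on, spikes_on = simulate_lif([1.5] * steps)
# Subthreshold input: the potential settles near 0.9 and never fires.
trace_sub, spikes_sub = simulate_lif([0.9] * steps)
# No input: the potential stays at rest.
trace_off, spikes_off = simulate_lif([0.0] * steps)
```

With these settings, the constant suprathreshold input produces a regular spike train (one spike roughly every 11 time units), while the two weaker inputs produce none.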

Both of these facts make the LSM method of reservoir computing more complicated to implement, and LSMs have not commonly been used for engineering purposes.