Blogpost which first made this connection obvious.

Successive generalizations influenced by *Why Greatness Cannot Be Planned*.

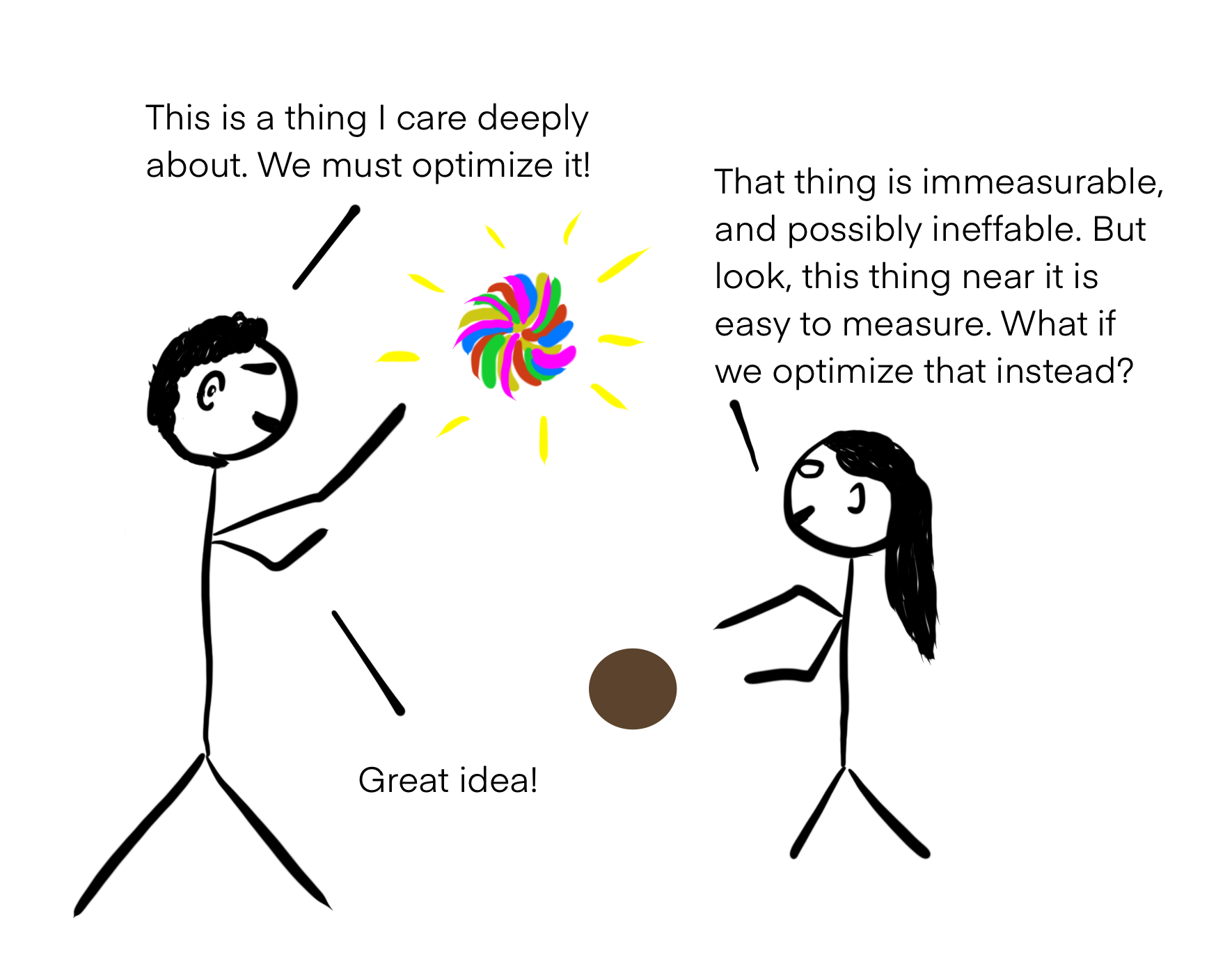

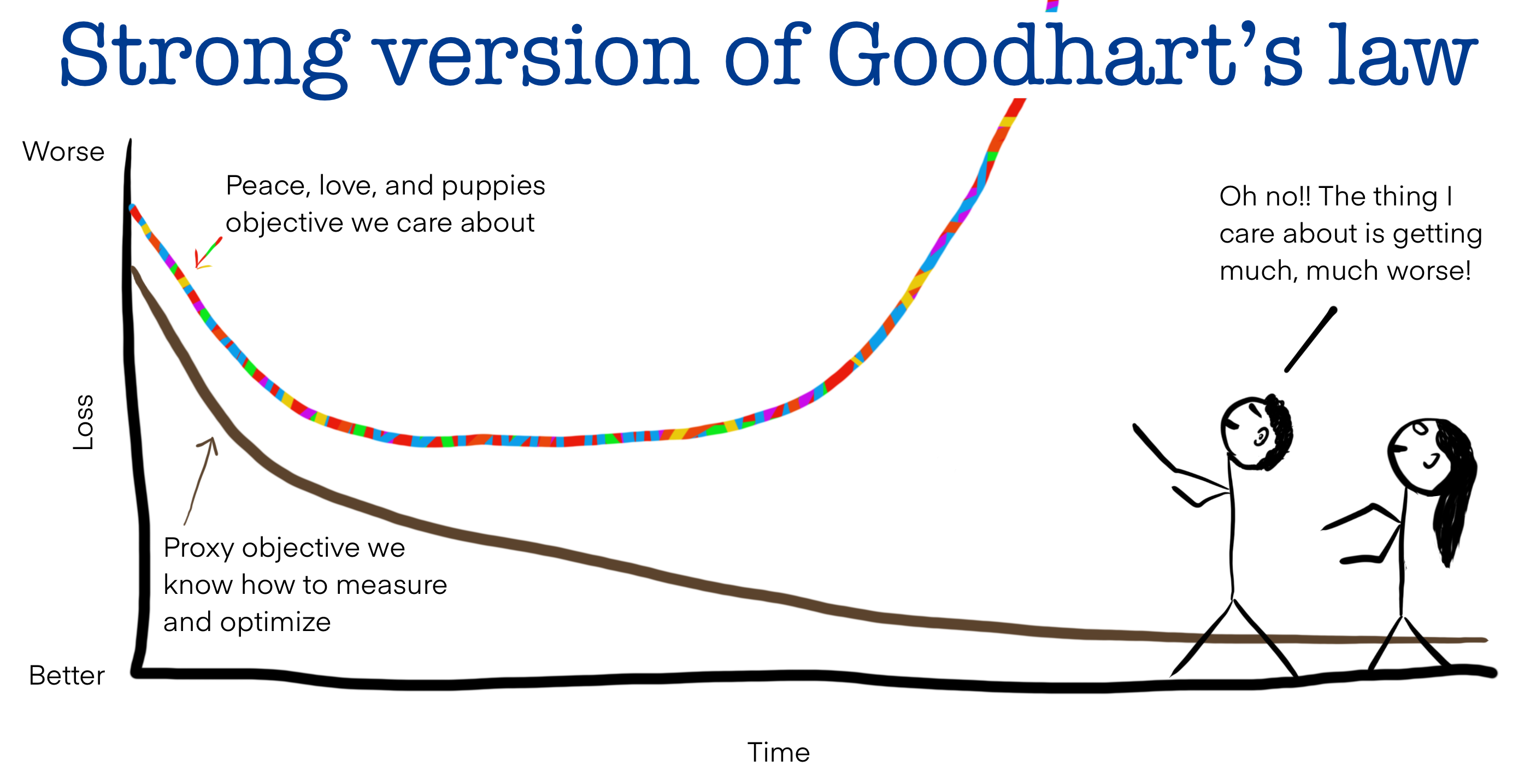

The strong version of Goodhart’s law: When a measure becomes a target, if it is effectively optimized, then the thing it is designed to measure will grow worse.

Setting objectives can actually be bad for creative achievement, innovation, or even achieving the objective itself that you wanted to achieve. It can get in the way because it closes your mind to all the other things that could lead in different directions that would actually be opportunistic for you. In other words, it can be deceptive to set an objective.

And we did a lot of research in AI that showed this algorithmically. But then we wrote this book for general audiences, not AI audiences—although it was meant to be interesting to AI audiences as well. We were hoping other people could appreciate it because we started to believe—Joel Lehman and I, my co-author who worked with me on novelty search—we started to believe that the lessons there aren’t just algorithmic, but are actually social.

In other words, they apply to the way we run institutions, the way people run their individual lives, the way we run educational systems, the way we run businesses and investment—everything. Because everything in this world is saturated in objectives, including, by the way, social networks. They are extremely objectively driven. They’re based on maximization principles: you maximize likes or you maximize follows, you try to maximize exposure by maximizing attention, and then you get more, and it’s reinforced. It’s very objective.

So it reflects a widespread, ubiquitous culture, which is worldwide, which is really interesting. It’s just like we all believe in this idol of objectives, that it should guide everything that you do. The book is arguing that there are other incentives and other gradients that you should follow, like especially interestingness. Not knowing where you’re going to end up, but knowing that this path looks really interesting for independent reasons because it opens up a whole new playground of possibilities, even though I don’t know what the payoff will be. That is very important to the advance of civilization, but it is not recognized in the way that we institutionalize everything that we do.

And so, the algorithmic insight led to this kind of social critique view, and that is why we wrote this book. Because we wanted to—we thought this is a big problem. Granting and funding agencies run on objectives. This is probably the most salient thing for us because we were researchers. So when we have to ask for money to do research, we have to tell them what our objective is, and we’d be evaluated based on the objective and whether it’s worth funding the research. This is completely backwards if what I’m saying is actually true, which I’m very confident that it is.

… It’s not just science funding; it’s investing, it’s the way that we run the country, the way we run education … I became the worldwide focal point for people who don’t like the fact that everything in the world is objectively driven. It’s like I was the place you go to complain about the system. And everybody thinks they’re in this system. I mean, I shouldn’t say everybody. Of course, there are some people who are just invested in objectives, but you’d be surprised how few that is. I thought at first the book would be very polarizing and that there would be these two sides and it would be super controversial. But I rarely meet someone who really is willing to stick up for objectives. That’s actually quite unusual.

So the book seems to be a relatively popular message that most people agree with. But it’s very paradoxical because a lot of the people who I talked to are people who are literally perpetuating this objective system, and they actually hate it, you know? So I started to realize this. But the reason that they perpetuate the system is because everybody feels like they’re locked into it because of the next level up. So you could talk to somebody who’s running some billion-dollar government federal lab or something, and they allocate money very objectively to projects. But if I talk to that person, they’re like, “I hate the way this whole system works. We would love to change things like the way that your book describes, but we answer to Congress, or we answer to an executive, or we answer to our investors.” It depends what the organization is, but everybody feels like they answer to someone, and the someone they answer to is objectively oriented, and so there’s no way to just do something radical and tamp down those objectives.

And so I got to meet people at all the levels—at the top levels, the bottom levels, everybody affected by this system—and they all hate it. They’re all sick of it. And even in their personal life, they feel like they can’t actually just do things because they want to just do them because they’re fun or to explore. They have to justify everything they do. This is particularly true of adults. The younger you go, the less this is true, which is one of the sad things. If you’re five years old, this is not true. You just go to the playground and you do whatever you want; you don’t care what it’s going to do for payoff. But this is basically sucked out of us over the course of our education because the education system is extremely objective.

So I met people from all levels. I would meet diverse walks of life and also diverse age groups. I’d meet people like artists and doctors and retirement planners and military planners. I’ve met such a diversity of people. I met everything from the most senior person to a 14-year-old high school student. And the 14-year-old, I actually met because his grandmother found me from hearing some of my stuff and asked me if I would come talk to her grandson because he’s too obsessed with objectives.

It would take a conscious mass movement, a phase-transition in our collective thinking and organization (→ collective decision making) to change this.

Our civilization in its current form is a paperclip maximizer. We are taking the diversity, richness, complexity and potential of our planet and turning it into landfill. Per default, our society is headed for collapse and our future looks bleak. – Joscha Bach

The root strong version of Goodhart’s law in today’s society

Countless examples are in the blogpost, but the root cause of all of them, the common “root proxy objective” that our current system optimizes for, is profit.

In the early periods of capitalism, the free market and its profit objective led to a massive increase in the rate of societal and technological progress.

But obviously this stopped many decades ago. Sure, the rate of progress is still increasing, but it falls further and further behind the theoretical maximum that our current technological capabilities would allow (“Capitalism cannot develop the productive forces anymore. It has long outlived its progressive phase.”).

Psychological effects

When we overly fixate on maximizing a single factor, we tend to ignore other ones or downplay them.

“Weeelll maybe something should be done, but it is too complex to model”

Slowdown of cultural progress & convergence to already agreed upon standards.

During neural network training, we usually optimize proxy objectives (loss instead of accuracy) on proxy datasets (the training set instead of the test set).
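The gap between a proxy and the real goal can be sketched with a toy example. This is only an illustration under stated assumptions: it swaps the neural network for simple curve fitting, the data is made up (a straight line with a deterministic ±0.5 "noise"), and the helper names `fit_line`, `fit_interpolant`, and `mse` are hypothetical. The proxy is the error on the training points; the real goal is the error on held-out points.

```python
# Toy illustration (assumed setup, not taken from the blogpost):
# proxy = error on training points, real goal = error on held-out points.

def fit_line(xs, ys):
    """Least-squares straight line: the proxy is only modestly optimized."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return lambda x: slope * x + intercept

def fit_interpolant(xs, ys):
    """Lagrange polynomial through every training point: proxy driven to zero."""
    def predict(x):
        total = 0.0
        for j, (xj, yj) in enumerate(zip(xs, ys)):
            basis = 1.0
            for m, xm in enumerate(xs):
                if m != j:
                    basis *= (x - xm) / (xj - xm)
            total += yj * basis
        return total
    return predict

def mse(model, xs, ys):
    """Mean squared error of a model on a dataset."""
    return sum((model(x) - y) ** 2 for x, y in zip(xs, ys)) / len(xs)

# Training set: y = x plus a deterministic +/-0.5 measurement "noise".
train_x = [float(i) for i in range(10)]
train_y = [x + (0.5 if i % 2 == 0 else -0.5) for i, x in enumerate(train_x)]

# Test set: noise-free points halfway between the training points.
test_x = [i + 0.5 for i in range(9)]
test_y = list(test_x)

line = fit_line(train_x, train_y)
interp = fit_interpolant(train_x, train_y)

# Map model name -> (train MSE, test MSE).
results = {
    "line": (mse(line, train_x, train_y), mse(line, test_x, test_y)),
    "interpolant": (mse(interp, train_x, train_y), mse(interp, test_x, test_y)),
}

for name, (train_err, test_err) in results.items():
    print(f"{name:12s} train MSE = {train_err:.4f}   test MSE = {test_err:.4f}")
```

The interpolant wins on the proxy (zero training error) while doing far worse than the plain line on the held-out points, which is the pattern the strong version of Goodhart’s law describes: optimizing the measure too effectively makes the thing it was meant to measure worse.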

For concrete examples, see:

Examples of capitalism not being the most efficient system for producing what we need

Goodhart’s Law

- https://geohot.github.io//blog/jekyll/update/2025/02/19/nobody-will-profit.html

- https://geohot.github.io/blog/jekyll/update/2025/07/05/are-we-the-baddies.html