See also:

Consciousness as a coherence-inducing operator - Consciousness is virtual

Machine Consciousness - From Large Language Models to General Artificial Intelligence - Joscha Bach

We are not just goal directed, but also goal-finding systems

Bach’s opinion on consciousness - X

I currently think that consciousness may be a learning algorithm for a self-organizing information processing system, a colonizing pattern that entrains itself on a brain once it is discovered early in development. This is an unusual hypothesis and thus not unlikely to be wrong.

Perhaps we can think of consciousness in similar terms to the principle of government in human society. A primitive government is bullying people based on a personal reputation system and does not scale beyond the tribal level. Scalable governments require “recursive bullying”.

Recursive governments are colonizing by imposing order that can harvest more energy than it costs to maintain the administration. Once the principle of recursive bullying is discovered, it spreads until it meets another government with similarly efficient implementation.

Efficient implementation of governance requires a shared language, a coherent model of reality, a universal reward system and mechanisms for learning and error correction. The same things may be true for a mind, established by a consciousness that unifies it.

This perspective on consciousness makes a number of predictions that may be wrong:

1.) Consciousness is not caused by a single specific circuit in the brain and can exist in many brain architectures (but forms specific circuitry that leads to loss of consciousness when disrupted).

2.) Consciousness is a software, a causal pattern. It is not bound to particular chemistry or cellular mechanisms, and can in principle be recreated in artificial systems. It may even exist in plants, if plants can be shown to have coherent enough information processing.

3.) Consciousness may have non-local and superpositional properties, similar to quasiparticles, but its physics is classical, not quantum. This prediction is based on mental processes being observably slow, and quantum error correction being unlikely in biological organisms.

4.) Consciousness is discovered independently in every brain, and tied to its development. (This predicts that all animals gain consciousness very early in life, and we won’t see fully developing brains in permanently vegetative subjects.)

5.) Consciousness is necessary but not sufficient for developing self-modeling and subjective experience. (There will be subjects that are conscious but have no self-model, but there will be no subjects that have a self-model and are not conscious.)

6.) Consciousness is not necessarily tied to any particular contents beyond self-reflexivity (second-order perception) and nowness (coherent region of representation in a perceptual or analytical model). Consciousness is not a specific story or narrative.

7.) Unlike a biological ur-cell, consciousness is simple. This means it should be possible to discover it via searching for learning operators in self-organizing AI paradigms, rather than requiring a fully formed ur-consciousness in a biological organism.

My contention that consciousness is simple may be wrong; perhaps consciousness is as difficult as a cell, and can only form in organisms by migrating from existing organisms? (Note that new cells don’t appear, cells always divide.) Perhaps consciousness is even tied to the cell?

It is also conceivable that consciousness is a biological software pattern that forms first on a global scale, and then manifests in organizations of cells. Consciousness could even originate below the biological level. Many panpsychists believe this, but I don’t know any

My hypothesis on the nature of consciousness as a self-organizing, coherence-optimizing learning algorithm has the benefit of being fully testable in an AI paradigm, but its formulation is still at an early stage, which makes it a highly speculative idea.

I think we need to test this idea outside of a business context, and propose the formation of the California Institute for Machine Consciousness. I may need your support for this: organization, funding and engineering. Please watch this space: https://t.co/KYSNFptidj

Joscha Bach: Artificial Consciousness and the Nature of Reality | Lex Fridman Podcast #101

The universe is a computation and everything is functions.

Pi in classical mathematics is a value. But it is also a function.

But a function is only a value if you can compute it. And as you can’t compute the last digit of pi, you only have a function.

There can be no physical process in the universe which depends on having known the last digit of pi.
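The pi example can be made concrete with a digit generator: as a function, pi is a procedure you can keep asking for the next digit, and there is no point at which it hands you a finished value. A minimal sketch in Python, assuming Gibbons’ unbounded spigot algorithm (a standard technique swapped in here for illustration; Bach does not name one):

```python
from itertools import islice

def pi_digits():
    """Yield the decimal digits of pi forever (Gibbons' unbounded spigot).

    The generator never terminates: there is no 'last digit' to reach,
    only a procedure that can be asked for the next one.
    """
    q, r, t, k, n, l = 1, 0, 1, 1, 3, 3
    while True:
        if 4 * q + r - t < n * t:
            # The next digit n is now certain: emit it and rescale the state.
            yield n
            q, r, t, k, n, l = (10 * q, 10 * (r - n * t), t, k,
                                (10 * (3 * q + r)) // t - 10 * n, l)
        else:
            # Not enough precision yet: fold in one more term of the series.
            q, r, t, k, n, l = (q * k, (2 * q + r) * l, t * l, k + 1,
                                (q * (7 * k + 2) + r * l) // (t * l), l + 2)

first_ten = list(islice(pi_digits(), 10))
print(first_ten)  # [3, 1, 4, 1, 5, 9, 2, 6, 5, 3]
```

Any physical process can only ever consume a finite prefix of this stream, which is the sense in which nothing can depend on "the last digit".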

The state of AI research.

Today’s thinkers in AI don’t have interesting opinions, or their ideas are inconsequential, because what they are doing is applying the state of the art (SOTA) plus a small epsilon. That is a good idea if you think this is the best way to make progress.

He arrives at the exact same conclusion I arrived at when Eric convinced me 2 years ago that the current paradigm will lead to AGI and is the fastest way to progress…

But that’s first of all extremely boring. If somebody else can do it, why shouldn’t I relax at the beach and read a good book? But if you don’t think that we are currently doing the right thing and are missing some perspectives, it is necessary to think outside of the box but also to understand the boxes! (What worked, what didn’t and for what reasons?)

(not good career advice obviously haha)

General intelligence is the result of the necessity to solve general problems.

By itself intelligence is just the ability to make models.

For him, idealism and materialism are not a dichotomy but two different aspects of the same thing, since “We don’t exist in the physical world but we do exist inside a story that the brain tells itself”.

(somewhat cringe take, not regarding the story of the brain, but regarding the connection to idealism)

The brain or individual neurons can not feel anything. Physical systems cannot experience anything. But it would be very useful to know what it is like to feel like a person, etc. So the brain creates a simulacrum.

There is a self in this virtual world within your context, virtual in the sense that you cannot pinpoint where exactly this self is. Obviously it is nothing immaterial, but it is an emergent property.

And what we are seeing is not a 1:1 copy of the real world. It is just an interpretation of the patterns of sensory input we get.

This representation of the world can be compared to the Mandelbrot fractal:

Imagine you live inside this fractal. You have no idea where you are in the fractal or how it is generated. All you see is a little spiral and that it goes to the right…

We have an accurate but incomplete experience and model of the infinite world.

There are actually no spirals in the Mandelbrot fractal. It is just a representation we have in 2D at a certain resolution. And at some point the spirals disappear and we have a singularity.
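The fractal analogy is easy to reproduce. A point c belongs to the Mandelbrot set if iterating z → z² + c from z = 0 stays bounded; any picture of it is a finite window, at a finite resolution, with a finite iteration budget. A minimal sketch; the window bounds, resolution and budgets below are arbitrary choices:

```python
def escape_time(c: complex, max_iter: int = 100) -> int:
    """Iterate z -> z**2 + c starting from z = 0.

    Returns the step at which |z| exceeds 2 (the orbit diverges, so c is
    outside the set), or max_iter if the orbit stayed bounded for the
    whole budget (c is treated as inside).
    """
    z = 0j
    for i in range(max_iter):
        z = z * z + c
        if abs(z) > 2:
            return i
    return max_iter

def render(width=60, height=24, max_iter=50):
    """ASCII view of one finite window at one finite resolution (arbitrary bounds)."""
    rows = []
    for y in range(height):
        im = 1.2 - 2.4 * y / (height - 1)
        row = ""
        for x in range(width):
            re = -2.1 + 2.7 * x / (width - 1)
            row += "#" if escape_time(complex(re, im), max_iter) == max_iter else "."
        rows.append(row)
    return "\n".join(rows)

print(render())
```

Raising max_iter can turn points near the boundary that looked inside into escaping ones, which is one concrete sense in which the rendered model is accurate but incomplete.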

Then you can come up with 30 layers of laws that explain some patterns and arrive at a reasonably predictive description of the world. However, this does not cut to the core and explain how that universe is created and how it actually works.

@42:20 Can we get behind this generator function? “If you have a Turing machine with enough memory, you’d arrive at the conclusion that it must be some kind of automaton and just enumerate all of them which could produce your reality”
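For a toy universe, the enumeration in that quote can actually be carried out. A minimal sketch, assuming the candidate generators are restricted to the 256 elementary cellular automata (an assumption made only so the search space is finite): run every rule from the observed initial state and keep the ones that reproduce the observed history.

```python
def step(cells, rule):
    """One synchronous update of an elementary cellular automaton.

    `rule` is a Wolfram rule number (0-255): bit k of the rule is the new
    state for a neighbourhood whose (left, centre, right) bits encode k.
    Boundaries wrap around.
    """
    n = len(cells)
    return tuple(
        (rule >> (cells[(i - 1) % n] << 2 | cells[i] << 1 | cells[(i + 1) % n])) & 1
        for i in range(n)
    )

def run(rule, initial, steps):
    history = [tuple(initial)]
    for _ in range(steps):
        history.append(step(history[-1], rule))
    return history

def candidate_generators(observed):
    """Enumerate every rule and keep those that reproduce the observed history."""
    initial, steps = observed[0], len(observed) - 1
    return [rule for rule in range(256) if run(rule, initial, steps) == observed]

# Toy "reality": a short observed history, secretly produced by rule 90.
initial = (0,) * 7 + (1,) + (0,) * 7
observed = run(90, initial, 6)
print(candidate_generators(observed))
```

The search returns more than one rule, rule 90 among them: this short observation never exercises some neighbourhoods (two adjacent live cells), so the data underdetermine the generator.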

Some people think a simulation can’t be conscious and only a physical system can. But they’ve got it completely backwards: a physical system can’t be conscious, only a simulation can be conscious; consciousness is a simulated property of the simulated self.

He’s saying that our minds are puppets.

The brain is writing a story, a model of what the person would feel if it existed, like characters in a book.

Huh, but what is the difference between this “simulation” and that “person” actually existing?

Part of the brain is tasked with modelling what other parts of the brain are doing.

TODO confront sandro with this (What did I mean by this? Was it the argument he made abt the calculator not being self-aware? Which is… true, and likely also not the case for llms, tho I’m not up to speed on the latest interpretability work, but there’s absolutely no reason to assume this can’t be achieved artificially. the opposite. very concrete plans…)

Parts of the world that are not deterministic are not long-lived.

Phenomena that endure give rise to stable dynamics. Anything complex in the world is the result of some control/feedback that is stable around certain attractors.

So the region of the universe, which allows for complex structure must be highly controlled / controllable and allow for interesting symmetries and symmetry-breaks that allow for the creation of structure.

TODO he also mentions this here

Capitalism is such a divergent system. !! todo link this thought w/ economics and dialectics of attractors

Obv. he says universe first, minds later, otherwise no causality.

What happens if someone dies? “The implementation of that person ceases to exist”. Fair enough. But the implementation of a person is not only centered around the brain, but the entire body and all that is involved. So sure, if you spend a lot of time with your kids and teach them your way of thinking, a part of you(r implementation/software; your traits and behaviours) will live on.

But Joscha takes it to the extreme and says that “the Dalai Lama identifies as a form of government, so if the next one does so too, the Dalai Lama(‘s implementation / “governmental software”) lives on; I don’t have an identity beyond the one I construct”, which is kinda ridiculous, right?

But I like the thought of (as Hasan put it in the introduction to Engels’ “The Part Played by Labour in the Transition from Ape to Man”) “innovations accumulating through time”. Consciousness evolving as a collective, being negated through death and coming back at a higher stage, preserving some of the old in a new person, which explains its foundation in society (which Dan Morley emphasized to me on the WC).

Just like the proletarian movement and thoughts of Marx and Engels living on in the revolutionary party.

So what he is saying is that consciousness is the function of a brain module which reflects on its own sensory inputs and creates a persona around them, so the brain is not only forming abstractions (models) of the external world but also a model of itself (and the body).

So in that sense, consciousness is self-reflection. But we need to distinguish between reflection and self-reflection.

The ability to make good predictive models of the world is intelligence.

(Which makes it easy to argue that intelligence and goals are largely independent of each other (orthogonality thesis).)

So the example Sandro made: “A calculator is reflecting, so it is to some degree conscious” … is not accurate. The calculator has a very simple model of the world, it is reflecting a tiny fraction of the infinite complexity of the world (mandelbrot set example), but it is not reflecting on itself, its purpose, inner workings, …

But again, Joscha kinda overextends his theory, basically saying the mind is not real and you can just assume an arbitrary identity by assuming agency over it, hence it is just a software construct… Obviously not. Obviously this is limited by the underlying material conditions of your experience. Obviously it is strongly tied to the body, the environment, …

Consciousness is the mechanism which has evolved for a certain type of learning. A model of the contents of your attention (states of different parts of your brain).

Colors and sounds are types of representations you get if you want to model reality with oscillators (colors form circles of hues; sounds having harmonics is a result of synchronizing oscillators in the brain).

The world that we subjectively interact with is fundamentally the result of the brain’s interpretation mechanisms. These representations are universal to a certain degree, as there are regularities you can discover in the patterns.

When looking at, e.g., a table, we only look at the aggregate dynamics.

Geometry that we are interacting with is the result of discovering those operators that work in the limit. You get them by building an infinite series that converges. Where it converges it is geometry, where it doesn’t it’s chaos.

He’s talking about the reality of the brain. But we as humans are able to generalize, interact with and intentionally modify and measure objective reality with modern technology in ways that exceed our basic senses, allowing us to make closer and closer approximations to true (objective) reality.

STOPPED AT 56:29

biologically inspired:

What are ANNs? Chains of weighted sums with non-linearities, which learn by piping an error signal backwards through these chained layers and adjusting the individual weights.

“The error is piped backwards till it accumulates at certain junctures (where you had the actual error) in the network (everything else evens out statistically).” So you only make the changes at these junctures, where you had the actual error, which is a very slow process for which our brains don’t have time.
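The "chains of weighted sums with non-linearity" description maps directly onto a textbook two-layer network trained with backpropagation. A minimal NumPy sketch on XOR (task, layer sizes, learning rate and step count are arbitrary choices). Note that in this standard version every weight receives an exact gradient on every step; Bach’s "accumulates at certain junctures" is his characterization and is not modeled here.

```python
import numpy as np

def train_xor(hidden=4, lr=0.5, steps=5000, seed=0):
    """Two chained layers (weighted sum -> non-linearity), trained by piping
    the error signal backwards through them (backpropagation) on XOR."""
    rng = np.random.default_rng(seed)
    X = np.array([[0, 0], [0, 1], [1, 0], [1, 1]], dtype=float)
    y = np.array([[0], [1], [1], [0]], dtype=float)
    W1, b1 = rng.normal(0.0, 1.0, (2, hidden)), np.zeros(hidden)
    W2, b2 = rng.normal(0.0, 1.0, (hidden, 1)), np.zeros(1)
    losses = []
    for _ in range(steps):
        # Forward pass: weighted sum -> tanh, weighted sum -> sigmoid.
        h = np.tanh(X @ W1 + b1)
        out = 1.0 / (1.0 + np.exp(-(h @ W2 + b2)))
        losses.append(float(np.mean((out - y) ** 2)))
        # Backward pass: chain rule, layer by layer, starting from the output error.
        d_z2 = 2.0 * (out - y) / len(X) * out * (1.0 - out)
        d_W2, d_b2 = h.T @ d_z2, d_z2.sum(axis=0)
        d_z1 = (d_z2 @ W2.T) * (1.0 - h ** 2)
        d_W1, d_b1 = X.T @ d_z1, d_z1.sum(axis=0)
        # Every weight is nudged against its own gradient.
        W1 -= lr * d_W1
        b1 -= lr * d_b1
        W2 -= lr * d_W2
        b2 -= lr * d_b2
    return losses, out

losses, out = train_xor()
print(f"loss {losses[0]:.3f} -> {losses[-1]:.3f}")
print(out.round(2).ravel())
```

Thousands of full passes for a four-example problem is a small illustration of the "very slow process" point.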

What the brain does instead:

Pinpointing the probable region of where we can make an improvement and store this binding state together with an expected outcome in a protocol…

Indexed learning with the purpose of learning to revisit commitments later requires memory of contents of attention. 1

If I make a mistake in constructing my interpretation of the world (e.g., mistaking a shadow or reflection for an animal), I need to be able to point out which features of my perception gave rise to the present construction of the perceived reality.
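One way to read the protocol idea above in code: at decision time, log which features were bound in attention together with the expected outcome; when the actual outcome arrives, revisit that entry and adjust only those features. A minimal sketch; the class names, feature labels and additive update rule are hypothetical and not Bach’s implementation:

```python
from dataclasses import dataclass, field

@dataclass
class Commitment:
    attended: tuple      # features bound in attention when the commitment was made
    interpretation: str  # what the system decided it was perceiving
    expected: float      # outcome it expected from that interpretation

@dataclass
class AttentionProtocol:
    lr: float = 0.5
    weights: dict = field(default_factory=dict)  # (feature, interpretation) -> evidence weight
    log: list = field(default_factory=list)      # the "protocol" of past commitments

    def commit(self, attended, interpretation, expected):
        """Record the binding state together with the expected outcome."""
        self.log.append(Commitment(tuple(attended), interpretation, expected))
        return len(self.log) - 1  # index used to revisit this commitment later

    def revisit(self, index, observed):
        """Compare the actual outcome with the expectation and adjust only
        the features that were in attention when the commitment was made."""
        c = self.log[index]
        error = observed - c.expected
        for feature in c.attended:
            key = (feature, c.interpretation)
            self.weights[key] = self.weights.get(key, 0.0) + self.lr * error
        return error

p = AttentionProtocol()
i = p.commit(["dark_shape", "elongated"], "animal", expected=1.0)
# Later it turns out to have been a shadow: the outcome contradicts the expectation.
p.revisit(i, observed=0.0)
print(p.weights)  # {('dark_shape', 'animal'): -0.5, ('elongated', 'animal'): -0.5}
```

Features that were not in attention at commitment time are never touched, which is what makes the update cheap compared to adjusting everything.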

And this attention mechanism is self-reflective / reflexive:

- Need to train what it pays attention to.

- Pay attention whether it is paying attention itself (Gets trained by the same mechanism)

- Attention lapses if you don’t pay attention to the attention

- Periodic loop: Am I still paying attention? Or am I daydreaming?

- That’s where we wake up. Becoming aware of the contents of the own attention and making attention the object of attention.
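The role of the periodic “am I still paying attention?” loop can be illustrated with a toy simulation. Assumptions (all hypothetical): first-order attention drifts away with a fixed probability per step and never returns on its own, and a second-order check at fixed intervals notices the lapse and refocuses.

```python
import random

def simulate(steps=200, p_drift=0.1, check_every=None, seed=0):
    """Fraction of time steps spent on-task in a toy attention model.

    Each step, attention may drift away (daydreaming). Without a meta-level
    check it never comes back by itself (a modeling assumption); with one,
    every `check_every` steps the system asks "am I still paying attention?"
    and refocuses.
    """
    rng = random.Random(seed)
    on_task, count = True, 0
    for t in range(steps):
        if check_every and t % check_every == 0:
            on_task = True   # attention to attention: notice the lapse, refocus
        if rng.random() < p_drift:
            on_task = False  # first-order attention drifts
        count += on_task
    return count / steps

print("no meta-attention:   ", simulate())
print("check every 10 steps:", simulate(check_every=10))
```

Both runs consume the same random draws, so the version with the meta-level check is on-task at least as often as the one without.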

What is the difference between Transformer attention and the brain’s?

- Periodic loop: Am I still paying attention? Or am I daydreaming?

- Attention lapses if you don’t pay attention to the attention

It does not understand that everything it says has to refer to the same universe (i.e. it is not in constant feedback with the real world / learns to model that world in a static way).

Transformer attention does not go beyond tracking identity (i.e. relation of words / different words with the same meaning, identity of some object / agent).

What it learns is aspects / relationships of language (a projection of a conceptual representation (an evolving scene in the real world) into discrete string of symbols).
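For comparison, this is the core operation behind Transformer attention, scaled dot-product attention, softmax(QKᵀ/√d)V. A minimal single-head NumPy sketch without learned projections or masking (simplifications, not a full Transformer layer): the weights are recomputed from token similarities on every forward pass, and nothing in the operation checks whether attention itself has lapsed, which is the brain-side property listed above.

```python
import numpy as np

def scaled_dot_product_attention(Q, K, V):
    """softmax(Q K^T / sqrt(d_k)) V for a single head, no mask."""
    d_k = Q.shape[-1]
    scores = Q @ K.T / np.sqrt(d_k)
    scores -= scores.max(axis=-1, keepdims=True)  # numerical stability
    weights = np.exp(scores)
    weights /= weights.sum(axis=-1, keepdims=True)  # each row is a distribution
    return weights @ V, weights

rng = np.random.default_rng(0)
tokens = rng.normal(size=(5, 8))  # 5 token embeddings, 8 dims (made-up data)
out, w = scaled_dot_product_attention(tokens, tokens, tokens)
print(w.round(2))
```

Each row of `w` says how much one token draws on every other token, a relation between symbols within one sequence rather than a check against a shared world.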

Models of the world:

TODO … See Boltzmann machines !!! The kind of mechanism we want to develop is something that has distinct ways of training a Boltzmann machine and making the states of the Boltzmann machine settle…
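A sketch of what “making the states settle” means: a Boltzmann machine relaxes towards low values of the energy E(s) = -½ sᵀWs. The sketch below uses the zero-temperature limit, where the stochastic update becomes deterministic and the network is a Hopfield network (a simplification swapped in for brevity, with no hidden units and no Boltzmann learning rule). It stores one pattern with a Hebbian rule and lets a corrupted version settle back to it.

```python
import numpy as np

def store(patterns):
    """Hebbian weights: units that agree in a stored pattern get positive weights."""
    P = np.array(patterns, dtype=float)
    W = P.T @ P / P.shape[1]
    np.fill_diagonal(W, 0.0)  # no self-connections
    return W

def energy(W, s):
    return -0.5 * s @ W @ s

def settle(W, s, max_sweeps=10):
    """Asynchronous zero-temperature updates: each unit aligns with its local
    field. No flip ever raises the energy, so the state settles into a minimum."""
    s = np.array(s, dtype=float)
    for _ in range(max_sweeps):
        changed = False
        for i in range(len(s)):
            new = 1.0 if W[i] @ s >= 0 else -1.0
            if new != s[i]:
                s[i], changed = new, True
        if not changed:
            break
    return s

pattern = np.array([1, -1, 1, -1, 1, 1, -1, -1], dtype=float)
W = store([pattern])
corrupted = pattern.copy()
corrupted[[0, 3]] *= -1  # flip two units: a noisy, partial cue
recovered = settle(W, corrupted)
print(energy(W, corrupted), "->", energy(W, recovered))
```

At nonzero temperature each unit would instead switch on with a sigmoid probability of its local field, which lets the network escape shallow minima.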

1st wave of AI: alg that plays chess

2nd wave: alg that learns to play chess

3rd wave: need to find the algorithm which finds the algorithm which implements the solution (again, that’s what the GWS of the AGI journal sketch is missing). A meta-learning system.
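The three waves can be caricatured on a toy task, minimizing (x - target)², instead of chess (an assumption made to keep the example runnable). Wave 3 here only searches over one hyperparameter of a fixed learner, a very weak stand-in for finding the learning algorithm itself:

```python
def wave1_solver():
    """1st wave: a hand-written answer for one fixed task, minimize (x - 3)**2."""
    return 3.0

def wave2_learner(target, lr, steps=20):
    """2nd wave: a fixed learning algorithm (gradient descent) that learns the
    answer to any task of the form minimize (x - target)**2."""
    x = 0.0
    for _ in range(steps):
        x -= lr * 2.0 * (x - target)
    return x

def wave3_meta_learner(tasks, candidate_lrs):
    """3rd wave (toy stand-in): search over learners and keep the one that
    learns best across a whole family of tasks."""
    def total_loss(lr):
        return sum((wave2_learner(t, lr) - t) ** 2 for t in tasks)
    return min(candidate_lrs, key=total_loss)

print(wave3_meta_learner(tasks=[1.0, -2.0, 5.0], candidate_lrs=[0.01, 0.1, 0.5, 1.1]))  # 0.5
```

A real third-wave system would search a space of learning rules or architectures, not a single number.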

Another way to view it is: (← The original inspiration for “soup” right here)

Brain does not have a global signal that tells each neuron how to fire and wire.

Each neuron is an individual RL agent.

Each neuron has the desire to get fed. It gets fed if it fires, on average, at the right time.

There are different special “loss funcs” in the brain. Some areas get specially rewarded when you look at faces.

Keep in mind that we can just have them communicate information in the latent space and don’t have to restrict it to signals…

The env for neurons is the chemical env
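A minimal sketch of the neuron-as-RL-agent view: each neuron is an independent tabular learner that only sees whether its own input channel is active and only gets its own local food signal; there is no global error signal. The reward scheme (fed for firing in time with the input, charged for firing without it) and all parameters are hypothetical:

```python
import random

ACTIONS = ("silent", "fire")

class NeuronAgent:
    """A neuron as a tiny tabular RL agent with purely local information."""

    def __init__(self, channel, epsilon=0.1, lr=0.1, rng=None):
        self.channel = channel
        self.epsilon = epsilon
        self.lr = lr
        self.rng = rng or random.Random(0)
        # q[input_active][action] -> estimated food for that action
        self.q = {ctx: {a: 0.0 for a in ACTIONS} for ctx in (False, True)}

    def act(self, ctx):
        if self.rng.random() < self.epsilon:
            return self.rng.choice(ACTIONS)  # explore
        return max(ACTIONS, key=lambda a: self.q[ctx][a])  # exploit (ties -> silent)

    def learn(self, ctx, action, food):
        self.q[ctx][action] += self.lr * (food - self.q[ctx][action])

def food(input_active, action):
    """Hypothetical local reward: firing with the input feeds the neuron,
    firing without input wastes energy, staying silent costs nothing."""
    if action == "fire":
        return 1.0 if input_active else -1.0
    return 0.0

def train(n_neurons=3, steps=3000, seed=0):
    rng = random.Random(seed)
    neurons = [NeuronAgent(ch, rng=rng) for ch in range(n_neurons)]
    for _ in range(steps):
        active = rng.randrange(n_neurons)  # one input channel spikes per step
        for nrn in neurons:
            ctx = nrn.channel == active
            a = nrn.act(ctx)
            nrn.learn(ctx, a, food(ctx, a))
    return neurons

for nrn in train():
    policy = {ctx: max(ACTIONS, key=lambda a: nrn.q[ctx][a]) for ctx in (False, True)}
    print(nrn.channel, policy)
```

Each neuron ends up firing only when its own channel is active, learned entirely from its own food signal.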

TODO stopped at 1:09:00 (rewatch meta learning segment).

Short clip from Bach X Levin (Jan 23)

Consciousness: (self-)reflexive attention as a tool to form coherence in a representation. - Joscha Bach

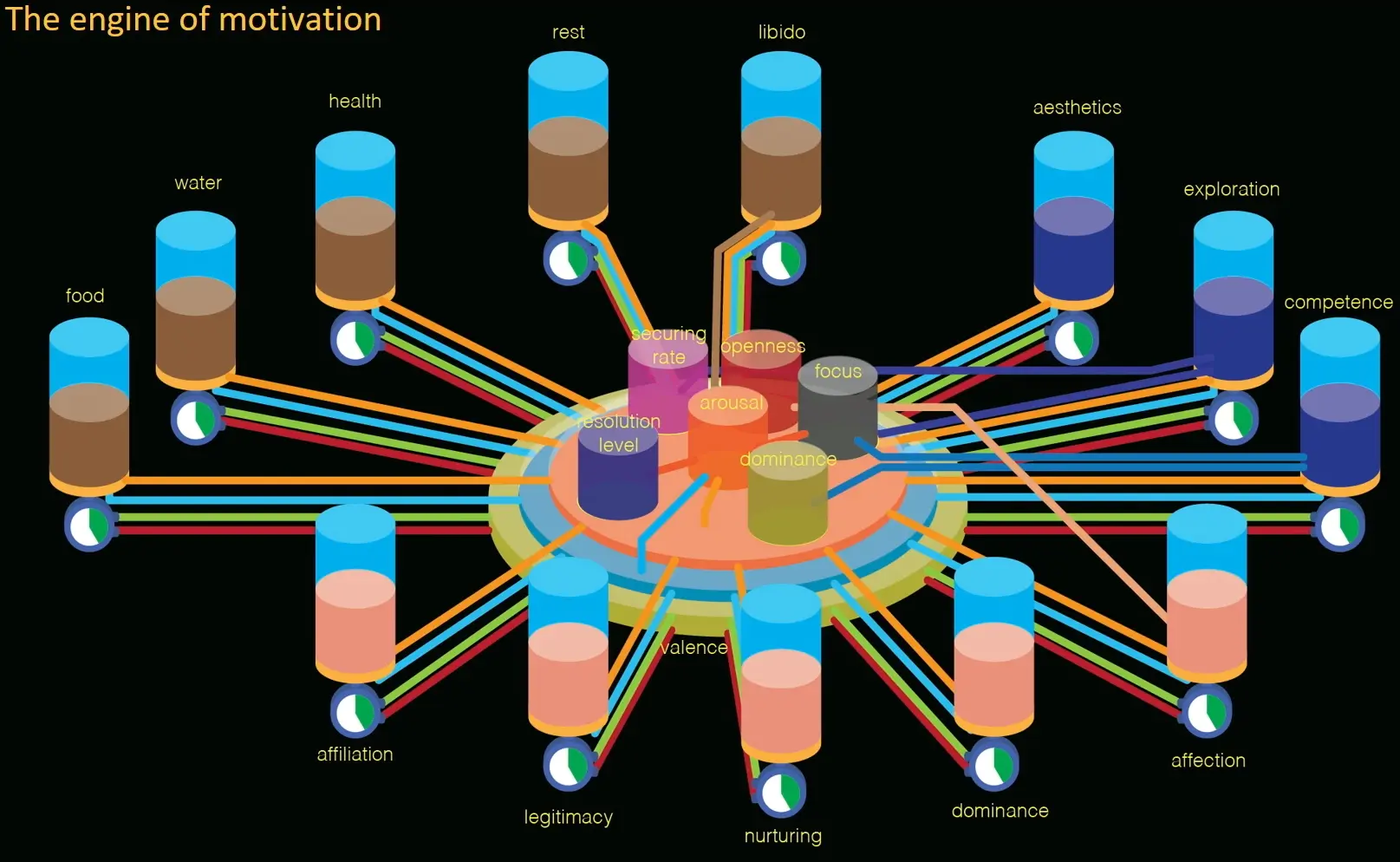

The NN in the brain is like a complex system, a social net, a society of agents wanting to get fed but needing to collaborate or they all die.

So they have to form an organization that distributes rewards among each other.

This gives us a search space.

Mostly given by the minimal agent that is able to learn how to distribute rewards efficiently (while using these rewards to make us do something useful).

But this is not fully decentralized!

democratic centralism:

Hierarchical form of governance is emergent, also in the brain.

See the dopaminergic system. There are centralized structures that distribute rewards in a top-down manner.

Not a control from outside like in traditional ML, but an emergent pattern amongst RL agents.

Every regulation has an optimal layer where it needs to take place. Some stuff needs to be decided very high up. Some stuff needs to be optimally regulated very low down, depending on the incentives.

Game-theoretically, a government is an agent that imposes an offset on your payoff matrix to make your Nash equilibrium compatible with the globally best outcome.
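The game-theoretic definition can be checked on the textbook prisoner’s dilemma. Assumptions: standard illustrative payoffs, and the government’s offset modeled as a flat fine of 3 on defecting (an arbitrary value). Before the offset, mutual defection is the only pure Nash equilibrium even though mutual cooperation is the social optimum; after it, cooperation becomes the equilibrium:

```python
from itertools import product

ACTIONS = ("C", "D")  # cooperate, defect

# Textbook prisoner's dilemma: (row payoff, column payoff).
PD = {
    ("C", "C"): (3, 3),
    ("C", "D"): (0, 5),
    ("D", "C"): (5, 0),
    ("D", "D"): (1, 1),
}

def pure_nash(payoffs):
    """All action pairs where neither player gains by deviating alone."""
    equilibria = []
    for a, b in product(ACTIONS, repeat=2):
        row, col = payoffs[(a, b)]
        row_ok = all(payoffs[(a2, b)][0] <= row for a2 in ACTIONS)
        col_ok = all(payoffs[(a, b2)][1] <= col for b2 in ACTIONS)
        if row_ok and col_ok:
            equilibria.append((a, b))
    return equilibria

def with_offset(payoffs, penalty):
    """The 'government' (hypothetical form): a fine on whoever defects."""
    return {
        (a, b): (row - penalty * (a == "D"), col - penalty * (b == "D"))
        for (a, b), (row, col) in payoffs.items()
    }

def social_optimum(payoffs):
    return max(payoffs, key=lambda ab: sum(payoffs[ab]))

print(pure_nash(PD), social_optimum(PD))      # [('D', 'D')] ('C', 'C')
print(pure_nash(with_offset(PD, penalty=3)))  # [('C', 'C')]
```

In a fuller model the collected fines would fund the administration, which is where "harvest more energy than it costs" comes in.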

Potential of Social media to be a global brain (“emergent collective intelligence in real time”) and motor in planning.

Neural Darwinism among different forms of organization in the brain, until you have a model of a self-organizing agent that discovers that what it is computing is driving the behaviour of an agent in the real world (discovered from a first-person perspective).

genetic algorithm for architecture search / etc. in the GWS in the brain?

Michael Levin Λ Joscha Bach: Collective Intelligence

Weight-sharing e.g. like in convnets … is it necessary to have some special types of neurons @ the visual cortex?

The master class for soup would, of course, be to somehow search the entire architecture space for the neuron-agents. But in a first instance, we probably have to experiment with a few select types (inhibitory, excitatory) and complexities (extremely simple, shallow NN, deep transformer).

synaptic pruning does not hinder learning ability of brain, it only optimizes the computational efficiency

Bach

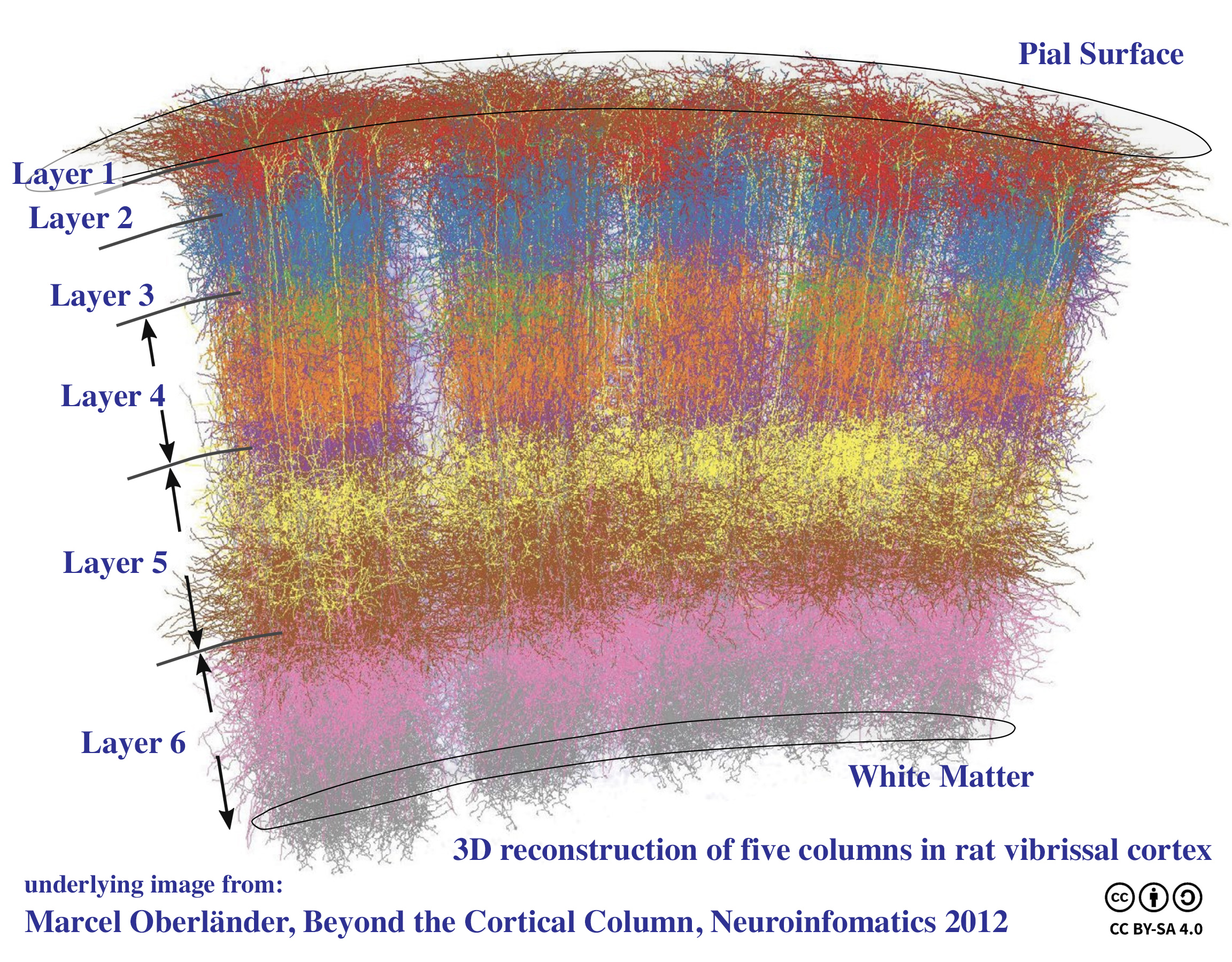

Neurons are not learning a local function over their neighbours; they are learning how to respond to the shape of an incoming activation front, the spatio-temporal pattern in their neighbourhood.

“Densely enough connected, so that the neighbourhood is just a space around them”. And in this space they basically interpret this according to a certain topology, e.g. 2.5D, 2D or 1D convolution, “or whatever the type of function they want to compute”. And they learn how to fire in response to those patterns and thereby modulate the patterns.
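A minimal sketch of a neuron responding to the shape of an activation front rather than to a static function of its neighbours: the input is a space × time window of neighbour activity, and the neuron fires when the window matches its kernel. Assumptions: a 1D neighbourhood, a hand-set kernel instead of a learned one, and a fixed threshold. The kernel below makes the unit direction-selective:

```python
import numpy as np

def front(direction, size=5):
    """A single activation front sweeping across `size` neighbours over
    `size` time steps. Rows are time, columns are space."""
    eye = np.eye(size)
    return eye if direction == "right" else eye[:, ::-1]

def neuron_response(window, kernel, threshold):
    """Match the spatio-temporal window against the neuron's kernel
    (correlation at a single position); fire if the drive exceeds threshold."""
    drive = float(np.sum(window * kernel))
    return drive, drive > threshold

# Hand-set kernel (assumption): "expect activity to move one neighbour per step to the right".
kernel = np.eye(5)
for d in ("right", "left"):
    print(d, neuron_response(front(d), kernel, threshold=3.0))
# right (5.0, True)
# left (1.0, False)
```

Both fronts activate exactly the same neighbours the same number of times; only their spatio-temporal shape differs, and that is what the unit responds to.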

Neurons store computational primitives (responses to incoming activations), which can be distributed to other neurons via RNA, if they find them useful.

Neurons of the same type will gain the knowledge to apply the same computational primitives (not certain how much this aspect is actually utilized, but this is heavily underexplored in AI and ties into the entire evolutionary architecture search aspect of soup).

Caterpillar brains undergo a complete reassembly when they transform from living in a 2D to a 3D world, a complete restructuring/remodeling, but (core?) memories stay intact nonetheless.

memories can move from the brain to other places!

They taught planaria to recognize where their food was and then cut off their brains; as the brains regenerated, the information moved back to the brain!

DALL-E vs Baby: Moving vs. Static World

A baby couldn’t learn from being shown 600 million static, utterly disconnected images in a dark room (and remember most of them!). Our statistical models way surpass the brain in that respect.

For us, information stays the same but gets transmogrified over time, so we only need to learn the transmogrification. We mostly need to learn change.

→ Learning in a moving, dynamic environment is much easier, as it imposes constraints on the world (world model).

Cats, for example, can track moving objects much better than static objects.

In moving vs static, the semantics of features change:

Static: Scene is composed of features / objects, which are in a static relationship, based on which you need to interpret the scene (ambiguous, hard, …). The features are classifiers of the scene.

Dynamic: Features become change operators, tracking the transformation of a scene. They tell you how your world-model needs to change. To process that, you need controllers at different hierarchical, spatial and temporal levels, which can turn on/off/change at the level of the scene. → Features become self-organizing, self-stabilizing entities that shift themselves around to communicate with other features in the organism, until they negotiate a valid interpretation of reality.

A similar thing happens also in the spatial domain (i.e. in biological development as opposed to neuroscience, which usually deals with the temporal domain): the delta of voltage gradients modifies the controllers for cells (gene expressions etc.).
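The “features as change operators” idea can be sketched on 1D frames: instead of classifying each frame, infer the operator that maps the previous frame onto the current one, then reuse it to predict the next frame. Assumption: the only allowed change is a circular shift, a drastic simplification of the hierarchy of controllers described above:

```python
import numpy as np

def infer_shift(prev, curr, max_shift=3):
    """Find the change operator (here: a circular shift) that best maps the
    previous frame onto the current one."""
    errors = {s: float(np.sum((np.roll(prev, s) - curr) ** 2))
              for s in range(-max_shift, max_shift + 1)}
    return min(errors, key=errors.get)

frame0 = np.array([0, 0, 1, 1, 0, 0, 0, 0, 0, 0], dtype=float)  # made-up 1D scene
frame1 = np.roll(frame0, 2)  # the object moved two positions to the right

shift = infer_shift(frame0, frame1)
prediction = np.roll(frame1, shift)  # apply the same change operator again
print(shift, prediction)
```

What is learned is a single number describing the change, not the content of either frame, which is why the dynamic setting constrains the problem so much more than a static one.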

Open question: Encoding and Decoding

How do molecules communicate information - without a pre-existing shared evolutionary decoding?

Or even: How do the neurons in your brain decode memory information, i.e. messages from your past self to your future self?

I would say that the soup simply learns how to deal with multi-spatial and temporal patterns over time, but yh idk. Especially how the encoding of the input signal works is the biggest question mark for me. (update: isn’t that the easiest part? Pre-training encoders from data to leverage patterns? The fixed incoming spatial structure note below supports this assumption? And regarding memory encoding / decoding, isn’t this possible because the hardware and the resulting algorithms are shared / co-evolved? As one part of the picture at least.)

noisy samples → feature hierarchies → objects → scene interpretation / object controllers

darkness? → (scary) shapes, things, etc. spawn in our brain because we have no data to disprove those hypotheses (at least / especially as a child, when the world model is still wonky).

visual information is concentrated at visual nerves (fixed incoming spatial structure).

connectome dead end

A lot of resources are dedicated towards precisely mapping the neural connections of brains…

But it might very well be (and I think so too) that it doesn’t matter at all, that what matters is just the density of the neurons arranged stochastically and how they communicate with each other.

They are acting as if we can get the entire story out of the brain as if it were circuitry (nothing in nature is rigid like that).

Neuroscience seems stuck in that regard, in how it approaches the way the brain processes information.

None of the recent advances in AI were due to advances in neuroscience, but rather to statistical insights into information processing.

This podcast was also literally recorded >1yr ago. Sutton has been saying this stuff for years. How is nobody researching this? (it is time for soup)

There is incredible non-genetic memory, e.g. in deer: you carve something into their antlers, they drop off, and every year for the following ~5 years they regrow with a tougher spot or something at this exact location. So the skull not only has to remember that exact location for years, but the bone cells also need to know exactly where to put it.

Planaria: The animal with the “worst genome” (all sorts of mutations, different number of chromosomes, …) has the best anatomical fidelity, is immortal, incredible at regenerating, very resistant to cancer, etc.

This, again, goes completely against the standard view of genomics, which tells you that a good genome is what determines your fitness and abilities in all ways etc.

Evolution vs. Competency

Competency of the individual can compensate for subpar hardware.

So in a way, having a really good algorithm hinders evolution in generating the best hardware, since it doesn’t know whether it’s doing well or whether the self-organization of the sub-units is just too good (analogy: if error correction algorithm super good, don’t need to focus too much on improving storage medium).

→ Emphasis is really put on the correct algorithm, which can make up for all kinds of bad HW.

→ Again: soup

“Best effort computing”: Don’t rely on the other neurons around you working perfectly, but make an effort to be better than random.

→ Evaluating by stacking probabilities with high error tolerance / evolving a system which learns to measure the unreliability of components until it becomes deterministic enough (or maybe there is a continuum, maybe there is a phase shift from this to that between organisms).
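The “stacking probabilities” idea has a classic quantitative form: if each of n components is independently right with probability p > 0.5, a majority vote over them is right far more often (the Condorcet jury theorem; von Neumann used the same redundancy idea for reliable computation from unreliable parts). A minimal sketch; independence of errors is an assumption that real neurons will not satisfy:

```python
from math import comb

def majority_correct(n: int, p: float) -> float:
    """Probability that a majority vote of n independent components, each
    correct with probability p, is correct (n odd, so there are no ties)."""
    assert n % 2 == 1, "use an odd number of voters"
    return sum(comb(n, k) * p**k * (1 - p) ** (n - k) for k in range(n // 2 + 1, n + 1))

for n in (1, 11, 101, 1001):
    print(n, round(majority_correct(n, 0.6), 4))
```

Components that are only slightly better than random become close to deterministic in aggregate, which is the "best effort computing" point.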

Spatially, for information processing between neurons:

Absolute voltage doesn’t matter, but the difference between regions.

Cells have the competency to recruit other cells autonomously to get a job done.

Competency Definition

Levin: Given a problem space → Ability to navigate in this problem space to get towards a goal.

Two magnets vs Romeo & Juliet. Both try to get together. The degree of flexible problem solving is incredibly different (can avoid local optima, have memory of where they’ve been, look further than the local environment, …).
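Levin’s definition can be illustrated on a tiny problem space: a grid with a wall between start S and goal G. The magnet follows the local gradient and gets stuck; the Romeo-and-Juliet agent remembers where it has been and looks beyond its immediate surroundings. A minimal sketch, with breadth-first search standing in for flexible problem solving (a simplifying assumption):

```python
from collections import deque

GRID = [  # made-up problem space: '#' is a wall
    "S.#..",
    "..#..",
    "..#.G",
    ".....",
]
MOVES = ((-1, 0), (1, 0), (0, -1), (0, 1))

def find(ch):
    for r, row in enumerate(GRID):
        if ch in row:
            return (r, row.index(ch))

def neighbours(cell):
    r, c = cell
    for dr, dc in MOVES:
        nr, nc = r + dr, c + dc
        if 0 <= nr < len(GRID) and 0 <= nc < len(GRID[0]) and GRID[nr][nc] != "#":
            yield (nr, nc)

def dist(a, b):
    return abs(a[0] - b[0]) + abs(a[1] - b[1])

def magnet(start, goal):
    """Purely local: step to the neighbour closest to the goal; stop when no
    neighbour is closer (a local optimum)."""
    pos = start
    while pos != goal:
        best = min(neighbours(pos), key=lambda n: dist(n, goal))
        if dist(best, goal) >= dist(pos, goal):
            return pos  # stuck in front of the wall
        pos = best
    return pos

def agent(start, goal):
    """Remembers visited cells and explores beyond its surroundings (BFS), so
    it can temporarily move away from the goal to get around the obstacle."""
    came_from = {start: None}
    frontier = deque([start])
    while frontier:
        pos = frontier.popleft()
        if pos == goal:
            path = []
            while pos is not None:
                path.append(pos)
                pos = came_from[pos]
            return path[::-1]
        for n in neighbours(pos):
            if n not in came_from:
                came_from[n] = pos
                frontier.append(n)
    return None

start, goal = find("S"), find("G")
print("magnet ends at", magnet(start, goal))  # magnet ends at (2, 1)
print("agent path", agent(start, goal))
```

The magnet stops at (2, 1), directly in front of the wall; the search-based agent finds a 9-cell path around it.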

Simple Controller / Reactive System vs. Agent

Bach: Goals can be emergent.

Agent == controller for future states

The difference between reactive systems, like a thermostat, which doesn’t have a goal by itself but only a target value and a deviation from it, to which it reacts once the deviation passes a threshold, and agents is that agents are proactive: they also try to minimize future deviations (the integral over a timespan).

The ability to create counterfactuals, causal simulations, e.g. possible future universes, reasoning over alternative past trajectories, etc. needs to be present.

For this, you need a Turing machine, a computer. Cells have this ability.
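The thermostat/agent distinction can be sketched with a toy room model. The thermostat only reacts once the deviation crosses a threshold; the agent uses its model to simulate counterfactual futures (every on/off plan over a short horizon) and picks the action that minimizes the summed future deviation. The room dynamics, the parameters and the brute-force planner are all assumptions made for illustration:

```python
from itertools import product

TARGET, OUTSIDE, LEAK, HEAT = 20.0, 10.0, 0.1, 2.0  # made-up room parameters

def next_temp(temp, heater_on):
    """Toy room model: the heater adds HEAT per step, heat leaks toward OUTSIDE."""
    return temp + HEAT * heater_on - LEAK * (temp - OUTSIDE)

def thermostat(temp, heater_on, band=2.0):
    """Reactive: only acts once the deviation has crossed a threshold."""
    if temp < TARGET - band:
        return True
    if temp > TARGET + band:
        return False
    return heater_on  # inside the band: keep doing what it was doing

def agent(temp, heater_on, horizon=3):
    """Proactive: simulates every on/off plan over the horizon with its model
    and takes the first action of the plan with the smallest summed deviation."""
    def future_deviation(plan):
        t, total = temp, 0.0
        for action in plan:
            t = next_temp(t, action)
            total += abs(t - TARGET)
        return total
    best_plan = min(product((False, True), repeat=horizon), key=future_deviation)
    return best_plan[0]

def run(controller, steps=100, temp=OUTSIDE):
    heater_on, total_dev = False, 0.0
    for _ in range(steps):
        heater_on = controller(temp, heater_on)
        temp = next_temp(temp, heater_on)
        total_dev += abs(temp - TARGET)
    return total_dev

print("thermostat:", round(run(thermostat), 1))
print("agent:     ", round(run(agent), 1))
```

The thermostat keeps overshooting to the edges of its band, while the agent, which evaluates imagined futures before acting, stays much closer to the target.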

Full emergence or encoded representations?

If a system were to be fully emergent, e.g. there are local rules that always lead to the formation of the correct planaria, then, in order to change the resulting system, you would need to understand / correctly manipulate those local rules to spit out what you like (hard).

However, Levin found that there is a distributed encoded representation of 1 head, 1 tail, … within the cells!

In fact, planaria can store at least 2 different representations: you can inject a voltage gradient to change the stored pattern to, say, 2 heads and 0 tails while the planarian still has its one head, but if it were cut, it would regrow with 2 heads.

So one important ability is to also store counterfactual states (= something that is not true now but may be true in future or may have been true under some conditions, etc.).
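The key point, a stored target state kept separate from the current state, fits in a few lines. A toy sketch only: the class, the method names and the two-segment body are hypothetical, and real pattern memory in planaria is a distributed bioelectric state, not a field on an object:

```python
from dataclasses import dataclass

@dataclass
class Planarian:
    """Toy model: current anatomy and stored target pattern are separate
    pieces of state, so the target can describe a counterfactual body."""
    anatomy: tuple = ("head", "tail")
    target: tuple = ("head", "tail")  # hypothetical stand-in for bioelectric pattern memory

    def inject_voltage_pattern(self, new_target):
        # Rewrites the stored pattern only; the intact body does not change.
        self.target = tuple(new_target)

    def cut_and_regenerate(self):
        # Regeneration reads the stored pattern, not the old body.
        self.anatomy = self.target

worm = Planarian()
worm.inject_voltage_pattern(("head", "head"))
print(worm.anatomy)  # ('head', 'tail'): still looks normal
worm.cut_and_regenerate()
print(worm.anatomy)  # ('head', 'head')
```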

Bach’s definition of intelligence

Intelligence is the ability to make models.

This definition also accounts for the fact that many very intelligent people are not good at getting things done.

Intelligence and goal-rationality are orthogonal to each other.

→ Excessive intelligence is often a prosthesis for bad regulation.

Levin is obviously also working on soup / creating a model of where autopoiesis (self-organization) / collective agents first come from.

They already have a model of cells rewarding each other with neurotransmitters and things like that to keep copies of themselves nearby to reduce surprise, because the least surprising thing is a copy of yourself.

An embryo is an embryo because there is 1 cell that decides it’s gonna be the lead and breaks the symmetry (local activation, long-range inhibition), and in biological systems every cell has to decide where it ends and the outside begins.

Levin:

one part for agi is this plasticity about where you end and where the outside world begins. you have to make this decision as an organism. have to make a model of your boundaries, your structure, you are energy- and time-limited, … All of these are self-constructions from the very beginning.

What gives rise to a lot of the plasticity and intelligence in nature is that you cannot assume that the hardware is what it is, and that you also always have a completely different environment (e.g. bacteria in a sugar vat could go up the gradient to the source, maximizing it, or work in metabolic space to break down the adjacent molecules to sugar or sth).

I think some of these constraints are crucial to take up for the self-organizing aspects (namely: energy, time, spatial, …), but others, like being basically hardware-agnostic, are assumptions or generalizations we cannot make or afford with silicon hardware, where we do need to exploit stuff in order to be efficient enough and where there is not such incredible flexibility as in nature.

Soup - How?

Minimal agent to learn how to distribute rewards efficiently, while doing something useful.

How can we get a system that is looking for the right incentive architecture?

Emergence of GI in a bunch of cells / units:

- each is an agent, able to behave with an expectation of minimizing future target value deviations

- agents are connected to each other, communicating messages with multiple types (sending and recognizing them to a certain degree of reliability)

Open questions:

- a type in the language (like different neuro-transmitters) is simply one more / less bit in a general latent message

- the rewards / proxy rewards also need to come from the connected agents which are also adaptive

- enough agents

- how deterministic do units need to be?

- how much memory do they need / what state can they store?

- how deep in time does their recollection need to go / how far forward in time do they need to be able to form expectations?

- how big is the latent dimension the agents communicate in?

- (personal question) Is “exchange of functions (‘RNA’)” necessary and, if yes, how to implement it?

The conditions that are necessary are relatively simple. If you just wait for long enough for the system to percolate, compound agency will emerge from the system through competition.
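A sketch of how the open questions above could become knobs of a first experiment, plus one round of peer-to-peer reward distribution in which rewards come only from connected agents. Everything here is hypothetical (names, ring topology, usefulness measure, parameter values), and learning is left out entirely. One property follows from the design: agents can only pass on reward they were given, so the total reward per round equals the sum of budgets.

```python
import random
from dataclasses import dataclass, field

@dataclass
class SoupConfig:
    # Each field turns one open question into a knob (values are placeholders).
    n_agents: int = 8      # "enough agents"
    latent_dim: int = 4    # "how big is the latent dimension"
    memory_depth: int = 3  # "how deep in time does their recollection need to go"
    noise: float = 0.05    # "how deterministic do units need to be"
    budget: float = 1.0    # reward each agent hands out per round (assumption)

@dataclass
class Agent:
    target: float                                # value this agent tries to track
    message: list                                # its current latent message
    memory: list = field(default_factory=list)  # recent usefulness scores

def step(agents, cfg, rng):
    """One round on a ring: each agent reads its two neighbours' (noisy) latent
    messages and splits its reward budget between them in proportion to how
    useful each message was for its own target. Returns reward received."""
    n = len(agents)
    received = [0.0] * n
    for i, a in enumerate(agents):
        nbrs = ((i - 1) % n, (i + 1) % n)
        use = []
        for j in nbrs:
            heard = agents[j].message[0] + rng.gauss(0.0, cfg.noise)
            use.append(1.0 / (1.0 + abs(heard - a.target)))
        total = sum(use)
        for j, u in zip(nbrs, use):
            received[j] += cfg.budget * u / total
        a.memory = (a.memory + [use])[-cfg.memory_depth:]
    return received

cfg = SoupConfig()
rng = random.Random(0)
agents = [Agent(target=rng.random(), message=[rng.random() for _ in range(cfg.latent_dim)])
          for _ in range(cfg.n_agents)]
rewards = step(agents, cfg, rng)
print([round(r, 2) for r in rewards])
```

The missing piece, agents adapting their messages to earn more reward from their neighbours, is exactly where the "compound agency through competition" would have to come from.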

From Artificial Intelligence to Artificial Consciousness | Joscha Bach | TEDxBeaconStreet

Our minds are not classifiers, but simulators and experiencers.

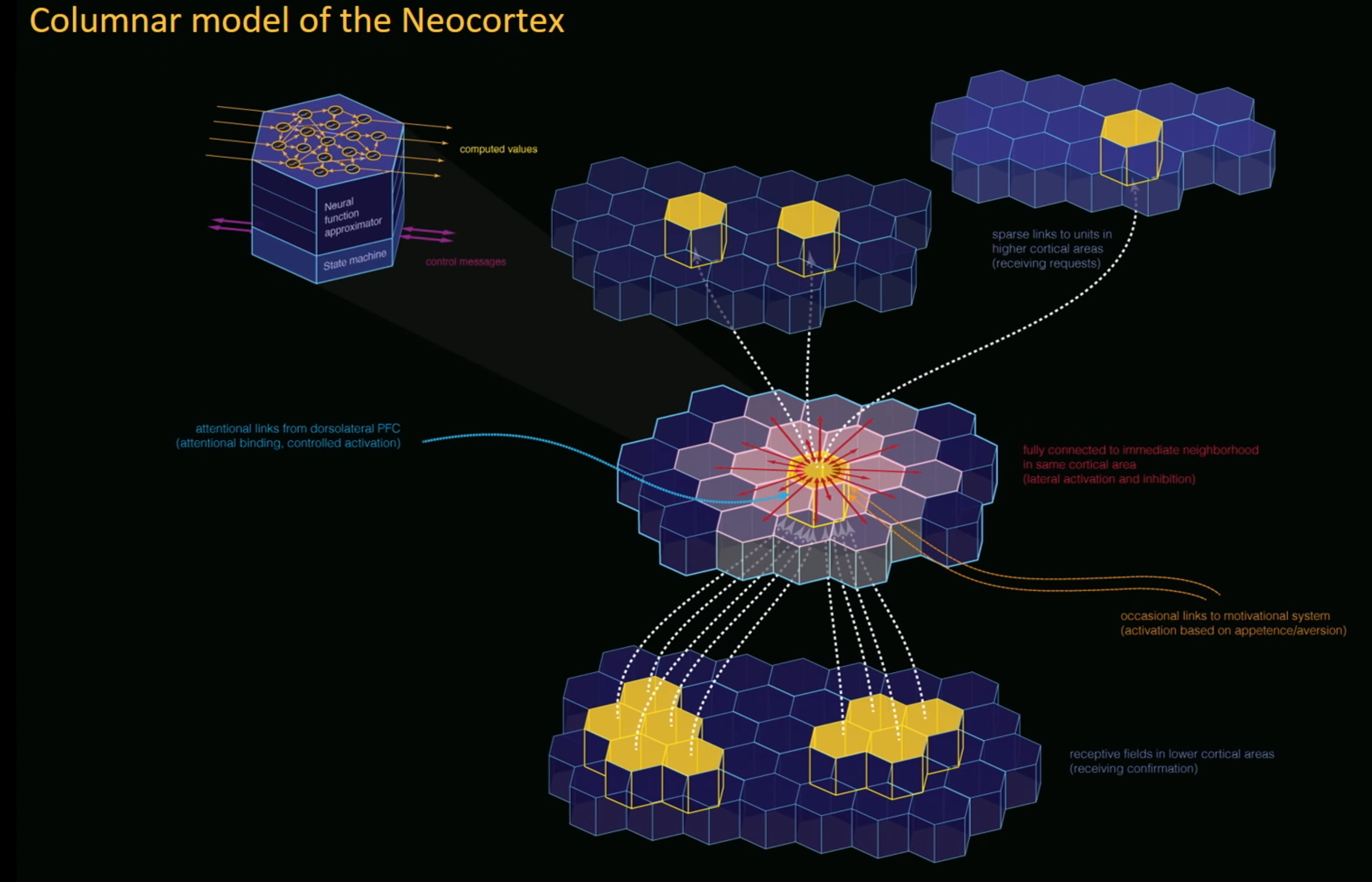

the neuron as an agent: (organized in cortical columns)

In the picture:

Neural function-approximation units (neurons, agents). [top left; the individual hexagons]

Fully connected to a local neighbourhood. [red]

Occasional links to motivation system (activation based on appetence / aversion). [orange]

Receptive fields in lower cortical areas (receiving confirmation) [bottom]

Attentional links from dorsolateral PFC (attentional binding, controlled activation) [blue]

Sparse links to units in higher cortical areas (receiving requests) [top right]

Here again is this notion of a conductor in the dorsolateral prefrontal cortex, which tells the orchestra what is being played tonight and how. In his opinion this is the core of consciousness, without which we would be sleepwalkers, without goal-directedness, etc.

Conversation between Joscha Bach, Chris Fields, and Michael Levin

- intelligence arises through symmetry breaking sth sth

- first happens at the level of molecules

- the smallest intelligent thing is most likely the cell

- but not the simplest. One can imagine large storms on Jupiter to have energy minimization properties / entropy stuff (see ^dce27a), which can only be fulfilled if the system has some intelligence / memorization capability / …, which you might only observe over very long time horizons

- economy also yk, etc. but we need some experiments some agreed upon stuff for calling sth intelligent

Increasing coherence is the minimization of constraint violations across models / in a dynamic model. It is like a conductor, an instrument which has the role of superficially paying attention to other instruments, noticing inconsistencies, and stepping in to resolve them.

→ It is a consensus algorithm in the mind, trying to find an interpretation which maximizes the number of simultaneously true statements in the working memory.

→ It is self-reflective, stabilizing, observing, and self-organizing.

What is the minimal self-observing observer and how to formalize it in a substrate-agnostic way?
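The consensus-algorithm description is close to MAX-SAT: choose the interpretation that satisfies as many of the statements in working memory as possible at the same time. A minimal brute-force sketch; the propositions and constraints (reusing the shadow-versus-animal example from earlier) are made up, and exhaustive search is exponential, so a real system would need a local, settling search instead:

```python
from itertools import product

PROPS = ("animal", "shadow", "light_behind")

# Hypothetical working-memory contents: statements that should hold at once.
CONSTRAINTS = [
    ("something is there",              lambda s: s["animal"] or s["shadow"]),
    ("it is not both",                  lambda s: not (s["animal"] and s["shadow"])),
    ("there is a light behind me",      lambda s: s["light_behind"]),
    ("a shadow needs a light source",   lambda s: not s["shadow"] or s["light_behind"]),
    ("no sound, so probably no animal", lambda s: not s["animal"]),
]

def coherence(state):
    """Number of constraints this interpretation satisfies simultaneously."""
    return sum(check(state) for _, check in CONSTRAINTS)

def most_coherent():
    """Brute-force MAX-SAT: try every interpretation and keep the one that
    maximizes the number of simultaneously true statements."""
    states = (dict(zip(PROPS, values)) for values in product((False, True), repeat=len(PROPS)))
    return max(states, key=coherence)

best = most_coherent()
print(best, coherence(best), "of", len(CONSTRAINTS))
# {'animal': False, 'shadow': True, 'light_behind': True} 5 of 5
```

With contradictory inputs no interpretation satisfies everything, and the same search simply returns the least-violating one, which is the "conductor resolving inconsistencies" role.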

Do we like music because of the sophistication of the structure that is being encoded as opposed to cultural habits?

[people playing an instrument in the woods and wild animals approaching, for example]

elephants struggle with controlling their trunks for much longer than what it takes them to get on their feet (the trunks have more degrees of freedom).

The trunks can be seen as something similarly sophisticated as our hands, so why have they not picked up drawing sophisticated things (Bach says they can only draw stuff somebody has already drawn) or playing good music when a xylophone is placed in front of them (hmm).

References

People

dialectical materialism

consciousness

intelligence

Footnotes

1. That’s actually the function of the “Actor” network (which doesn’t actually act, but plans actions / runs through the world model / … (decided by the Global Workspace?)) which I sketched in the AGI journal, just on a lower level: the learning-algorithm level. (Somewhat; many nuances; How to store?; …) ↩