DALL·E vs. Baby: Moving vs. Static World

A baby couldn’t learn from being shown 600 million static, utterly disconnected images in a dark room (and remember most of them!). Our statistical models far surpass the brain in that respect.

For us, information stays the same but gets transmogrified over time, so we only need to learn the transmogrification: we mostly need to learn change. → Learning in a moving, dynamic environment is much easier, because the movement imposes constraints on the world (the world model).
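
A toy sketch of this idea, our own illustration rather than anything from the source: the "world" is a fixed 1-D scene whose content stays the same while it moves by a constant circular shift each step. A learner that infers the single shift parameter from two consecutive frames can regenerate every later frame, instead of memorizing each one as a disconnected image. The scene size, the shift value, and the function names (`roll`, `estimate_shift`, `replay`) are arbitrary assumptions.

```python
import random


def roll(frame, k):
    """Circularly shift a 1-D frame to the right by k positions."""
    k %= len(frame)
    return frame[-k:] + frame[:-k] if k else list(frame)


def estimate_shift(prev, curr):
    """Find the shift k that best maps prev onto curr (least squared error)."""
    def error(k):
        return sum((a - b) ** 2 for a, b in zip(roll(prev, k), curr))
    return min(range(len(prev)), key=error)


def replay(first_frame, k, steps):
    """Generate a sequence from one frame plus a transformation (the shift k)."""
    frames = [first_frame]
    for _ in range(steps):
        frames.append(roll(frames[-1], k))
    return frames


random.seed(0)
scene = [random.random() for _ in range(32)]   # arbitrary static content
true_shift = 3                                 # the "change" to be learned (assumed)
observed = replay(scene, true_shift, steps=9)  # 10 frames of a moving world

k = estimate_shift(observed[0], observed[1])   # learn only the change
reconstructed = replay(observed[0], k, steps=9)

print("learned shift:", k)
print("all frames reproduced:", reconstructed == observed)
print("numbers stored:", len(scene) + 1, "instead of", sum(len(f) for f in observed))
```

The point of the design is the storage line: one frame plus one transformation parameter replaces all frames, which only works because the content is conserved across time.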

Cats, for example, can track moving objects much better than static objects.

In moving vs. static settings, the semantics of features change:

Static: The scene is composed of features/objects in a static relationship, from which you need to interpret the scene (ambiguous, hard, …). The features are classifiers of the scene.

Dynamic: Features become change operators that track the transformation of a scene. They tell you how your world model needs to change. To process that, you need controllers at different hierarchical, spatial and temporal levels, which can turn on, turn off, or change at the level of the scene. → Features become self-organizing, self-stabilizing entities which shift themselves around to communicate with other features in the organism until they negotiate a valid interpretation of reality.

A similar thing also happens in the spatial domain (i.e., in biological development, as opposed to neuroscience, which usually deals with the temporal domain): changes in voltage gradients modify the controllers of cells (gene expression, etc.).

Open-ended exploration

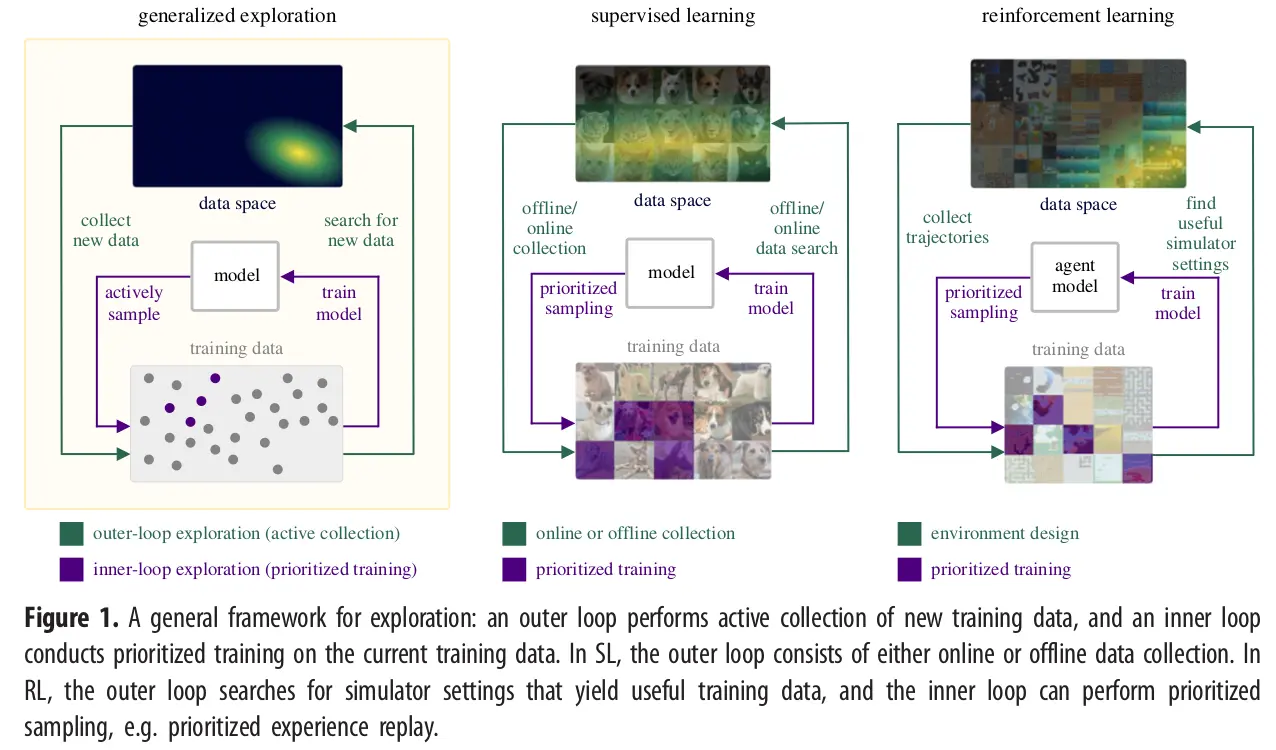

The problem afflicting both classes of learning algorithms reduces to one of insufficient exploration: supervised learning (SL), largely trapped in the offline regime, fails to perform any exploration, while reinforcement learning (RL), limited to exploring the interior of a static simulation, largely ignores the greater expanse of possibilities that the simulation cannot express.

We require a more general kind of exploration, which searches beyond the confines of a static data generator, such as a finite dataset or static simulator. This generalized form of exploration must deliberately and continually seek out promising data to expand the learning agent’s repertoire of capabilities. Such expansion necessarily entails searching outside of what can be sampled from a static data generator.

This open-ended exploration process, summarized in figure 1, defines a new data-seeking outer loop that continually expands the data generator used by the inner-loop learning process, which itself may use more limited forms of exploration to optimally sample from this data generator. Within each inner loop, the data generator is static, but as a whole, this open-ended exploration process defines a dynamic, adaptive search process that generates the data necessary for training a likewise open-ended learner that, over time, may attain increasingly general capabilities.
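
The outer/inner loop structure can be sketched as a toy program. Everything here is a hypothetical illustration, not the method from the source: tasks are scalar "difficulties", the learner is a single skill number, and "promising" data is operationalized as tasks that are new and just beyond the learner's current frontier (a learnability heuristic chosen for this sketch). The names `inner_loop`, `propose_tasks`, `open_ended`, and `static_baseline` are invented.

```python
def inner_loop(skill, generator, epochs=5, lr=0.5):
    """Train on a fixed (static) task generator.

    Toy learner: a task of difficulty d teaches something only if it lies
    just beyond the current skill (skill < d <= skill + 1); practicing it
    moves the skill a fraction lr of the way toward d.
    """
    for _ in range(epochs):
        for d in generator:
            if skill < d <= skill + 1:
                skill += lr * (d - skill)
    return skill


def propose_tasks(generator, skill, steps=(0.5, 1.0, 5.0)):
    """Outer-loop search: mutate the hardest known task and keep only
    candidates that are new and learnable right now (the 'promising' data)."""
    frontier = max(generator)
    candidates = [frontier + s for s in steps]
    return [d for d in candidates if skill < d <= skill + 1 and d not in generator]


def open_ended(rounds=10):
    generator = [0.5, 1.0]  # initial data generator: a finite task set
    skill = 0.0
    for _ in range(rounds):
        skill = inner_loop(skill, generator)          # static within each inner loop
        generator += propose_tasks(generator, skill)  # outer loop expands the generator
    return skill, generator


def static_baseline(rounds=10):
    generator = [0.5, 1.0]  # never expanded
    skill = 0.0
    for _ in range(rounds):
        skill = inner_loop(skill, generator)
    return skill


open_skill, final_generator = open_ended()
static_skill = static_baseline()
print(f"open-ended skill: {open_skill:.2f}, tasks: {len(final_generator)}")
print(f"static skill:     {static_skill:.2f}")
```

With the static generator the skill saturates below the hardest fixed task, while the outer loop keeps adding one reachable task per round. Candidates that are too hard (the `+5.0` step) or already mastered are rejected, which is the toy analogue of deliberately seeking out data that expands the learner's repertoire.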

References

Joscha Bach: [Michael Levin Λ Joscha Bach Collective Intelligence](https://youtu.be/kgMFnfB5E_A)