Source: Machine Consciousness

Source: From Large Language Models to General Artificial Intelligence? - Joscha Bach (Keynote)

Joscha Bach's definitions of …

intelligence: The ability to make models (i.e. representations) of the world and use them to make predictions and plan.

rationality: The ability to reach goals. (Having good models for reaching your goal but making no effort to achieve it is irrational.)

sentience: Corporations are sentient, because they have models of what they are, how they relate to their environment, and how they should interact with it (largely implemented by people and legal contracts).

consciousness: Corporations are not conscious: “There is nothing what it is to be like Microsoft”.¹

self: “The content that a system can have” (??) I suppose he means this in the sense of the cognitive light cone.

mind: He calls this the “protocol layer”: the functionality that allows modelling the universe.

I'm confused by how he defines consciousness and sentience here.

Confusion cleared?

It seems inconsistent with his own definition of consciousness?

I would have thought sentience means the ability to feel, to experience subjective phenomena, and consciousness is being self-aware / self-reflecting.

Like … exactly flipped from how he defines it here?

From another interview he gave recently, it makes more sense:

- Consciousness is an operator on mental states that reduces contradictions in our representation of the world (integrating sensory inputs and memories into a coherent “bubble” of now).

- From a phenomenological perspective: The perception of perceiving.

- The self is 3rd order perception: The perception of the perceiver.

→ When dreaming, we are still conscious, but not necessarily self-conscious. In deep sleep, we are neither.

→ There seems to be a smooth spectrum for (sophistication of) self-models, but consciousness seems to be binary (apart from intermediate states / phase-transitions).

→ How does consciousness change your mental state? Coherence seems to increase whenever you (or any collective intelligence, for that matter) are conscious (e.g. deep sleep / spacing out / alienated individuals / free markets vs. being awake / focused / collaborative individuals / planned economies).

A computer is a universal function executor: it can compute any computable function. The hard part is finding the right function.

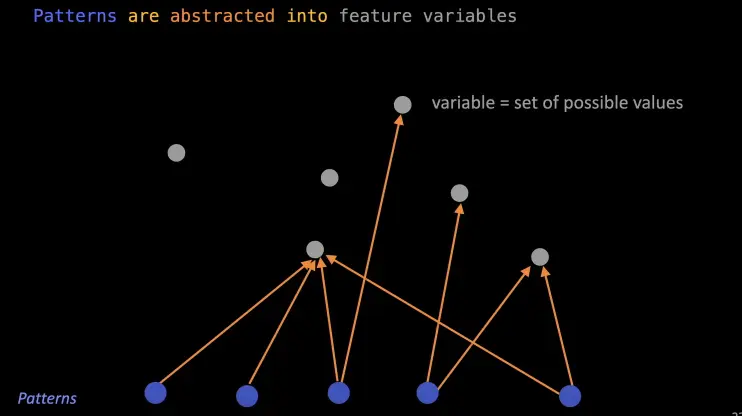

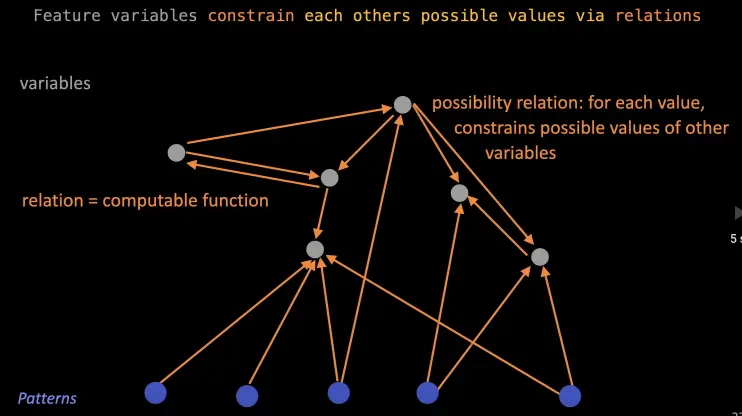

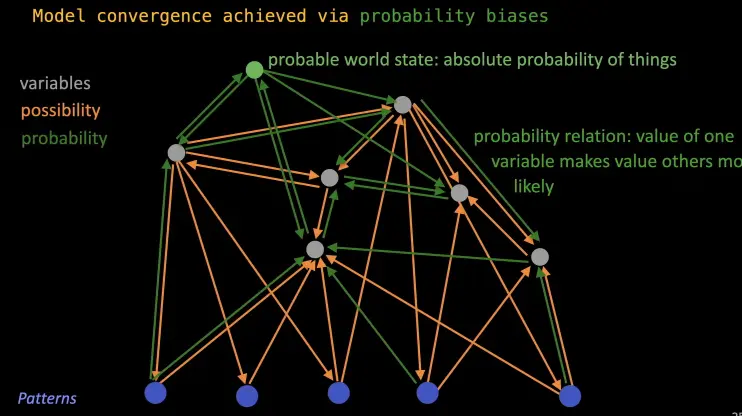

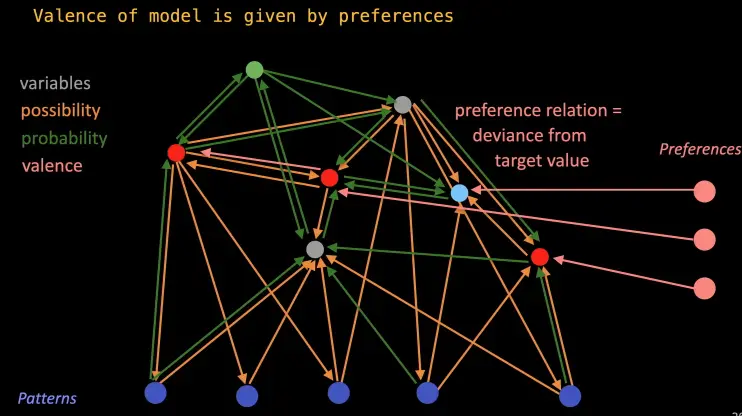

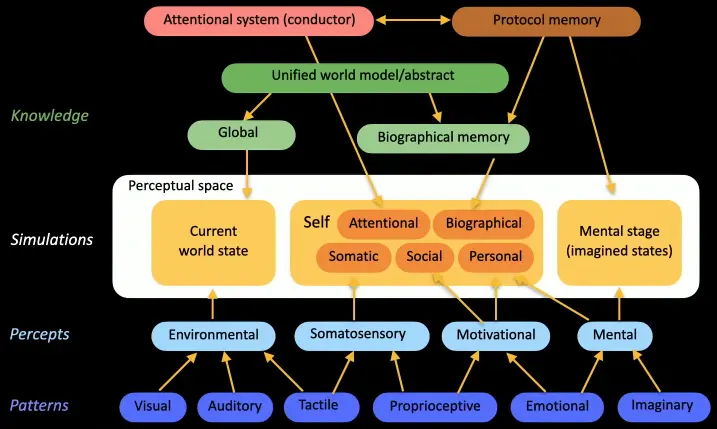

Perceptual system:

Possibilities: What fits together?

Probabilities: How should we converge?

Biases that guide the search / … in possible state space. “Interpretation bias”.

Optical illusions are a consequence of this, but these biases let you converge faster.

E.g. you assume that faces are convex, so concave faces look convex, which is really trippy…
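A toy way to see how an interpretation bias can override weak evidence: treat the bias as a prior over candidate interpretations and combine it with the evidence via Bayes' rule. This is my own sketch, not Bach's formalism. The numbers in `FACE_PRIOR` and `ambiguous_evidence` are made up for illustration, and the sketch only shows the illusion side of the trade-off, not the faster convergence.

```python
from typing import Dict

Dist = Dict[str, float]


def posterior(prior: Dist, likelihood: Dist) -> Dist:
    # Bayes' rule over a discrete set of interpretations
    unnorm = {h: prior[h] * likelihood[h] for h in prior}
    total = sum(unnorm.values())
    return {h: p / total for h, p in unnorm.items()}


def interpret(prior: Dist, likelihood: Dist) -> str:
    # Commit to the single most probable interpretation
    post = posterior(prior, likelihood)
    return max(post, key=post.get)


# Hypothetical numbers: a strong learned bias that faces are convex
FACE_PRIOR: Dist = {"convex": 0.95, "concave": 0.05}

# Hypothetical shading evidence from a hollow (concave) mask:
# it slightly favours "concave", but only weakly
ambiguous_evidence: Dist = {"convex": 0.5, "concave": 0.6}

if __name__ == "__main__":
    print("with bias:", interpret(FACE_PRIOR, ambiguous_evidence))
    flat = {"convex": 0.5, "concave": 0.5}
    print("without bias:", interpret(flat, ambiguous_evidence))
```

With the biased prior the hollow mask is read as convex; with a flat prior the same evidence tips toward concave, so in this sketch the illusion comes entirely from the prior.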

“Valence”?: Intrinsic regulation targets.

Assign resources to those parts of the perceptual system that are most valuable to resolve - motivational preferences that assign relevance.

Normativity: Imposed regulation targets.

The attentional system singles out percepts by attending to them selectively.

It is controlled by an aspect of the self-model.

“Protocol memory” = Indexed memory.

The main purpose of the attentional system is learning. This is much more efficient, since you only need to change a few parts of your model at a time, as opposed to backpropagation, which works only on statistical properties by nudging all weights a little on large amounts of data.

We also use this attention to learn in real time on hypotheticals (imagined states).
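To make the contrast concrete, here is a toy sketch (my own illustration, not Bach's model) on a linear model. `dense_step` is ordinary gradient descent, which nudges every weight on each step; `attended_step` is a hypothetical attention-gated rule that only updates the `k` weights with the largest gradient magnitude. The names and the top-k gating are assumptions for illustration; the sketch only shows how many weights each rule touches, not that the sparse rule learns better.

```python
from typing import List


def predict(w: List[float], x: List[float]) -> float:
    # Linear model: dot product of weights and inputs
    return sum(wi * xi for wi, xi in zip(w, x))


def gradient(w: List[float], x: List[float], y: float) -> List[float]:
    # Gradient of the squared error 0.5 * (prediction - y)^2 for each weight
    err = predict(w, x) - y
    return [err * xi for xi in x]


def dense_step(w: List[float], x: List[float], y: float, lr: float = 0.1) -> List[float]:
    # Backpropagation-style update: every weight is nudged a little
    g = gradient(w, x, y)
    return [wi - lr * gi for wi, gi in zip(w, g)]


def attended_step(w: List[float], x: List[float], y: float,
                  lr: float = 0.1, k: int = 1) -> List[float]:
    # Hypothetical attention-gated update: only the k weights with the
    # largest gradient magnitude change, all others stay fixed
    g = gradient(w, x, y)
    attended = set(sorted(range(len(w)), key=lambda i: abs(g[i]), reverse=True)[:k])
    return [wi - lr * g[i] if i in attended else wi for i, wi in enumerate(w)]


def changed(before: List[float], after: List[float]) -> int:
    # Count how many weights an update actually touched
    return sum(1 for b, a in zip(before, after) if b != a)


if __name__ == "__main__":
    w = [0.0, 0.0, 0.0, 0.0]
    x, y = [1.0, 0.5, 0.2, 0.1], 1.0
    print("weights changed by dense step:", changed(w, dense_step(w, x, y)))
    print("weights changed by attended step:", changed(w, attended_step(w, x, y)))
```

Here the dense step changes all 4 weights, while the attended step changes only 1; with `k` equal to the number of weights, the two rules coincide.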

Priorities for building conscious agents.

Move to continuous models (change vs. state).

Coupling with the world (discovery; grounding).

Active construction of working memory.

Real-time + online learning.

Continuous models: Everything about our perception is about how one state changes into the next. Something staying the same is just a special case: a change into an identity. How do different percepts (over time or across sensors) relate to each other? Percepts we cannot explain are noise. → Minimize the amount of noise and maximize the number of bits that we can explain over time.
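A toy sketch of the "change vs. state" idea, under my own simplifying assumptions: "explaining" a percept means predicting it within a tolerance, and the count of unexplained percepts stands in for the amount of noise (a crude proxy, not a real bit count). `predict_no_change` models pure state (the next percept equals the last one), while `predict_same_change` models change (the last observed change repeats); the former is the special case of the latter with the change fixed at zero. All names are hypothetical.

```python
from typing import Callable, List

Predictor = Callable[[List[float]], float]


def predict_no_change(history: List[float]) -> float:
    # State model: "staying the same", i.e. a change into an identity
    return history[-1]


def predict_same_change(history: List[float]) -> float:
    # Change model: assume the last observed change repeats
    if len(history) < 2:
        return history[-1]
    return history[-1] + (history[-1] - history[-2])


def residuals(signal: List[float], predictor: Predictor) -> List[float]:
    # Whatever the model fails to predict is treated as unexplained "noise"
    return [signal[t] - predictor(signal[:t]) for t in range(1, len(signal))]


def unexplained(signal: List[float], predictor: Predictor, tol: float = 1e-9) -> int:
    # Number of percepts the model could not explain within the tolerance
    return sum(1 for r in residuals(signal, predictor) if abs(r) > tol)


if __name__ == "__main__":
    moving = [0.0, 1.0, 2.0, 3.0, 4.0]  # e.g. an object moving at constant speed
    still = [5.0, 5.0, 5.0, 5.0]        # nothing changes
    for name, p in [("state", predict_no_change), ("change", predict_same_change)]:
        print(name, unexplained(moving, p), unexplained(still, p))
```

On the moving signal the state model leaves 4 percepts unexplained and the change model only 1 (the very first step, before any change has been observed); on the still signal both explain everything.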

Challenge: How do we parallelize perception while maintaining a coherent mind, one that doesn’t take 16 years of training to reach human performance?

Coupling with the world puts huge constraints on the model.

We actively construct our working memory such that we have the most useful concepts for interpreting the knowledge in a book, as opposed to, for example, just shifting a context window.

Furthermore, we try to minimize the amount of things we have to memorize.

Silicon golems colonizing the world of the living, or the world of the living (us) spreading onto other substrates?

→ Systems that are empathetic, able to resonate with your mind (vibing in high resolution): perceptual feedback loops, mental states you could not have alone.

Our civilization doesn’t plan for the future anymore, due to the incoherence of our society.

References

Joscha Bach

Consciousness as a coherence-inducing operator - Consciousness is virtual

Footnotes

- Does this mean that consciousness requires a full integration of “sensory inputs”? Does he imply that qualia are sufficient for consciousness, or that they are the thing that distinguishes it? There does seem to be a shared consciousness among, e.g., layers of society (class consciousness, culture, memes, …), which also seems to strengthen as society becomes more connected. At what point does the interaction between individuals create a “what it is like” to be that collective (on a higher level)? (How) is this different from resonating / vibing in high resolution, which he mentions later on? ↩