Consciousness (self-modelling) is essential for cooperation.

See also: Consciousness as a coherence-inducing operator - Consciousness is virtual, and Machine Consciousness - From Large Language Models to General Artificial Intelligence - Joscha Bach, for a more in-depth view of Bach’s ideas on consciousness, which I find very compelling.

Transclude of Joscha-Bach#^fa68db

Transclude of Joscha-Bach#^7ac9c7

Consciousness has a hard lower limit (it’s a qualitative leap).

Taking Joscha Bach’s definition, consciousness is the ability of an agent to reflect on its own existence, i.e. to have a (predictive) model of itself in order to influence the future to achieve desired outcomes (goals): a “self-aware agent”.

From this it follows that

a) consciousness is a qualitative leap,

b) from there it is a continuous spectrum of “self-awareness”.

So calculators, cells or insects are not conscious. They do not have the ability to form models of themselves.

The current state of AI is not conscious either - but this does not mean that e.g. one big monolithic transformer as a substrate cannot achieve consciousness!

The minimal agent is a controller of future states.

An agent can be something as simple as a thermostat which can control future trajectories, with the goal of keeping the room at a certain temperature. Its decisions affect future trajectories.

In order to do that, it needs to model how the world is going to change as a result of its actions (with different expectation horizons in a branching world, …), rather than just taking measurements. The efficiency of this process is its intelligence.
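
A minimal sketch of the difference between measuring and modelling, assuming a made-up one-step room model. The names `predict` and `choose_action` and the `outside`, `leak` and `heat` parameters are hypothetical and chosen only for illustration. The thermostat rolls its model forward over a short horizon and picks the action whose predicted trajectory stays closest to the target:

```python
def predict(temp, heater_on, outside=5.0, leak=0.1, heat=2.0):
    """Hypothetical world model: the room drifts toward the outside
    temperature, and the heater adds a fixed amount of heat per step."""
    return temp + leak * (outside - temp) + (heat if heater_on else 0.0)


def choose_action(temp, target, horizon=3):
    """Return True (heater on) or False (heater off) for the next step,
    judged by the predicted trajectory rather than the current reading."""
    def cost(first_action):
        t, total, action = temp, 0.0, first_action
        for _ in range(horizon):
            t = predict(t, action)      # imagine the consequence of acting
            total += abs(t - target)    # how far off would we be?
            action = t < target         # simple rule for the later steps
        return total

    return min((False, True), key=cost)


print(choose_action(15.0, 21.0))  # cold room
print(choose_action(25.0, 21.0))  # warm room
```

The agent never acts on the measurement alone: each decision is scored by what the model says will happen next, which is the sense of "controller of future states" used here.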

We end up with a system that seems to have preferences, knowledge, commitments, goal-directedness, …

The more an agent is able to model its own actions as a causal factor in the world, the more self-aware (conscious) it becomes.

At this point, you start to edit your own source code, become self-authoring, change the way you interact, …

Transclude of Joscha-Bach#^e07f41

(Joscha Bach) Different levels of consciousness

Phenomenal consciousness: Awareness of content (what we observe).

Access consciousness: Awareness of mode of access (perception: cannot stop or edit; memory: not currently the case, can stop recall; imagination: counterfactual, can do anything you want with it).

Reflexive consciousness: Awareness of awareness.

First Person Perspective: Perspectival awareness of own agency.

Consciousness: Memory of content of attention.

Phenomenal consciousness: Memory of binding state.

Access consciousness: Memory of using attention.

Reflexive consciousness: Memory of using attention on attention on attention.

Layers of consciousness: Perception vs. reflection + construction; Indexed memory

Perception: Largely geometric. The perceptual system follows a gradient, coalescing to a geometric interpretation of perceptual reality. Gradient descent does not require memory of the steps you took along the way.

Reflection + construction: In reflexive perception, you construct reality - when you reason about things, you cannot just follow a gradient, you need memory in order to understand why you tried things when they didn’t work, so you can undo them, try another branch, …

This requires some kind of indexed memory that allows you to keep a protocol of what you did.
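
A toy contrast between the two layers, as a sketch only. `follow_gradient` and `solve_with_protocol` are hypothetical names, and subset-sum over positive numbers is just a stand-in for "reasoning that needs to undo failed attempts". The first function keeps nothing but its current state; the second keeps an explicit trail of decisions so it can back out of a dead end and try another branch:

```python
def follow_gradient(x, grad, step=0.1, iters=100):
    """Memoryless descent: only the current position is kept."""
    for _ in range(iters):
        x = x - step * grad(x)
    return x


def solve_with_protocol(target, numbers):
    """Find a subset of positive `numbers` summing to `target`,
    keeping a protocol (trail) of choices so they can be undone."""
    trail = []  # indexed memory: which items we have committed to

    def search(i, remaining):
        if remaining == 0:
            return True
        if i == len(numbers) or remaining < 0:
            return False
        trail.append(i)                      # try including numbers[i]
        if search(i + 1, remaining - numbers[i]):
            return True
        trail.pop()                          # it failed: undo that choice
        return search(i + 1, remaining)      # and try the other branch

    return [numbers[i] for i in trail] if search(0, target) else None


print(follow_gradient(0.0, lambda x: 2 * (x - 3)))   # settles near 3
print(solve_with_protocol(9, [5, 3, 4, 2]))          # [5, 4]
```

In the second call the search first commits to 5 and 3, finds that no completion works, removes 3 from the trail and continues with 4. Without the trail there would be nothing to undo.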

In order for something like system-2 thinking to arise from iterated fuzzy pattern cognition, that iteration sequence needs to be highly self-consistent.

For everything you add, you need to double check that it matches what came before it.

If you put in no guardrails, you are basically hallucinating / dreaming. You are just repeatedly intuiting what comes next, with no regard whatsoever for (self-)consistency (with the past).

→ Any deliberate logical processing in the brain needs to involve awareness / consciousness: a sort of self-consistency check, a process that forces the next iteration of this pattern cognition to be consistent with what came before it. The only way to achieve this consistency is via back-and-forth loops that bring the past into the present and bring your prediction of the future into the present, so you have this sort of nexus point in the present, the thing you are focusing on.

→ Consciousness is the process that forces iterative pattern cognition into something that’s actually like reasoning, self-consistent.
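
As a very rough illustration (not a model of the brain), the difference between unchecked iteration and iteration with a consistency gate can be sketched like this. The claim format and the names `consistent` and `iterate` are assumptions made up for the example: each proposal is a claim about an unknown number `x`, and a claim is only added if some `x` could still satisfy everything said so far.

```python
def consistent(history, claim):
    """Claims are ('>', v) or ('<', v) about an unknown x.
    They are consistent if at least one x satisfies all of them."""
    claims = history + [claim]
    lo = max((v for op, v in claims if op == '>'), default=float('-inf'))
    hi = min((v for op, v in claims if op == '<'), default=float('inf'))
    return lo < hi


def iterate(proposals, check=True):
    """Accumulate proposals; with check=True each new one must agree
    with everything accepted before it."""
    history = []
    for claim in proposals:
        if not check or consistent(history, claim):
            history.append(claim)
    return history


proposals = [('>', 2), ('<', 10), ('<', 1), ('>', 5)]
print(iterate(proposals, check=False))  # keeps the contradictory ('<', 1)
print(iterate(proposals, check=True))   # [('>', 2), ('<', 10), ('>', 5)]
```

Without the gate the sequence happily contains "x > 2" and "x < 1" side by side, which is the "dreaming" case; with it, every step is forced to fit the past.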

vertebrates. Their essential character: the grouping of the whole body about the nervous system. Thereby the development of self-consciousness, etc. becomes possible. In all other animals the nervous system is a secondary affair, here it is the basis of the whole organisation. - Friedrich Engels

Least action principles are the lowest form of what we mean by goal-directed effort and activity (which is strongly involved in affect and feeling and so on). A universe without least action laws is one where nothing ever happens. → “At least in our world, I don’t think there is a zero (for consciousness / goal-directedness).” - Michael Levin

There are incredibly minimal kinds of things, only smart enough to follow a gradient (no delayed gratification, no memory), but they are not zero:

The question to ask is: What can you depend on? If you build a roller coaster, you can depend on the fact that it will go down the hill / take the path of least action. Incredibly minimal, but already on the spectrum.

But there’s more to it:

Why an object (now deserving the name agent) needs to register how well or badly it’s doing is so that it can change its mind. - Mark Solms

You are then not obliged to continue the path you were pursuing.

→ There is a degree of uncertainty being introduced. The system cannot be 100% confident of what it’s doing. Pure local interaction is not enough. It needs to know the context in which it is acting, its history of actions… and that can’t be infinite! → This is the size of the cognitive light cone (what you need to consider in order to interact with a system) in Levin’s framework.

The bottom level of this (of free will) is random quantum indeterminism; what happens after that is a scaling: multiple (hierarchical) scales that help you tame that underlying noise and randomness into something that does begin to be causally linked to what was good or bad last time.

→ A higher level system guides a lower level system with some degree of freedom.

Mike thinks that some of this might arise without any indeterminism (see his recent research on sorting algorithms).

The simplest learning algorithm.

We don’t become conscious once we obtain our PhD… in fact, we cannot learn anything without being conscious.

There is no single human being that you can ever observe to get to any level of competence without achieving consciousness first. It might be the simplest training algorithm that nature has discovered for a self-organizing system!

→ There is something simpler than the transformer, something more elementary, more sample-efficient, that trains the mind into something that gets colonized by having a coherent language of thought with an information economy, where the different agents know what their value is - when they should be on stage rather than something else (agents here in the sense of the NOW model, not just neurons…).

There is no other way in biological systems - there is no outside force that can enforce coherence, so the individual cells have to be incentivized to find a structure among each other that makes them learn, and that trains new cells that come into the society to behave by the same rules.

Consciousness might be this government / conductor that trains and organizes others into the same pattern.

Most of the complexity and energy of biological neurons is spent on coordination and housekeeping for the neurons themselves (similar to how most of our intelligence usually doesn’t go into the company we work for, but into the maintenance of oneself and relationships with colleagues, …). Most of the bio-neuronal ability goes into maintaining their own functionality and their relationship to their immediate environment. Only a tiny fraction becomes available for information processing for the whole mind. E.g. they need to work in sync in order to create coherent patterns, while individually they are able to fire whenever. So a lot of the compute of the substrate goes into achieving synchronization, which comes for free in digital computation.

Consciousness seems to be needed to get un-stuck from stationary reasoning.

We only exist “as if”. We exist inside a dream reality (“simulacrum”) constructed by our brain, which is what makes consciousness so mysterious to us. - Joscha Bach

“Physical systems cannot be conscious, only simulations can. Consciousness is a virtual property”.

Does this explanation by chatty make sense?

In other words, the brain’s complex computational processes create a “simulation” of reality, and consciousness is a byproduct of this simulation. It’s as if our minds are running a “program” that generates the experience of being conscious. Thus, consciousness is virtual because it depends on the brain’s processing rather than existing independently as a physical entity.

This view challenges the notion that consciousness is directly tied to physical matter. Instead, it’s seen as an emergent phenomenon that arises from the “virtual” processes within the brain’s complex network.

So he is saying our conscious experience, our feelings, qualia?, … exist in an emergent simulacrum?

What does it even mean to be simulated? Why not just say that it operates on a different level of abstraction??

Also, yes, our deeper cognitive circuits do not directly perceive 100% of nature in an unaltered form, we constantly interpret and filter the few signals we do get, but … what is the point being made here?

One thing that follows from this is that qualia are substrate agnostic … you would think… but then he says this:

He also says “mechanical things cannot be conscious” (“a giant machine with parts pushing and pulling at each other - none of that is a perception or emotion”) … but can it not emerge from the mechanical interactions? Then what is the brain doing?

“consciousness is a virtual property, meaning it exists as if - like money, which is not a physical object. The token you use to symbolise money is not the money itself, but it is a system of causal structure that people have agreed on, and you have to assume the existence of money in order to understand how the world works. It is a ‘stable invariance’, but not a physical thing. It exists so consequently that it has causal power over reality (can shape it).” Fair enough, I can live with this explanation, even though I do not fully grasp it yet. But then again, what differentiates this from a sophisticated mechanical system?

→ I think I know how to resolve this: You cannot store a dynamic system which is dependent on the environment in space. You can store a snapshot of a brain (in theory), a snapshot of the consciousness. But it only has meaning when running.

→ A stable pattern or structure through time that emerges from the brain’s activity ←

Machine consciousness: “A computer that gives a damn.”

A conscious agent solves novel problems which are consequential to its existence, but not just that, it’s finding new problems that we never gave it. It has to deal with conflicting interests.

Consciousness is the sensation of what thinking feels like.

Consciousness is like a bad manager: slowing everything down and taking all the credit.

If you label something conscious, it becomes a political question. A second person’s rights are a burden for yourself.

We cannot use level of complication, since a human is just a more complicated arrangement than a piece of paper that has a charter of its rights written on it.

But is it able to act upon or interact with the world? Is it able to advocate or enforce its rights?

Consciousness is a specialization of computation.

Duh?

This specialization leads to the consciousness-like thing (single thread of experience, computational boundedness etc.) which corresponds to a somewhat human-like experience of the world.

→ From an anthropocentric view, it is how close something is to the human experience. See human intelligence, for why this might be justified.

Some types of consciousness

access consciousness

phenomenal consciousness

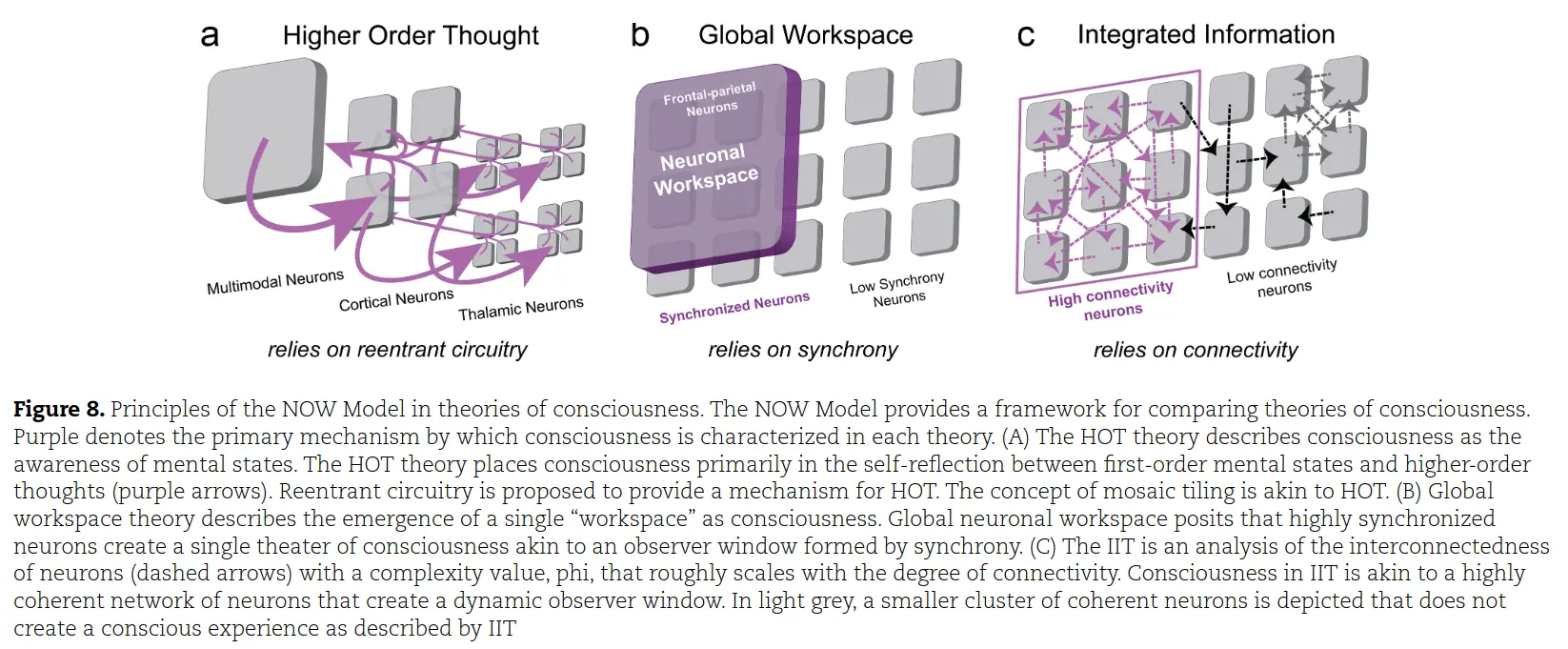

Comparison of theories of consciousness

Higher Order Thought Theory = Spotlight Theory / Configurator / Self-reflection (“awareness of mental states”).

Global Workspace Theory = An “observer window” consisting of highly synchronous neurons.

Integrated Information Theory = Consciousness comes from the overall connectivity.

I think it is something between HOTT and GWT, though I don’t fully grasp the description of GWT in the NOW model yet:


etc.

Chollet’s take:

These often-heard lines like, “consciousness is just a hallucination”, “consciousness is just what it feels like to be an information-processing system”, etc. sound nice, but they fail to address the hard problem, which is the existence of the subjective experience.

Hallucinations and feelings are things that happen to an agent that already has a subjective experience in the first place. The explanation is assuming the existence of the thing whose existence it was supposed to explain.

Karl Friston: your consciousness is 100% hallucination and fantasy; it is the product of your hypotheses about the world

francois chollet tweet

deprecated notes

Some of LeCun’s takes

It’s less of one thing, but more a relationship.

Consciousness isn’t really anything beyond what we perceive as the “configurator module” (LeCun; JEPA; Note: This is basically exactly what Joscha Bach is saying?):

Transclude of human-intelligence#common-sense

~~There is literally not a clearly defined term and it can be whatever you want.~~

~~Consciousness is a term atheists use for “soul”.~~

References

Machine Consciousness - From Large Language Models to General Artificial Intelligence - Joscha Bach