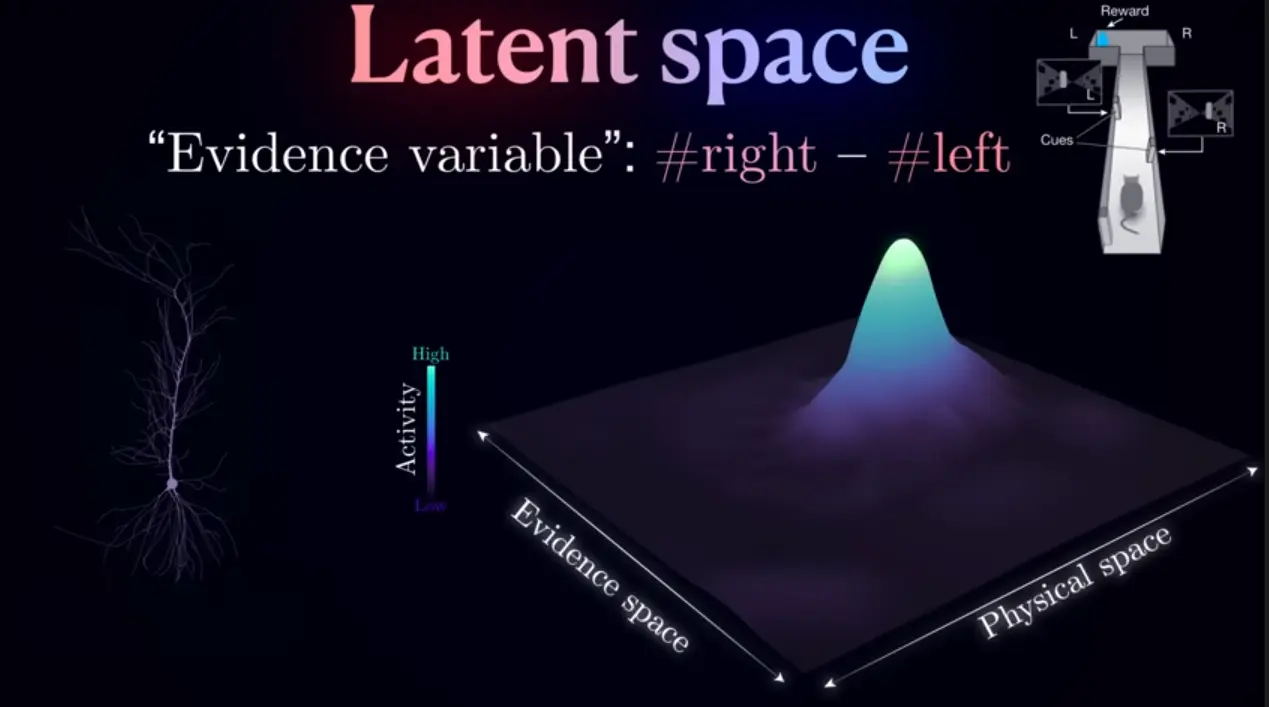

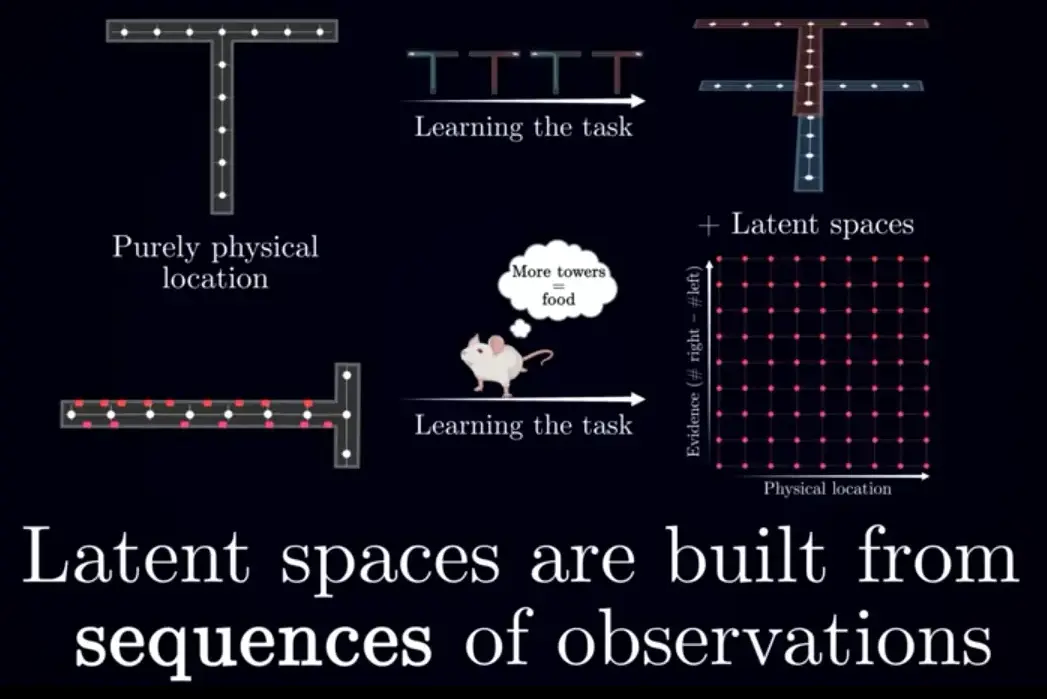

Latent space

Latent space = the space spanned by latent variables (“hidden features”) that are discovered from data through dimensionality reduction or generative models.

An implicit representation that emerges from the data’s inherent structure.

Latent representations are descriptive: they reveal hidden structure that is already present in your data.

As the name suggests, “latent” means hidden (not directly observable). Latent variables are the underlying factors or features that explain the variation in your observed data.
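To make “underlying factors that explain the variation” concrete, here is a minimal sketch using PCA (one dimensionality-reduction method) on synthetic data. The setup is an assumption for illustration: two hidden factors `z_true` are mixed into five observed features plus a little noise, and PCA is computed directly with NumPy's SVD rather than any particular library's PCA class.

```python
import numpy as np

# Hypothetical setup: 2 hidden factors generate 5 observed features.
rng = np.random.default_rng(0)
n_samples, n_latent, n_observed = 500, 2, 5

z_true = rng.normal(size=(n_samples, n_latent))   # hidden factors, never observed directly
mixing = rng.normal(size=(n_latent, n_observed))  # how each factor shows up in the features
x = z_true @ mixing + 0.05 * rng.normal(size=(n_samples, n_observed))  # observed data + noise

# PCA via SVD of the mean-centered data
x_centered = x - x.mean(axis=0)
_, singular_values, vt = np.linalg.svd(x_centered, full_matrices=False)
explained = singular_values**2 / np.sum(singular_values**2)  # variance ratio per component

# Project onto the top-2 principal directions: 2-D latent coordinates per sample
z_hat = x_centered @ vt[:n_latent].T

print(np.round(explained, 3))  # the first two ratios carry almost all the variance
print(z_hat.shape)
```

The recovered coordinates `z_hat` span the same subspace as the hidden factors, but only up to an invertible linear transform (rotation and scaling), so they need not match `z_true` column by column.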

Two views of latent variables

In a probabilistic sense, the “classic latent variable” is an unobserved random variable in a generative model that explains the data.
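A minimal sketch of the probabilistic view, assuming a two-component Gaussian mixture as the generative model (chosen here for illustration): the latent `z` is drawn from a prior p(z), the observation `x` is drawn from p(x | z), and then `z` is hidden. Inference means recovering it via Bayes' rule, p(z | x) = p(x | z) p(z) / Σ_z' p(x | z') p(z'). The names `sample`, `normal_pdf`, and `posterior` are hypothetical helpers.

```python
import numpy as np

rng = np.random.default_rng(1)

# Hypothetical generative model: z ~ Categorical(pi), x | z ~ Normal(mu[z], sigma)
pi = np.array([0.3, 0.7])   # prior p(z)
mu = np.array([-2.0, 3.0])  # mean of x for each value of z
sigma = 1.0

def sample(n):
    z = rng.choice(2, size=n, p=pi)  # latent: drawn, then hidden from the observer
    x = rng.normal(mu[z], sigma)     # observed data depends on z
    return z, x

def normal_pdf(x, mean, std):
    return np.exp(-0.5 * ((x - mean) / std) ** 2) / (std * np.sqrt(2 * np.pi))

def posterior(x):
    """p(z | x) for a scalar x via Bayes' rule."""
    joint = pi * normal_pdf(x, mu, sigma)  # p(z) p(x | z) for both values of z
    return joint / joint.sum()             # divide by the marginal p(x)

z, x = sample(1000)
print(posterior(-2.0))  # mass concentrated on z = 0
print(posterior(3.0))   # mass concentrated on z = 1

# Infer the hidden z for every sample and compare with the true (unobserved) values
recovered = np.array([posterior(xi).argmax() for xi in x])
accuracy = (recovered == z).mean()
print(accuracy)
```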

In a representation-learning (geometric) sense, latent variables are coordinates in a lower-dimensional latent space in which data points are embedded: a learned code $z = f(x)$ that is not directly observed but captures the essential features of the data. This code is deterministic given the input $x$, unless you introduce randomness on top.
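A minimal sketch of the deterministic case, assuming a hypothetical encoder `encode` whose fixed random weights stand in for a trained network: the same input always maps to the same latent coordinates. Randomness appears only when it is added explicitly, as in the equally hypothetical `encode_noisy`.

```python
import numpy as np

rng = np.random.default_rng(2)

# Hypothetical encoder f: R^4 -> R^2; the fixed weights stand in for a trained model.
W = rng.normal(size=(2, 4))
b = np.zeros(2)

def encode(x):
    """Deterministic code z = f(x): same input, same latent coordinates."""
    return np.tanh(W @ x + b)

def encode_noisy(x, noise_std=0.1):
    """Same encoder with randomness added on top: z = f(x) + noise."""
    return encode(x) + noise_std * rng.normal(size=2)

x = np.array([0.5, -1.0, 2.0, 0.0])
print(encode(x), encode(x))              # identical codes
print(encode_noisy(x), encode_noisy(x))  # codes differ from call to call
```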

Variational Autoencoders combine both views: they explicitly model latent variables as random variables (the encoder outputs a distribution over $z$ rather than a single code), while also learning a latent space representation.
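A minimal sketch of how a VAE-style encoder joins the two views, under loud assumptions: the linear maps `W_mu` and `W_logvar` are random placeholders for trained networks, and only the encoder side is shown (no decoder or training loop). The encoder deterministically outputs the mean and log-variance of a Gaussian q(z | x), which is a point in latent space; a latent sample is then drawn with the reparameterization trick z = μ + σ·ε. The closed-form KL divergence to the standard normal prior is the regularizer used in the VAE objective.

```python
import numpy as np

rng = np.random.default_rng(3)

# Hypothetical encoder head: linear maps to the mean and log-variance of q(z | x).
latent_dim, input_dim = 2, 4
W_mu = rng.normal(size=(latent_dim, input_dim))
W_logvar = 0.1 * rng.normal(size=(latent_dim, input_dim))

def encode(x):
    """Deterministic part: parameters of the Gaussian q(z | x)."""
    return W_mu @ x, W_logvar @ x

def sample_z(mu, log_var):
    """Stochastic part (reparameterization trick): z = mu + sigma * eps, eps ~ N(0, I)."""
    eps = rng.normal(size=mu.shape)
    return mu + np.exp(0.5 * log_var) * eps

def kl_to_standard_normal(mu, log_var):
    """Closed-form KL(N(mu, diag(sigma^2)) || N(0, I))."""
    return -0.5 * np.sum(1 + log_var - mu**2 - np.exp(log_var))

x = np.array([0.5, -1.0, 2.0, 0.0])
mu, log_var = encode(x)
z1, z2 = sample_z(mu, log_var), sample_z(mu, log_var)
print(mu)                                  # same for every call with this x
print(z1, z2)                              # random samples scattered around mu
print(kl_to_standard_normal(mu, log_var))  # non-negative
```

Using `mu` alone gives a deterministic embedding (the geometric view), while sampling `z` treats the latent as a random variable (the probabilistic view).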