This note refers to LLM scaling laws.

Power-law scaling laws probably come from a scale-free / heavy-tailed distribution of (the lengths of) correlations → heavy tails are important but hard to reach!
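Below is a minimal toy sketch of the heavy-tail argument. It rests on two assumptions that are not from the source. First, the "correlations" have Zipf-distributed frequencies $p_k \propto k^{-\alpha}$. Second, a model with capacity $n$ learns exactly the $n$ most frequent ones. The leftover loss is then the tail mass $\sum_{k>n} p_k \propto n^{1-\alpha}$, which is a power law in $n$. The helper names `residual_loss` and `fitted_exponent` are hypothetical.

```python
import numpy as np

# Toy model (assumption, not from the papers): "correlations" have Zipf frequencies
# p_k ∝ k^(-alpha); a model of capacity n learns the n most frequent ones, and its
# residual loss is the probability mass of everything it has not learned.


def residual_loss(alpha: float, n_learned: np.ndarray, k_max: int = 1_000_000) -> np.ndarray:
    """Probability mass of all ranks > n under a Zipf(alpha) law truncated at k_max."""
    ranks = np.arange(1, k_max + 1, dtype=np.float64)
    p = ranks ** (-alpha)
    p /= p.sum()                   # normalise to a probability distribution
    tail = p[::-1].cumsum()[::-1]  # tail[i] = p[i] + ... + p[-1], i.e. mass of ranks i+1..k_max
    return tail[n_learned]         # mass of the ranks the model has not learned


def fitted_exponent(alpha: float) -> float:
    """Slope of log(loss) vs log(n), fitted over three decades of n."""
    n = np.unique(np.logspace(1, 4, 20).astype(int))  # n from 10 to 10_000
    loss = residual_loss(alpha, n)
    slope, _ = np.polyfit(np.log(n), np.log(loss), 1)
    return float(slope)


for alpha in (2.0, 3.0):
    # the fitted exponent should come out close to 1 - alpha
    print(f"alpha={alpha}: fitted exponent {fitted_exponent(alpha):.3f}, theory {1 - alpha:.1f}")
```

In this toy picture, a heavier tail (smaller $\alpha$) gives a shallower exponent. Each tenfold increase in $n$ also removes a shrinking slice of the remaining mass, which is one way to read "heavy tails are important but hard to reach".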

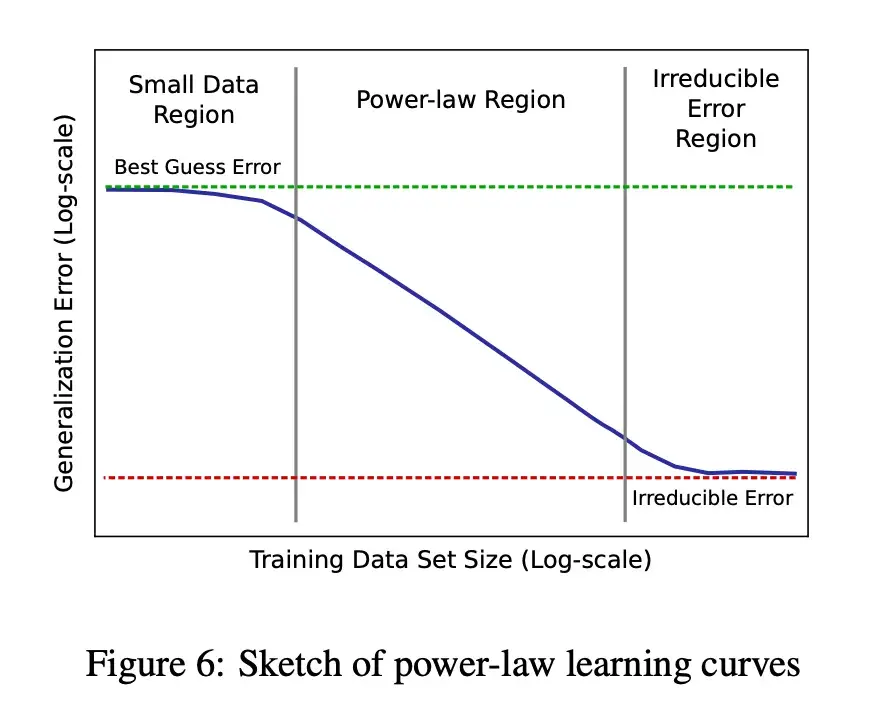

TLDR of some papers: Adam and xLSTM improve scaling laws by a constant factor, but only data (distribution, dataset size) improves the power-law exponent; data pruning can even do better than power-law scaling: