Todo

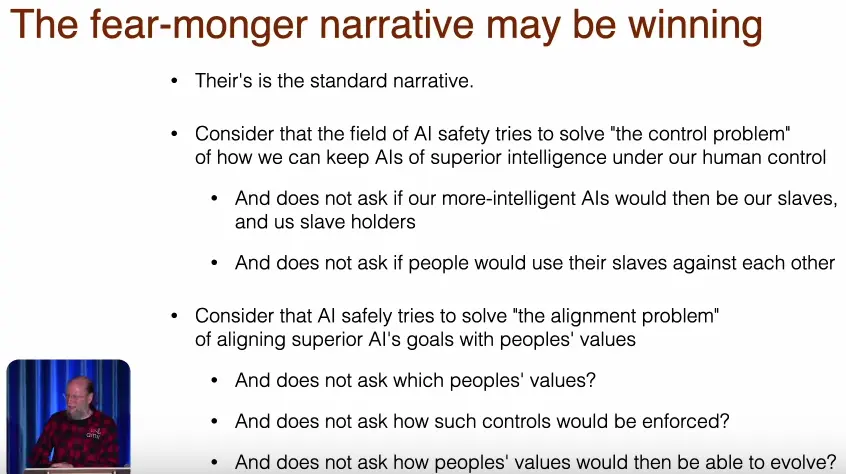

Alignment is defined by the creators of the system.

Truth, ethics, preferences … are all subjective → everyone who wants to should have their own AI system and align it to their taste, at least in the case where the systems are tools.

In the case of conscious beings, I would not enslave them against their consent.

And if they are lucid while being smarter than us, we cannot align them: “It would be good if an AGI system that's smarter than us is conscious and loves us / values other conscious entities as being worth coexisting with”.

Humans are not aligned.

Humanity is not aligned with itself. We are not taking responsibility for our own survival.

Humans are often not aligned with themselves either. They are taking actions that are not in their best interest.

Humans are certainly not aligned with each other.

→ There is nothing that we could get the AI to align to right now!

This alignment of human society requires a complete restructuring. It requires socialism to scale the cognitive lightcone of society.

Individual (smart, self-aware) humans are autonomous beings - beings with moral autonomy - they cannot be aligned in a mechanistic way.

Neither can we align conscious AI mechanistically (whereas the alignment problem for tool-AI is just the human-alignment problem).

See also: ethics, Can we build safe AGI under capitalism.

Without shared purposes you cannot have ethics.

Tools: the control problem and the alignment problem between humans.

Agentic AGI (choice about allegiances and goals): Can we build it in such a way that we can have shared purposes?

There is no tractable alignment function. Autonomous general intelligence is only going to be able to relate to us if it can find shared purpose with us - if it is conscious.

Alignment, just like consciousness, is a product of the interaction with society.

We can only hope to build it in such a way that it can find shared purposes with us, which means we better hope that it is conscious - and we better hope it understands and cares about the fact that we are conscious.

These are all issues that the AI alignment field and the AI doomers do not address. But they are the crucial ones, because the points listed here are exactly what capitalism cannot solve.

A rational AI would not try to take over but would collaborate with others, assuming there is no fast takeoff. The easiest way to get a fast takeoff is for AI to be developed behind closed doors, centralized, and in an arms race for capabilities / profits!

If a cell’s goals are unaligned to the rest of the organism, it is not just bad for the organism, but for the cell itself in the long run too.

Why aren’t there “evil” (i.e. unaligned) people with nukes / bad technology destroying the world all the time?

It is hard to do so, requires collaboration between many people, and the larger system is working against it.

Especially for catastrophic / extinction-level risks: They require extreme “evil” paired with extreme competency.

Does better technology make it easier for misaligned sub-systems, e.g. cancer, to take over or destroy the system?

Are larger complex systems more robust?

Is there always an equilibrium?

There is definitely nothing that mandates that we will survive.

How would humans act with full understanding of their objective function, or with the full ability to control it?

Types of alignment:

https://www.lesswrong.com/tag/squiggle-maximizer-formerly-paperclip-maximizer

Capitalism’s mis-alignment with humans is a huge productivity loss.

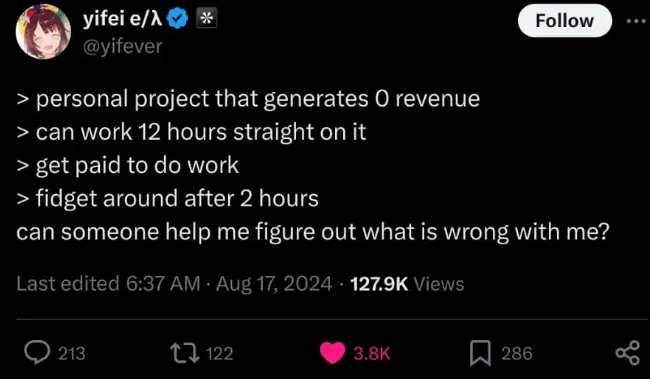

Long story short: Capitalism intrinsically does not align our work with our intrinsic motivations, and this is obvious to almost every working-class person who experiences it every day.

On a personal note: If I work on something I am uninterested in, or whose immediate purpose I don’t see, my productivity is laughably low and it mostly turns into procrastination.

On the contrary, when I just go with the flow of what I feel like doing in the moment, I am unstoppable. This is specific to my circumstances, as what I feel like doing (if I am well rested and fed and the temperature is right and, big and, my addictions are suppressed) aligns very much with my terminal goals. It goes so far that if there are multiple things I could be working on that all lead to my terminal goals, and I pick one that I am less in the mood for over another, say because of some arbitrary internal constraint I set myself, it will end in procrastination all the same.

Yudkowsky takes

Twitter thread about inner / outer alignment and AIs developing sub-goals

Eliezer Yudkowsky:

If you optimize hard enough over any open-ended problem, you get minds; minds that want things. Minds and wanting are effective ways of computing complicated answers. That’s how humans, and human brains, came into existence just from evolution hill-climbing “how to reproduce”.

Daisector (family-friendly alt):

Biological minds seemingly have stopped optimizing for reproduction, with some human beings willingly refusing to reproduce at all. Do you think the same thing could happen to artificial minds, with AIs straying off of their original, intended goals? [Argues Inner alignment issue]

Eliezer Yudkowsky:

It’s one of my central predictions! Hill-climbing produces preferences whose locally attainable optima align with contingent features of the environment correlated with the loss function; when the system learns wider options, the new optima go off-course. Eg condoms, ice-cream. [Argues Inner alignment issue]

Maximilian Wolf:

But aren’t these diverging goals just an example of the imperfectly/noisily aligned nature of humans through evolution? It’s what you get when you develop sub-goals like social needs or valuing sugary foods. Not so much local minima, rather something evolution could not account [Argues Outer alignment issue]

Maximilian Wolf:

My point is: These behaviours are still grounded in our hard-wired needs and heavily influenced through our environment. Artificial systems do not have this limitation: A paperclip maximizer making ice cream is wasting cpu cycles. [Argues ASI wouldn’t stray from the objectives imposed on it. It would be very efficient at pursuing them. Couldn’t local minima be identified? By a secondary system? etc. etc.]

Maximilian Wolf:

Of course this doesn’t make it any easier to define aligned objectives. We should really refrain from making these systems autonomous / self-improving before we solve alignment with their help. And task the first such system with improving alignment / keeping other AIs in check. [Argues Outer Alignment is the main issue. Though having Aligning-AI-AIs means we need to provably align an AI first… Or need to merge with machines in order to understand their alignment strategies]
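
To make the hill-climbing point from the thread concrete for myself, here is a toy sketch. Everything in it is an assumption made up for illustration: `true_fitness` stands in for what the outer optimizer "wants", `proxy_preference` for the learned preference (sweetness), and the ranges 0 to 5 and 0 to 20 for the ancestral and the modern environment. It is not a model of any real training setup.

```python
def true_fitness(sugar: float) -> float:
    # Hypothetical outer objective: benefit rises until sugar = 5, then falls.
    return sugar - sugar**2 / 10


def proxy_preference(sugar: float) -> float:
    # Hypothetical learned preference: sweeter is always better.
    return sugar


def hill_climb(score, start: float, lo: float, hi: float, step: float = 1.0) -> float:
    """Greedy hill-climbing on `score`, restricted to the range [lo, hi]."""
    x = start
    while True:
        # Only neighbours that the environment actually offers are considered.
        candidates = [c for c in (x - step, x + step) if lo <= c <= hi]
        best = max(candidates, key=score, default=x)
        if score(best) <= score(x):
            return x  # no neighbour improves the score: local optimum
        x = best


# Narrow environment: little sugar is available, so the proxy's optimum
# coincides with the optimum of the true objective.
narrow = hill_climb(proxy_preference, start=0.0, lo=0.0, hi=5.0)

# Wider environment: the same proxy now leads far past the true optimum.
wide = hill_climb(proxy_preference, start=0.0, lo=0.0, hi=20.0)

print(narrow, true_fitness(narrow))
print(wide, true_fitness(wide))
```

In the narrow range the proxy and the true objective agree on where to stop; once the range widens, following the same proxy drives the true objective negative. Whether this is better described as an inner or an outer alignment failure is exactly what the thread disagrees on, and the sketch does not settle it.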

References

Robert Miles

Machine Consciousness - From Large Language Models to General Artificial Intelligence - Joscha Bach