year: 2019

paper: https://www.nature.com/articles/s41467-019-11786-6.pdf

website:

code:

connections: genomic bottleneck, inductive bias, biologically inspired, Encoding innate ability through a genomic bottleneck, Anthony Zador, indirect encoding

I haven’t read the paper myself yet; below are summaries from c4o and g5t, with my comments in nested bullets:

- Most animal behavior isn’t learned from scratch – it’s largely innate, encoded as wiring rules shaped by evolution. This pre-structured circuitry lets animals learn fast from little data.

- Because genomes can’t list every synapse, they pass brain design through a genomic bottleneck: compact rules that build useful circuits (scaffolds) on which experience fine-tunes details.

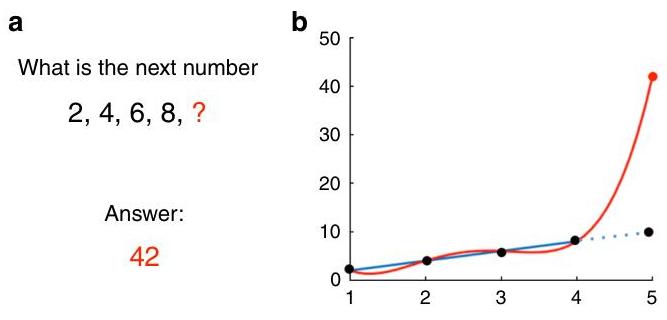

- Modern ANNs, by contrast, lean on massive supervised datasets and still struggle with the data efficiency and generality that even simple animals display.

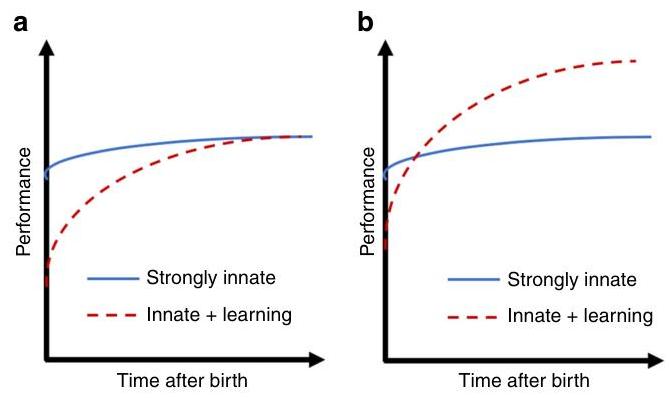

- Innate and learned mechanisms work together (e.g., place cells, face areas): the scaffold is built in, and the specifics are learned rapidly.

- There’s an outer evolutionary optimization (over generations) and an inner lifetime learning loop.

- Rather than transferring entire trained networks, pass information through a bottleneck similar to the genome to extract more generalizable rules.

- Bake in stronger inductive biases/architectures (à la CNNs), and explore brain-inspired wiring rules to get rapid, low-data learning.
    - → Strong disagree; use transformers or search the wiring from scratch.

- Pursue meta-learning/transfer.
    - → Strong agree, plus cultural/imitation learning to bootstrap (after/on top of innate abilities).
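A minimal sketch of the genomic-bottleneck idea from the summary above, under loudly stated assumptions: neurons are labelled with binary ID codes, and a tiny hypothetical "genome" (here just a linear map followed by `tanh`; a real one would be a richer function) maps each (pre, post) ID pair to a synaptic weight. The only point is the compression: the genome's size grows with the number of ID bits, not with the number of synapses it specifies. `neuron_id_bits`, `Genome` and `decode` are illustrative names, and no outer loop that would actually optimize the genome is shown.

```python
import math
import random


def neuron_id_bits(index: int, n_bits: int) -> list[int]:
    """Binary identity code of a neuron (a stand-in for its gene-expression label)."""
    return [(index >> b) & 1 for b in range(n_bits)]


class Genome:
    """Hypothetical compact 'genome': maps (pre, post) ID bits to one synaptic weight.

    Its size depends only on n_bits (2 * n_bits + 1 numbers), not on how many
    synapses it is used to specify.
    """

    def __init__(self, n_bits: int, seed: int = 0):
        rng = random.Random(seed)
        self.n_bits = n_bits
        # One coefficient per pre/post ID bit, plus a bias (last entry).
        self.params = [rng.gauss(0.0, 1.0) for _ in range(2 * n_bits + 1)]

    def weight(self, pre: int, post: int) -> float:
        bits = neuron_id_bits(pre, self.n_bits) + neuron_id_bits(post, self.n_bits)
        # zip stops at the shorter list, so the bias (last param) is added separately.
        z = self.params[-1] + sum(p * b for p, b in zip(self.params, bits))
        return math.tanh(z)


def decode(genome: Genome, n_pre: int, n_post: int) -> list[list[float]]:
    """'Development': unfold the genome into a full n_post x n_pre weight matrix."""
    return [[genome.weight(i, j) for i in range(n_pre)] for j in range(n_post)]


if __name__ == "__main__":
    n_bits = 6                                     # 2**6 = 64 neurons per layer
    genome = Genome(n_bits)
    W = decode(genome, 2 ** n_bits, 2 ** n_bits)
    print(len(W) * len(W[0]), len(genome.params))  # 4096 13
```

Here 13 genome numbers specify all 4096 weights between two 64-neuron layers; the price is that such a small genome can only express very regular wiring, which is where lifetime learning has to fill in the details.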

A follow-up paper by the same author five years later covers exactly this: Encoding innate ability through a genomic bottleneck (its note also includes a nice YT talk, which I think is how I discovered this paper in the first place).

(S)SL mimics behaviors that evolution found over millions of years, rather than within-lifetime learning…

Missing stepping stones: ones that are not directly rewarded or on the clear path of optimizing the objective, but without which future discoveries become increasingly unlikely.

This made me think: if you want to address FER and learn in an inner/outer loop of RL/evolution (the closest actual implementation I’ve seen so far is OMNI-EPIC), why not start from transformers trained from scratch, in language space? (Assuming something like NEAT is intractable as a starting point for language.)

And don’t use any RL during the lifetime at all, so that these innate skills and adaptation abilities get encoded into the weights directly: add the reward (or a prompt…) to the network’s input so it can condition on it, but don’t use RL to train the weights.
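A toy version of this inner/outer split, as a sketch under strong simplifying assumptions: instead of a transformer, a tiny linear policy with frozen weights; instead of open-ended tasks, a two-armed bandit whose rewarding arm is re-drawn every "lifetime". The only lifetime input is a one-hot code of the last (action, reward) pair, so any adaptation has to come from conditioning on reward, never from weight updates, while the outer loop is plain truncation-selection evolution over the weights. All names and hyperparameters are hypothetical.

```python
import random

T = 10  # pulls per "lifetime"


def features(prev_action, prev_reward):
    """One-hot of the last (action, reward) pair plus a bias input.

    Index 0/1: won with arm 0/1; index 2/3: lost with arm 0/1; index 4: bias.
    """
    x = [0.0] * 5
    if prev_action is not None:
        x[prev_action + 2 * (1 - prev_reward)] = 1.0
    x[4] = 1.0
    return x


def act(weights, x):
    """Frozen linear policy: argmax over two logits (ties go to arm 0)."""
    logits = [sum(w * xi for w, xi in zip(row, x)) for row in weights]
    return 0 if logits[0] >= logits[1] else 1


def lifetime(weights, good_arm):
    """Inner loop: no weight updates; reward only enters as the next input."""
    prev_a, prev_r, total = None, 0, 0
    for _ in range(T):
        a = act(weights, features(prev_a, prev_r))
        r = 1 if a == good_arm else 0
        total += r
        prev_a, prev_r = a, r
    return total


def fitness(weights):
    """Mean reward per pull over both possible rewarding arms (deterministic)."""
    return sum(lifetime(weights, g) for g in (0, 1)) / (2 * T)


def evolve(pop_size=40, n_elite=10, generations=60, sigma=1.0, seed=0):
    """Outer loop: truncation selection with elitism plus Gaussian mutation."""
    rng = random.Random(seed)

    def random_weights():
        return [[rng.gauss(0.0, 1.0) for _ in range(5)] for _ in range(2)]

    pop = [random_weights() for _ in range(pop_size)]
    for _ in range(generations):
        pop.sort(key=fitness, reverse=True)
        elite = pop[:n_elite]
        children = []
        for _ in range(pop_size - n_elite):
            parent = rng.choice(elite)
            children.append([[w + rng.gauss(0.0, sigma) for w in row] for row in parent])
        pop = elite + children
    best = max(pop, key=fitness)
    return best, fitness(best)


if __name__ == "__main__":
    best, best_fit = evolve()
    print(f"best fitness: {best_fit:.2f}")
```

Because the first pull is a blind guess, no frozen policy can score more than 19/20 = 0.95 here, and reaching it requires win-stay/lose-shift behaviour: an "innate" adaptation strategy that the outer loop has to discover and write into the weights.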

Though, of course, there’s no bottleneck in that.

Which is why, instead of a single transformer, you should do VSML (Variable Shared Meta Learning) on top of it.

(+ maybe grow the structure of that meta-network)
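To make the bottleneck argument concrete, here is a heavily simplified sketch in the spirit of VSML, with its assumptions made explicit: plain `tanh` recurrent cells instead of the small LSTMs the original uses, a single forward message per connection, and one scalar feedback signal per output unit standing in for the backward messages. Every connection keeps its own state (the lifetime memory), but all connections are updated by the same small parameter set `theta` (the "genome"), whose size does not depend on layer width. `VSMLLayer` and its methods are hypothetical names, not the VSML reference code.

```python
import math
import random


class VSMLLayer:
    """Simplified VSML-style layer (hypothetical sketch, not the reference implementation).

    Each connection (i -> j) has its own small state vector, but every connection
    updates its state with the SAME shared parameters `theta`.
    """

    def __init__(self, n_in: int, n_out: int, state_size: int = 4, seed: int = 0):
        rng = random.Random(seed)
        self.n_in, self.n_out, self.k = n_in, n_out, state_size
        # Shared cell: [state (k), input x_i, feedback f_j, bias] -> new state (k).
        self.theta = [[rng.gauss(0.0, 0.5) for _ in range(state_size + 3)]
                      for _ in range(state_size)]
        # Per-connection states: the only thing that changes within a "lifetime".
        self.state = [[[0.0] * state_size for _ in range(n_in)] for _ in range(n_out)]

    def step(self, x: list[float], feedback: list[float]) -> list[float]:
        """Update every connection's state; output j sums its connections' first state unit."""
        out = []
        for j in range(self.n_out):
            total = 0.0
            for i in range(self.n_in):
                z = self.state[j][i] + [x[i], feedback[j], 1.0]
                new_s = [math.tanh(sum(t * zi for t, zi in zip(row, z))) for row in self.theta]
                self.state[j][i] = new_s
                total += new_s[0]  # first state unit doubles as the forward message
            out.append(total)
        return out

    def n_shared_params(self) -> int:
        return sum(len(row) for row in self.theta)

    def n_state_values(self) -> int:
        return self.n_out * self.n_in * self.k


if __name__ == "__main__":
    for width in (8, 64):
        layer = VSMLLayer(width, width)
        print(width, layer.n_shared_params(), layer.n_state_values())
    # 8 28 256
    # 64 28 16384
```

Widening the layer from 8 to 64 units multiplies the per-connection state by 64, while the shared, evolvable part stays at 28 numbers: the kind of bottleneck a single transformer’s weights don’t impose.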

Call me crazy, but I do agree with this, even in late 2025:

The paper ultimately argues that achieving even mouse-level intelligence will require incorporating these evolutionary insights, but that once it is achieved, human-level intelligence may be relatively close, since human brains use similar principles to other mammals, just elaborated further.

Innate vs lifetime learning is a tradeoff

Mike develops this idea:

![michael levin ratchet](<michael levin ratchet-1754080606610.webp>)

He ties this to Occam’s razor and the bias-variance tradeoff, but recent work suggests that simplicity is just a correlate of what actually matters: see the weakness principle.