Key points from skimming the slides again, days after watching the talk:

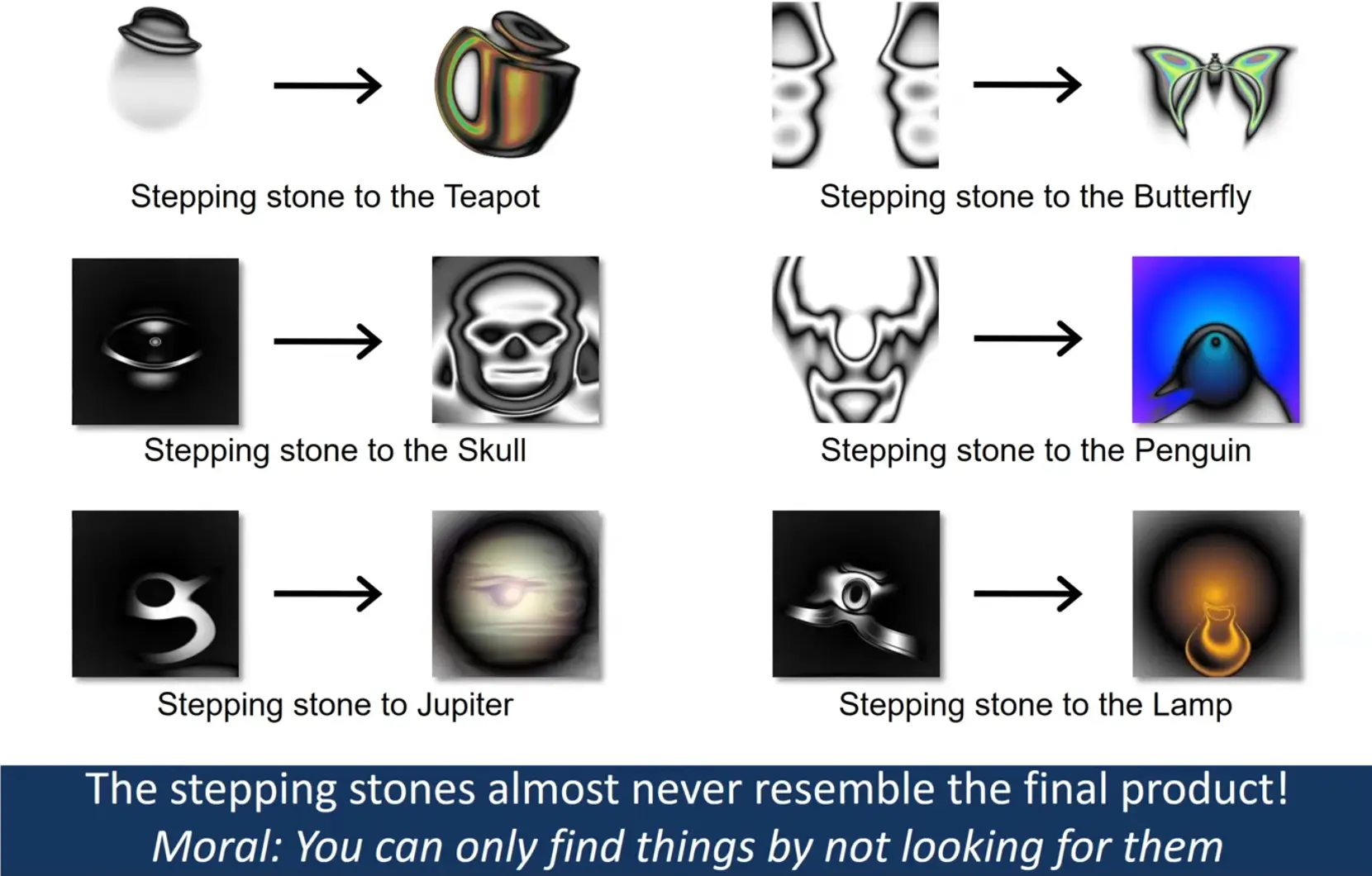

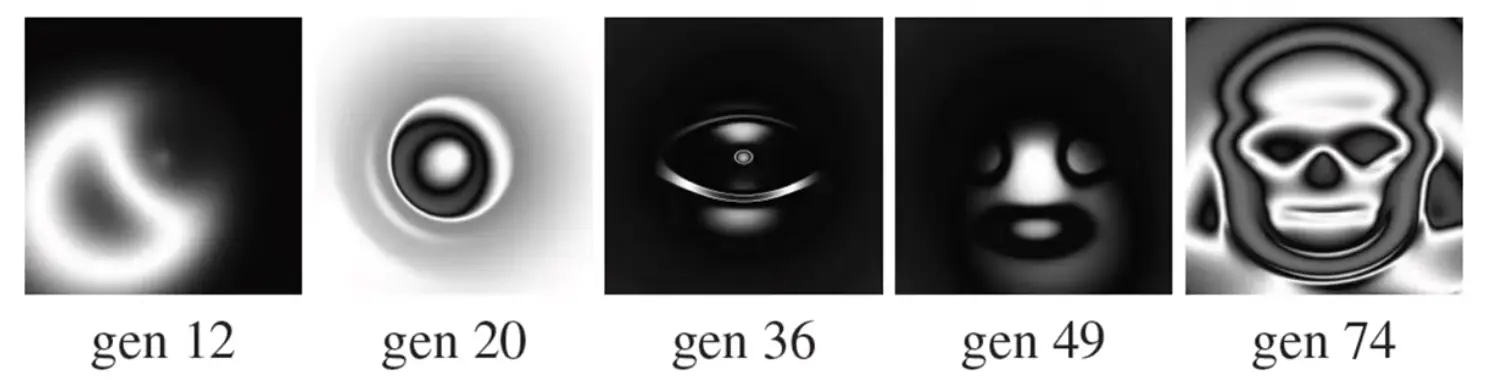

Novelty search can often lead to simpler, more effective/general solutions than objective-based optimization, because it discovers useful stepping stones that would otherwise be overlooked.

The stepping stones almost never resemble the final product

“you can only find things by not looking for them”
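As a rough illustration of the novelty search idea above, here is a minimal Python sketch. It assumes a toy 2-D genome and a made-up behavior characterization (`behavior_of` is hypothetical, as are all parameter values). Novelty is scored as the mean distance to the k nearest neighbours in behavior space, counting the current population plus an archive of past novel behaviors; selection never looks at an objective.

```python
import numpy as np


def novelty_scores(behaviors, archive, k=5):
    """Novelty of each row of `behaviors`: mean Euclidean distance to its k
    nearest neighbours among the other current behaviors and the archive."""
    pool = np.vstack([behaviors, archive])
    # dists[i, j] = distance from behavior i to pool entry j.
    dists = np.linalg.norm(behaviors[:, None, :] - pool[None, :, :], axis=-1)
    # Pool entry i is behavior i itself; exclude that zero self-distance.
    n = len(behaviors)
    dists[np.arange(n), np.arange(n)] = np.inf
    k = min(k, len(pool) - 1)
    return np.sort(dists, axis=1)[:, :k].mean(axis=1)


def behavior_of(genomes):
    # Hypothetical behavior characterization (e.g. a robot's final x, y);
    # here just a bounded nonlinear map of the genome.
    return np.tanh(genomes)


def novelty_search(pop_size=20, dim=2, generations=50, k=5,
                   archive_threshold=0.3, sigma=0.1, seed=0):
    rng = np.random.default_rng(seed)
    pop = rng.normal(0.0, 0.1, size=(pop_size, dim))
    archive = np.empty((0, dim))
    for _ in range(generations):
        behaviors = behavior_of(pop)
        scores = novelty_scores(behaviors, archive, k)
        # Remember sufficiently novel behaviors so later generations are
        # pushed away from what has already been found.
        archive = np.vstack([archive, behaviors[scores > archive_threshold]])
        # Select on novelty alone: no objective is ever consulted.
        parents = pop[np.argsort(scores)[-(pop_size // 2):]]
        children = parents + rng.normal(0.0, sigma, size=parents.shape)
        pop = np.vstack([parents, children])
    return pop, archive
```

Swapping `behavior_of` for a real behavior descriptor (say, where a maze robot ends up) is what makes the search meaningful; the sketch only shows the mechanics of scoring, archiving and selecting.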

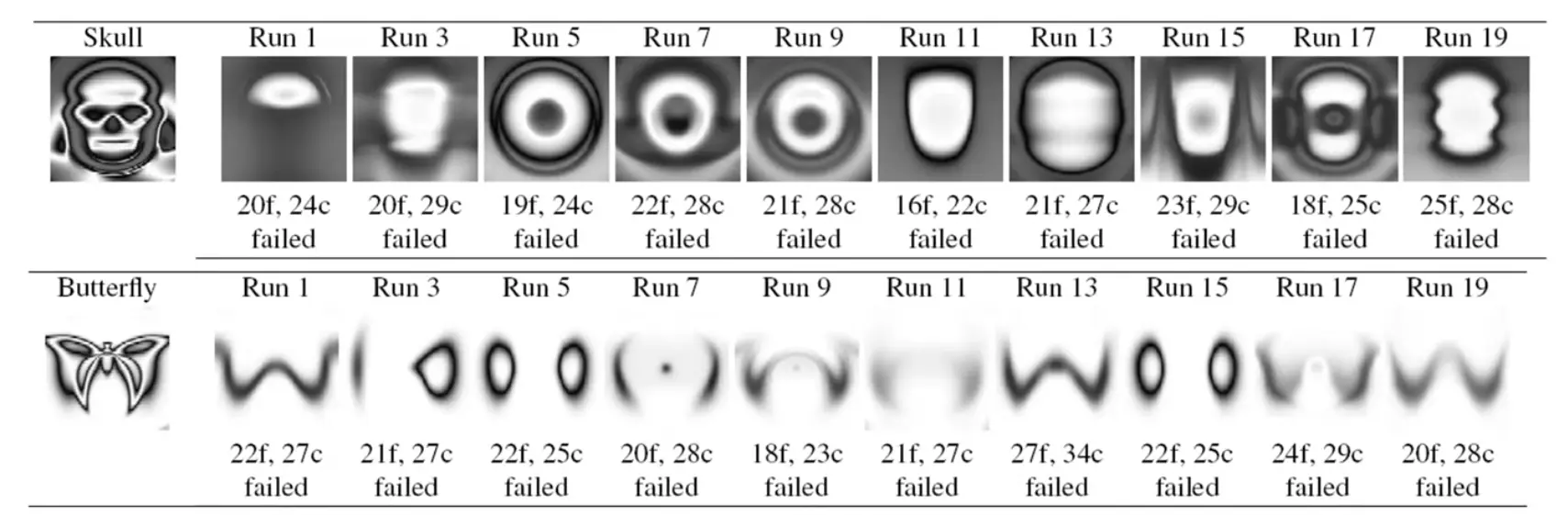

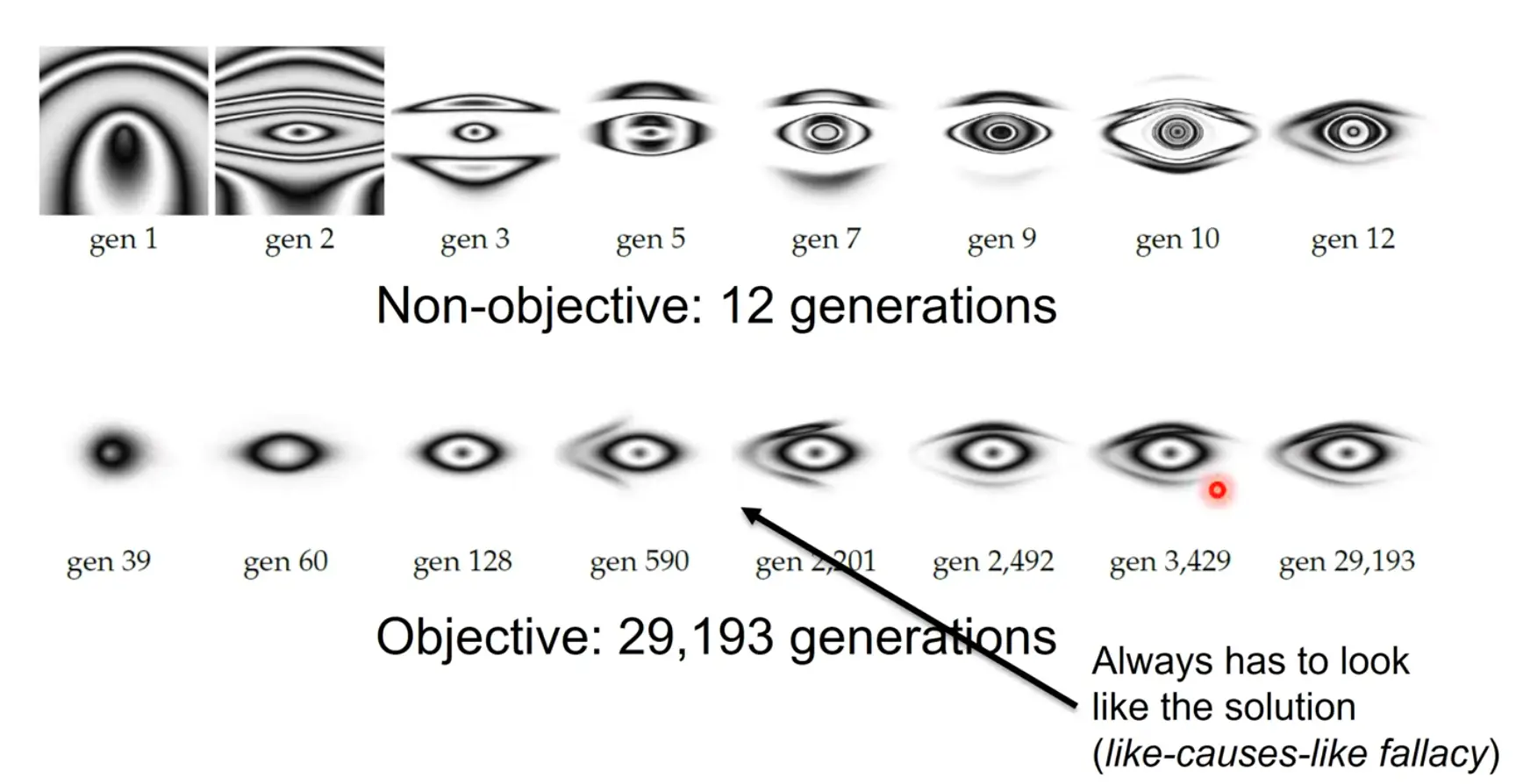

The paths are deceptive:

(It might look like we’re going in the wrong direction when we’re actually on track, or vice versa.)

Like-causes-like fallacy
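A toy, hypothetical 1-D landscape makes the deception point concrete: the positions that lead to the global optimum (x = 6 to 9) score worse than the local peak, so they look like the wrong direction. A greedy objective-driven climber stops at the local peak, while a walker that ignores fitness and simply goes where it hasn't been yet crosses the valley. The walker is a caricature of novelty search, not the real algorithm, and the landscape is invented for illustration.

```python
LOW, HIGH = 0, 20


def fitness(x: int) -> int:
    """Hypothetical deceptive landscape on the integers LOW..HIGH."""
    if x <= 5:
        return x          # the gradient leads to a local peak at x = 5
    if x < 10:
        return 10 - x     # a valley that a greedy climber refuses to cross
    return x              # the global optimum (x = 20) lies beyond the valley


def neighbors(x: int) -> list[int]:
    return [n for n in (x - 1, x + 1) if LOW <= n <= HIGH]


def hill_climb(start: int = 0) -> int:
    """Objective-driven: move to the best neighbor while it is strictly better."""
    x = start
    while True:
        best = max(neighbors(x), key=fitness)
        if fitness(best) <= fitness(x):
            return x  # no neighbor improves the objective: stuck
        x = best


def novelty_walk(start: int = 0, steps: int = 40) -> int:
    """Ignore fitness while moving; always step to the least-visited neighbor.
    Returns the best position encountered along the way."""
    visits = {start: 1}
    x = best = start
    for _ in range(steps):
        x = min(neighbors(x), key=lambda n: visits.get(n, 0))
        visits[x] = visits.get(x, 0) + 1
        if fitness(x) > fitness(best):
            best = x
    return best


print(hill_climb(), novelty_walk())  # 5 20
```

Only the bookkeeping of the walker consults `fitness`; its moves never do, which is exactly why the valley does not stop it.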

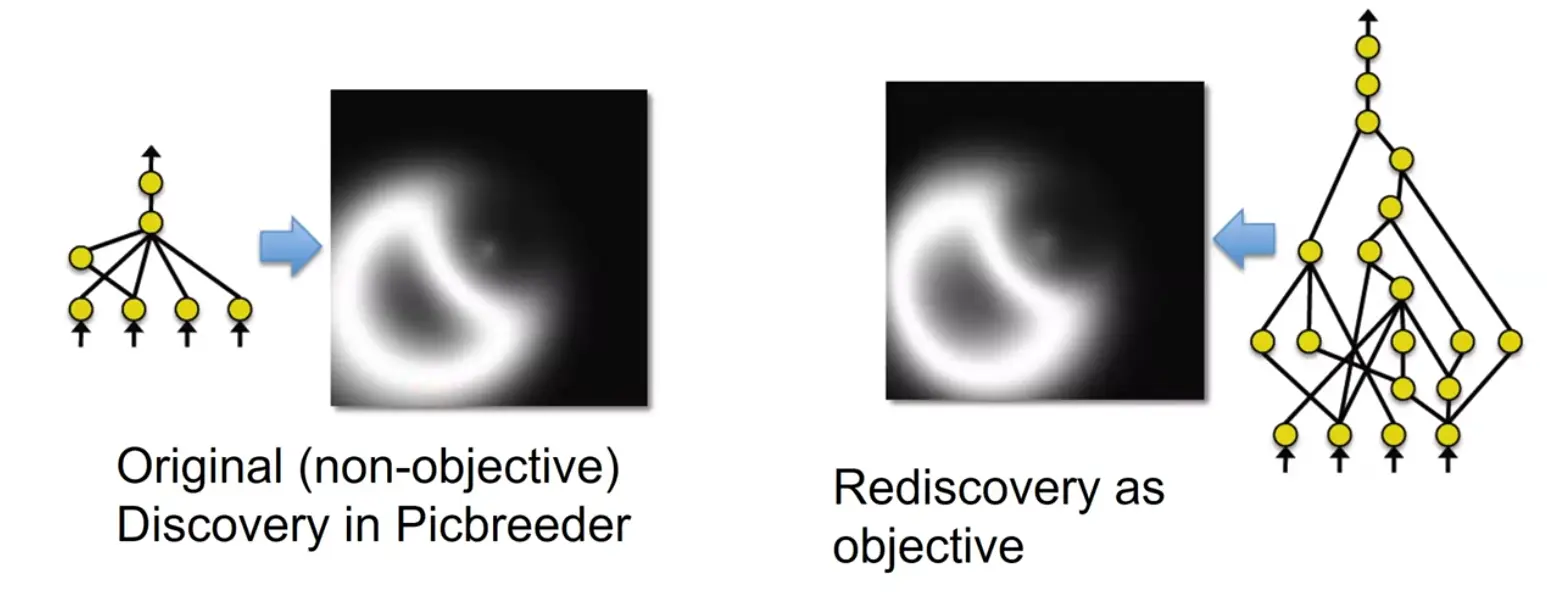

In Picbreeder, complex images cannot be re-evolved at all when fitness is defined as matching a specific target picture.

NEAT is good at discovering all kinds of interesting things, but it’s not good at finding any one specific thing! (Current benchmarks may themselves fall victim to deception.)

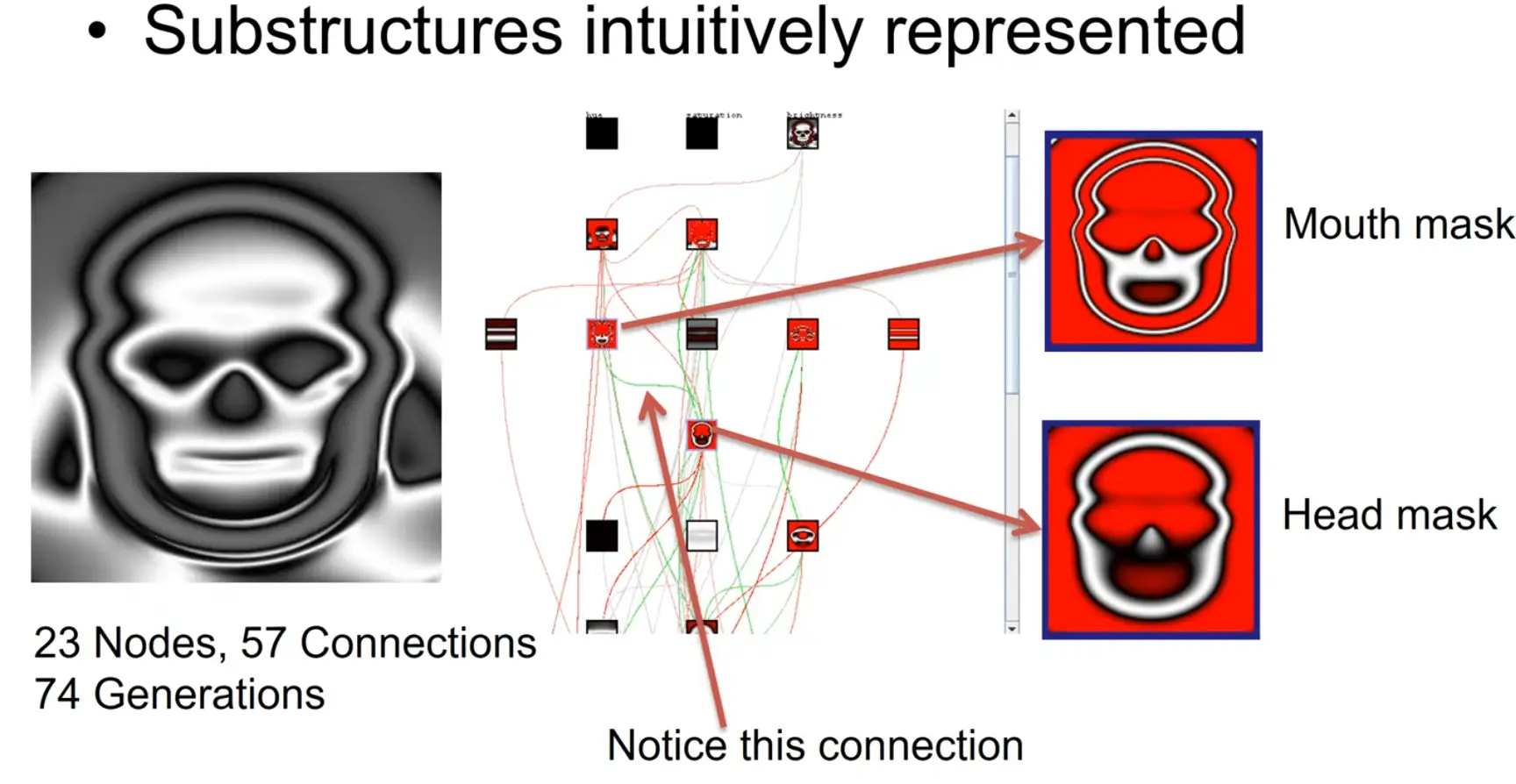

Open-ended discovery leads to a fundamentally different organization/representation: representations are more meaningful / composable / efficient / natural.

This explains DNA to some extent: perturbations are sensible / canalized.

Should representations look like DNA?

If you “think open-endedly” (not optimizing for one specific (end-)goal), do you obtain different representations from those of people who don’t? (Broader / more organized / easier creative thinking?)

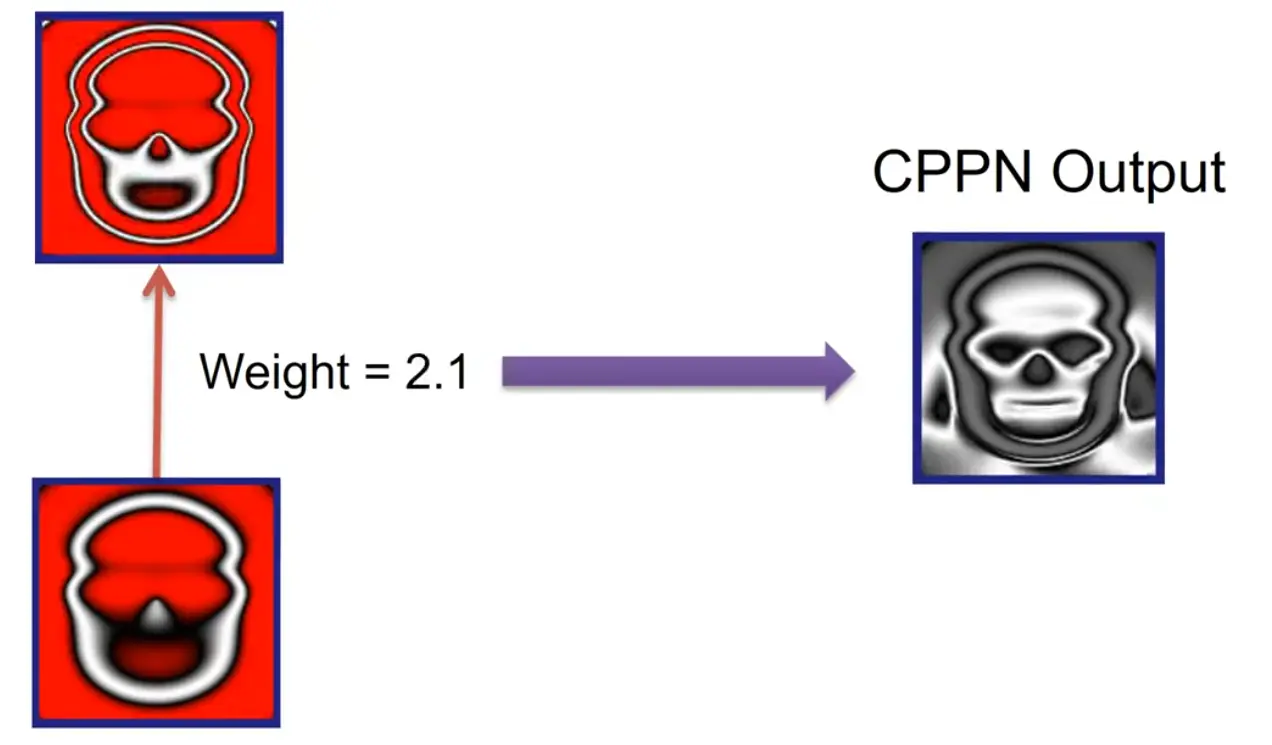

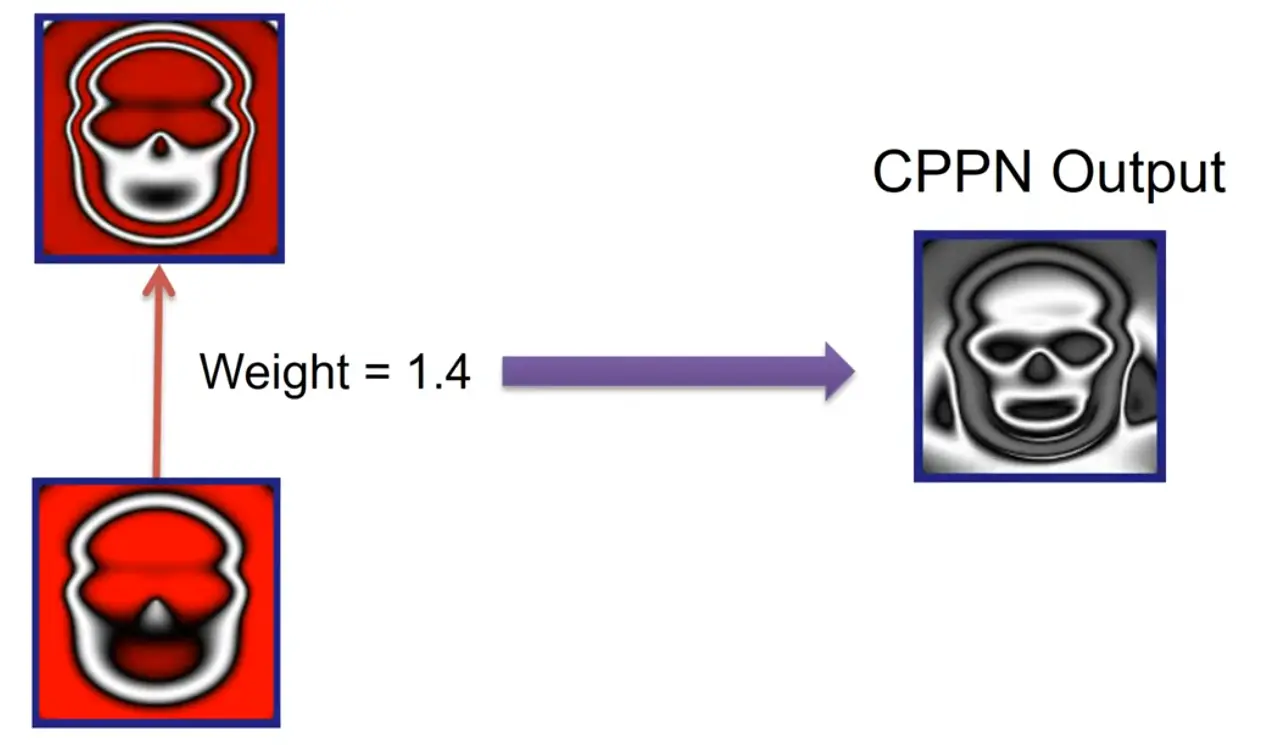

A single weight controls the mouth aperture (another example: a single weight controls the swing of an apple’s stem).

The environment is always a brick wall.

Evolution through Large Models

Might LLMs be the bridge to using reality itself as the environment?

Unboundedness of the universe

A place where anything can be expressed (universal computation) is a place where anything can happen

Computation is a metaphor for expression, but expression happens through a medium. In our universe the medium is physical reality.

Invention: When anything is possible, every end is a beginning.

Again, the easiest route here is code: inventing tools.

Current RL assumes immutable environments. Actions are attached, conditional things (Mario jumps), but invention changes the outer environment and leaves behind a detached artifact (Mario crafts a sword).

→ Endless possibility of detached conditional things (language is one of them).

The API of earth (particles in the ground) sucks.

→ Koding koding koding?

Many naive things become possible in/with CLIP’s or an LLM’s representation space, such as

- specifying goal states in natural language

- stopping simulation/training once change in latent space plateaus (magnitude of clip vector change)

- (heuristic metrics that fall short in other cases)
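The first two items can be sketched in a few lines, assuming some encoder (CLIP, for instance) has already mapped states and goal text into a shared embedding space. No real CLIP API is called here; the function names, `tol`, `patience` and the synthetic trajectory are all hypothetical stand-ins. `goal_reward` scores a state against a natural-language goal by cosine similarity, and `plateau_step` reports when the magnitude of change in the latent vector has stayed small long enough to stop.

```python
import numpy as np


def cosine_similarity(a, b):
    """Cosine similarity between two embedding vectors."""
    return float(np.dot(a, b) / (np.linalg.norm(a) * np.linalg.norm(b)))


def goal_reward(state_embedding, goal_embedding):
    # Goal stated in natural language: reward = how close the current state's
    # embedding is to the embedding of the goal text (both assumed to live in
    # the same joint space, e.g. from CLIP's image and text encoders).
    return cosine_similarity(state_embedding, goal_embedding)


def plateau_step(embeddings, tol=1e-2, patience=3):
    """Index of the first step at which the L2 change between consecutive
    embeddings has stayed below `tol` for `patience` consecutive steps,
    or None if the latent trajectory never settles."""
    quiet = 0
    for t in range(1, len(embeddings)):
        change = np.linalg.norm(embeddings[t] - embeddings[t - 1])
        quiet = quiet + 1 if change < tol else 0
        if quiet >= patience:
            return t
    return None


# Synthetic stand-ins for encoder outputs (hypothetical, not real CLIP vectors).
goal = np.array([1.0, 0.0])
offset = np.array([0.0, 1.0])
trajectory = [goal + 0.5**t * offset for t in range(20)]  # converges on the goal
print(plateau_step(trajectory))  # 9
```

The L2 norm of the step-to-step difference mirrors "magnitude of CLIP vector change"; for unit-normalized embeddings it is monotonically related to cosine distance, since ‖a − b‖² = 2 − 2·cos(a, b).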

Why Greatness Cannot Be Planned

Kenneth O. Stanley

UCL (DARK)