year: 2024/01

paper: https://francesco215.github.io/Language_CA/

website: (YouTube) Unlocking the Secrets of Self-Organization: From Ising Models to Transformers

code:

connections: self-organization, michael levin, Hopfield Network, transformer, The extended mind

They also made a formal paper out of it, with some additional graphics: Topological constraints on self-organisation in locally interacting systems

Maybe come back to this after reading Hopfield Networks is All You Need

Though I think I got 80% of the main points of the paper now, just not all the mathematical proofs and details.

The ability to self-organize into an ordered configuration depends only on the topology of the system, not on the interaction strength.

The Hamiltonian of the Hopfield Network is identical to that of the Ising model, except that the interaction strength is not constant. And the Hopfield Network is very similar to attention!

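For a Hopfield network:

$$H = -\frac{1}{2}\sum_{i,j} W_{ij}\, s_i s_j$$

where $s_i \in \{-1, +1\}$ are the neurons (spins) and $W_{ij}$ is the weight / interaction matrix, which encodes how patterns are stored, rather than being a single interaction constant $J$ as in the Ising model, $H = -J\sum_{\langle i,j\rangle} s_i s_j$.

In a simplified form, the update rule of the HFN is:

$$s_i \leftarrow \operatorname{sign}\Big(\sum_j W_{ij}\, s_j\Big)$$

And for attention:

$$x_i \leftarrow \sum_j \operatorname{softmax}_j\!\left(\frac{q_i \cdot k_j}{\sqrt{d_k}}\right) v_j$$

where $q_i$, $k_j$ and $v_j$ are linear projections (query, key, value) of the token vectors.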
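A minimal numpy sketch of the classical Hopfield network, assuming ±1 spins, Hebbian storage $W = \frac{1}{N}\sum_\mu \xi^\mu (\xi^\mu)^\top$ with zero diagonal, and asynchronous sign updates (the storage rule, sizes and function names are my illustrative choices, not from the paper). It shows that the update never increases the Ising-like energy $H = -\frac{1}{2}\sum_{ij} W_{ij} s_i s_j$, which is why a corrupted pattern relaxes back to a stored one.

```python
import numpy as np


def hebbian_weights(patterns: np.ndarray) -> np.ndarray:
    """Store +/-1 patterns (shape P x N) with the Hebbian rule W = (1/N) * sum_mu xi_mu xi_mu^T."""
    n = patterns.shape[1]
    w = patterns.T @ patterns / n
    np.fill_diagonal(w, 0.0)  # no self-interaction
    return w


def energy(w: np.ndarray, s: np.ndarray) -> float:
    """Ising-like Hamiltonian H = -1/2 * sum_ij W_ij s_i s_j."""
    return -0.5 * float(s @ w @ s)


def recall(w: np.ndarray, s: np.ndarray, sweeps: int, rng: np.random.Generator):
    """Asynchronous updates s_i <- sign(sum_j W_ij s_j), one spin at a time."""
    s = s.copy()
    energies = [energy(w, s)]
    for _ in range(sweeps):
        for i in rng.permutation(len(s)):
            s[i] = 1 if w[i] @ s >= 0 else -1
            energies.append(energy(w, s))
    return s, energies


rng = np.random.default_rng(0)
patterns = rng.choice([-1, 1], size=(3, 100))  # 3 random patterns of 100 spins
w = hebbian_weights(patterns)
noisy = patterns[0].copy()
noisy[rng.choice(100, size=15, replace=False)] *= -1  # corrupt 15 spins
recovered, energies = recall(w, noisy, sweeps=5, rng=rng)
print("overlap with stored pattern:", recovered @ patterns[0] / 100)
```

With only 3 patterns stored in 100 neurons the network is far below capacity, so the corrupted input should fall back into the stored pattern.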

Tokens in self-attention behave like spins in the Ising model, except that instead of binary spins they are vectors in a high-dimensional space, with all-to-all communication, self-organizing to compute the final representation.
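A minimal numpy sketch of single-head self-attention, written to make the spin analogy explicit: the attention matrix `a` ($A_{ij}$) plays the role of the Hopfield interaction matrix, except that it is recomputed from the current token vectors instead of being fixed. The projection matrices are random placeholders, an assumption for illustration only.

```python
import numpy as np


def softmax(x: np.ndarray, axis: int = -1) -> np.ndarray:
    x = x - x.max(axis=axis, keepdims=True)  # subtract the max for numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def self_attention(x, w_q, w_k, w_v):
    """One self-attention update over token vectors x (shape N x d).

    Returns the updated vectors and the N x N interaction matrix A."""
    q, k, v = x @ w_q, x @ w_k, x @ w_v
    a = softmax(q @ k.T / np.sqrt(k.shape[-1]), axis=-1)  # A_ij: how strongly token i listens to j
    return a @ v, a


rng = np.random.default_rng(0)
n_tokens, d = 6, 8
x = rng.normal(size=(n_tokens, d))
w_q, w_k, w_v = (rng.normal(size=(d, d)) / np.sqrt(d) for _ in range(3))
out, a = self_attention(x, w_q, w_k, w_v)
print(out.shape, a.shape)  # (6, 8) (6, 6): every token interacts with every other token
```

Every entry of `a` is strictly positive, i.e. the topology is fully connected; the $N \times N$ size of this matrix is where the $O(N^2)$ cost of full attention comes from.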

See also: associative memory.

We want self-organizing systems / topologies, where each element only interacts with a few other elements of the system.

The generality of self-attention is great; however, it comes at a cost of $O(N^2)$ in the number of tokens $N$, since every token interacts with every other token. In other self-organizing systems like cells, society, or the internet, each node only interacts with a tiny fraction of all the nodes.
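A quick count of interacting (query, key) pairs makes the scaling concrete. This is a sketch assuming a symmetric sliding window of half-width `w` as the sparse alternative; the window size 64 is an arbitrary choice.

```python
def full_attention_pairs(n: int) -> int:
    """Every token attends to every token (including itself)."""
    return n * n


def windowed_pairs(n: int, w: int) -> int:
    """Each token attends only to tokens at distance <= w (clipped at the sequence ends)."""
    return sum(min(n - 1, i + w) - max(0, i - w) + 1 for i in range(n))


for n in (1_000, 10_000, 100_000):
    print(n, full_attention_pairs(n), windowed_pairs(n, w=64))
```

Going from 10k to 100k tokens multiplies the full count by 100 but the windowed count by roughly 10. The catch is that the windowed topology is the one that loses the ordered phase.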

The topology of self-attention with a finite interaction (context) window is not capable of self-organizing into a coherent structure.

For a system to be able to self-organize, it needs to have the right topology, which we can test by taking an Ising model with the same topology and checking whether it has an ordered phase.

Examples

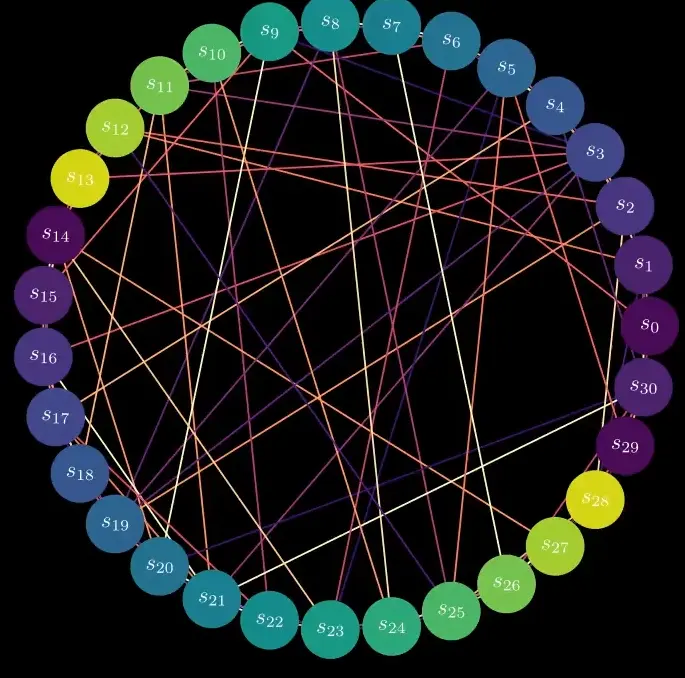

This model has no ordered phase → a sequence of tokens with this topology won't scale to an arbitrarily large size. This one has an ordered phase:

If every element of the sequence can only see a few of its neighbours, it is topologically equivalent to nearest-neighbour interactions on a ring, which, like the 1D Ising model, has no ordered phase at any nonzero temperature.
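For the nearest-neighbour ring this can be checked exactly. A sketch using the standard 2×2 transfer matrix of the 1D Ising model (with $\beta$ and $J$ folded into one number `beta_j`): correlations decay as $\langle s_0 s_r\rangle = \tanh(\beta J)^r$, so the correlation length $\xi = -1/\ln\tanh(\beta J)$ is finite for every finite coupling. A stronger interaction only makes $\xi$ grow (roughly like $e^{2\beta J}/2$); it never produces an ordered phase, consistent with the claim that topology, not interaction strength, is what matters. The code only covers the nearest-neighbour case; a wider but finite window is still one-dimensional and behaves the same way qualitatively.

```python
import numpy as np


def ring_correlation(beta_j: float, r: int) -> float:
    """<s_0 s_r> for an infinitely long nearest-neighbour Ising chain, via the transfer matrix."""
    t = np.array([[np.exp(beta_j), np.exp(-beta_j)],
                  [np.exp(-beta_j), np.exp(beta_j)]])
    lam, vecs = np.linalg.eigh(t)   # eigenvalues in ascending order
    v0 = vecs[:, -1]                # leading eigenvector (dominates in the infinite chain)
    sigma = np.diag([1.0, -1.0])    # spin value of each transfer-matrix state
    return float(v0 @ sigma @ np.linalg.matrix_power(t / lam[-1], r) @ sigma @ v0)


def correlation_length(beta_j: float) -> float:
    """xi = -1 / ln(tanh(beta J)): finite for any finite coupling, so no long-range order."""
    return -1.0 / np.log(np.tanh(beta_j))


for beta_j in (0.5, 1.0, 2.0, 4.0):
    print(beta_j, correlation_length(beta_j), ring_correlation(beta_j, 10))
```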

“The only way to confirm whether a topology is effective for your model is by training it and observing the outcomes.”

Small-world topologies, like the connections between neurons in the brain, have an ordered phase.

They are capable of self-organization without all-to-all interaction.
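A small Metropolis simulation sketches the ordered-phase test: build an Ising model with the same topology and see whether order survives. Assumptions: $J = 1$, $\beta = 0.6$, a few hundred spins, and a Newman-Watts-style small world (a ring plus random long-range shortcuts), which is one common small-world model and not necessarily the one used in the paper. Each run starts fully ordered; a topology with an ordered phase keeps a large $|m|$, one without it melts. Finite systems can look ordered whenever the correlation length exceeds the system size, so the parameters keep the ring's correlation length (about 1.6 sites at $\beta = 0.6$) far below its length.

```python
import math
import random


def ring(n):
    """1D ring: each site interacts only with its two nearest neighbours."""
    return [[(i - 1) % n, (i + 1) % n] for i in range(n)]


def square_lattice(side):
    """2D periodic lattice: four neighbours per site, site index = x * side + y."""
    return [[((x + 1) % side) * side + y, ((x - 1) % side) * side + y,
             x * side + (y + 1) % side, x * side + (y - 1) % side]
            for x in range(side) for y in range(side)]


def small_world(n, shortcuts, rng):
    """Ring plus `shortcuts` random long-range links (Newman-Watts-style small world)."""
    nbrs = [set(v) for v in ring(n)]
    added = 0
    while added < shortcuts:
        a, b = rng.randrange(n), rng.randrange(n)
        if a != b and b not in nbrs[a]:
            nbrs[a].add(b)
            nbrs[b].add(a)
            added += 1
    return [sorted(v) for v in nbrs]


def mean_abs_magnetization(nbrs, beta, sweeps, rng):
    """Metropolis dynamics for H = -sum_<ij> s_i s_j (J = 1), starting fully ordered.

    Returns |m| averaged over the second half of the run."""
    n = len(nbrs)
    s = [1] * n
    total, count = 0.0, 0
    for sweep in range(sweeps):
        for _ in range(n):
            i = rng.randrange(n)
            d_e = 2 * s[i] * sum(s[j] for j in nbrs[i])  # energy change if s_i flips
            if d_e <= 0 or rng.random() < math.exp(-beta * d_e):
                s[i] = -s[i]
        if sweep >= sweeps // 2:  # first half is burn-in
            total += abs(sum(s)) / n
            count += 1
    return total / count


rng = random.Random(0)
beta = 0.6
m_ring = mean_abs_magnetization(ring(400), beta, 300, rng)
m_square = mean_abs_magnetization(square_lattice(20), beta, 300, rng)
m_small = mean_abs_magnetization(small_world(400, 400, rng), beta, 300, rng)
print(f"ring: {m_ring:.2f}  square lattice: {m_square:.2f}  small world: {m_small:.2f}")
```

One should expect the ring to lose almost all of its initial order while the square lattice and the small world stay strongly magnetized, even though the small world has only a handful of links per site.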

Brain + Environment

Let’s start with a system inspired by how the brain is thought to work.

One can naively say that the state of the brain $b_t$ at time $t$ depends only on the state $b_{t-1}$ at the previous time step:

$$b_t = f(b_{t-1})$$

We are assuming time to be discretized, but this can easily be generalized. This effectively makes the brain an autoregressive model, so it is incapable of retaining information for an indefinite amount of time: any noise in $f$ compounds at every step. On one hand, we human beings do forget lots of things, but on the other hand we also seem to have long-term memory and the ability to stick to a plan for decades.

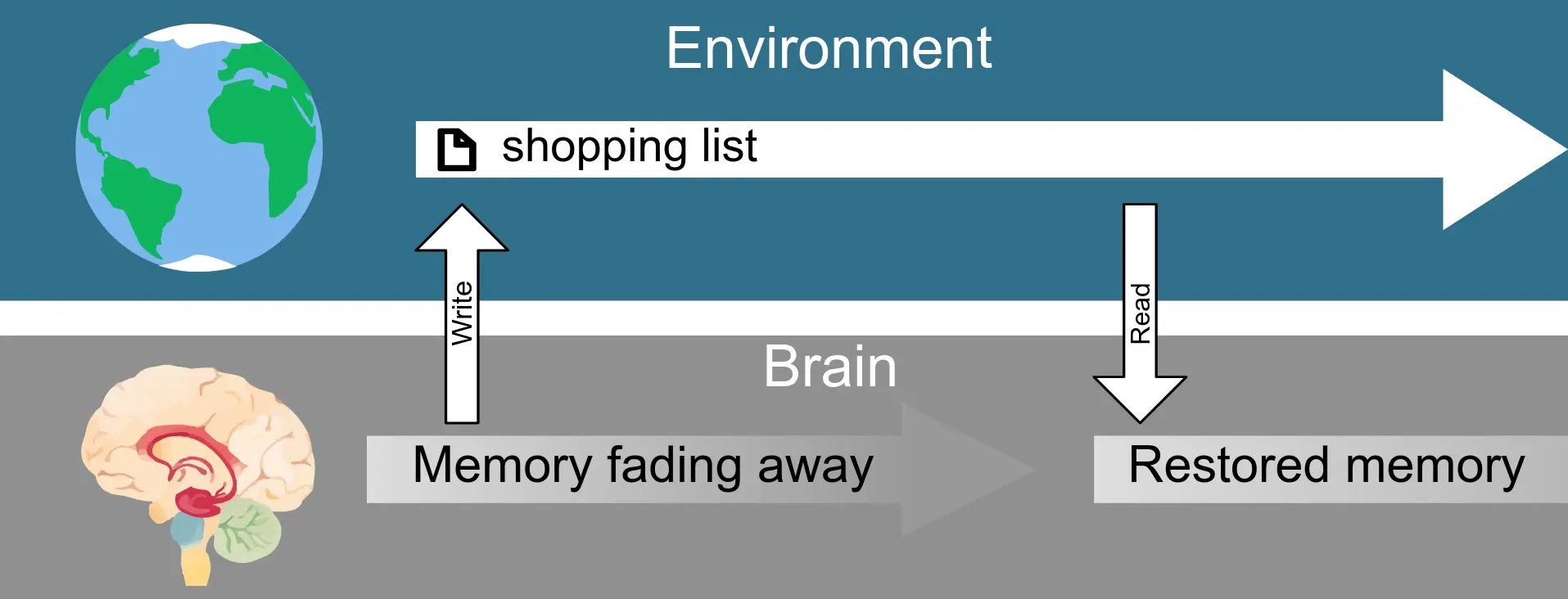
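A toy version of $b_t = f(b_{t-1})$, assuming (hypothetically) that the brain state is a single bit and that $f$ copies it but flips it with probability `p_flip` at each step. The probability of still remembering the initial bit is $\frac{1}{2}\left(1 + (1-2p)^t\right)$, which decays to chance with time constant $-1/\ln(1-2p)$. This is the same math as the 1D Ising chain with $1-2p$ in the role of $\tanh(\beta J)$, so the time axis of an autoregressive model behaves like a ring without an ordered phase.

```python
import random


def retention_exact(p_flip: float, t: int) -> float:
    """P(b_t == b_0) when every step copies the bit but flips it with probability p_flip."""
    return 0.5 * (1.0 + (1.0 - 2.0 * p_flip) ** t)


def retention_simulated(p_flip: float, t: int, trials: int, rng: random.Random) -> float:
    """Monte Carlo estimate of the same quantity."""
    hits = 0
    for _ in range(trials):
        b0 = b = rng.randrange(2)
        for _ in range(t):
            if rng.random() < p_flip:
                b ^= 1
        hits += b == b0
    return hits / trials


rng = random.Random(0)
for t in (1, 10, 50, 200):
    print(t, retention_exact(0.05, t), retention_simulated(0.05, t, 2000, rng))
```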

However, we (and some other organisms) also augment our finite memory by deliberately interacting with the environment; this way we are able to remember things for much longer.

For example, when you go shopping, you write down what you have to buy on a piece of paper, because information stays there longer and more reliably than if you try to remember it.

This example also raises an interesting observation: when you read your shopping list, you effectively “interact” with your past self through a piece of paper. Memories, whether internal or on paper, are messages or prompts from your past self. In some sense we can say that effectively $b_t = f(b_{t-1}, b_{t'})$, where $t$ is when you are at the supermarket and $t' < t$ is when you were at home writing the shopping list.

This sort of thing happens all the time: our brain constantly interacts with the surrounding environment (and reconstructs its state from the engrams available to it), so much so that it is impossible to study the brain independently of its environment, because an isolated brain doesn't exist.

This concept echoes The extended mind thesis, which suggests that our cognitive processes are intricately linked with our environment. According to this view, the study of the brain cannot be divorced from its ongoing interaction with the surrounding world; the thesis challenges the idea of a self-contained, isolated brain and highlights the significant influence of external factors and tools on human cognition.

Modeling of Brain-Environment Coupling Using Spin Chains

Let $b_t$ be the state of the brain at time $t$ and $e_t$ the state of the environment. We can say that their evolution is governed by a set of equations like this:

$$\begin{aligned} b_{t+1} &= f(b_t, e_t) \\ e_{t+1} &= g(b_t, e_t) \end{aligned}$$

Note that this is simply one autoregressive model made of two coupled autoregressive models; however, we can assume that the environment is capable of holding on to information for longer.

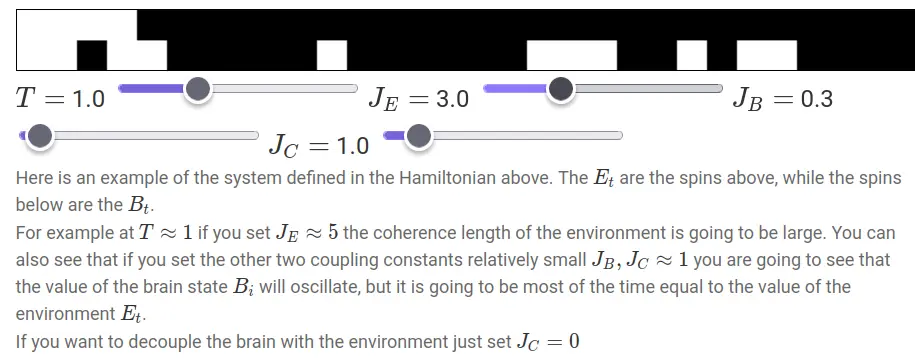
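A toy instance of the coupled update $b_{t+1} = f(b_t, e_t)$, $e_{t+1} = g(b_t, e_t)$, with hypothetical choices of $f$ and $g$: both states are single bits, the environment bit is copied with a small flip probability `p_e`, and at each step the brain either re-reads the environment (probability `c`) or keeps its own bit, then flips with probability `p_b`. The brain starts with a bit $x$ that it has also written into the environment (the shopping list). The exact 4-state Markov chain lets us compare retention with and without the coupling.

```python
import itertools

import numpy as np

STATES = list(itertools.product((0, 1), repeat=2))  # (brain bit b, environment bit e)


def step_matrix(p_b: float, p_e: float, c: float) -> np.ndarray:
    """Transition matrix M[s, s'] for one step of the hypothetical coupled update."""
    index = {s: i for i, s in enumerate(STATES)}
    m = np.zeros((4, 4))
    for b, e in STATES:
        for reads_env, p_read in ((True, c), (False, 1.0 - c)):
            b_mid = e if reads_env else b  # b_{t+1} depends on (b_t, e_t)
            for flip_b, p_fb in ((0, 1.0 - p_b), (1, p_b)):
                for flip_e, p_fe in ((0, 1.0 - p_e), (1, p_e)):
                    m[index[(b, e)], index[(b_mid ^ flip_b, e ^ flip_e)]] += p_read * p_fb * p_fe
    return m


def brain_retention(p_b: float, p_e: float, c: float, t: int) -> float:
    """P(b_t == x), starting from b_0 = e_0 = x (x = 1; x = 0 is symmetric)."""
    start = np.zeros(4)
    start[STATES.index((1, 1))] = 1.0
    dist = start @ np.linalg.matrix_power(step_matrix(p_b, p_e, c), t)
    return float(sum(p for p, (b, _) in zip(dist, STATES) if b == 1))


for t in (10, 100, 1000):
    isolated = brain_retention(p_b=0.1, p_e=0.001, c=0.0, t=t)
    coupled = brain_retention(p_b=0.1, p_e=0.001, c=0.5, t=t)
    print(t, round(isolated, 3), round(coupled, 3))
```

With `c = 0` the brain forgets $x$ within a few dozen steps, while with `c = 0.5` it keeps recovering $x$ from the environment and forgets it on the environment's much slower time scale.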

We can simplify a system like this by assuming that both the brain and the environment have only two possible states, 0 or 1 (equivalently, spins $-1$ and $+1$). [lmao]

We can think of it as two spin chains with different coupling constants $J_b$ (brain) and $J_e$ (environment). Since the environment has a much bigger coherence length, we can assume that $J_e \gg J_b$. We also assume that there is a coupling constant $K$ that couples the neighbouring spins of the two chains (the brain and environment spins at the same time step).

The Hamiltonian becomes:

$$H = -J_b \sum_i b_i b_{i+1} - J_e \sum_i e_i e_{i+1} - K \sum_i b_i e_i$$

What effectively happens, when $K$ is not too small, is that the coherence length of the brain chain becomes equal to that of the environment.
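The ladder $H = -J_b\sum_i b_i b_{i+1} - J_e\sum_i e_i e_{i+1} - K\sum_i b_i e_i$ can be solved exactly with a 4×4 transfer matrix, one state per rung $(b_i, e_i)$. A sketch, assuming ±1 spins and illustrative values $\beta = 1$, $J_b = 0.2$, $J_e = 2$, $K = 1$ (not taken from the paper). It computes the brain-brain correlation $\langle b_0 b_r\rangle$ on an infinitely long ladder: with $K = 0$ it reduces to the isolated-chain result $\tanh(\beta J_b)^r$, while with $K > 0$ the brain inherits the slow decay of the environment chain.

```python
import itertools

import numpy as np

STATES = list(itertools.product((1, -1), repeat=2))  # (brain spin b_i, environment spin e_i)


def transfer_matrix(beta: float, j_b: float, j_e: float, k: float) -> np.ndarray:
    """Symmetric transfer matrix between neighbouring rungs of the ladder.

    The rung term K * b_i * e_i is split half-and-half between the two rungs so T stays symmetric."""
    t = np.empty((4, 4))
    for row, (b, e) in enumerate(STATES):
        for col, (b2, e2) in enumerate(STATES):
            t[row, col] = np.exp(beta * (j_b * b * b2 + j_e * e * e2 + 0.5 * k * (b * e + b2 * e2)))
    return t


def brain_correlation(beta, j_b, j_e, k, r):
    """<b_0 b_r> on an infinitely long ladder: <v0| B (T / lambda_0)^r B |v0>."""
    t = transfer_matrix(beta, j_b, j_e, k)
    lam, vecs = np.linalg.eigh(t)
    v0 = vecs[:, -1]  # leading (Perron) eigenvector
    b_op = np.diag([float(b) for b, _ in STATES])  # brain spin of each rung state
    return float(v0 @ b_op @ np.linalg.matrix_power(t / lam[-1], r) @ b_op @ v0)


for r in (1, 5, 20, 50):
    uncoupled = brain_correlation(1.0, 0.2, 2.0, 0.0, r)
    coupled = brain_correlation(1.0, 0.2, 2.0, 1.0, r)
    print(r, f"{uncoupled:.2e}", f"{coupled:.3f}")
```

Setting $J_b = 0$ shows the mechanism cleanly: each brain spin then just follows its environment spin with strength $\tanh(\beta K)$, giving $\langle b_0 b_r\rangle = \tanh(\beta K)^2 \tanh(\beta J_e)^r$, so the brain's correlations decay on the environment's length scale.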

What does it mean for the brain and the environment to be coupled?

Increased Coherence: The brain's coherence length (the range over which its states are correlated) increases (e.g. in the picture above the brain chain's coherence is high: it has a long unbroken run of identical states).

Memory Extension: The brain can retain information over longer periods due to the environment’s influence.

Unified System: The brain and environment function as a single, integrated system rather than two separate entities.

Systems like this, made from a model with limited context length plus an external environment, can be found in the language modeling + reinforcement learning literature … for example Voyager … the agent is capable of using skills and information that were written in the distant past. This effectively increases the context length of the language model.
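A toy sketch of this pattern: an agent whose context is a fixed-size window, plus a persistent notes store standing in for the environment. The class and method names are hypothetical, and Voyager's actual skill library stores executable code and retrieves it by embedding similarity, which is simplified here to a key lookup. Information that has scrolled out of the window is still recoverable if it was written down, like the shopping list.

```python
from collections import deque
from typing import Dict, Optional


class WindowedAgent:
    """Hypothetical agent with a finite context window and an external, persistent note store."""

    def __init__(self, window: int) -> None:
        self.context: deque = deque(maxlen=window)  # old observations fall off the end
        self.notes: Dict[str, str] = {}             # the "environment": does not decay

    def observe(self, key: str, value: str) -> None:
        self.context.append((key, value))

    def write_note(self, key: str, value: str) -> None:
        self.notes[key] = value

    def recall(self, key: str) -> Optional[str]:
        # Look in the context window first (most recent first), then in the external notes.
        for k, v in reversed(self.context):
            if k == key:
                return v
        return self.notes.get(key)


forgetful = WindowedAgent(window=8)
with_notes = WindowedAgent(window=8)
for agent in (forgetful, with_notes):
    agent.observe("shopping", "milk, eggs")
with_notes.write_note("shopping", "milk, eggs")  # write the shopping list down
for t in range(100):  # 100 unrelated steps push the list out of the window
    for agent in (forgetful, with_notes):
        agent.observe("step", str(t))
print(forgetful.recall("shopping"), with_notes.recall("shopping"))  # None milk, eggs
```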