This note is a reference for different kinds of attention.

Attention? Attention! (Blogpost) [Deep intro to attention]

See also:

self-attention (attention mostly refers to this nowadays)

scaled dot product attention

cross-attention

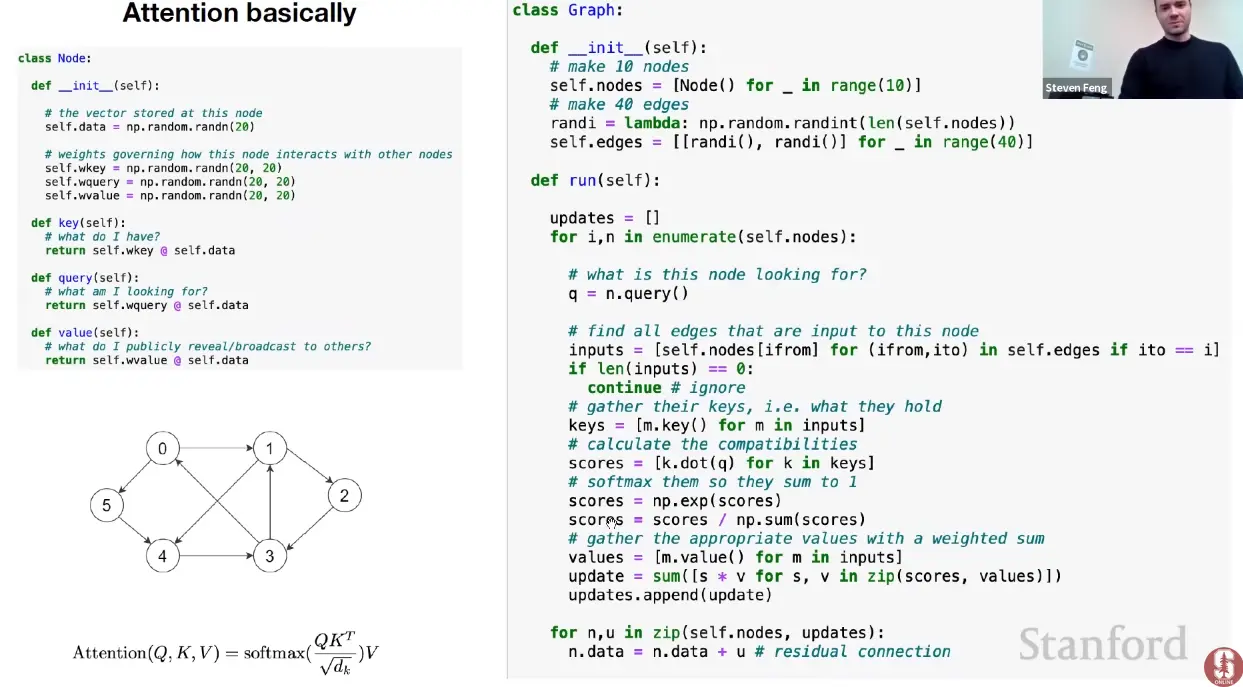

Attention is a communication mechanism / message passing scheme between nodes.

Attention can in principle be applied to any arbitrary directed graph.

Attention can be thought of as a message passing scheme.

Every node (token) holds a data-dependent vector of information at each point in time, and it gets to aggregate information via a weighted sum from all the nodes that point to it.

Imagine looping through each node:

- The query - representing what this node is looking for - is compared against the keys of all nodes that have an edge into this node. The dot product between the query and each key gives the unnormalized “interestingness” of that node's information, i.e. its compatibility with the query.

- We then normalize these scores (softmax) and use them to weight the values of the input nodes, which are summed to form the node's output.

- This happens in every head in parallel, and every layer in sequence, with different weights (in both cases).
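The loop described above can be sketched for a single head in NumPy. This is a minimal illustration, not a reference implementation: the function name `graph_attention`, the `in_edges` dictionary, and the random weights are all assumptions made for the example, and the scores are scaled by the square root of the key dimension as in scaled dot product attention.

```python
import numpy as np

def softmax(x):
    e = np.exp(x - np.max(x))  # subtract the max for numerical stability
    return e / e.sum()

def graph_attention(x, in_edges, w_q, w_k, w_v):
    """One attention head as message passing on a directed graph.

    x:        (n_nodes, d_model) node vectors
    in_edges: dict mapping a node index to the list of nodes pointing to it
    """
    q, k, v = x @ w_q, x @ w_k, x @ w_v
    out = np.zeros_like(v)
    for node, sources in in_edges.items():
        if not sources:
            continue  # no incoming edges, so no messages to aggregate
        # Unnormalized compatibility between this node's query and each source key
        # (the sqrt scaling is the "scaled dot product" variant).
        scores = k[sources] @ q[node] / np.sqrt(q.shape[-1])
        weights = softmax(scores)          # normalize the scores
        out[node] = weights @ v[sources]   # weighted sum of the source values
    return out

rng = np.random.default_rng(0)
n, d = 4, 8
x = rng.normal(size=(n, d))
w_q, w_k, w_v = (rng.normal(size=(d, d)) for _ in range(3))

# Hypothetical graph: each node receives from itself and all earlier nodes.
in_edges = {i: list(range(i + 1)) for i in range(n)}
out = graph_attention(x, in_edges, w_q, w_k, w_v)
```

Node 0 only has a self-loop here, so its output is simply its own value vector; any other directed graph can be plugged in by changing `in_edges`.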

The attention graph of a transformer encoder is fully connected.

The attention graph of the decoder is fully connected to the encoder values, and tokens are fully connected to every token that came before them (triangular attention matrix structure).
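The triangular structure of decoder self-attention can be illustrated with a mask over the score matrix. This is a sketch under the assumption that row `i` holds the scores of token `i` against all tokens; the helper name `causal_attention_weights` is made up for the example.

```python
import numpy as np

def causal_attention_weights(scores):
    """Row-wise softmax where token i may only attend to tokens 0..i."""
    n = scores.shape[0]
    mask = np.tril(np.ones((n, n), dtype=bool))   # lower triangle incl. diagonal
    masked = np.where(mask, scores, -np.inf)      # block edges from future tokens
    masked = masked - masked.max(axis=-1, keepdims=True)
    w = np.exp(masked)                            # exp(-inf) = 0 for blocked edges
    return w / w.sum(axis=-1, keepdims=True)

scores = np.random.default_rng(0).normal(size=(5, 5))
weights = causal_attention_weights(scores)
```

Every row still sums to one, but all entries above the diagonal are zero, so the first token can only attend to itself.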

Graph of autoregressive attention (self-loops are missing from the illustration):

QKV?

We project the input nodes to Q, K, and V in order to give the scoring mechanism more expressivity: what you pay attention to and what you extract from it can be different.

In principle we could do without them, see message passing.

Q: Here is what I’m interested in (like text in a search bar).

K: Here is what I have (like titles, description, etc.).

V: Here is what I will communicate to you (actual content, filtered / refined by value head).

For every query, all values are added in a weighted sum, weighted by similarity between that query and the keys.
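In matrix form, the weighted sum over values can be sketched as follows. The shapes and random projection matrices are assumptions chosen for illustration; in particular the query/key dimension (2) and the value dimension (5) are deliberately different to show that scoring and content extraction are decoupled.

```python
import numpy as np

def attention(x, w_q, w_k, w_v):
    """Single-head attention: returns the outputs and the attention weights."""
    q, k, v = x @ w_q, x @ w_k, x @ w_v
    scores = q @ k.T / np.sqrt(k.shape[-1])            # query-key similarity
    scores = scores - scores.max(axis=-1, keepdims=True)
    weights = np.exp(scores)
    weights = weights / weights.sum(axis=-1, keepdims=True)  # softmax per query
    return weights @ v, weights                        # weighted sum of values

rng = np.random.default_rng(0)
x = rng.normal(size=(3, 4))        # 3 tokens, model dimension 4
w_q = rng.normal(size=(4, 2))      # "what I am looking for"
w_k = rng.normal(size=(4, 2))      # "what I have"
w_v = rng.normal(size=(4, 5))      # "what I will communicate"
out, weights = attention(x, w_q, w_k, w_v)
```

Each row of `weights` belongs to one query and sums to one; `out` has one row per query in the value dimension.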

The Hamiltonian of the Hopfield network is identical to that of the Ising model, except that the interaction strength is not constant, and it is very similar to attention!

For a Hopfield network:

Here the weight / interaction matrix encodes how patterns are stored, rather than being a simple interaction constant.

In a simplified form, the update rule of the HFN is:

And for attention:
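For reference, the standard textbook forms of the expressions discussed here are given below. The notation ($J$, $W_{ij}$, $s_i$, $d_k$) is an assumption and may differ from the notation this note originally used.

$$
H_{\text{Ising}} = -J \sum_{\langle i,j \rangle} s_i s_j
\qquad
E_{\text{Hopfield}} = -\frac{1}{2} \sum_{i \neq j} W_{ij}\, s_i s_j
$$

$$
s_i \leftarrow \operatorname{sign}\Big(\sum_{j} W_{ij}\, s_j\Big)
\qquad
\operatorname{Attention}(Q, K, V) = \operatorname{softmax}\!\Big(\frac{Q K^{\top}}{\sqrt{d_k}}\Big) V
$$

In both update rules a node's new state is a function of a weighted sum over the states of the other nodes; in attention the weights are computed from the data rather than stored in a fixed matrix.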

Tokens in self-attention are just like spins in the Ising model, but instead of scalar spins they are vectors in a higher-dimensional space, with all-to-all communication, self-organizing to compute the final representation.

See also: associative memory.
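The Hopfield side of the analogy can be made concrete with a tiny classical network that stores one pattern and retrieves it from a corrupted copy. This is a sketch under stated assumptions: a single stored pattern, Hebbian outer-product weights, and a synchronous sign update; the names `pattern`, `energy`, and `update` are illustrative.

```python
import numpy as np

# One stored pattern of +/-1 "spins".
pattern = np.array([1, -1, 1, 1, -1, -1, 1, -1])

# Hebbian weights: the interaction matrix encodes the stored pattern.
W = np.outer(pattern, pattern).astype(float)
np.fill_diagonal(W, 0.0)  # no self-interaction

def energy(s):
    """Hopfield energy E = -1/2 * s^T W s."""
    return -0.5 * s @ W @ s

def update(s):
    """Synchronous update: each spin aligns with its weighted input."""
    return np.where(W @ s >= 0, 1, -1)

noisy = pattern.copy()
noisy[0] *= -1            # corrupt one spin
recovered = update(noisy)
```

One update step restores the stored pattern and lowers the energy, which is the associative-memory behaviour the comparison with attention relies on.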

Attention acts over a set of vectors in a graph: There is no notion of space.

Nodes have no notion of space, e.g. where they are relative to one another.

This is why we need to encode them positionally.
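The lack of spatial information can be checked numerically: without positional encodings, shuffling the input tokens just shuffles the outputs in the same way. The sketch below uses identity projections (Q = K = V = x) purely to keep the example short; that simplification is an assumption, not how a trained layer looks.

```python
import numpy as np

def self_attention(x):
    """Self-attention with identity projections and no positional encoding."""
    scores = x @ x.T / np.sqrt(x.shape[-1])
    scores = scores - scores.max(axis=-1, keepdims=True)
    w = np.exp(scores)
    w = w / w.sum(axis=-1, keepdims=True)
    return w @ x

rng = np.random.default_rng(0)
x = rng.normal(size=(5, 4))      # 5 tokens, 4 features
perm = rng.permutation(5)        # an arbitrary reordering of the tokens

out = self_attention(x)
out_perm = self_attention(x[perm])

# Permuting the inputs permutes the outputs identically (permutation equivariance).
equivariant = np.allclose(out_perm, out[perm])
```

Since each token's output does not depend on where it sits in the sequence, order information has to be injected explicitly.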

The transformer has a very minimal inductive bias.

In the core transformer, inductive biases are mostly factored out. Self-attention is a very minimal (and very useful) inductive bias, with the most general connectivity, where everything attends to everything.

Without positional encodings, there is no notion of space.

If you want to have a notion of space, or other constraints, you need to specifically add them.

Positional encodings, for example, are a type of inductive bias. Another example is the Swin Transformer, which limits the attention node-connectivity to local windows, somewhat like the biologically inspired inductive bias of CNNs.
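Restricting connectivity to local windows amounts to a block-diagonal attention mask. The sketch below is a simplified 1D illustration with non-overlapping windows; it is not the Swin Transformer's actual implementation (which works on 2D patches and shifts the windows between layers), and `local_window_mask` is a hypothetical helper name.

```python
import numpy as np

def local_window_mask(n_tokens, window):
    """True where token i may attend to token j (same non-overlapping window)."""
    idx = np.arange(n_tokens)
    return (idx[:, None] // window) == (idx[None, :] // window)

mask = local_window_mask(6, 3)   # two windows: tokens 0-2 and tokens 3-5
```

Here tokens 0 to 2 form one fully connected group and tokens 3 to 5 another, with no edges between the two groups.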

Causal attention is another example of an inductive bias, where tokens can only attend to previous tokens in the sequence.

Causal, non-causal attention and cache: See causal attention.

Soft vs Hard Attention

Soft Attention:

- The alignment weights are learned and placed “softly” over all patches in the source image; essentially the same type of attention as in Bahdanau et al., 2015.

- Pro: the model is smooth and differentiable.

- Con: expensive when the source input is large.

Hard Attention (not actually used):

- Only selects one patch of the image to attend to at a time.

- Pro: less computation at inference time.

- Con: the model is non-differentiable and requires more complicated techniques such as variance reduction or reinforcement learning to train. (Luong, et al., 2015)
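The difference between the two variants can be sketched in a few lines. The patch features and scores below are random placeholders, and hard attention is modelled here as sampling a single patch from the attention distribution, which is one common formulation and an assumption of this example.

```python
import numpy as np

rng = np.random.default_rng(0)
values = rng.normal(size=(4, 3))   # 4 image patches, 3 features each
scores = rng.normal(size=4)        # alignment scores for one query

# Shared step: turn scores into a probability distribution over patches.
weights = np.exp(scores - scores.max())
weights = weights / weights.sum()

# Soft attention: smooth, differentiable weighted average over all patches.
soft = weights @ values

# Hard attention: pick exactly one patch; the discrete choice blocks gradients.
hard_idx = rng.choice(len(values), p=weights)
hard = values[hard_idx]
```

The soft output blends all patches, while the hard output is exactly one row of `values`, which is why training it needs sampling-based gradient estimators.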