Goldmine of papers: https://arxiv.org/search/cs?searchtype=author&query=Sutton,+R+S

talks & interviews

Edan Meyer Interview

- We can’t continue train our nets because the wrong parts change - the step size is the same for all neurons with sgd or even adam (which at least changes step size throughout). Keyword: Catastrophic forgetting.

- IDBID (meta learning) & continual backprop pay close attention to which parts should be stable or change a lot

- instead of figuring out how to overcome this problem, we avoid it by saving the data, training with batches, by using replay buffers, … , washing it out.

- And for online learning, we just say it doesn’t work… learning one by one as the data comes… like irl.

- “It’s harder to do, so we’re not gonna do it” - … because there is no immediate business value in doing fundamental research that would lead to actual breakthroughs in efficiency, effectiveness and areas of application. Stuff that would actually lead to AGI.

Something that is obvious to you but not to others will be the greatest contribution to the field.

What did he say about control theory again at the end?

Upper Bound 2023: Insights Into Intelligence, Keynote by Richard S. Sutton

- Moore’s law is underlying all our advances

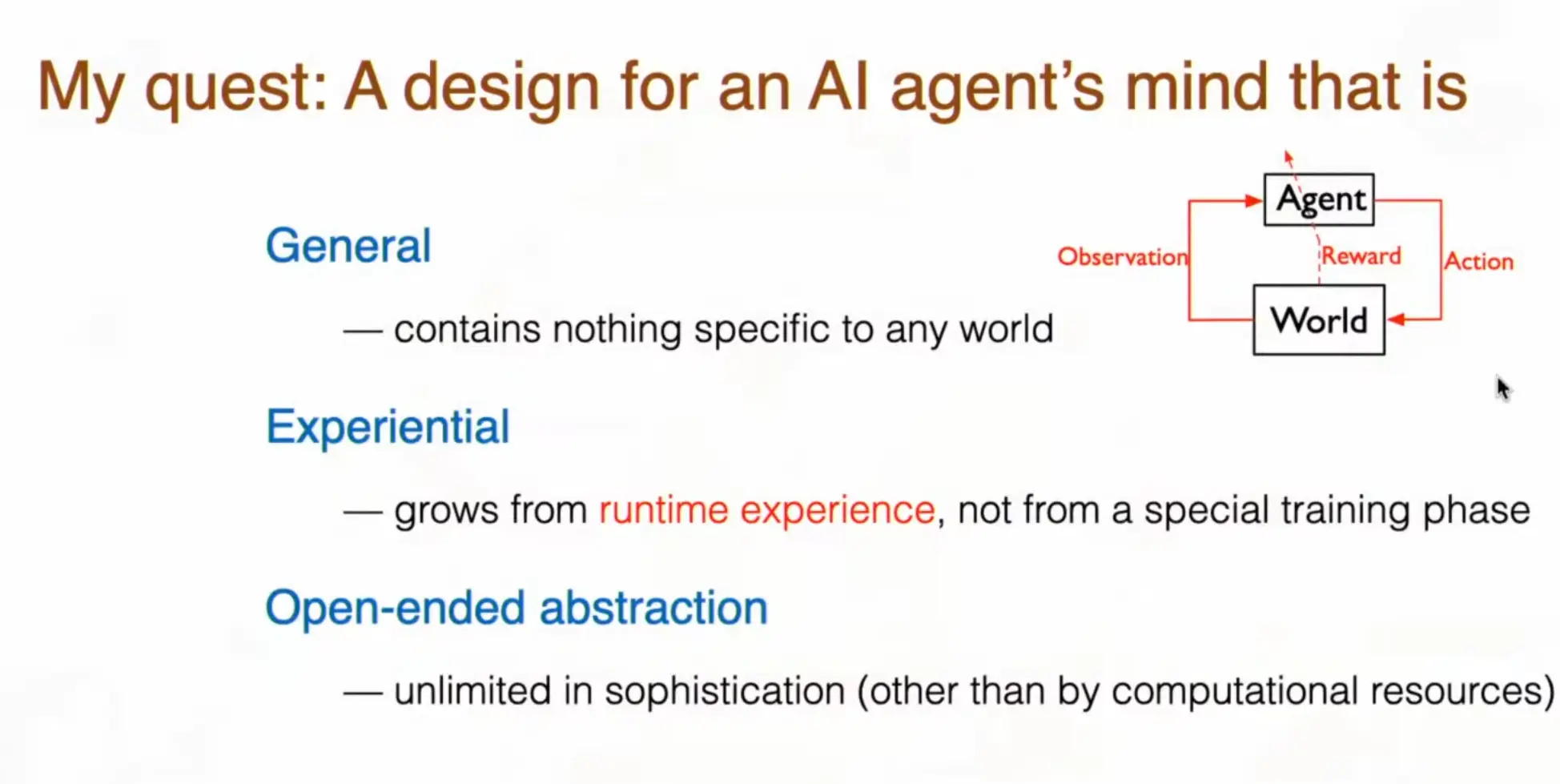

- Tool AI vs Agentic AI

- With complicated things, people tend to grab onto one thing that works and say that’s the only important thing:

- “Gradient Descent is all you need” (dismissing ideas from RL and Control)

- “Learning by prediction is all you need” / “All cognition is just prediction”

- “Scaling is all you need” (AI hype bubble: OpenAI & Co, magic, …)

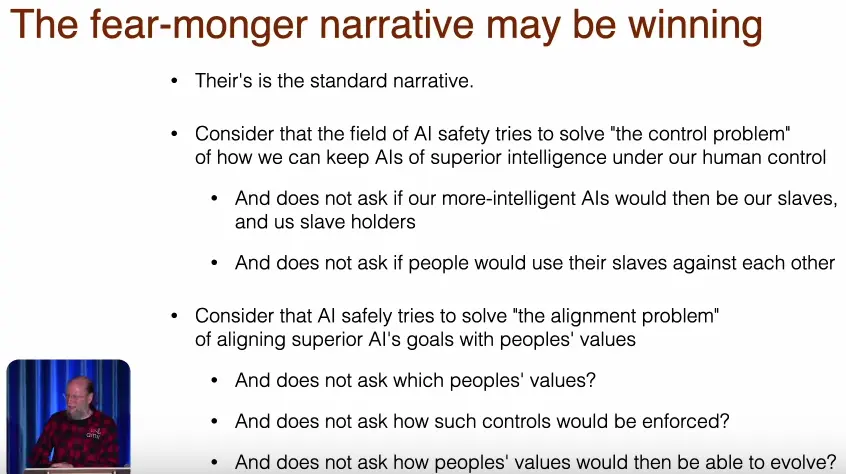

These are all issues that AI alignment, the AI doomers, do not address. But these are the crucial ones. Because the things in the bullets here are what capitalism cannot solve.

The Future of Artificial Intelligence Belongs to Search and Learning

The argument that once we reach AI as smart as humans, then it could just recursively self-improve itself over night and fooom off is completely unprincipled, since this recursive self-improvement is already priced into moore’s law (tools get smarter → smarter tools)

→ it always feels slow, since you have 18-24 months (→~10x in 5 years) for the doubling to occur by Moore’s law.

~1 order of magnitude increase in computation every 5 years.

if you can build an intelligent agent in 2030 for 1k .

Human / domain knowledge in whatever issue you are trying to solve may give the algorithm a temporary advantage, but ultimately, it will fail if it can’t scale

Using search for finding architectures within certain paradigms - even very meta, like a growth algorithm for an artificial network - will be superior to trying to understand how the brain does it exactly and trying to mimick it artificially.

So while we need to put human brain-power and innovate on the question of the fundamental principles of an intelligent systems (The Alberta Plan for AI Research, self-organization),

we need large-scale search to find “good defaults” ([[michael levin#[Toward AI-Driven Discovery of Electroceutials- Dr. Michael Levin](https //youtu.be/9pG6V4SagZE)|levin]]), which nature had all of evolution for.

symbolic vs. statistical

hand-crafted vs. learned

domain-specific vs. general-purpose

intelligence is the computational part of the ability to choose goals - Sutton

it is not really a thing, but more of a relationship

Sensorimotor view:

Agent’s knowledge = facts about the statistics of its sensorimotor data stream

→ interesting view, because it is reductionist and demystifies world knowledge, clear way of thinking about semantics, implies that knowledge can be verified and learned from the data - “the knowledge is in the data”

(intresting that he, who says we should focus on scaling and underlying principles, says that we need to figure out strategy and planing with off-policy learning and TD leadning…)

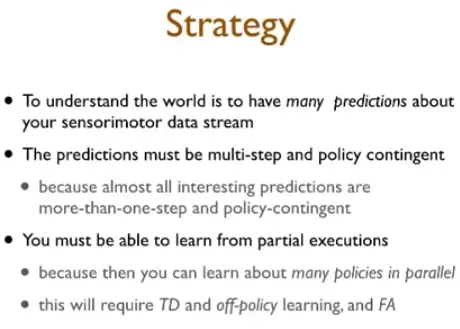

State is the summary of the agent’s past that it uses to predict the future.

State knowledge is to have a good summary, one that enables accurate predictions.

Predictions are dynamics knowledge.

The most important things to predict are the states and rewards, which depend on the actions.

Some approaches: (biased towards what rich is familiar with)

- general value functions

- options and option models (temporal abstraction)

- predictive state representations

- new off-policy learning algorithms (graident-TD, emphatic-TD)

- temporal-difference networks

- deep learning, representation search

- moore’s law

AGI-25 Conference

Experience = Observation, Action, Reward