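A projection is an idempotent linear transformation: applying it twice gives the same result as applying it once ($P^2 = P$). An orthogonal projection splits each vector into a component inside a subspace and a component orthogonal to it.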

In ML, "projection" often means a learned linear transformation, not a geometric projection.
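The difference can be checked numerically. The sketch below is only an illustration: the helper names and the matrix `W` are made up here, with `W` standing in for a learned weight matrix. It builds the matrix of a geometric projection onto a line and tests both matrices for idempotence.

```python
def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))]
            for i in range(len(a))]

def projection_matrix(u):
    """Matrix P = u u^T / (u^T u), the orthogonal projection onto span(u)."""
    uu = sum(x * x for x in u)
    return [[ui * uj / uu for uj in u] for ui in u]

def is_idempotent(m, tol=1e-12):
    """Check that M @ M equals M entrywise, up to a small tolerance."""
    mm = matmul(m, m)
    return all(abs(mm[i][j] - m[i][j]) <= tol
               for i in range(len(m)) for j in range(len(m[0])))

P = projection_matrix([3.0, 4.0])   # geometric projection onto the line through (3, 4)
W = [[0.5, -1.2], [2.0, 0.3]]       # hypothetical stand-in for a learned weight matrix

print(is_idempotent(P))  # True
print(is_idempotent(W))  # False
```

A learned "projection" layer is generally not idempotent, which is why the two meanings should be kept apart.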

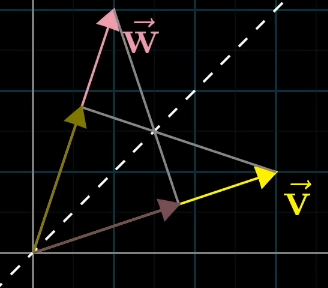

Vector decomposition via projection

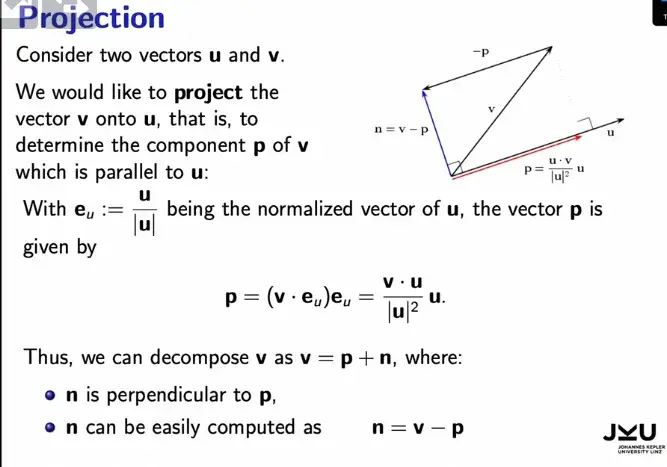

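Any vector $v$ can be decomposed relative to a direction $u$:

$$v = v_\parallel + v_\perp$$

where $v_\parallel$ points along $u$ and $v_\perp$ is orthogonal to it.

The projection $v_\parallel$ is the foot of the perpendicular from $v$ to the line through $u$. The perpendicular component is:

$$v_\perp = v - v_\parallel$$

The inner product $\langle v, u \rangle$ measures how much $v$ points along $u$. Scaling by $1 / \langle u, u \rangle$ gives the right coefficient:

$$v_\parallel = \frac{\langle v, u \rangle}{\langle u, u \rangle}\, u$$

If $u$ is already a unit vector, this simplifies:

$$v_\parallel = \langle v, u \rangle\, u$$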
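A minimal sketch of this decomposition in plain Python, assuming a vector `v` and a direction `u` given as lists of floats (the function names are chosen here for illustration). It computes the coefficient from the inner product, forms the parallel part, and obtains the perpendicular part as the remainder.

```python
def dot(a, b):
    """Inner product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))

def project(v, u):
    """Return (parallel, perpendicular) parts of v relative to direction u."""
    coeff = dot(v, u) / dot(u, u)             # <v,u> / <u,u>
    parallel = [coeff * x for x in u]         # component along u
    perpendicular = [a - b for a, b in zip(v, parallel)]  # what is left over
    return parallel, perpendicular

v = [2.0, 3.0]
u = [4.0, 0.0]                                # not a unit vector, so the division matters
v_par, v_perp = project(v, u)

print(v_par)           # [2.0, 0.0]
print(v_perp)          # [0.0, 3.0]
print(dot(v_perp, u))  # 0.0, the remainder is orthogonal to u
```

Dividing by `dot(u, u)` is what makes the result independent of the length of `u`; for a unit vector that factor is 1 and can be dropped.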

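For an orthogonal basis, any vector decomposes into its projections onto each basis direction. The same vector $v$ in different orthogonal bases $\{e_i\}$ or $\{b_i\}$:

$$v = \sum_i \frac{\langle v, e_i \rangle}{\langle e_i, e_i \rangle}\, e_i = \sum_i \frac{\langle v, b_i \rangle}{\langle b_i, b_i \rangle}\, b_i$$

Each coefficient tells you how much of that basis vector appears in $v$. For an orthonormal basis the denominators equal 1.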
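As a small worked example, the sketch below expands one vector in two orthogonal bases and rebuilds it from the coefficients. The bases and helper names are chosen here for illustration; the second basis is orthogonal but deliberately not unit length, so each coefficient divides by the squared length of its basis vector.

```python
def dot(a, b):
    """Inner product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))

def coefficients(v, basis):
    """Coefficient of v along each vector of an orthogonal basis."""
    return [dot(v, b) / dot(b, b) for b in basis]

def reconstruct(coeffs, basis):
    """Sum of coefficient * basis vector, component by component."""
    dim = len(basis[0])
    return [sum(c * b[i] for c, b in zip(coeffs, basis)) for i in range(dim)]

v = [3.0, 1.0]
standard = [[1.0, 0.0], [0.0, 1.0]]
rotated = [[1.0, 1.0], [1.0, -1.0]]   # orthogonal, each of squared length 2

c_std = coefficients(v, standard)
c_rot = coefficients(v, rotated)

print(c_std)                        # [3.0, 1.0]
print(c_rot)                        # [2.0, 1.0]
print(reconstruct(c_rot, rotated))  # [3.0, 1.0], the same vector again
```

The coefficients differ between the two bases, but both sets describe the same vector.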

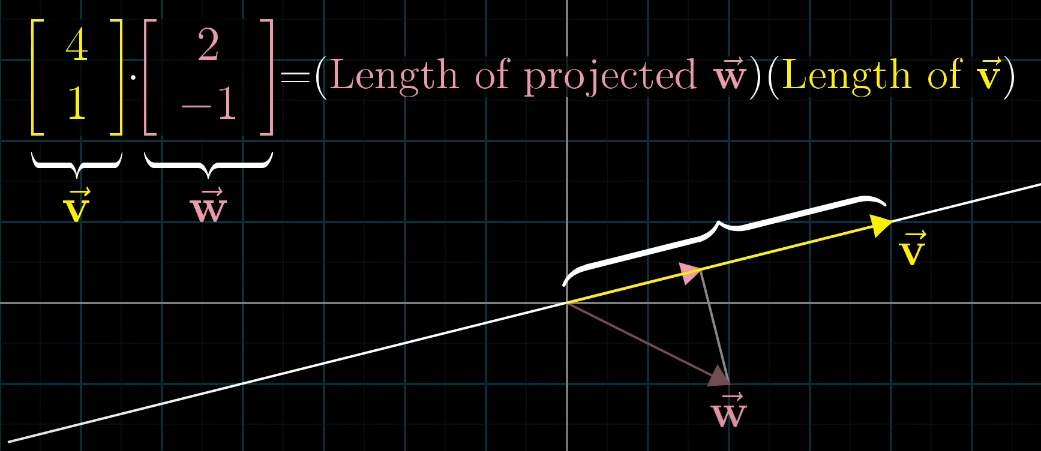

The relation to the cosine and many of the dot product's properties become clear geometrically:

Project one vector onto the other, then multiply the signed length of that projection by the length of the other vector.

The head of the projected vector is found by dropping a perpendicular from the head of one vector onto the line through the other.

→ For two vectors on the unit circle, the signed length of this projection is the cosine of the angle between them.

→ Order does not matter.

The dot product is positive if the vectors point in the same general direction and negative if they point in opposing directions:

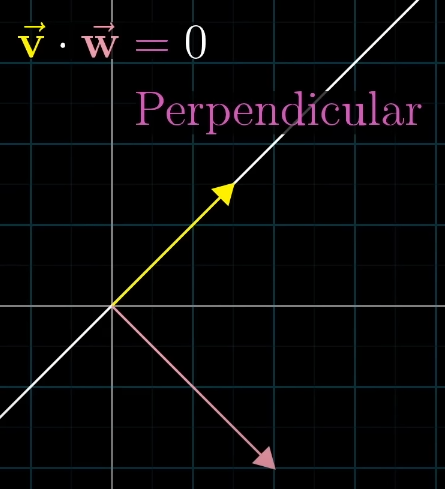

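The projected vector has length $0$ if the vectors $v$ and $u$ are perpendicular:

$$v \perp u \implies v \cdot u = 0$$

Indifference of order:

$$v \cdot u = u \cdot v$$

Scaling one of those vectors by 2 → the dot product is exactly 2x bigger:

$$(2v) \cdot u = 2\,(v \cdot u)$$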
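These properties are easy to check numerically. The sketch below uses two unit vectors at arbitrarily chosen angles (0.3 and 1.1 radians, picked here only for illustration) and compares results with `math.isclose` to allow for floating-point rounding.

```python
import math

def dot(a, b):
    """Dot product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))

def unit(angle):
    """Vector on the unit circle at the given angle in radians."""
    return [math.cos(angle), math.sin(angle)]

v, u = unit(0.3), unit(1.1)

# Unit vectors: the dot product equals the cosine of the angle between them.
cosine_matches = math.isclose(dot(v, u), math.cos(1.1 - 0.3))

# Order does not matter.
symmetric = math.isclose(dot(v, u), dot(u, v))

# Scaling one vector by 2 doubles the dot product.
doubled = math.isclose(dot([2 * x for x in v], u), 2 * dot(v, u))

# Perpendicular vectors give 0; opposing directions give a negative value.
perpendicular = dot([1.0, 0.0], [0.0, 5.0])
opposing = dot([1.0, 0.0], [-2.0, 0.5])

print(cosine_matches, symmetric, doubled)  # True True True
print(perpendicular, opposing)             # 0.0 -2.0
```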