When making models of the world, you are looking for invariances.

The question is to what degree a thing is a causal structure that shapes the universe in a way for which we cannot find an alternative, better representation.

Resources

https://worldmodels.github.io/

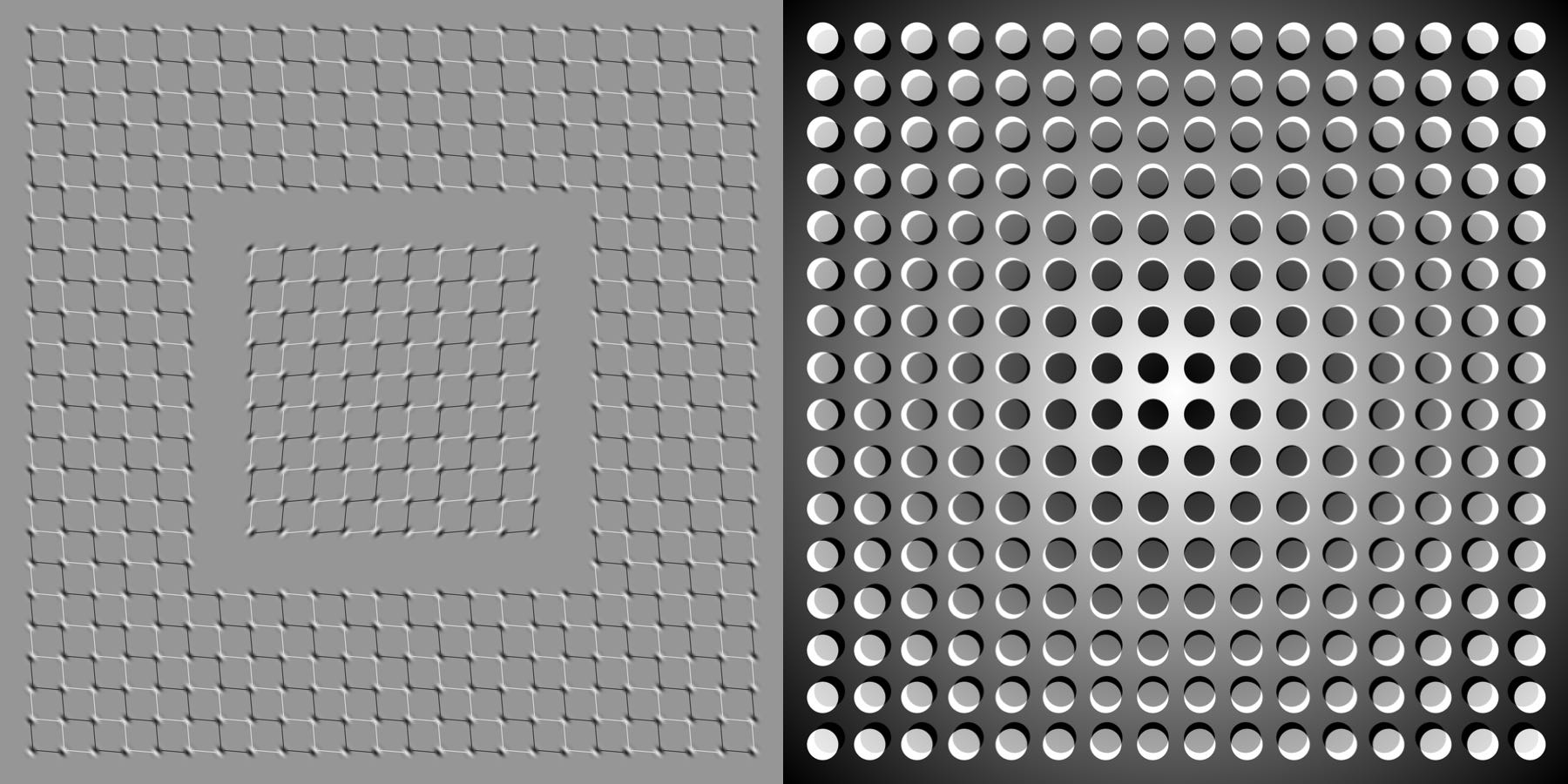

What we see is based on our brain’s prediction of the future.

Recurrent World Models Facilitate Policy Evolution

Optimal decision making, at its core, requires considering counterfactuals.

If I did X instead of Y, would it be better? You can answer that question using a learned simulator, a value function, a reward model, …; in the end it’s all kind of the same, as long as you have some mechanism for figuring out which counterfactual is better.
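As a toy illustration of "it's all kind of the same", here is a minimal Python sketch that answers "would going right be better than going left?" in two ways: by imagining ahead with a simulator, and by looking up a value table computed beforehand. Everything here is a hypothetical example (a 5-cell corridor with the goal at cell 4, discount 0.9), and the simulator is hand-written rather than learned, to keep it short.

```python
# Hypothetical toy world: positions 0..4 on a line, reward 1 for reaching the goal at 4.
GOAL = 4
ACTIONS = {"left": -1, "right": +1}
GAMMA = 0.9

def step(state, action):
    """Simulator: returns (next_state, reward, done)."""
    nxt = min(max(state + ACTIONS[action], 0), GOAL)
    reward = 1.0 if nxt == GOAL else 0.0
    return nxt, reward, nxt == GOAL

def simulate_return(state, action, depth):
    """Counterfactual via imagination: take `action`, then search exhaustively
    (acting greedily) for up to `depth` further simulated steps."""
    nxt, reward, done = step(state, action)
    if done or depth == 0:
        return reward
    return reward + GAMMA * max(simulate_return(nxt, a, depth - 1) for a in ACTIONS)

def value_iteration(n_iters=50):
    """Counterfactual via a value function: a table Q[s][a] computed offline."""
    q = {s: {a: 0.0 for a in ACTIONS} for s in range(GOAL + 1)}
    for _ in range(n_iters):
        for s in range(GOAL):  # the goal is terminal, so its Q-values stay 0
            for a in ACTIONS:
                nxt, reward, done = step(s, a)
                future = 0.0 if done else max(q[nxt].values())
                q[s][a] = reward + GAMMA * future
    return q

q = value_iteration()
start = 2
for a in ACTIONS:
    # Both columns agree: right is worth 0.9, left is worth 0.729.
    print(a, "simulated:", round(simulate_return(start, a, depth=10), 4),
          "table:", round(q[start][a], 4))
```

The two mechanisms give the same ranking; what differs is where the cost is paid. Exhaustive search spends up to 2^depth simulator calls per decision, while the table is a single lookup once it has been computed up front.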

→ The key is not necessarily to run really good simulations, but to answer counterfactuals well.

Simulation through a learned model seems to be the way the brain figures out counterfactuals (mostly during sleep).
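To illustrate why answering counterfactuals matters more than simulation fidelity, here is a small Python sketch with made-up numbers. The "model" is just a deliberately distorted copy of the true returns (wrong scale, wrong offset, some noise), not an actual learned network. Its absolute predictions are badly off, yet it still picks the better of two hypothetical actions X and Y every time, because only the ranking matters for the decision.

```python
import random

# Hypothetical ground truth: the real return of each counterfactual action.
TRUE_RETURN = {"X": 1.0, "Y": 0.6}

def rough_model(action, rng):
    """A deliberately poor predictor: scaled by 4, shifted by +1, plus Gaussian noise."""
    return 4.0 * TRUE_RETURN[action] + 1.0 + rng.gauss(0.0, 0.1)

def evaluate(n_trials=1000, seed=0):
    """Return (fraction of trials choosing the truly best action, mean absolute error)."""
    rng = random.Random(seed)
    best_true = max(TRUE_RETURN, key=TRUE_RETURN.get)
    agree, abs_err = 0, 0.0
    for _ in range(n_trials):
        preds = {a: rough_model(a, rng) for a in TRUE_RETURN}
        agree += int(max(preds, key=preds.get) == best_true)
        abs_err += sum(abs(preds[a] - TRUE_RETURN[a]) for a in TRUE_RETURN) / len(TRUE_RETURN)
    return agree / n_trials, abs_err / n_trials

agreement, mean_abs_error = evaluate()
print(f"picks the better action {agreement:.1%} of the time, "
      f"mean absolute prediction error {mean_abs_error:.2f}")
```

The prediction error here is larger than the returns themselves, but the decision is unaffected. A model would only start choosing wrongly once its errors are large enough to flip the order of the two counterfactuals.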

There are different ways of achieving a world model:

Explicit world models, as in model-based RL: e.g. simulating rewards and probable next states in your head.

Implicit world models (…)
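To make the explicit variant concrete, here is a minimal Python sketch of certainty-equivalence model-based RL, which is one simple instance of the idea and not the method of any specific paper listed here. It assumes a hypothetical 5-cell corridor where moves fail 20% of the time. The agent first fits a tabular transition and reward model from random interaction, then plans entirely inside that learned model with value iteration.

```python
import random
from collections import defaultdict

N_STATES, GOAL, GAMMA = 5, 4, 0.9
ACTIONS = (-1, +1)  # left, right

def env_step(s, a, rng):
    """Hidden 'real' environment (hypothetical): each move fails 20% of the time."""
    nxt = s if rng.random() < 0.2 else min(max(s + a, 0), N_STATES - 1)
    return nxt, (1.0 if nxt == GOAL else 0.0)

def collect(n=5000, seed=0):
    """Log (s, a, r, s') transitions from random actions in random non-goal states."""
    rng = random.Random(seed)
    data = []
    for _ in range(n):
        s = rng.randrange(GOAL)
        a = rng.choice(ACTIONS)
        nxt, r = env_step(s, a, rng)
        data.append((s, a, r, nxt))
    return data

def fit_model(data):
    """Maximum-likelihood tabular model: P(s'|s,a) from counts, R(s,a) as mean reward."""
    counts = defaultdict(lambda: defaultdict(int))
    reward_sum = defaultdict(float)
    for s, a, r, nxt in data:
        counts[(s, a)][nxt] += 1
        reward_sum[(s, a)] += r
    P, R = {}, {}
    for sa, nexts in counts.items():
        total = sum(nexts.values())
        P[sa] = {nxt: c / total for nxt, c in nexts.items()}
        R[sa] = reward_sum[sa] / total
    return P, R

def q_value(P, R, V, s, a):
    """Expected return of action a in state s, according to the learned model."""
    return R[(s, a)] + GAMMA * sum(p * V[n] for n, p in P[(s, a)].items())

def plan(P, R, n_iters=100):
    """Value iteration inside the learned model; the goal is terminal (V stays 0)."""
    V = [0.0] * N_STATES
    for _ in range(n_iters):
        for s in range(GOAL):
            V[s] = max(q_value(P, R, V, s, a) for a in ACTIONS)
    policy = {s: max(ACTIONS, key=lambda a: q_value(P, R, V, s, a)) for s in range(GOAL)}
    return V, policy

P, R = fit_model(collect())
V, policy = plan(P, R)
print(policy)  # every state should map to +1 (move right)
```

No real-environment steps are used during planning: all the "simulating rewards and probable next states" happens in `P` and `R`, which is what makes the world model explicit.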

Manipulating Chess-GPT’s World Model

Todo

Plan2Explore: Active Model-Building for Self-Supervised Visual Reinforcement Learning

GAIA-1: A Generative World Model for Autonomous Driving

Minecraft Diamond Challenge beaten

Mastering Diverse Domains through World Models - MineRL Diamond Challenge.pdf

Made a robot walk in under an hour

DayDreamer World Models for Physical Robot Learning.pdf

Introduction to latent world models in RL

DREAM TO CONTROL LEARNING BEHAVIORS BY LATENT IMAGINATION.pdf