Scaled dot product attention is at the heart of the self-attention and cross-attention mechanisms (refer to these notes for a full explanation).

Scaled dot product attention

$$\mathrm{Attention}(Q, K, V) = \mathrm{softmax}\left(\frac{QK^\top}{\sqrt{d_k}}\right)V$$
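A minimal pure-Python sketch of the standard formulation $\mathrm{softmax}(QK^\top/\sqrt{d_k})\,V$, assuming $Q$, $K$, $V$ are given as lists of row vectors (no batching, masking, or learned projections). The helper names are illustrative, not from any library:

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)  # subtract the max so exp() cannot overflow
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def scaled_dot_product_attention(Q, K, V):
    """Return softmax(Q K^T / sqrt(d_k)) V for lists of row vectors."""
    d_k = len(K[0])
    outputs = []
    for q in Q:
        # one score per key: dot product scaled by 1 / sqrt(d_k)
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in K]
        weights = softmax(scores)
        # convex combination of the value vectors
        out = [sum(w * v[j] for w, v in zip(weights, V)) for j in range(len(V[0]))]
        outputs.append(out)
    return outputs


Q = [[1.0, 0.0], [0.0, 1.0]]
K = [[1.0, 0.0], [0.0, 1.0]]
V = [[1.0, 2.0], [3.0, 4.0]]
print(scaled_dot_product_attention(Q, K, V))
```

Each output row is a weighted average of the rows of `V`, with the weights coming from how well that query matches each key.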

Why do we want a variance of 1?

For softmax, we want the inputs to stay in a moderate range so that the output is fairly diffuse; otherwise it converges towards a one-hot vector (argmax):

Temperature is just the inverse of the constant the input is multiplied by: $\mathrm{softmax}(x / T)$ scales the logits by $1/T$.

If the constant is large (temperature is low), the output distribution gets sharpened towards the max.

If the constant is small (temperature is high), the distribution becomes more diffuse / uniform.
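A small sketch of the temperature effect, using arbitrary example logits; `softmax_with_temperature` is a hypothetical helper, not a library function:

```python
import math


def softmax_with_temperature(logits, temperature):
    """softmax(x / T): a low T sharpens the distribution, a high T flattens it."""
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


logits = [2.0, 1.0, 0.5, 0.0]  # arbitrary example scores
for T in (0.1, 1.0, 10.0):
    probs = softmax_with_temperature(logits, T)
    print(f"T={T}: max weight={max(probs):.3f}")
```

At $T = 0.1$ almost all of the mass lands on the largest logit (close to argmax); at $T = 10$ the top weight drops to roughly $0.28$, close to the uniform $0.25$ for four entries.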

See this visualization notebook of how scaling the inputs affects the distribution.

Otherwise, each token would attend to only a single token, which is not what we want, especially not at initialization, where a saturated softmax also has vanishingly small gradients.

Why is the scaling factor $\sqrt{d_k}$?

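If we assume that $q$ and $k$ are $d_k$-dimensional vectors whose components are independent random variables with mean $0$ and variance $1$, then their dot product $q \cdot k = \sum_{i=1}^{d_k} q_i k_i$ has mean $0$ and variance $d_k$. Dividing by $\sqrt{d_k}$ therefore brings the variance back to $1$.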
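A quick Monte Carlo check of this claim, assuming standard normal components (the claim only needs mean $0$ and variance $1$; the Gaussian is an arbitrary choice for sampling). `dot_product_stats` is a hypothetical helper name:

```python
import math
import random


def dot_product_stats(d_k, n_samples=5000, seed=0):
    """Empirical (mean, variance) of q.k and of q.k / sqrt(d_k) for i.i.d. N(0, 1) components."""
    rng = random.Random(seed)
    raw, scaled = [], []
    for _ in range(n_samples):
        q = [rng.gauss(0.0, 1.0) for _ in range(d_k)]
        k = [rng.gauss(0.0, 1.0) for _ in range(d_k)]
        s = sum(qi * ki for qi, ki in zip(q, k))
        raw.append(s)
        scaled.append(s / math.sqrt(d_k))

    def mean_var(xs):
        m = sum(xs) / len(xs)
        return m, sum((x - m) ** 2 for x in xs) / len(xs)

    return mean_var(raw), mean_var(scaled)


for d_k in (4, 16, 64):
    (m_raw, v_raw), (m_s, v_s) = dot_product_stats(d_k)
    print(f"d_k={d_k}: var(q.k) ~ {v_raw:.1f}, var(q.k / sqrt(d_k)) ~ {v_s:.2f}")
```

The raw variance should come out close to $d_k$ and the scaled variance close to $1$ for every $d_k$.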

Explanation:

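$q_i$ and $k_i$ being independent random variables with mean zero and variance one gives us the following properties:

$\mathbb{E}[q_i] = \mathbb{E}[k_i] = 0$, $\quad \mathbb{E}[q_i^2] = \mathbb{E}[k_i^2] = 1$, $\quad \mathbb{E}[q_i k_i] = \mathbb{E}[q_i]\,\mathbb{E}[k_i] = 0$

→ $\mathbb{E}[q \cdot k] = \sum_{i=1}^{d_k} \mathbb{E}[q_i k_i] = 0$, and therefore $\mathrm{Var}(q \cdot k) = \mathbb{E}[(q \cdot k)^2] - \mathbb{E}[q \cdot k]^2 = \mathbb{E}[(q \cdot k)^2]$.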

We are left with calculating the second moment $\mathbb{E}[(q \cdot k)^2]$ of the dot product.

We expand the square into a double sum over $i$ and $j$ and calculate the diagonal terms ($i = j$) separately from the off-diagonal terms ($i \neq j$).
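Written out, under the same assumptions (all components of $q$ and $k$ mutually independent, with mean $0$ and variance $1$):

$$
\begin{aligned}
\mathbb{E}\big[(q \cdot k)^2\big]
&= \sum_{i=1}^{d_k} \sum_{j=1}^{d_k} \mathbb{E}[q_i k_i q_j k_j] \\
&= \underbrace{\sum_{i=1}^{d_k} \mathbb{E}[q_i^2]\,\mathbb{E}[k_i^2]}_{i = j}
 + \underbrace{\sum_{i \neq j} \mathbb{E}[q_i]\,\mathbb{E}[q_j]\,\mathbb{E}[k_i]\,\mathbb{E}[k_j]}_{i \neq j} \\
&= d_k \cdot 1 \cdot 1 + 0 = d_k
\end{aligned}
$$

So $\mathrm{Var}(q \cdot k) = d_k$, and $\mathrm{Var}\big(q \cdot k / \sqrt{d_k}\big) = d_k / d_k = 1$.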

Why softmax?

It’s differentiable.

It converts arbitrary scores (logits) into a probability distribution.

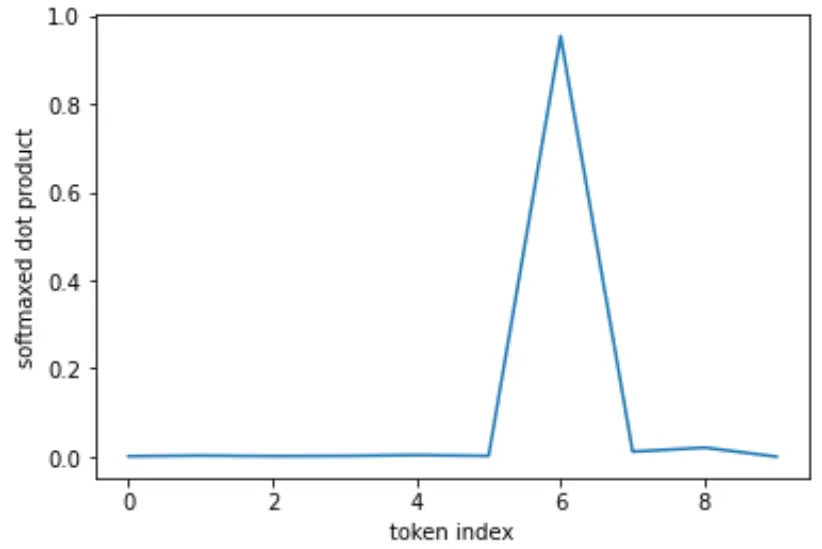

It has a non-linear “winner-take-all” characteristic: It amplifies strong signals (high correlation scores) and suppresses noise (low correlation scores), which seems useful for focusing attention on the most relevant tokens:

Softmax has noise-suppression properties, like max: the highest value dominates and the others are driven towards $0$.

This can be a useful feature for singling out tokens in softmax scaled dot product attention.

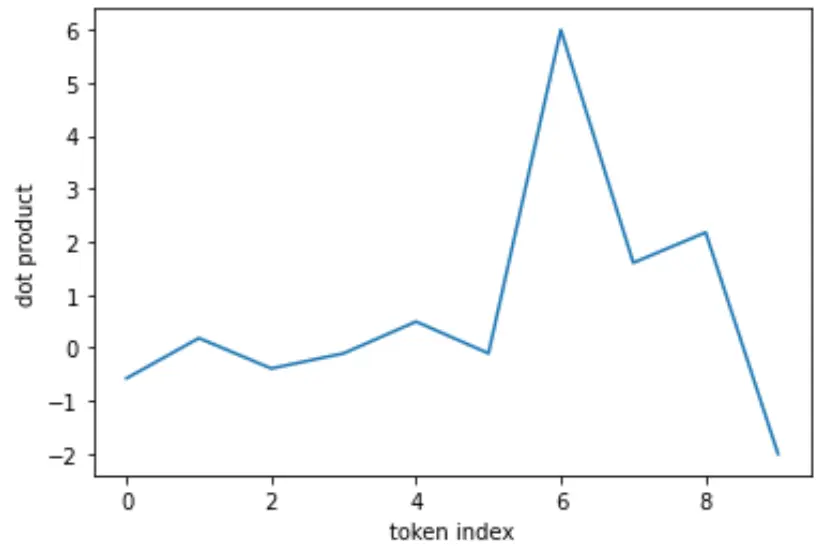

Raw values:

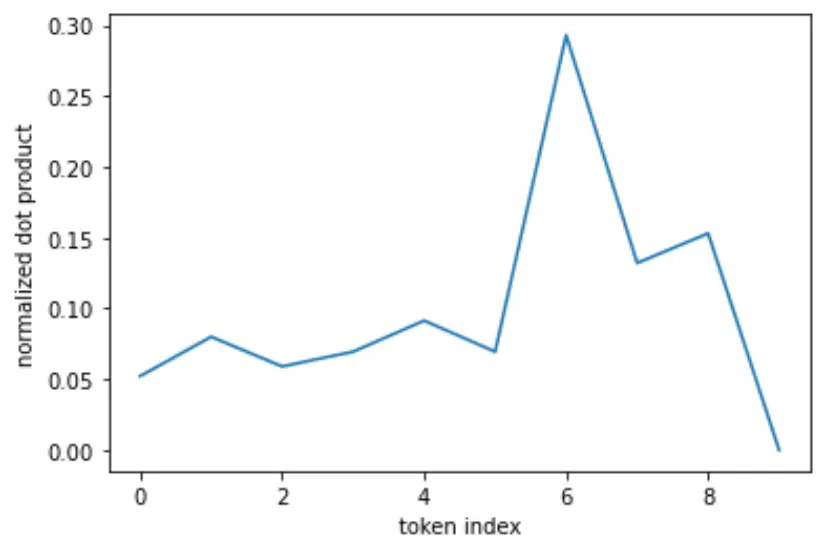

Normalized:

Softmax:
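The raw / normalized / softmax comparison can be sketched in a few lines with made-up, non-negative scores; "normalized" here is assumed to mean dividing by the sum:

```python
import math


def linear_normalize(xs):
    """Divide by the sum (assumes non-negative scores)."""
    total = sum(xs)
    return [x / total for x in xs]


def softmax(xs):
    m = max(xs)  # shift for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


raw = [4.0, 3.0, 1.0, 0.5, 0.5]  # hypothetical correlation scores
print("raw:       ", raw)
print("normalized:", [round(p, 3) for p in linear_normalize(raw)])
print("softmax:   ", [round(p, 3) for p in softmax(raw)])
```

Softmax moves the top entry from about $0.44$ to about $0.68$ of the mass, while the two smallest entries drop from about $0.056$ to about $0.020$. Unlike division by the sum, the result also depends on the absolute scale of the scores, which is exactly the temperature effect.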

One issue with softmax is that for growing sequence lengths, the attention on individual tokens gets diffused, making it easier for signal to get lost in noise.

The distribution becomes progressively flatter, entropy increases, and the largest value becomes much smaller.

As sequence length grows, so does the need to produce outlier-large logits that overwhelm all the others in order to achieve sharpness. We end up with many small spikes rather than a few large ones.
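An idealized sketch of this drift: one target token whose logit sits a fixed gap above $n - 1$ distractors with identical logits (a simplifying assumption; real score distributions are not this clean). `gap_needed` is a hypothetical helper that solves $e^g / (e^g + n - 1) = p$ for the gap $g$ that keeps the target weight at $p$:

```python
import math


def max_weight_and_entropy(n, gap):
    """Top softmax weight and entropy (nats) when one logit sits `gap` above n - 1 equal logits."""
    e_gap = math.exp(gap)
    z = e_gap + (n - 1)  # softmax denominator, distractor logits fixed at 0
    p_max = e_gap / z
    p_other = 1.0 / z
    entropy = -p_max * math.log(p_max) - (n - 1) * p_other * math.log(p_other)
    return p_max, entropy


def gap_needed(n, target_p):
    """Logit gap that gives the target token weight target_p among n >= 2 tokens."""
    # Solve e^g / (e^g + n - 1) = p for g  =>  g = log(p * (n - 1) / (1 - p))
    return math.log(target_p * (n - 1) / (1 - target_p))


for n in (16, 256, 4096, 65536):
    p_max, h = max_weight_and_entropy(n, gap=5.0)
    print(f"n={n}: top weight={p_max:.3f}, entropy={h:.2f} nats, "
          f"gap for 0.9 weight={gap_needed(n, 0.9):.2f}")
```

With a fixed gap the top weight collapses as $n$ grows, and the gap required to hold it at $0.9$ grows like $\log n$, so the logits (and therefore the Q/K norms) have to keep growing with context length to maintain the same sharpness.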

Attention sinks might be a result of this: weight is placed on a sink token, reducing the weight on other, informative tokens, just to reduce the magnitude of the output.

This and other issues motivate the search for alternatives to softmax in attention, or for ways to fix its bugs (Softmax attention is a fluke):

Softmax's Behavior with Variable Sequence Lengths

Entropy/Sharpness Drift: As the sequence length $n$ increases, a standard softmax distribution tends to become flatter (higher entropy, smaller maximum value) for the same range of input logits. To maintain “sharp” attention (concentrated on a few tokens) in longer sequences, the model needs to produce disproportionately large logit values for the target tokens to overcome the larger denominator sum.

Difficulty Achieving “Null Attention”: Softmax forces the weights to sum to 1. A token cannot easily choose to “attend to nothing” or significantly reduce the overall magnitude of the attention layer’s contribution, as the denominator is simply the sum of the numerators $e^{s_j}$ (with $s_j$ the attention scores). Even if all correlations are low or negative, softmax will still produce a distribution summing to 1.

Emergence of “Attention Sinks”: As a maladaptive workaround for the lack of true null attention, models often learn to dump large amounts of attention weight onto semantically meaningless tokens (like BOS, padding tokens, or even punctuation). By attending heavily to a “dummy” token (whose value projection might also be learned towards zero), a token can effectively reduce the influence of other informative tokens while still satisfying the sum-to-1 constraint.
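A toy illustration of the sink mechanism, assuming a hypothetical sink token at index 0 whose value vector has been learned to be exactly zero (real sinks are only approximately so). Raising the sink's score leaves the relative weights of the informative tokens unchanged and only shrinks the output by a factor of $1 - w_{\text{sink}}$:

```python
import math


def softmax(xs):
    m = max(xs)  # shift for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def attend(scores, values):
    """Return (output vector, weights) for one query given its scores over the keys."""
    w = softmax(scores)
    out = [sum(wi * v[j] for wi, v in zip(w, values)) for j in range(len(values[0]))]
    return out, w


# Index 0 is a hypothetical sink token (e.g. BOS) with a zero value vector.
values = [[0.0, 0.0], [1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
informative_scores = [0.2, -0.1, 0.3]

for sink_score in (-10.0, 0.0, 3.0, 6.0):
    out, w = attend([sink_score] + informative_scores, values)
    norm = math.sqrt(sum(x * x for x in out))
    print(f"sink score {sink_score:5.1f}: sink weight={w[0]:.3f}, |output|={norm:.3f}")
```

The sum-to-1 constraint is still satisfied in every case; the sink simply absorbs the mass that the token "does not want" to spend on real content.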

Training Instability Hypothesis in Causal LMs: During training of causal models (like GPT), different tokens effectively attend to sequences of different lengths (from 1 up to the context window size) simultaneously. However, they all share the same Q/K projection matrices. The optimal Q/K vector norms (to achieve desired attention sharpness) might differ depending on the effective sequence length. Short sequences might prefer lower norms (for softer attention), while long sequences might need higher norms (to fight entropy increase). These conflicting requirements on shared weights could lead to optimization challenges and instability.

Do We Need Softmax's Properties for Attention?

Why must weights be probabilities? Attention is about weighted mixing of information (value vectors). Does this mixing strategy have to be framed as a probability distribution? Other kernels (e.g., polynomials) can also provide noise suppression without the probabilistic interpretation.

Why must weights be positive? Could negative correlations be just as informative as positive ones? Perhaps the goal should be suppressing small (near-zero) correlations, rather than strictly enforcing positivity.

Why must weights sum to one? Does attention always need to output a convex combination of value vectors? Maybe allowing the layer to scale its output magnitude (e.g., weights summing to < 1 or > 1) could be beneficial, allowing the layer to control its influence.
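A hypothetical illustration of these three questions, not a method from the article: an odd-power kernel $w_i = s_i^3$ applied to already-scaled scores. It keeps the sign of negative correlations, suppresses near-zero scores much more than large ones, and does not force the weights to sum to one. The names `signed_power_weights` and `mix` are made up for this sketch, and a real layer would still need some way to keep the output magnitude under control:

```python
def signed_power_weights(scores, p=3):
    """Hypothetical kernel w_i = s_i ** p; an odd p keeps the sign of each score."""
    if p % 2 != 1:
        raise ValueError("p must be odd to preserve the sign of the scores")
    return [s ** p for s in scores]


def mix(weights, values):
    """Unnormalized weighted sum of value vectors; the weights need not sum to 1."""
    return [sum(w * v[j] for w, v in zip(weights, values)) for j in range(len(values[0]))]


scores = [0.9, 0.1, -0.8, 0.05]  # hypothetical, already-scaled correlations
values = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [0.5, 0.5]]
weights = signed_power_weights(scores)
print([round(w, 4) for w in weights], round(sum(weights), 4))
print(mix(weights, values))
```

Here the ratio between the largest and the smallest score, $0.9 / 0.05 = 18$, becomes $18^3 = 5832$ after the kernel, the $-0.8$ correlation contributes negatively, and the weights sum to about $0.218$ rather than $1$.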

Potential Fixes: Towards "Calibrated Attention"

The article proposes modifications to the attention mechanism to address these issues:

- Sequence-Length Dependent Scaling: Instead of a fixed scaling factor (like $1/\sqrt{d_k}$), make the scaling dependent on the current sequence length $n$. This could potentially stabilize the entropy/sharpness of the attention distribution across different lengths. This could be a fixed formula or a learned function of $n$.

- Denominator Bias: Introduce an additive bias term $b$ (could be fixed, e.g., $b = 1$ as in “Softmax-plus-one”, or learned, potentially also dependent on the sequence length $n$) into the softmax denominator: $w_i = \frac{e^{s_i}}{b + \sum_j e^{s_j}}$. This decouples the numerator and denominator slightly. If all $e^{s_j}$ are small relative to $b$, the weights can become very small, approaching zero, allowing for true “null attention” or partial attention (weights summing to < 1). This removes the need for attention sinks.
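A minimal sketch combining both fixes, under stated assumptions: `calibrated_softmax` is a hypothetical helper, the bias is a constant rather than learned, and the length-dependent scaling multiplies the logits by $\log n$, which is just one possible fixed formula, not necessarily the one the article has in mind. Treating the bias as an extra logit $\log b$ keeps the computation numerically stable:

```python
import math


def calibrated_softmax(scores, bias=1.0, length_scale=False):
    """Weights e^{s_i} / (bias + sum_j e^{s_j}), optionally with logits scaled by log(n).

    bias=0 and length_scale=False give plain softmax; bias=1 gives "softmax-plus-one".
    The log(n) scaling is one hypothetical choice of length-dependent scaling.
    """
    n = len(scores)
    if length_scale:
        scores = [s * math.log(n) for s in scores]
    # Log-sum-exp shift; a positive bias acts like an extra logit log(bias).
    m = max(scores)
    if bias > 0:
        m = max(m, math.log(bias))
    exps = [math.exp(s - m) for s in scores]
    denom = sum(exps)
    if bias > 0:
        denom += math.exp(math.log(bias) - m)
    return [e / denom for e in exps]


# Every key is a poor match: plain softmax still distributes a total weight of 1.
low = [-4.0, -5.0, -3.5]
print(sum(calibrated_softmax(low, bias=0.0)), sum(calibrated_softmax(low, bias=1.0)))

# One key with logit 1 above n - 1 equal keys, with and without log(n) scaling.
for n in (16, 256, 4096):
    scores = [1.0] + [0.0] * (n - 1)
    plain_top = calibrated_softmax(scores, bias=0.0)[0]
    scaled_top = calibrated_softmax(scores, bias=0.0, length_scale=True)[0]
    print(f"n={n}: top weight plain={plain_top:.3f}, log-n scaled={scaled_top:.3f}")
```

With all scores low, plain softmax still hands out a total weight of 1, while the $+1$ variant hands out only about $0.05$. With the $\log n$ scaling, a logit gap of 1 keeps the top weight at $n / (2n - 1) \approx 0.5$ for every $n$, whereas without it the top weight decays like $e / (e + n - 1)$.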

There’s a paper claiming to address this:

Never mind, doesn’t scale:

Softpick - No Attention Sink, No Massive Activations with Rectified Softmax