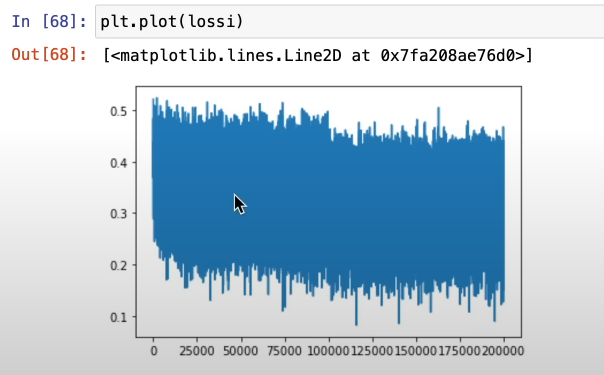

Having a good initialization prevents the characteristic “hockey shape” of the loss function, which is essentially just the NN shrinking down way to high weights

Good example:

Values should also be initialized close to 0, so that the initial values don’t overshoot, which can lead to dead neurons for activation functions like tanh, sigmoid, ReLU (Since if you’re too far from the middle, they are flat → 0 gradient → no learning). This can also happen due to too high learning rate.

What you want is a fairly homogenous, roughly gaussian initialization.

Initializing with a pretrained model’s parameteres (even for completely different tasks) often gives better performance than initializing randomly. A reason for this might be that pretraining offers initial weights that are not only closer to the optimum but also less likely to stuck in bad local optima than random initialization.

This observation seems to support something like The Platonic Representation Hypothesis.

Some papers this is observed in:

Randomly initialized neural network != random function!

References

Makemore (also plotting tanh activations, …)