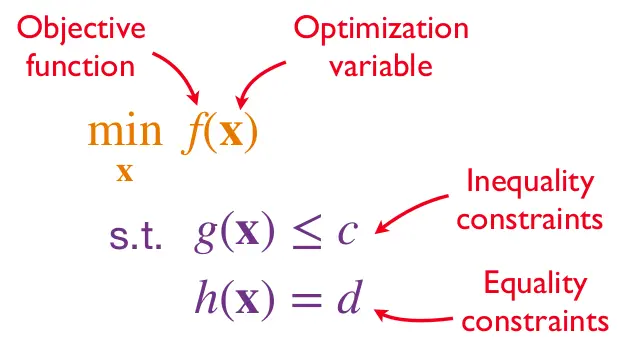

“s.t.” … subject to

Properties of that determine which optimization algorithm to employ

Is known or unknown?

If there’s no mathematical model for , we compute it by interaction with a simulation or experiment (black-box optimization).Can we compute the gradient ?

If we can, we can use first order optimization methods. If not, we must use zero order optimization methods, approximating gradients or using only function values.Is high dimensional?

If has high-dimensional decision variables (like millions of parameters), we must use algorithms that scale well with dimension, like SGD.Is convex or nonconvex?

If is convex, we can use efficient convex optimization algorithms with global convergence guarantees. Each local minimum is also a global minimum.

Deep learning objectives are typically nonconvex, so we use heuristics like SGD with momentum or Adam.Is linear of nonlinear?

If is linear, we can use linear programming techniques.Is expensive to compute or evaluate?

If is expensive, we may use surrogate models or bayesian optimization to minimize the number of evaluations.Does encode dynamics in time?

References

Intro to optimization in deep learning: Momentum, RMSProp and Adam