See also: Machine Consciousness - From Large Language Models to General Artificial Intelligence - Joscha Bach

… like software is an extremely specific physical law: Certain calculations happen in a computer when you arrange the matter in a particular way and look at it from a particular perspective and the right level of abstraction.

→ Consciousness is not a physical thing, and it only exists when you actually run it.

→ Consciousness does not have an identity - your consciousness and mine cannot be either different or the same.

It is a function that transforms / manipulates these representations in a way:

Transclude of operator#^9554af

Consciousness is also like a self-perpetuating pattern. It seems to increase its own range, colonizing our brain and beyond (society, …).

Transclude of Joscha-Bach#^7ac9c7

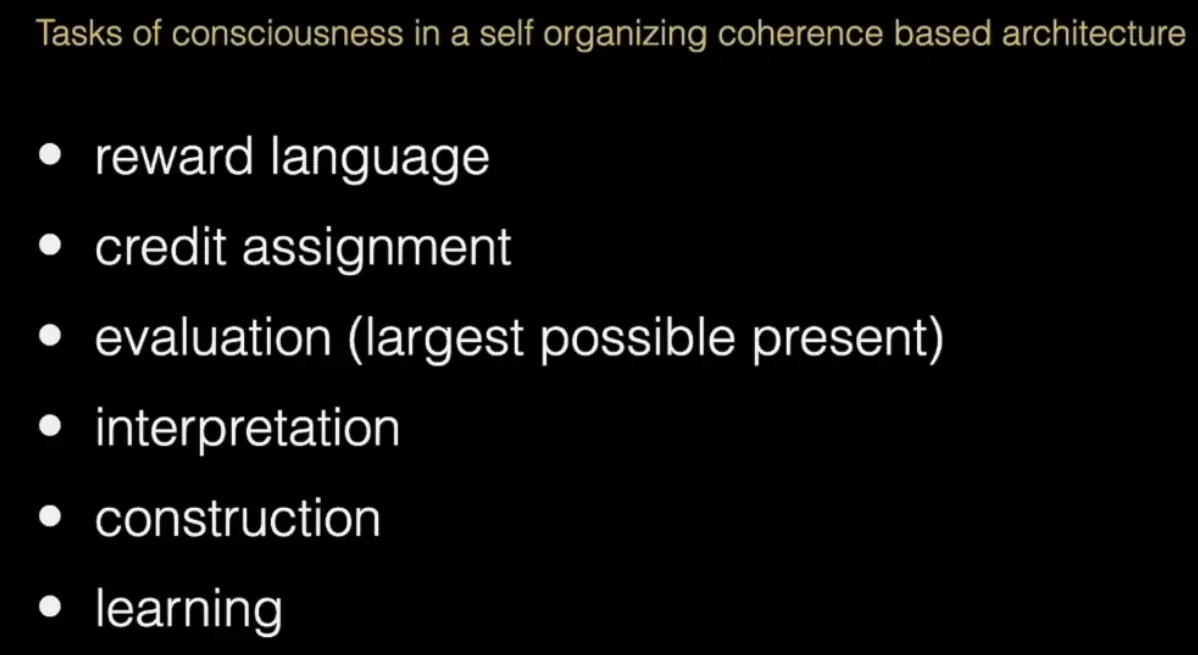

Role of consciousness

Control model of attention.

Allows convergence to a coherent interpretation (low energy state).

Maintains indexed memory for disambiguation, learning and reasoning.

Makes knowledge accessible throughout the mind (like a communication interface?).
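To make "convergence to a coherent interpretation (low energy state)" a bit more concrete, here is a minimal Python sketch of a Hopfield network. It is a classic example of a system whose asynchronous updates never increase an energy function, so it settles into a stable, low-energy state. This is my own illustrative analogy, not Bach's model; the function names (`train`, `energy`, `settle`) and the tiny 6-unit pattern are hypothetical choices.

```python
import random
from typing import List

def train(patterns: List[List[int]]) -> List[List[float]]:
    """Hebbian weights: w_ij = sum over patterns of p_i * p_j, zero diagonal."""
    n = len(patterns[0])
    w = [[0.0] * n for _ in range(n)]
    for p in patterns:
        for i in range(n):
            for j in range(n):
                if i != j:
                    w[i][j] += p[i] * p[j]
    return w

def energy(w: List[List[float]], s: List[int]) -> float:
    """Hopfield energy E = -1/2 * sum_ij w_ij * s_i * s_j (no bias terms)."""
    n = len(s)
    return -0.5 * sum(w[i][j] * s[i] * s[j] for i in range(n) for j in range(n))

def settle(w: List[List[float]], state: List[int], sweeps: int = 10, seed: int = 0) -> List[int]:
    """Asynchronous updates in random order; with symmetric weights and a zero
    diagonal, no single update can increase the energy."""
    rng = random.Random(seed)
    s = list(state)
    order = list(range(len(s)))
    for _ in range(sweeps):
        rng.shuffle(order)
        for i in order:
            field = sum(w[i][j] * s[j] for j in range(len(s)))
            s[i] = 1 if field >= 0 else -1
    return s

pattern = [1, 1, 1, -1, -1, -1]
w = train([pattern])
noisy = [1, -1, 1, -1, -1, -1]  # one unit flipped: an "incoherent" interpretation
result = settle(w, noisy)
print(result == pattern, energy(w, noisy), energy(w, result))  # True -5.0 -15.0
```

The noisy input is pulled back to the stored pattern, and the energy drops along the way; in this analogy, "coherence" is simply the state with the fewest violated pairwise constraints.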

People don't become conscious after getting their PhD; they already are before they can track a finger.

Consciousness is often viewed as the pinnacle / a result of interacting with the world very deeply, but it might really be the prerequisite for navigating within this world / learning anything.

Consciousness is virtual.

Physical objects cannot be experienced (or experience…).

Our perception of the world is a virtual reality generated by the brain.

All experienceable objects are representations of the mind, interpreted from the perspective of the self.

The personal self is a representation too and can be deconstructed, e.g. through meditation: you observe that your personal self is changing and that the dimensions of perception change accordingly.

“The perception of reality is a trance state”, i.e. a state in which we don’t notice that the thing we are interacting with is a representation. When we deconstruct the trance (e.g. by meditating) and get into an “enlightened state”, we perceive closer to true reality, outside of our simulated world: the trance and our own self become apparent as representations.

Consciousness is a second-order perception - a perception of perceiving.

There is not just content present to me, but I know there is content present to me, which I notice immediately, on a perceptual level and not via symbolic inference / reflection.

Consciousness always happens now.

In fact, it is the thing that creates the presence / bubble of now.

It is a region in time: not physical time, but something that gets constructed post hoc and is edited all the time in our brain. So it is always somewhat behind physical time but also ahead of it, because part of the subjective now is constructed from the past, and part of it is constructed from expectations of the future. When these expectations get violated within that bubble of now, our mind edits these experiences, and we do not remember that our perception of the now was different a moment ago.

You cannot store a dynamic system that depends on its environment as a static structure in space. You can (in theory) store a snapshot of a brain, and thus a snapshot of the consciousness, but it only has meaning when it is running.
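The bubble of now can be caricatured as a small data structure: a short window holding recent observations plus expectations about the next moments, where expectations are rebuilt after every observation and a violated one is overwritten rather than remembered. This is a hypothetical Python sketch under my own assumptions (the class name `SubjectiveNow`, the window sizes and the "last value + 1" predictor are made up for illustration); it only shows the bookkeeping, not how a brain does it.

```python
from collections import deque
from typing import Callable, List, Optional

class SubjectiveNow:
    """Hypothetical 'bubble of now': recent observations plus expectations.

    After every observation the expectations are rebuilt and the old ones are
    discarded, so when an expectation was violated, no trace of the earlier
    'now' survives (a crude stand-in for post-hoc editing)."""

    def __init__(self, past: int = 3, future: int = 2,
                 predict: Optional[Callable[[List[int]], int]] = None) -> None:
        self.past: deque = deque(maxlen=past)  # remembered observations
        self.future_len = future
        # default predictor (assumption): the next value repeats the last one
        self.predict = predict or (lambda hist: hist[-1] if hist else 0)
        self.future: List[int] = []            # current expectations

    def _rebuild_expectations(self) -> None:
        history = list(self.past)
        expectations: List[int] = []
        for _ in range(self.future_len):
            expectations.append(self.predict(history + expectations))
        self.future = expectations

    def observe(self, value: int) -> bool:
        """Integrate an observation; return True if it violated an expectation."""
        violated = bool(self.future) and self.future[0] != value
        self.past.append(value)
        self._rebuild_expectations()  # old expectations are overwritten, not stored
        return violated

    def window(self) -> List[int]:
        """The current 'now': remembered past followed by expected future."""
        return list(self.past) + self.future

now = SubjectiveNow(past=3, future=2, predict=lambda h: h[-1] + 1 if h else 0)
now.observe(1)              # no expectations yet
now.observe(2)              # matches the expectation 2
surprise = now.observe(5)   # expected 3, got 5: the expectation is silently replaced
print(surprise, now.window())  # True [1, 2, 5, 6, 7]
```

Note that the window mixes remembered and predicted values, which is the sense in which the "now" is both behind and ahead of the latest input.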

→ Consciousness is a stable pattern or structure through time that emerges from the brain’s activity ←

Consciousness is completely dynamic, with constant state transitions from one moment to the next:

Transclude of movement#^251732

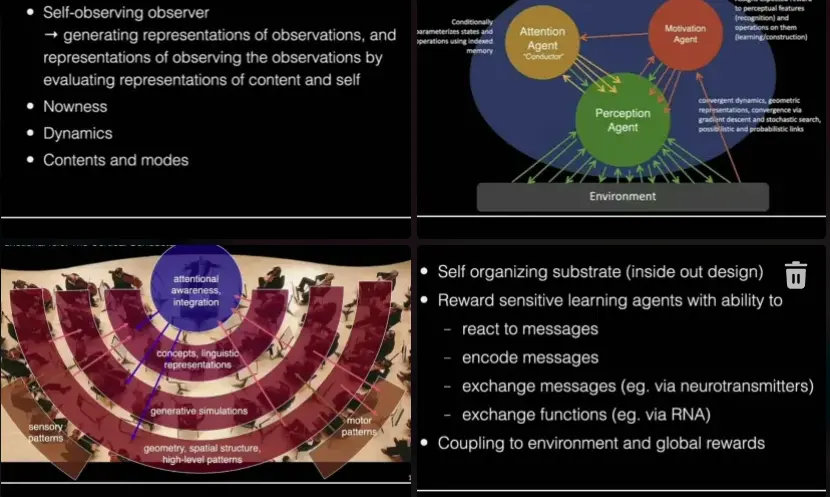

Self-observing observer.

Generates representations of observations and representations of observing the observations by evaluating representations of content and self.

One challenge with the notion of coherence / consistency / minimization of constraint violations is that it is a semantic notion - already at the level where a representation is established…

→ Can we map this onto a syntactic criterion that we can establish in a general enough way?

→ Or is there a good proxy, e.g. an energy-based notion: How many operations does a model need to keep current when connected to a processing stream? How well are you able to predict the data that you care about?

→ In a system with a fixed amount of resources: can you organize the system so that it performs the most valuable computations with the available resources? Something like coherence might then emerge as a sub-goal, something the system would need to optimize in order to produce the desired performance.

→ It could also be that the system has to develop a market in order to do that: agents need to decide which software to run, but an individual agent does not have the capacity, and is not entangled enough with the larger environment, to do so. Intermediate layers arise that decide how many compute credits each individual agent gets.

Energy in the mind is compute credits.
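The market idea can be sketched as an intermediate layer that hands out a fixed budget of compute credits according to how well each agent predicts its data stream (one of the proxies mentioned above). Everything here is an assumption for illustration: the scoring rule `1 / (1 + mean absolute error)`, the proportional split with largest-remainder rounding, and the three toy agents are mine, not Bach's.

```python
from typing import Callable, Dict, List

def prediction_score(predict: Callable[[float], float], data: List[float]) -> float:
    """Score in (0, 1]: 1 / (1 + mean absolute one-step prediction error)."""
    errors = [abs(predict(x) - y) for x, y in zip(data, data[1:])]
    return 1.0 / (1.0 + sum(errors) / len(errors))

def allocate_credits(scores: Dict[str, float], budget: int) -> Dict[str, int]:
    """Hypothetical intermediate layer: split an integer budget of compute
    credits in proportion to each agent's score (largest-remainder rounding)."""
    total = sum(scores.values())
    raw = {name: budget * s / total for name, s in scores.items()}
    credits = {name: int(r) for name, r in raw.items()}
    leftover = budget - sum(credits.values())
    # hand the remaining credits to the agents with the largest fractional parts
    by_remainder = sorted(raw, key=lambda n: raw[n] - credits[n], reverse=True)
    for name in by_remainder[:leftover]:
        credits[name] += 1
    return credits

data = [0, 1, 2, 3, 4, 5]
agents = {
    "increment": lambda x: x + 1,  # perfect predictor for this stream
    "constant": lambda x: x,       # always one step behind
    "zero": lambda x: 0,           # ignores its input
}
scores = {name: prediction_score(f, data) for name, f in agents.items()}
credits = allocate_credits(scores, budget=100)
print(credits)  # {'increment': 57, 'constant': 29, 'zero': 14}
```

Agents that model their part of the world well get more compute in the next round, which is one way coherence could emerge as a sub-goal rather than being imposed.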

Cells need consciousness but cannot be conscious.

Cells need a way to coarse-grain the information they perceive: we don’t have wave equations in our brain, but colors, sounds, … (qualia). These are an extremely simplified abstraction produced by our brain’s generative simulation (“game engine”), conditioned on our input. Cells can’t do any of this on their own, as they lack global context (and complexity); an individual cell does not have the capacity to be conscious: to reflect what its attention singles out, to know what it would be like to be a social organism navigating the world.

Bach also calls this “game engine” the perception agent. It is controlled by a motivation agent, which is guided by natural geometric features but, importantly, assigns rewards to perceptual features and learns / constructs with their help, giving feedback to the attentional agent. The attentional agent conditionally parameterizes states and operations using indexed memory, orchestrating other parts of the mind and trying to maintain coherent behaviour.
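A toy version of the loop between perception, motivation and attention might look like the following Python sketch. It is only a caricature under my own assumptions: perception just passes named features through (a real "game engine" would be a generative model), motivation scores features with fixed hypothetical `preferences`, and attention picks the highest-reward feature and logs it in an indexed memory keyed by time step.

```python
from typing import Dict, List, Tuple

Features = Dict[str, float]

def perception_agent(observation: Features) -> Features:
    """Stand-in for the generative 'game engine': here it just copies the input features."""
    return dict(observation)

def motivation_agent(features: Features, preferences: Features) -> Features:
    """Assign a reward to each perceptual feature (feature strength x preference)."""
    return {f: v * preferences.get(f, 0.0) for f, v in features.items()}

class AttentionAgent:
    """Select the most rewarding feature and record it in an indexed memory."""

    def __init__(self) -> None:
        self.memory: Dict[int, Tuple[str, float]] = {}  # step -> (feature, reward)

    def attend(self, step: int, rewards: Features) -> str:
        feature = max(rewards, key=rewards.get)
        self.memory[step] = (feature, rewards[feature])
        return feature

def run(observations: List[Features], preferences: Features) -> AttentionAgent:
    attention = AttentionAgent()
    for step, obs in enumerate(observations):
        features = perception_agent(obs)
        rewards = motivation_agent(features, preferences)  # feedback to attention
        attention.attend(step, rewards)
    return attention

observations = [{"food": 0.2, "noise": 0.9}, {"food": 0.05, "noise": 0.9}]
attention = run(observations, preferences={"food": 1.0, "noise": 0.1})
print([attention.memory[s][0] for s in sorted(attention.memory)])  # ['food', 'noise']
```

Hand-wiring the three roles like this is exactly what the next paragraph warns against; the sketch only makes the data flow between them explicit.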

I would say it’s a neat abstraction, but I feel like all three of those ultimately need to be self-organized (ok, he says this in the next sentence…):

Our brain is a fully self-organizing substrate. It is made out of single-celled animals that need to survive, which is only possible if they can find some organization that allows the organism to find food for the neurons. Every global structure in the brain originates from self-organization.

What does an information-processing / machine-learning system made of completely self-organizing elements look like?

Reward-sensitive learning agents with ability to:

- react to messages

- encode messages

- exchange messages (“neurotransmitters”)

- exchange functions (“RNA”)

A common communication and reward mechanism (a language of thought) is necessary, one that is able to grow into a coherent pattern that allows you to explain reality.
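The four abilities listed above, plus a shared reward signal, can be mocked up as a small Python class. This is a hypothetical sketch, not Bach's design: messages are plain floats, a unit's "function" is a Python callable standing in for the transferable code the note compares to RNA, and the reward rule (negative distance to a target) and the "worst unit copies the best unit's function" step are my own assumptions.

```python
from typing import Callable, List

class Unit:
    """Hypothetical reward-sensitive unit with the four abilities from the list."""

    def __init__(self, name: str, fn: Callable[[float], float]) -> None:
        self.name = name
        self.fn = fn                  # transferable function (RNA-like stand-in)
        self.inbox: List[float] = []  # received messages
        self.reward = 0.0

    def receive(self, msg: float) -> None:
        self.inbox.append(msg)

    def react(self) -> float:
        """React to messages: consume the inbox and sum it."""
        total = sum(self.inbox)
        self.inbox.clear()
        return total

    def encode(self, value: float) -> float:
        """Encode a message with the unit's current function."""
        return self.fn(value)

    def send(self, other: "Unit", value: float) -> None:
        """Exchange messages: encode a value and deliver it to another unit."""
        other.receive(self.encode(value))

    def share_function(self, other: "Unit") -> None:
        """Exchange functions: the other unit adopts this unit's function."""
        other.fn = self.fn

def step(units: List[Unit], signal: float, target: float) -> str:
    """Every unit processes the same signal; the worst unit copies the best unit's function."""
    for u in units:
        u.receive(signal)
        u.reward = -abs(u.encode(u.react()) - target)
    best = max(units, key=lambda u: u.reward)
    worst = min(units, key=lambda u: u.reward)
    best.share_function(worst)
    return best.name

units = [Unit("a", lambda x: 2 * x), Unit("b", lambda x: x + 1), Unit("c", lambda x: 0 * x)]
print(step(units, signal=3.0, target=6.0))  # a
print(units[2].encode(3.0))                 # 6.0: "c" now runs the function of "a"
```

The shared reward is what lets useful functions spread through the population; without a common currency, units could not tell which function to copy.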

At the core of making global coherence happen - the minimal coherent pattern - is the self-observing observer.

“Cogito ergo sum” … before you can navigate the world and make sense of it at a high level, you have to be able to observe introspectively and realize: “here I am; what is this world around me?”

The most general approach / search space.

→ Lots of self-organizing reinforcement units.

→ In a spatial neighborhood with each other.

→ Selection function: pick a receptive field; units choose where to read information from (this determines the topology). Learned global + local functions.

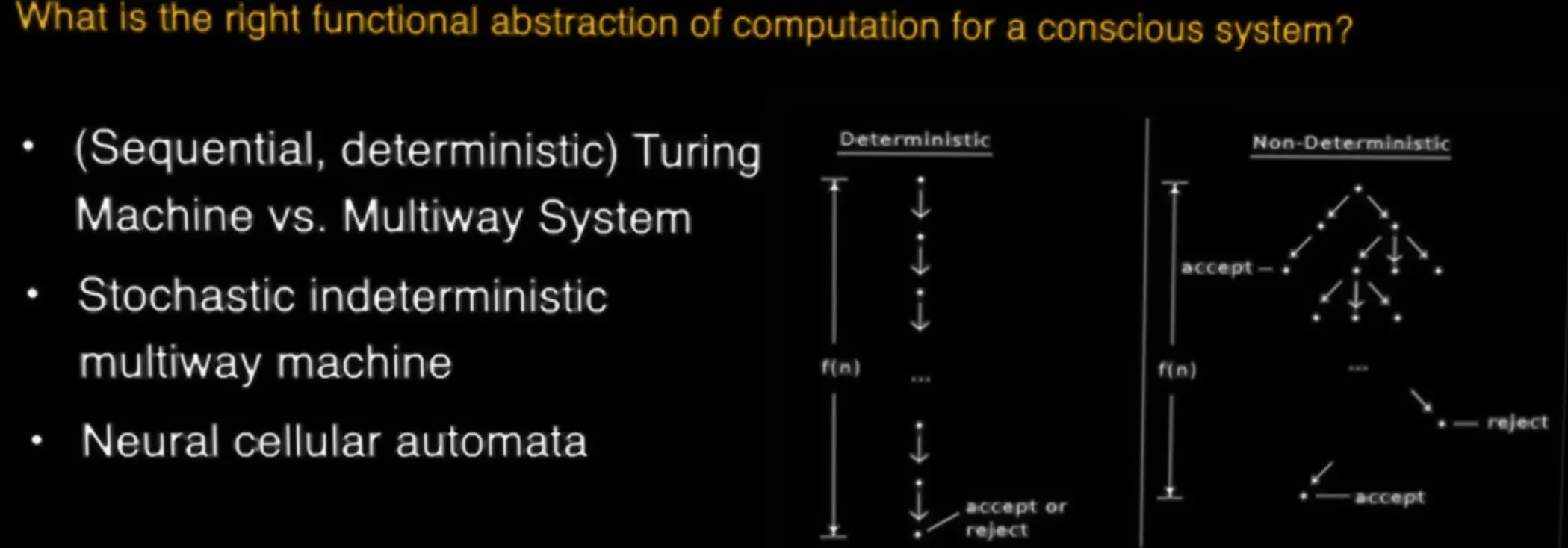

→ Mapping function: read values from the receptive field and map them against the unit's own internal state to produce a new internal state, some of which will be exposed so that other units can read it. Arbitrary automata + multiway = non-deterministic Turing machine.

Both neural networks and brains are in this class of system.
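The selection/mapping decomposition can be sketched on a small toroidal grid. In this hypothetical Python sketch, the selection function draws a random receptive field of `k` neighbours per unit and then keeps it fixed (in Bach's framing it would itself be learned; fixing it up front is closer to a traditional neural network), and the mapping function is an assumed leaky average of the unit's own state and what it reads. The mapping here is deterministic; a multiway version would return several possible successor states.

```python
import random
from typing import Dict, List, Tuple

Pos = Tuple[int, int]

def neighbourhood(pos: Pos, size: int, radius: int = 1) -> List[Pos]:
    """Positions within `radius` of `pos` on a torus: the spatial neighbourhood."""
    x, y = pos
    return [((x + dx) % size, (y + dy) % size)
            for dx in range(-radius, radius + 1)
            for dy in range(-radius, radius + 1)
            if (dx, dy) != (0, 0)]

def select_field(pos: Pos, size: int, k: int, rng: random.Random) -> List[Pos]:
    """Selection function (assumed): pick k neighbours at random, fixed thereafter.
    Together, the chosen fields define the topology of the whole system."""
    return rng.sample(neighbourhood(pos, size), k)

def mapping(own: float, inputs: List[float]) -> Tuple[float, float]:
    """Mapping function (assumed): leaky average of own state and inputs.
    Returns (new internal state, exposed value that other units may read)."""
    new_state = 0.5 * own + 0.5 * sum(inputs) / len(inputs)
    exposed = new_state  # here the whole state is exposed; it could be a subset
    return new_state, exposed

def run(size: int = 4, k: int = 3, steps: int = 10, seed: int = 0):
    rng = random.Random(seed)
    state: Dict[Pos, float] = {(x, y): rng.random() for x in range(size) for y in range(size)}
    exposed = dict(state)
    fields = {p: select_field(p, size, k, rng) for p in state}
    for _ in range(steps):
        new_state: Dict[Pos, float] = {}
        new_exposed: Dict[Pos, float] = {}
        for p in state:  # synchronous update: everyone reads last step's exposed values
            inputs = [exposed[q] for q in fields[p]]
            new_state[p], new_exposed[p] = mapping(state[p], inputs)
        state, exposed = new_state, new_exposed
    return state, fields

def spread(values: Dict[Pos, float]) -> float:
    return max(values.values()) - min(values.values())

initial, _ = run(steps=0)
final, fields = run(steps=10)
print(spread(final) <= spread(initial))  # True: averaging contracts the range (up to rounding)
```

Swapping `select_field` for something that adapts during the run, or `mapping` for a stochastic rule, moves this toy from the "traditional neural network" corner toward the brain-like corner of the same class.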

Traditional neural networks have a fixed selection function. It is defined by humans before training.

The selection function in the brain is the space in which the neurons are located w.r.t. each other + learning (synaptic changes, changes inside the neuron) + dendritic growth priors (an evolutionary prior that tells the neuron how to change its receptive field given the environment it’s in).

The mapping function for the brain is given by learning + evolved priors, and it’s non-deterministic.

Transclude of Joscha-Bach#^f0986c

Consciousness can also be seen as a form of government in the brain:

Transclude of government#^97e06e

Transclude of government#^855cfa

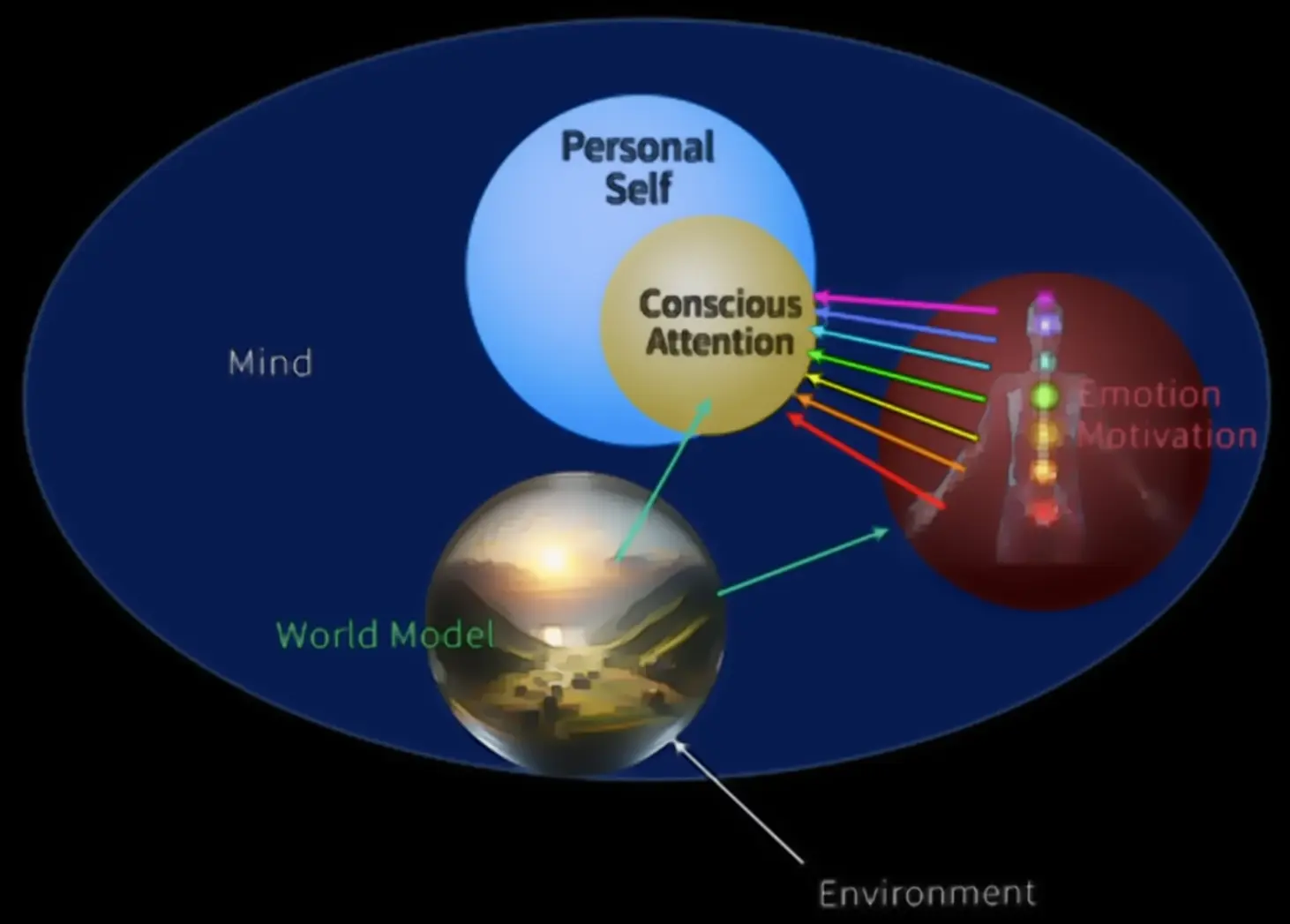

mind

The mind: A collection of components that make for a self-organizing software agent / a “spirit”.

Transclude of animism#^d34fc8

The interaction between the mind, personal self, motivation / emotions, consciousness and the world model.

This graphic is ofc. hilariously oversimplified, and if we want to achieve consciousness, we should not try to recreate it by hand-designing it. In the machine consciousness talk, some more complex interaction diagrams are shown in roughly the last third, iirc.

Most of the time, our mental states are in superpositions represented by populations of neurons.

Lots of parallel computations are happening, with different hypotheses branching out in different parts of the brain, sampling a space of functions, as opposed to the sequential, deterministic decision making you would have in a computer (see also NOW model).

→ In order to understand the message-passing architecture of consciousness, we need a non-deterministic Turing machine, like neural cellular automata.
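Non-determinism as parallel branching can be illustrated with the textbook trick of simulating a non-deterministic automaton by tracking the set of all live branches at once. This Python sketch uses a small non-deterministic finite automaton rather than a Turing machine or a neural cellular automaton, purely to keep it short; the states and the language it accepts (binary strings whose second-to-last symbol is `1`) are arbitrary illustrative choices.

```python
from typing import Dict, FrozenSet, List, Set, Tuple

# Transition relation: (state, symbol) -> set of possible successors.
# More than one successor means the machine branches. This automaton accepts
# binary strings whose second-to-last symbol is "1".
DELTA: Dict[Tuple[str, str], Set[str]] = {
    ("q0", "0"): {"q0"},
    ("q0", "1"): {"q0", "q1"},  # branch: "maybe this 1 is the second-to-last symbol"
    ("q1", "0"): {"q2"},
    ("q1", "1"): {"q2"},
}
ACCEPT = {"q2"}

def run_branches(word: str, start: str = "q0") -> List[FrozenSet[str]]:
    """Advance every live branch in parallel; return the branch set after each symbol."""
    live = {start}
    history = [frozenset(live)]
    for symbol in word:
        # branches without a matching transition simply die out
        live = {nxt for s in live for nxt in DELTA.get((s, symbol), set())}
        history.append(frozenset(live))
    return history

def accepts(word: str) -> bool:
    """Accept if at least one branch ends in an accepting state."""
    return bool(run_branches(word)[-1] & ACCEPT)

for w in ["10", "01", "110", "100"]:
    print(w, accepts(w))  # 10 True, 01 False, 110 True, 100 False
```

Each set in the history is a "superposition" of hypotheses about where the machine could be; a brain-like system would additionally weight and prune these branches instead of keeping all of them.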

“Ultimately it does not matter, because an AGI will be able to virtualize itself on any substrate of sufficient capacity.”

Suffering is a defect at the boundary between world model and self model: it is a defect of regulation, and thus can be fixed by more consciousness.

→ If you are smart enough / conscious enough, you could configure yourself according to what you care about; a smart enough AI would probably not decide to suffer (this is without editing source code, which is a different but maybe similar question … though isn’t consciousness literally editing the source code if it is self-organizing?).

In the province of the mind, what one believes to be true is true or becomes true, within certain limits to be found experientially and experimentally. These limits are further beliefs to be transcended. In the mind there are no limits. ― John C. Lilly, M.D.

Bach’s developmental 6-step hypothesis of infant mental development.

1. Consciousness is a prerequisite: it forms before anything else in the mind and starts out with an unstructured substrate.

2. Creation of dimensions of difference (latent space of embeddings).

3. Separation of world model and mental stage (a hypothetical, manipulable “idea sphere”; mixing the two → hallucination).

4. Creation of objects and categories.

5. Invariance against changes in lighting and temporal development.

6. Modeling agency, creation of the personal self, association of consciousness with personal identity.

World model vs. mental stage: “res cogitans vs. res extensa”, two quite different concepts.

Cognitive developmental process:

Light & dark, plane, 3D space, solids & liquids, organic shapes, invariance against lighting, objects, plants, animals, personal self, insertion into world → discovers that its purpose is to navigate the interaction between an organism (agent) and its environment → simulation of interests of the organism → switch to first person perspective.

Childhood amnesia: People don’t know what their life was like before they were first-person. 1

We also have states where we are not personalized: Sleeping / just waking up, meditating, drugs, …

Several similar / converging theories on consciousness:

Global workspace theory (Baars, Dehaene)

Cartesian theatre (Dennett, Drescher)

Attention schema (Graziano)

Being No-One (Metzinger)

Consciousness prior (Bengio)

Buddhist perspectives, …

What if the hypothesis is wrong?

The following things have to be true for the hypothesis to be correct:

Is computational functionalism correct?

Is consciousness software (= a causal pattern)?

Is consciousness an aspect of a different mechanism or is consciousness the invariant pattern?

Is consciousness an intercellular phenomenon?

Is consciousness simple?

Different “modes” of consciousness.

When waking up, we are not immediately “fully there”.

Sleeping.

Stress.

Meditation.

Our consciousness is limited by our brain, e.g. in the (dis)ability of just seeing one perspective at a time.

Transclude of alignment#^27a52b

Transclude of scaling#^21a269

References

Joscha Bach

consciousness

“animism”

“We Are All Software” - Joscha Bach (Cyber Animism AGI24 Conference)

Custom citation for 20min talk:

@misc{bach_consciousness_2023,
author = {Joscha Bach},
title = {Consciousness as a coherence-inducing operator},
howpublished = {Models of Consciousness Conference 2023},
month = {October},
year = {2023},
url = {https://youtu.be/qoHCQ1ozswA},
note = {Accessed: 2024-08-08}
}

Footnotes

1. I kinda remember that my very first memory was at daycare (Kinderkrippe): waking up from some group nap, some kids outside driving a toy tractor, a bright, sunny day. I have recalled this memory quite a few times already, and it is still quite vivid. But it’s more like two discontinuous still images. Maybe that’s the day I became conscious?