Paper of origin: Playing Atari with Deep Reinforcement Learning

environment (may be stochastic)

… set of legal game actions

observed image (vector of raw pixel values representing the screen)

reward (change in game score)

sequence of actions and rewards at time

… Approximate optimal action-value function with DQN

Flow: An action is sampled at each time step , passed to the environment, which modifies the internal state and game score.

The internal state is not observed by the agent; instead it observes an image of the current screen. Simmilar images can mean very different things however (environment states are perceptually aliased), e.g. when the position of a projectile is the same, but the speed different. So it is impossible to fully understand the current situation from only the current screen in the atari environmen → learn sequences of actions and observations → terminate in finite number of time-steps → finite MDP, each sequence is a distinct state → use each sequence as sate representation at time .

Agent interact with env to max future rewards.

Standard future discounted return at time as ,

where is the terminal time-step. Optimal action-value function: (maximum possible return when seeing some sequence , taking action , chosen by policy ).

Note

The game score may depend on the whole prior sequence of actions and observations; feedback about an action may only be received after many thousands of time-steps have elapsed.

Basic idea of the Bellman Equation:

If we know the optimal value of the sequence at the next time-step for all possible actions , then the optimal strategy is to choose the action, which maximizes the expected value of :

The idea behind Q-learning is to estimate this action-value function, by using the Bellman equation as an iterative update, which approximates the optimal action-value function as we approach infinite exploration steps:

I still don’t get this basic approach of how to do this with a Q-Table but nvm; Point is, it is obviously impractical and we have no generalization, as we estimate a value for each sequence separately!

This is why we use a NN to approximate the Q-function, a “Q-network” .

Train it by minimizing the sequence of loss functions , which changes at each iteration :

shorthand

behaviour distribution (probability distribution over sequences and actions)

Target for iteration :

The parameters from the previous iteration are fixed when optimising the loss function .

Targets depend on the network weights (in supervised learning, they are fixed before learning).

Dfferentiating the loss function with respect to the weights we arrive at the following gradient:

We obviously can’t sample the entire state and action space, esp. with they are large or continous, so we approximate it, by replacing the expectations by single samples form the behaviour distribution and the environment :

(also we update the weights after every time step )

This algorithm is model-free. The emulator / environment is necessary, solves it directly with samples from the emulator, without constructing an estimate of .

It is also off-policy: Without a policy greedily taking actions to max expected future return: , but it explores (“behaviour distribution for adequate exploration”): epsilon greedy.

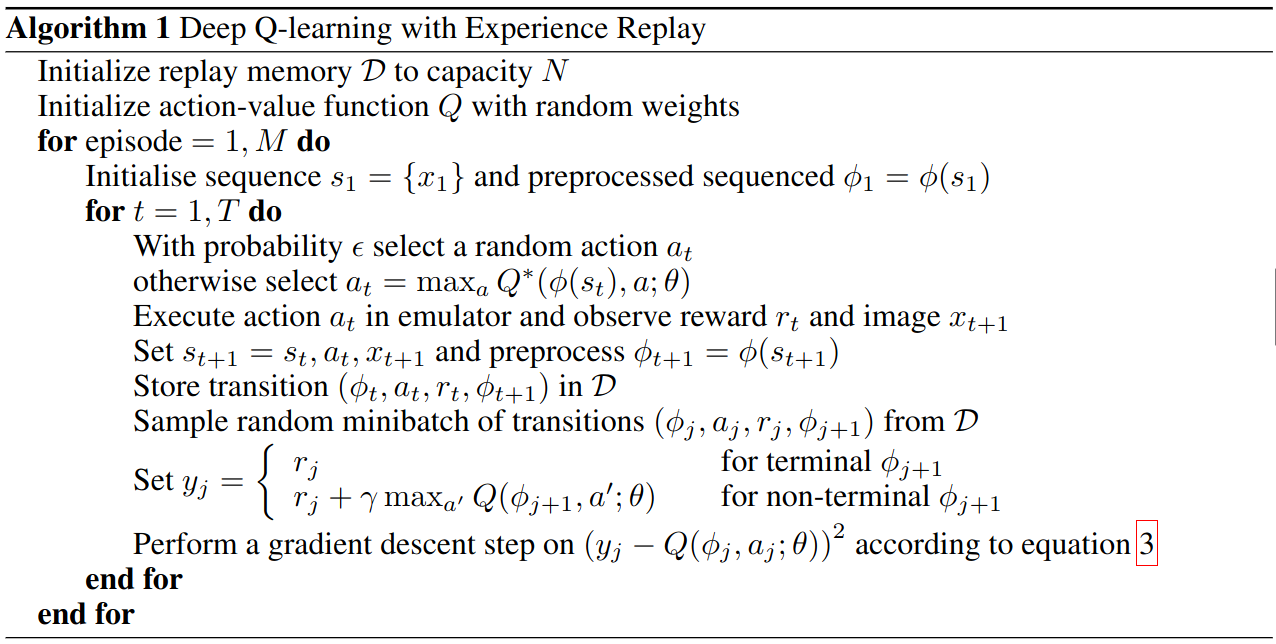

Uses experience replay.

Preprocessing applied:

Grey scale, downscaling, cropping relevant area (to make square for some optimizes conv)

Input to DQN:

4 frames stacked together (Not relevant for turn-based games)

They also “skipped” a frame every 3-4 frames, where just the last action was used instead of making the agent compute a new onee (Again doesn’t make sense for turn-based)

Learning:

2 networks, policy and target.

So the reward for any step is the difference between the Q-function of this and the next step. Q-learning is thus a form of temporal difference learning. We have a fixed set of predictions, which we update every steps (for stability reasons) with the weights of a second net which learns from new experiences.

Link to original

In short: The model improves by going through episodes (irl randomly sampled experiences from episodes) and comparing its reward estimates with the actual rewards

was temed a Deep Q Network in the Atari paper which kickstarted Deepmind in 2013.

Output:

value for each action (as opposed to encoding as input and having forward passes)

TODO / DOING: Implementations on different envs:

Idea: Try out more sophisticated sampling methods, might be especially relevant for 2048? Cause when u are in critical situaitons which are way rarer ofc.

Idea: Compare soft vs hard paramteter updates

Should I truncate if it makes an “invalid” / useless (does not change state) move?

References

There is a long thread (above and below this message)